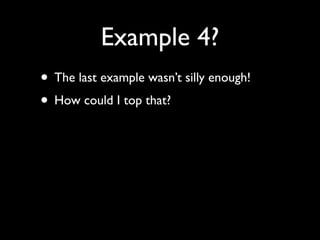

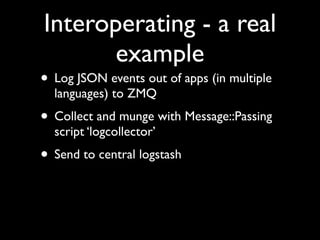

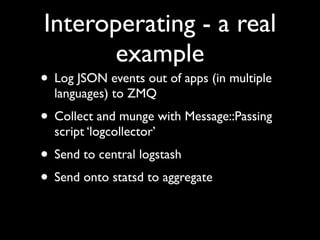

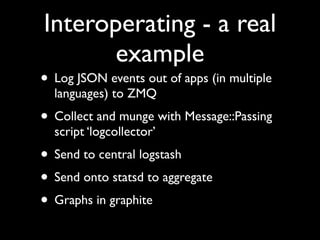

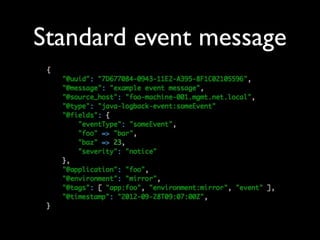

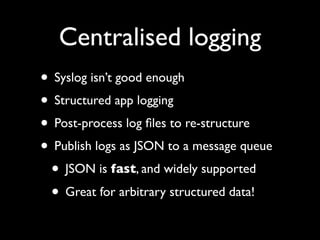

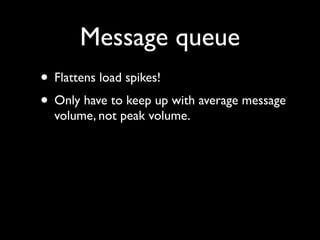

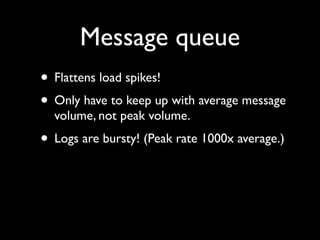

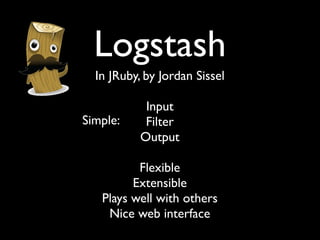

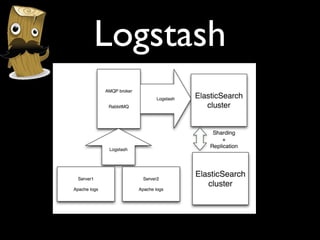

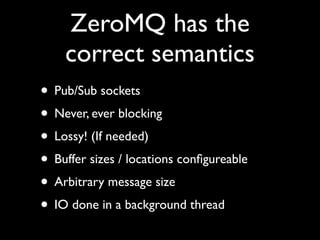

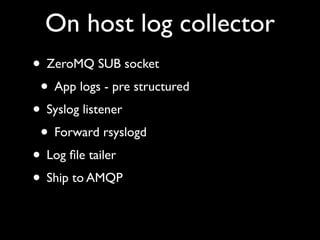

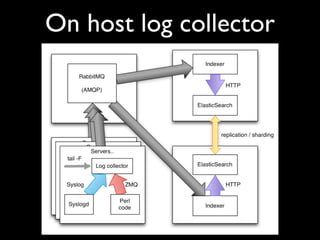

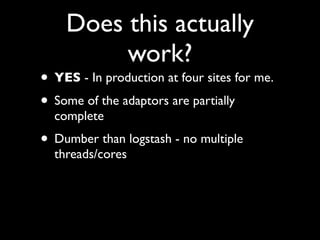

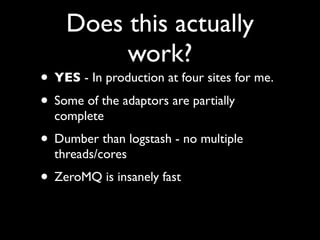

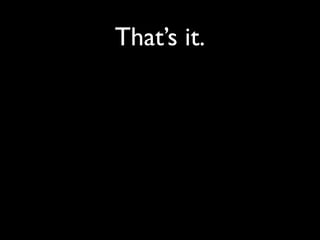

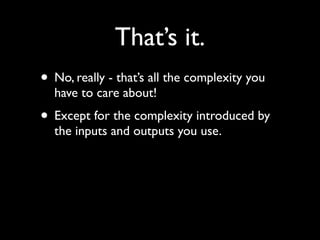

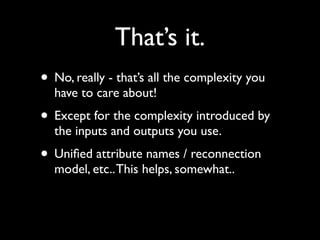

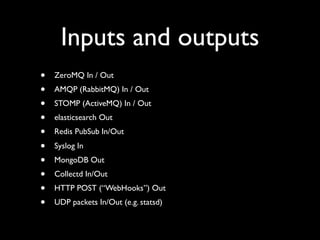

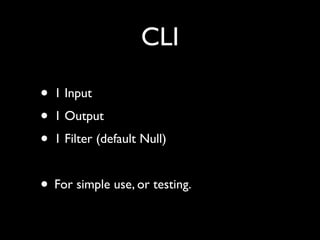

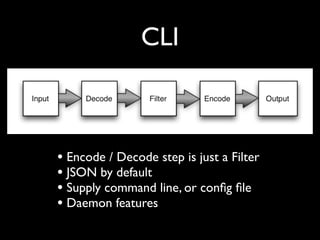

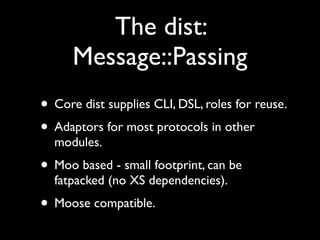

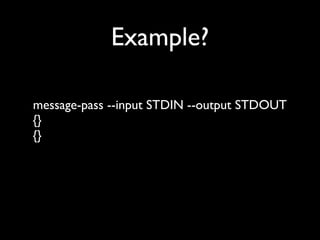

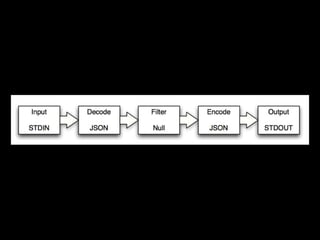

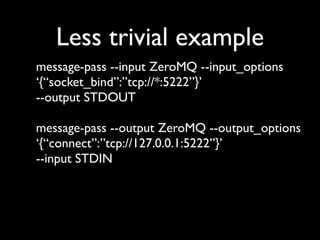

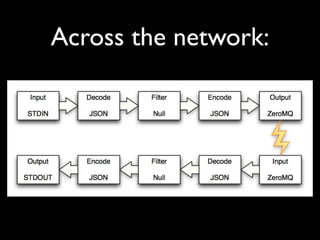

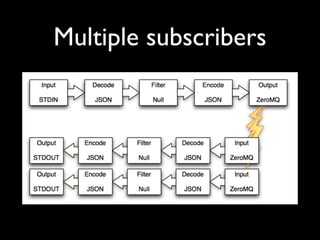

This document summarizes Tomas Doran's talk on Message::Passing, his new Perl library for messaging, interoperability, and log aggregation. The library provides a framework for passing messages between different messaging systems and protocols. Its model is simple: inputs produce events as hashes, outputs consume events, and filters do both. The core distribution includes roles for common inputs, outputs, and filters, while adaptors in separate modules provide connectivity to ZeroMQ, AMQP, STOMP, Elasticsearch, Redis PubSub, Syslog, MongoDB, and others. The goal is to simplify building complex message processing chains across different systems, and the slides include examples of passing messages from one protocol to another.
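The input, filter, and output model described above can be illustrated with a small conceptual sketch. It is written in Python purely for illustration and is not the Message::Passing API: the class names `LineInput`, `Filter`, and `CollectingOutput`, the `consume` and `feed` methods, and the `output_to` parameter are all hypothetical names chosen for this example.

```python
import json
from typing import Callable, Dict, List, Optional

# An event is a plain hash (dict), as in the model described in the talk summary.
Event = Dict[str, object]


class CollectingOutput:
    """Output: consumes events. Here it stores each event as a JSON line."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def consume(self, event: Event) -> None:
        self.lines.append(json.dumps(event, sort_keys=True))


class Filter:
    """Filter: consumes an event, transforms it, and produces it downstream."""

    def __init__(self, transform: Callable[[Event], Optional[Event]], output_to) -> None:
        self.transform = transform
        self.output_to = output_to

    def consume(self, event: Event) -> None:
        result = self.transform(event)
        if result is not None:  # returning None drops the event
            self.output_to.consume(result)


class LineInput:
    """Input: turns raw text lines into events and hands them downstream."""

    def __init__(self, output_to) -> None:
        self.output_to = output_to

    def feed(self, line: str) -> None:
        self.output_to.consume({"message": line.rstrip("\n")})


def tag_source(event: Event) -> Event:
    # Hypothetical transformation: annotate each event with where it came from.
    return {**event, "source": "demo"}


# Wire the chain back to front: input -> filter -> output.
out = CollectingOutput()
chain = LineInput(Filter(tag_source, out))
chain.feed("hello\n")
print(out.lines[0])  # {"message": "hello", "source": "demo"}
```

Because every component only needs to accept an event and pass it on, swapping the output for one that writes to a different system does not affect the input or the filter, which is the property the chained examples in the talk rely on.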

![Apache [27/Jun/2012:23:57:03 +0000]](https://image.slidesharecdn.com/mp-lpw-121129190430-phpapp02/85/Message-Passing-lpw-2012-26-320.jpg)

![ElasticSearch [2012-06-26 02:08:26,879]](https://image.slidesharecdn.com/mp-lpw-121129190430-phpapp02/85/Message-Passing-lpw-2012-28-320.jpg)

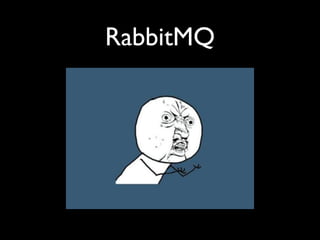

![Jenga](https://image.slidesharecdn.com/mp-lpw-121129190430-phpapp02/85/Message-Passing-lpw-2012-114-320.jpg)

Jenga:

`message-pass --input STDIN --output STOMP --output_options '{"destination":"/queue/foo","hostname":"localhost", "port":"6163", "username":"guest", "password":"guest"}'`

`message-pass --input STOMP --output Redis --input_options '{"destination":"/queue/foo", "hostname":"localhost","port":"6163","username":"guest","password":"guest"}' --output_options '{"topic":"foo","hostname":"127.0.0.1","port":"6379"}'`

`message-pass --input Redis --output AMQP --input_options '{"topics": ["foo"],"hostname":"127.0.0.1","port":"6379"}' --output_options '{"hostname":"127.0.0.1","username":"guest","password":"guest", "exchange_name":"foo"}'`

`message-pass --input AMQP --output STDOUT --input_options '{"hostname":"127.0.0.1", "username":"guest", "password":"guest", "exchange_name":"foo","queue_name":"foo"}'`
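The `message-pass` commands on the Jenga slide pass adaptor settings as hand-written JSON inside shell quotes, which is easy to get wrong. As a hedged sketch, the Python helper below builds such a command line from dictionaries; the function name `message_pass_command` is hypothetical, and it uses only the flags that appear on the slide (`--input`, `--output`, `--input_options`, `--output_options`). It constructs the command string without running it.

```python
import json
import shlex
from typing import Optional


def message_pass_command(
    input_name: str,
    output_name: str,
    input_options: Optional[dict] = None,
    output_options: Optional[dict] = None,
) -> str:
    """Build a shell-safe message-pass command line (hypothetical helper)."""
    args = ["message-pass", "--input", input_name, "--output", output_name]
    if input_options is not None:
        args += ["--input_options", json.dumps(input_options)]
    if output_options is not None:
        args += ["--output_options", json.dumps(output_options)]
    # shlex.join (Python 3.8+) quotes each argument for a POSIX shell.
    return shlex.join(args)


# First hop of the chain on the slide: STDIN to a STOMP queue.
stomp_options = {
    "destination": "/queue/foo",
    "hostname": "localhost",
    "port": "6163",
    "username": "guest",
    "password": "guest",
}
cmd = message_pass_command("STDIN", "STOMP", output_options=stomp_options)
print(cmd)
```

Serializing the options with `json.dumps` and quoting with `shlex.join` avoids the unbalanced quotes and stray line breaks that creep in when these long option strings are typed by hand.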