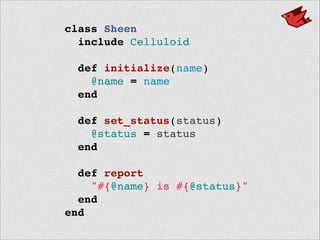

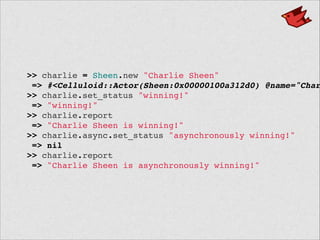

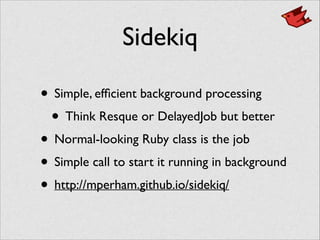

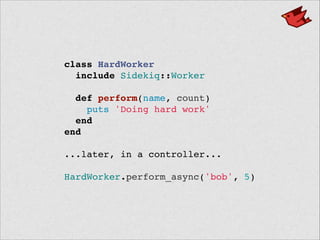

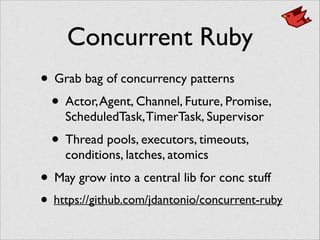

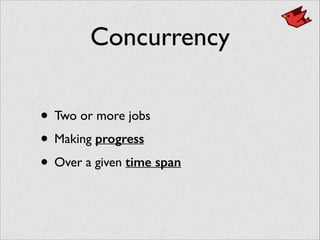

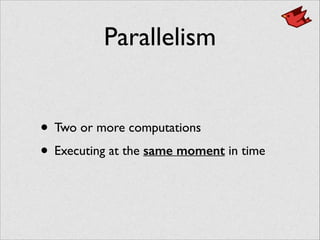

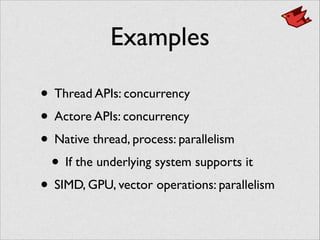

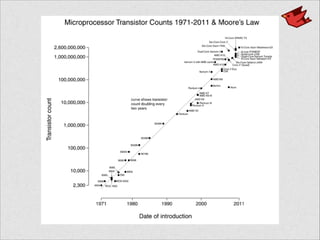

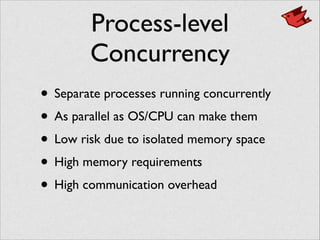

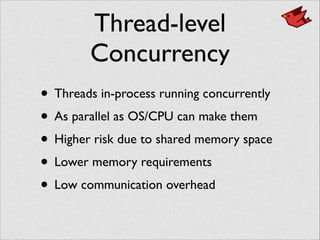

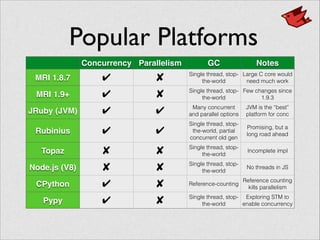

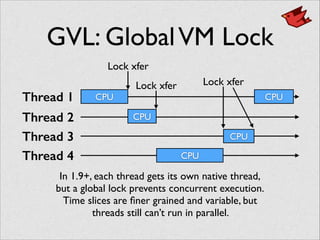

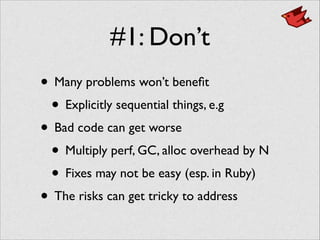

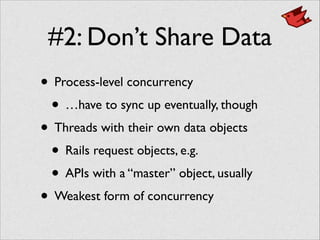

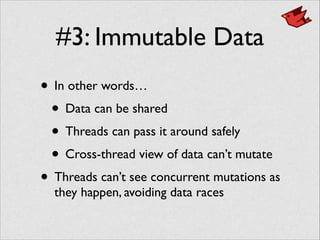

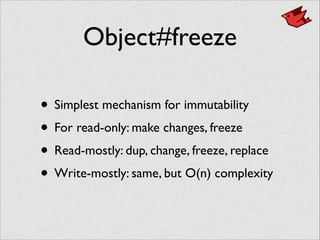

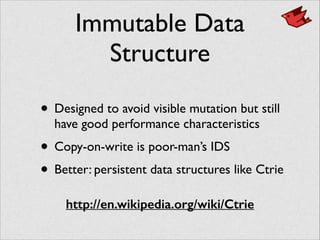

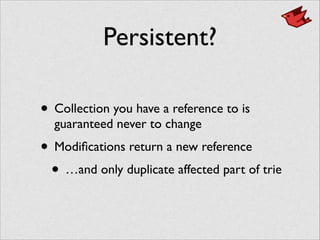

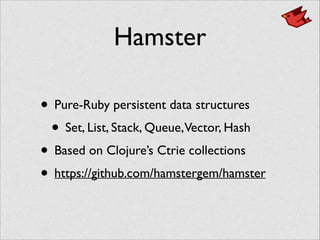

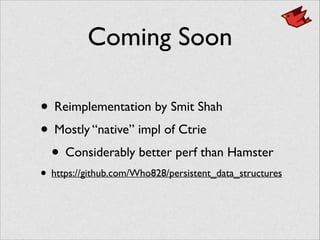

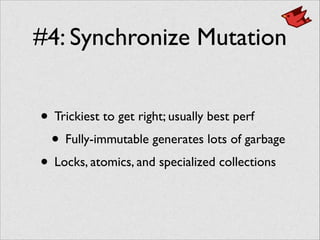

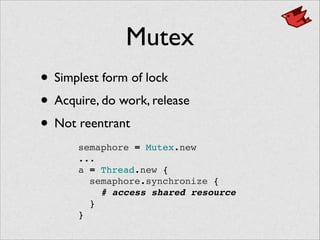

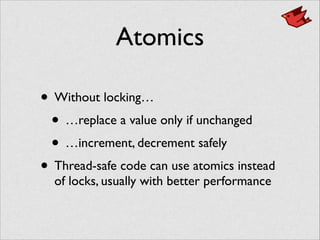

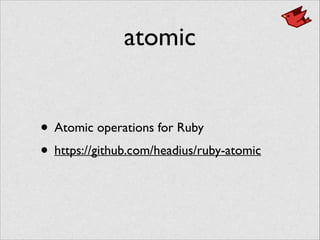

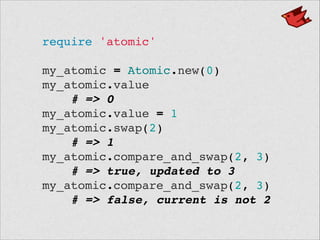

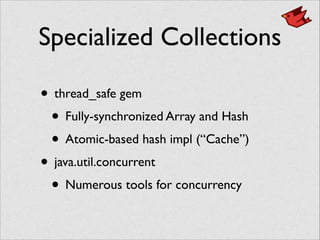

The document discusses bringing concurrency to Ruby. It begins by defining concurrency and parallelism, noting that both are needed, but that a platform can exploit parallelism only when a job can be split into concurrent tasks. It then reviews concurrency and parallelism in popular Ruby platforms: MRI, JRuby, and Rubinius. The document outlines four rules for concurrency and discusses techniques such as immutable data, and, for mutable data, locking, atomics, and specialized collections. Finally, it highlights libraries that provide high-level concurrency abstractions, such as Celluloid for actors and Sidekiq for background jobs.
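The locking technique mentioned above can be illustrated with Ruby's core `Mutex`. This is a minimal sketch, not code from the talk; `SafeCounter` and `run_counter` are hypothetical names. The point it shows is that `@count += 1` is a read-modify-write sequence that is not guaranteed to be atomic across threads, so every access to the shared counter goes through `Mutex#synchronize`.

```ruby
# Hypothetical example: a counter shared by several threads, guarded by a lock.
class SafeCounter
  def initialize
    @count = 0
    @lock = Mutex.new
  end

  # The read-modify-write runs under the lock, so no increment is lost.
  def increment
    @lock.synchronize { @count += 1 }
  end

  # Reads also take the lock so they see a consistent value.
  def value
    @lock.synchronize { @count }
  end
end

# Starts `threads` threads that each increment the counter `increments` times,
# waits for all of them, and returns the final count.
def run_counter(threads: 4, increments: 10_000)
  counter = SafeCounter.new
  workers = Array.new(threads) do
    Thread.new { increments.times { counter.increment } }
  end
  workers.each(&:join)
  counter.value
end

puts run_counter # prints 40000
```

Because the lock serializes only the increment itself, threads still run concurrently everywhere else. The cost is contention on a single lock, which is one reason the talk also points to atomics and specialized collections.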

![Hamster immutable hash example](https://image.slidesharecdn.com/rubyconfindia2014-concurrency-140410075804-phpapp01/85/Bringing-Concurrency-to-Ruby-RubyConf-India-2014-55-320.jpg)

```ruby
require "hamster"

person = Hamster.hash(
  :name => "Simon",
  :gender => :male)
# => {:name => "Simon", :gender => :male}

person[:name]
# => "Simon"
person.get(:gender)
# => :male

# put returns a new hash; the original is left unchanged
friend = person.put(:name, "James")
# => {:name => "James", :gender => :male}
person
# => {:name => "Simon", :gender => :male}
friend[:name]
# => "James"
person[:name]
# => "Simon"
```
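The Hamster example relies on a third-party persistent hash. As a rough, stdlib-only point of comparison (a swapped-in technique, not what the slide uses), the same "update returns a new value, the old one never changes" behaviour can be approximated with a frozen `Hash` and `Hash#merge`. Two assumptions to keep in mind: `merge` copies the whole table, whereas Hamster shares structure between versions, and `freeze` is shallow, so the values inside the hash are not frozen by it. `FrozenError` requires Ruby 2.5 or later.

```ruby
# Stdlib-only approximation of an immutable hash (not the Hamster API).
person = { name: "Simon", gender: :male }.freeze

# merge builds a new Hash; person itself is untouched.
friend = person.merge(name: "James").freeze

p friend[:name]  # prints "James"
p person[:name]  # prints "Simon"
p person.frozen? # prints true

begin
  person[:name] = "James" # any attempt to mutate a frozen Hash raises
rescue FrozenError => e
  puts "blocked: #{e.class}" # prints blocked: FrozenError
end
```

Sharing such a frozen value between threads is safe without a lock, because no thread can change it. The copying cost is what libraries like Hamster are designed to avoid.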

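![SizedQueue producer example](https://image.slidesharecdn.com/rubyconfindia2014-concurrency-140410075804-phpapp01/85/Bringing-Concurrency-to-Ruby-RubyConf-India-2014-71-320.jpg)

```ruby
# Number of worker threads: third command-line argument, defaulting to 1.
thread_count = (ARGV[2] || 1).to_i
# Bounded queue: the producer blocks once thread_count * 4 batches are waiting.
queue = SizedQueue.new(thread_count * 4)

# word_file is an IO opened earlier in the program (not shown on this slide).
word_file.each_line.each_slice(50) do |words|
  queue << words # enqueue batches of 50 lines
end
queue << nil # terminating condition
```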
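The slide shows only the producer side of a bounded producer/consumer pipeline. Below is a self-contained sketch of both sides, under stated assumptions: the input is an in-memory array of lines standing in for the slide's `word_file`, the hypothetical `count_words` method simply counts the lines each worker receives, and results are gathered through a core `Queue`. The slide pushes a single `nil`. With several consumers each one needs to see a terminator, so this sketch pushes one per worker. The talk's consumer code, which is not shown here, may handle this differently, for example by re-enqueueing the `nil`.

```ruby
# Hypothetical end-to-end version of the SizedQueue pattern from the slide.
def count_words(lines, thread_count: 2, slice_size: 50)
  queue = SizedQueue.new(thread_count * 4) # bounded: producer blocks when full
  totals = Queue.new                       # thread-safe channel for results

  workers = Array.new(thread_count) do
    Thread.new do
      count = 0
      # pop blocks until a batch arrives; a nil batch ends this worker.
      while (batch = queue.pop)
        count += batch.size
      end
      totals << count
    end
  end

  # Producer: enqueue batches, then one terminator per worker.
  lines.each_slice(slice_size) { |batch| queue << batch }
  thread_count.times { queue << nil }

  workers.each(&:join)
  Array.new(thread_count) { totals.pop }.sum
end

words = Array.new(1_000) { |i| "word#{i}\n" }
puts count_words(words, thread_count: 4) # prints 1000
```

The bounded `SizedQueue` provides back-pressure: if the workers fall behind, the producer blocks instead of reading the whole file into memory.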