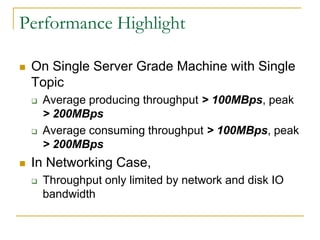

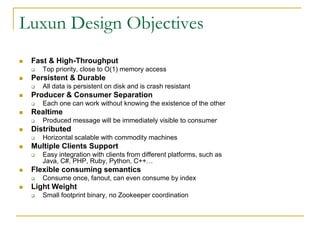

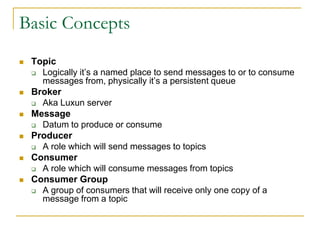

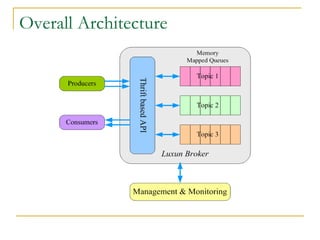

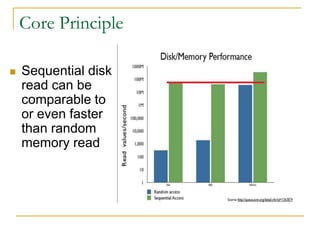

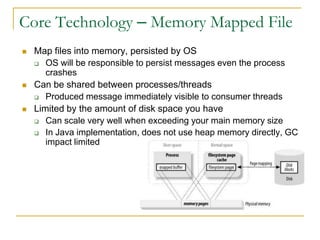

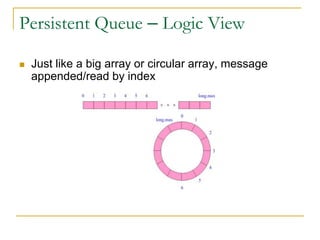

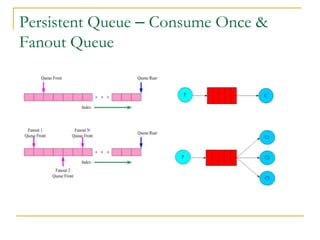

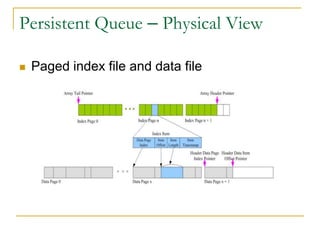

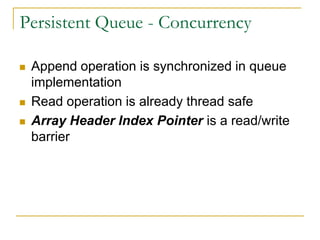

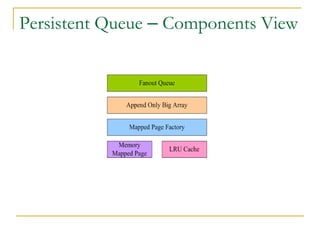

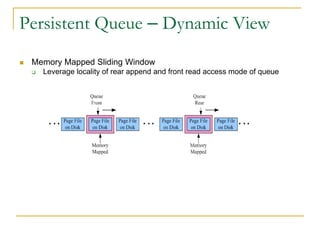

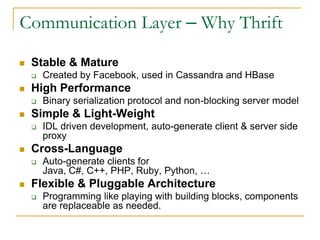

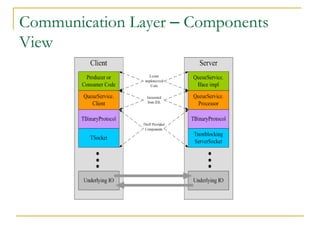

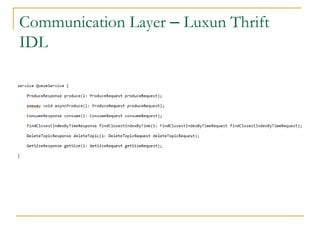

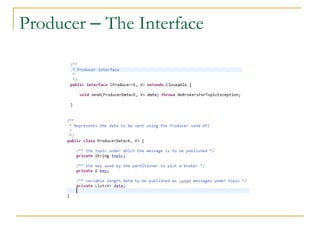

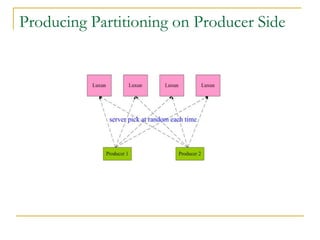

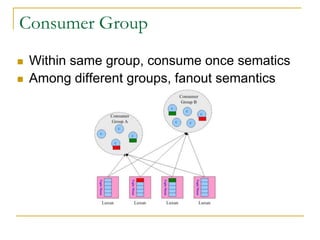

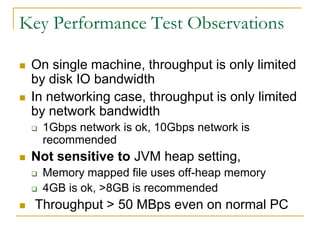

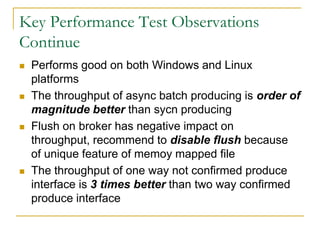

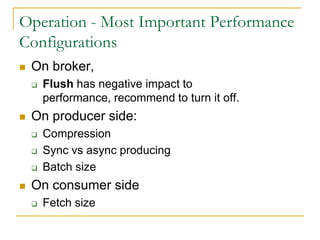

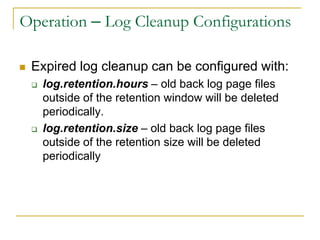

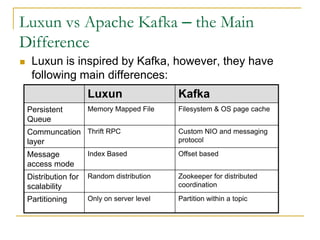

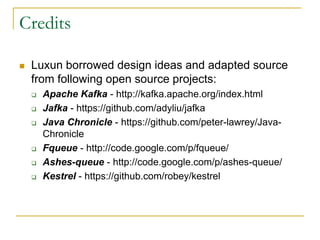

Luxun is a high-performance, persistent messaging system designed for big data collection and analytics, with throughput above 200 MBps under optimal conditions. Messages are handled quickly and durably, become visible to consumers in real time, and can be produced and consumed from a variety of programming languages. The design is inspired by Apache Kafka, but Luxun uses a memory-mapped file architecture and its own communication layer, prioritizing performance and flexibility.
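
To make the memory-mapped file idea concrete, here is a minimal sketch of an append-only message log backed by a memory-mapped file. It is not Luxun's actual code or API: the `MappedLog` class, its method names, the length-prefixed record layout, and the fixed capacity are all assumptions chosen for illustration. It only shows why writes can approach memory speed while remaining file-backed, and why a consumer can read a message as soon as it has been appended.

```python
import mmap
import os
import struct
import tempfile

# Assumed record layout: 4-byte big-endian length prefix, then the payload.
HEADER = struct.Struct(">I")


class MappedLog:
    """Hypothetical append-only log stored in a memory-mapped file."""

    def __init__(self, path, capacity=1 << 20):
        # Pre-size the file so the whole region can be mapped at once.
        with open(path, "a+b") as f:
            f.truncate(capacity)
        self._file = open(path, "r+b")
        self._map = mmap.mmap(self._file.fileno(), capacity)
        self._capacity = capacity
        # Next write position. A real system would persist and recover this;
        # the sketch always starts from 0.
        self._tail = 0

    def append(self, payload):
        """Write one message; return the offset a consumer can read it from."""
        end = self._tail + HEADER.size + len(payload)
        if end > self._capacity:
            raise ValueError("log is full")
        offset = self._tail
        # Writes go to mapped memory; the OS pages them out to the file.
        self._map[offset:offset + HEADER.size] = HEADER.pack(len(payload))
        self._map[offset + HEADER.size:end] = payload
        self._tail = end
        return offset

    def read(self, offset):
        """Return (payload, offset of the next message)."""
        (length,) = HEADER.unpack_from(self._map, offset)
        start = offset + HEADER.size
        return bytes(self._map[start:start + length]), start + length

    def close(self):
        self._map.flush()  # force dirty pages to disk before closing
        self._map.close()
        self._file.close()


def demo():
    """Append two messages, then read them back in order."""
    path = os.path.join(tempfile.mkdtemp(), "luxun-sketch.log")
    log = MappedLog(path, capacity=4096)
    first = log.append(b"hello")
    log.append(b"world")
    msg1, next_offset = log.read(first)
    msg2, _ = log.read(next_offset)
    log.close()
    return [msg1, msg2]
```

In this sketch the consumer tracks its own read offset, much as a Kafka-style consumer does, and a message is readable immediately after `append` returns because producer and consumer share the same mapped pages. Segment rolling, crash recovery, and concurrent access are deliberately left out.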