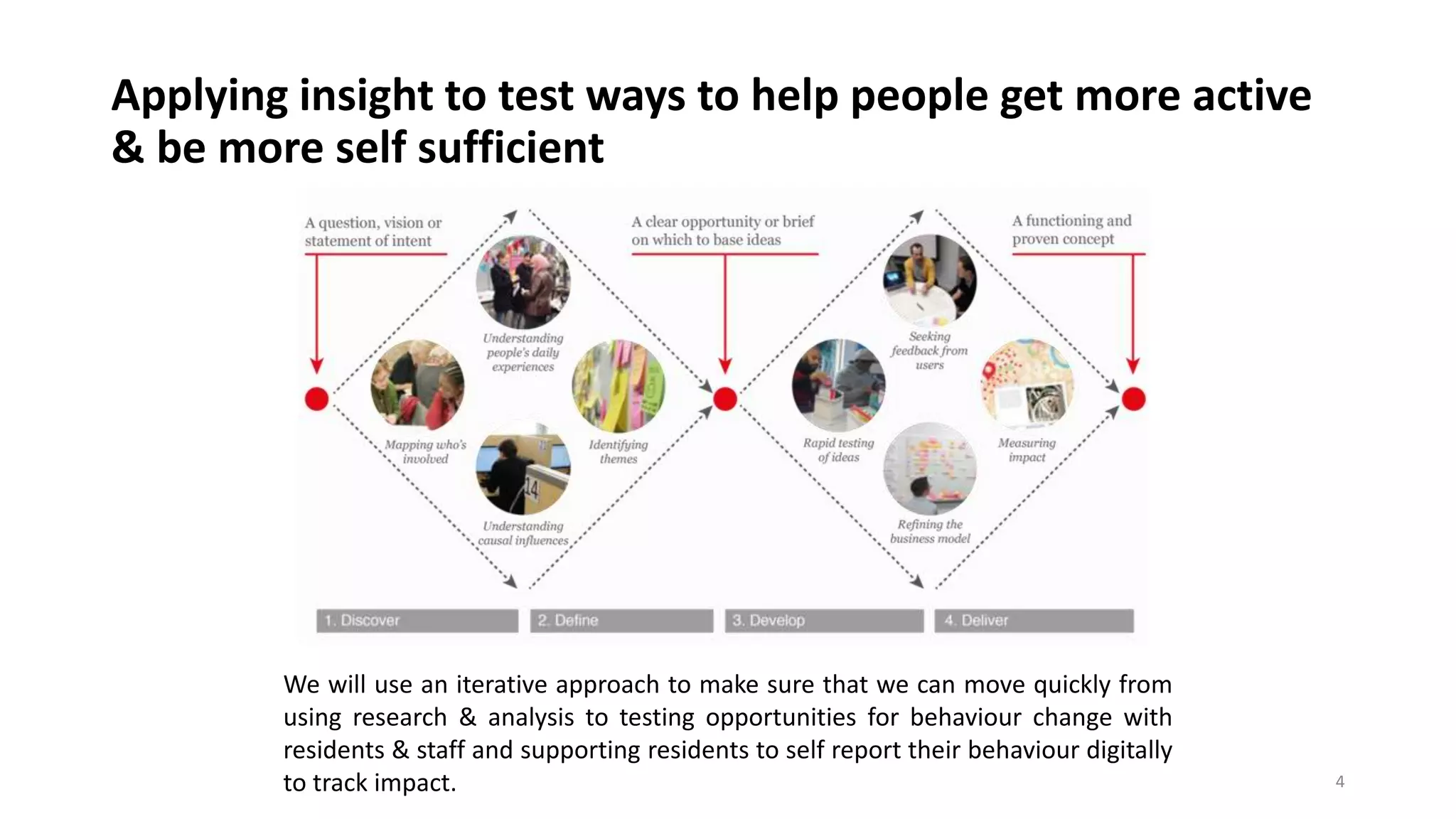

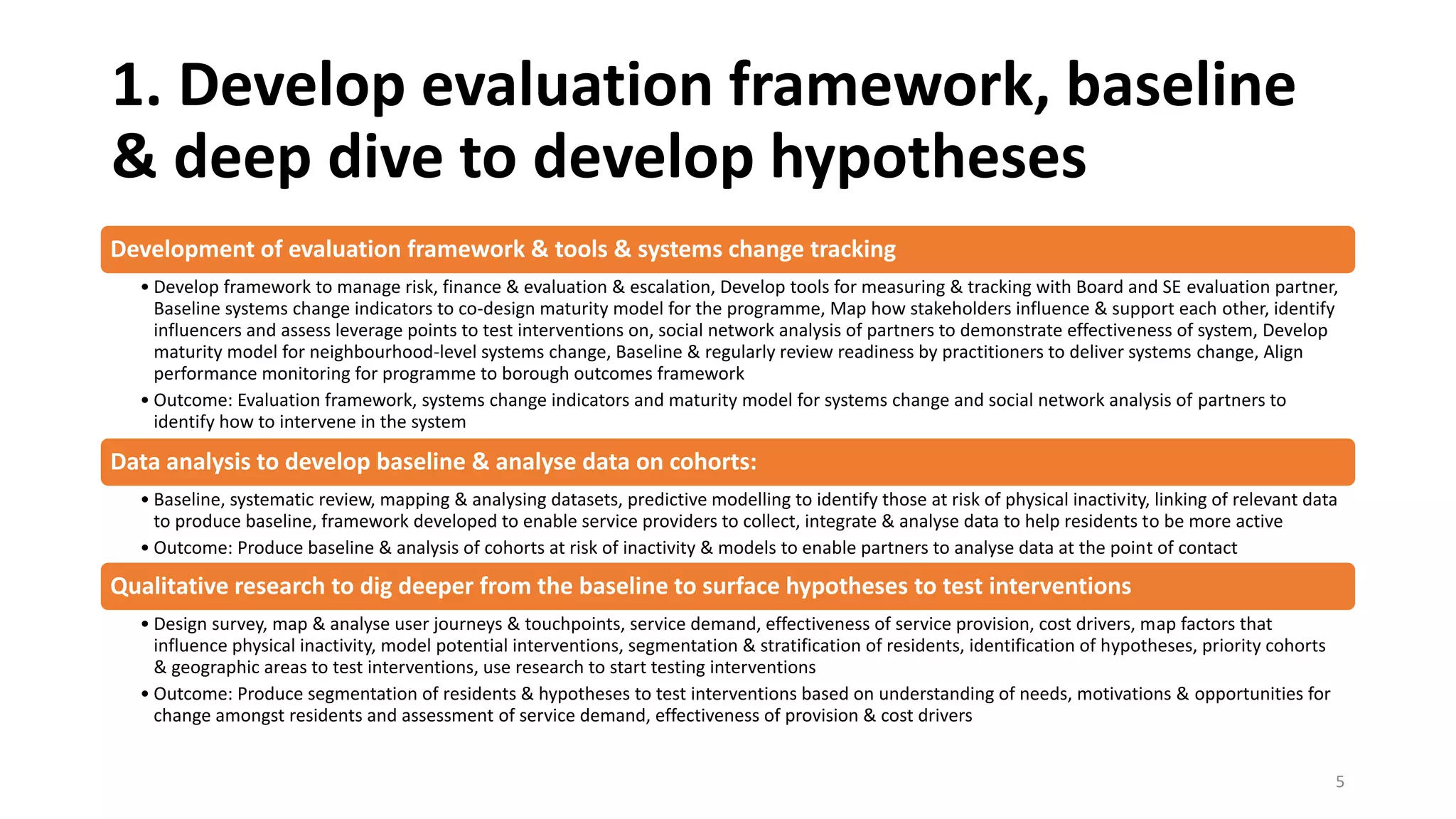

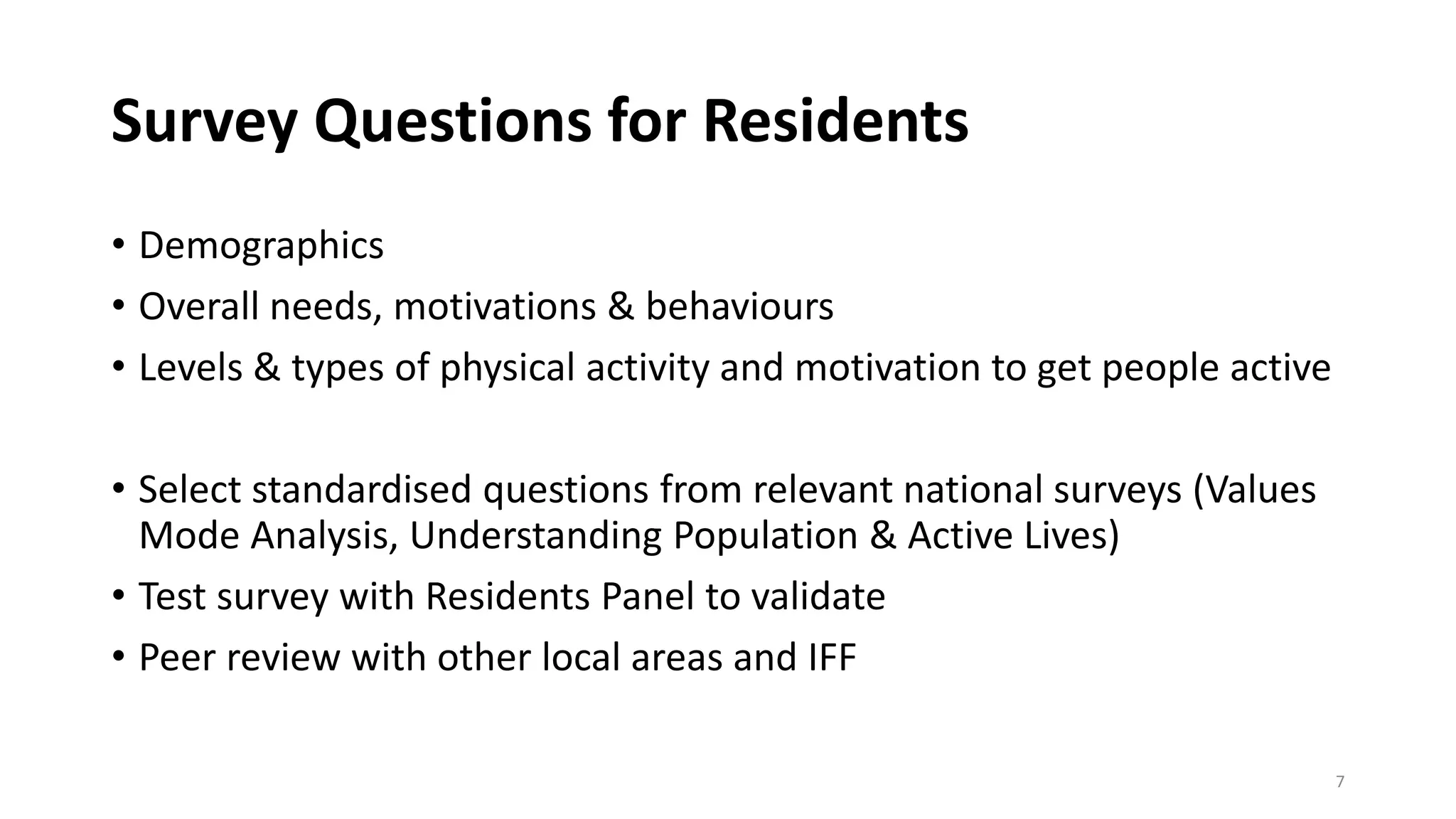

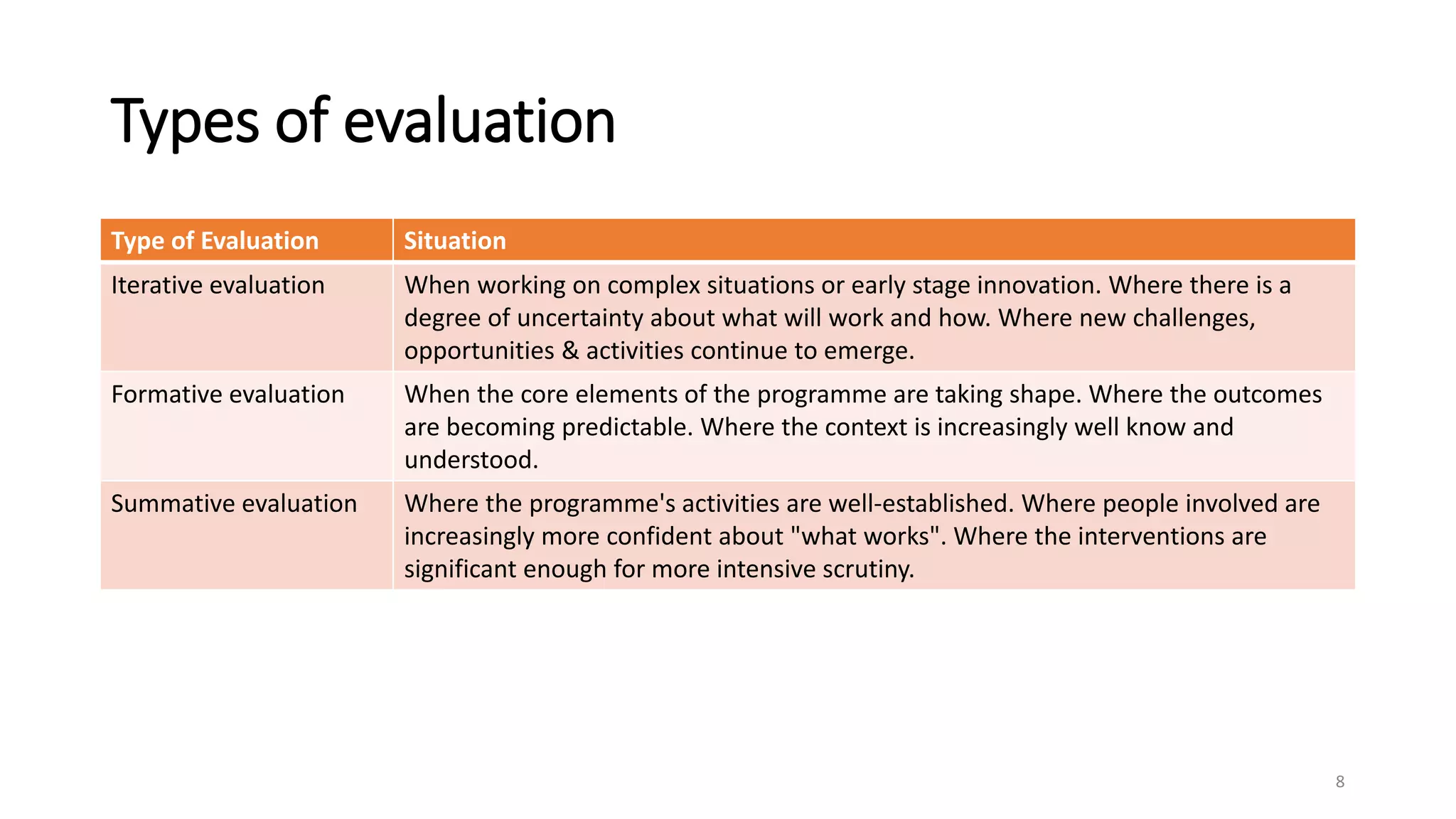

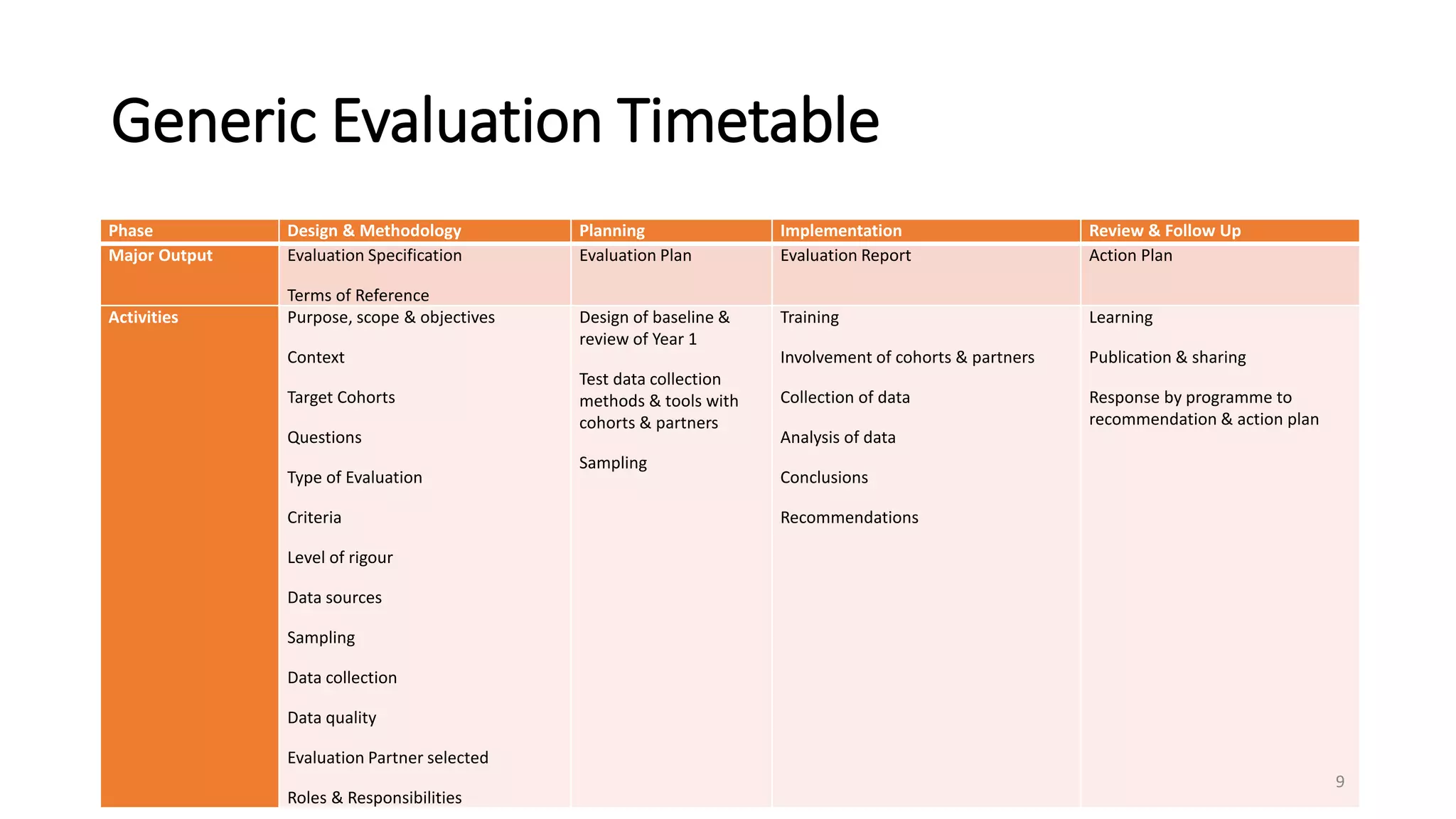

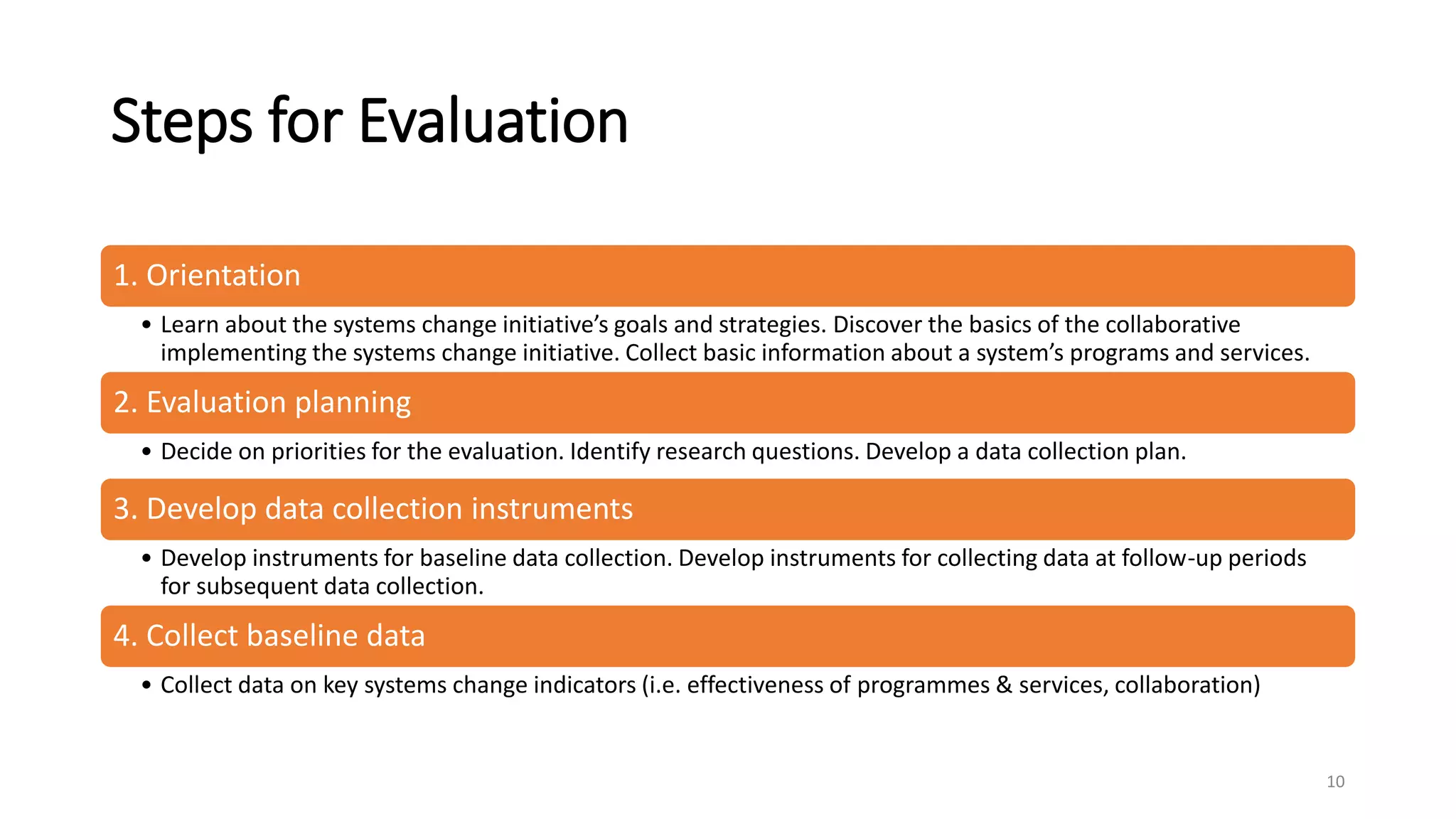

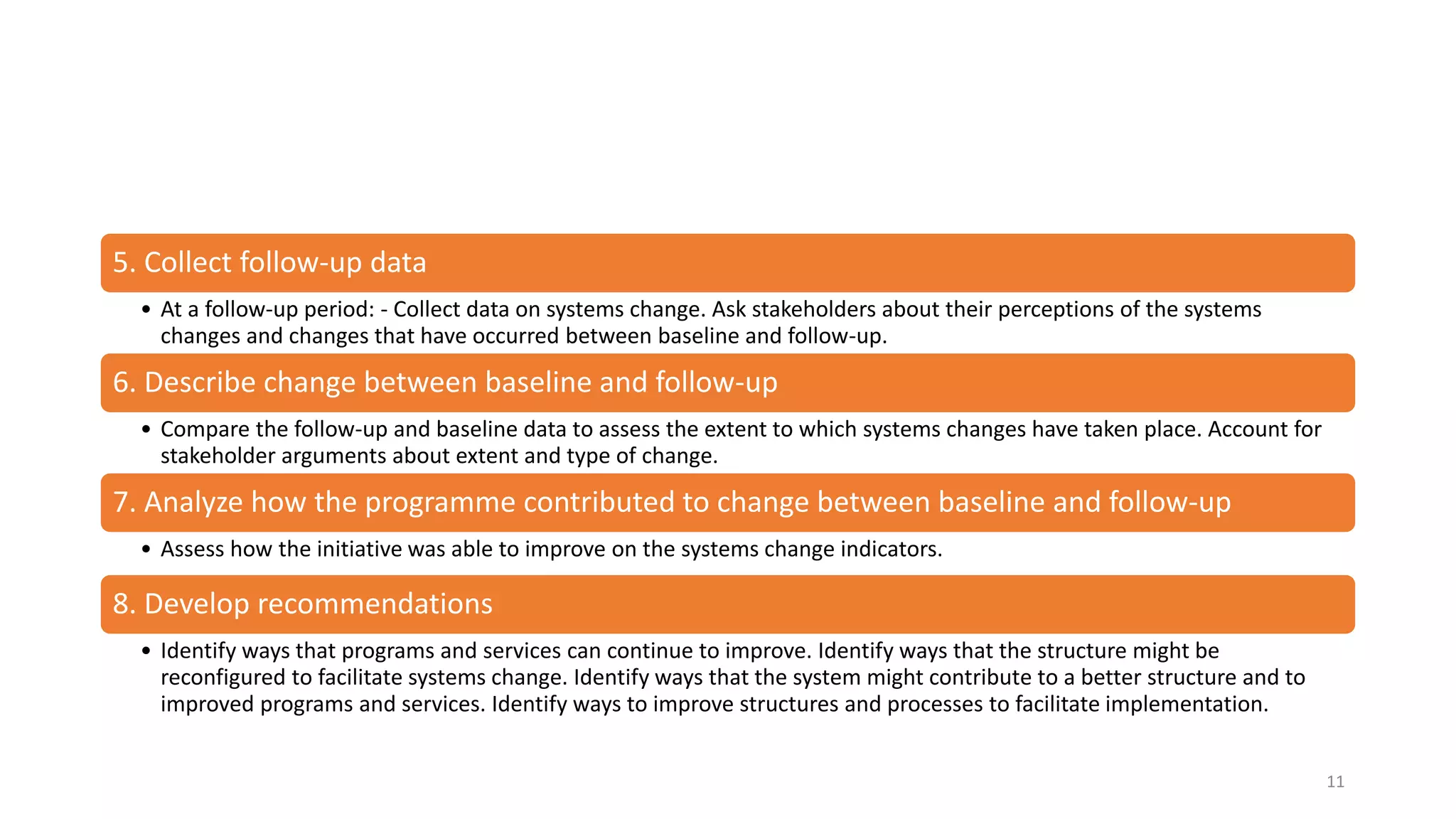

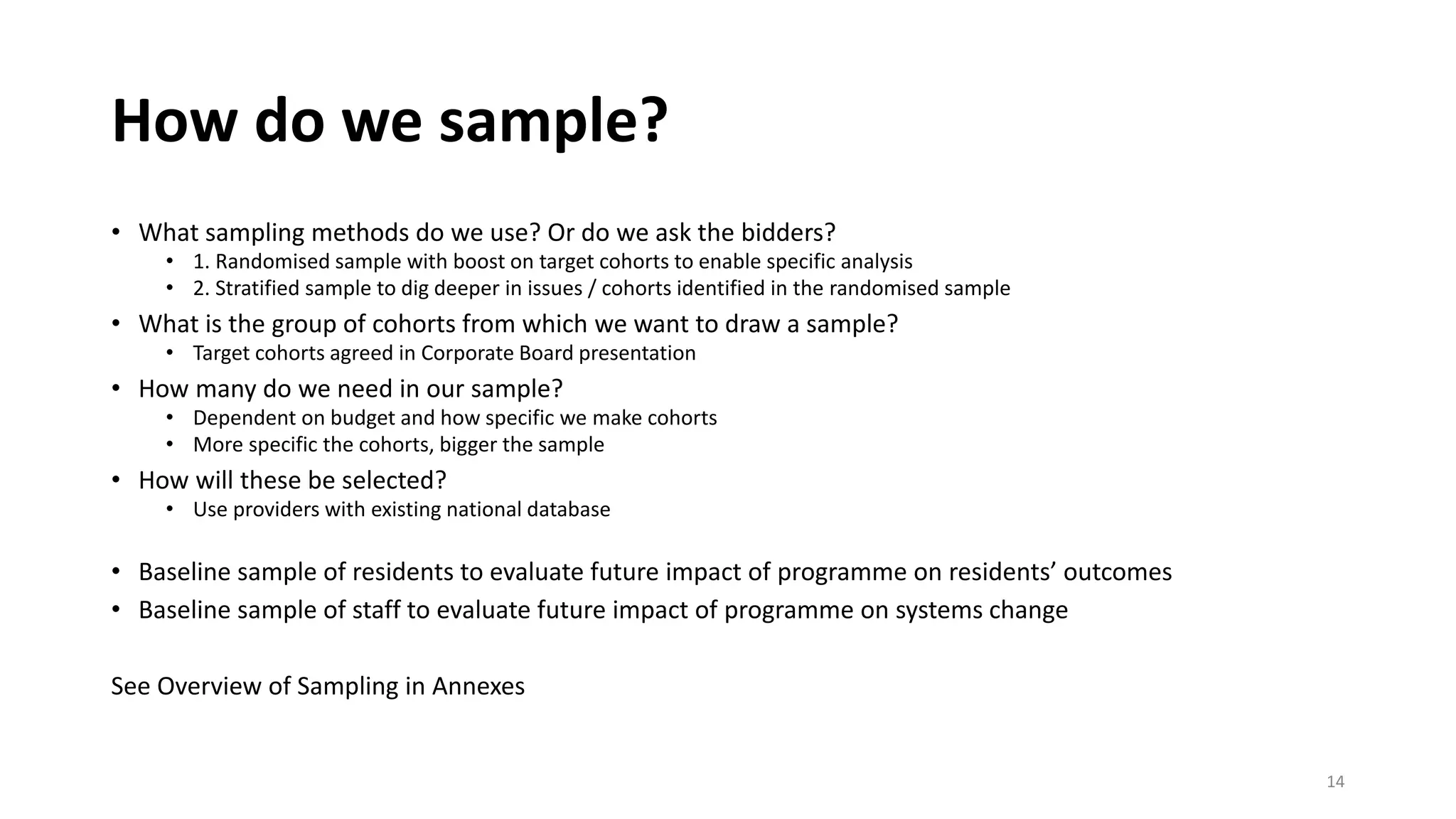

The document outlines an evaluation framework for systems change initiatives, emphasizing a tailored approach that captures baseline data, measures impact over time, and adapts as conditions change. It details responsibilities towards evaluation partners, the types of evaluation processes, and the sampling methods used for data collection. It also highlights the role of community engagement and technology in tracking behavior change among residents, in support of increased physical activity and self-sufficiency.