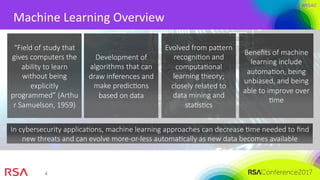

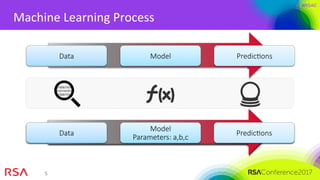

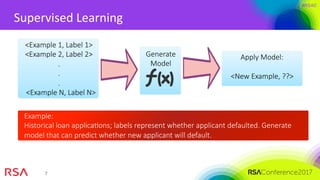

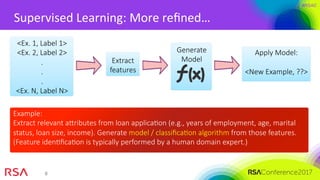

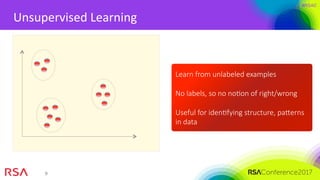

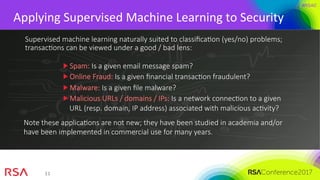

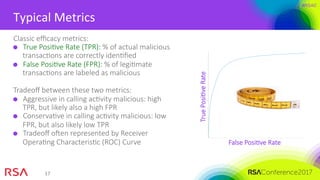

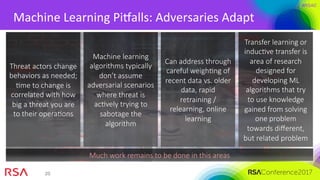

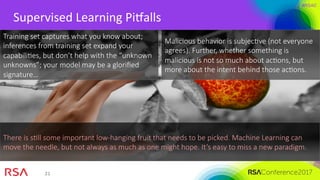

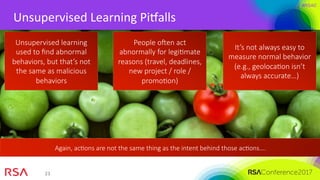

This document gives an overview of machine learning in cybersecurity, focusing on classification and pattern recognition with both supervised and unsupervised learning. It stresses that high-quality data and a sound understanding of evaluation metrics are essential for handling challenges such as class imbalance and evolving threats. Recommended practices include careful model deployment, continuous updates, and making sure all stakeholders understand how the algorithms work and where their limits lie.
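To make the point about evaluation metrics and class imbalance concrete, here is a minimal sketch in plain Python. The data is entirely hypothetical (1,000 events of which 10 are malicious), and the `evaluate` helper and `Metrics` class are illustrative names, not taken from the document. It compares a trivial "always benign" baseline with a hypothetical detector to show why accuracy alone can mislead when attacks are rare.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def evaluate(y_true, y_pred, positive=1):
    """Compute basic binary-classification metrics from label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Guard against division by zero when nothing is predicted positive
    # or when there are no positive examples.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return Metrics(accuracy, precision, recall, f1)


# Hypothetical, heavily imbalanced data: 10 malicious events (1), 990 benign (0).
y_true = [1] * 10 + [0] * 990

# Baseline that never raises an alert.
always_benign = [0] * 1000
baseline = evaluate(y_true, always_benign)

# Hypothetical detector: catches 8 of the 10 attacks, raises 20 false alarms.
detector_pred = [1] * 8 + [0] * 2 + [1] * 20 + [0] * 970
detector = evaluate(y_true, detector_pred)

print(f"baseline: accuracy={baseline.accuracy:.3f} recall={baseline.recall:.3f}")
print(f"detector: accuracy={detector.accuracy:.3f} recall={detector.recall:.3f} "
      f"precision={detector.precision:.3f} f1={detector.f1:.3f}")
```

In this made-up scenario the baseline scores higher on accuracy than the detector even though it finds no attacks at all, which is why precision, recall, and F1 are usually reported alongside accuracy for imbalanced security data.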