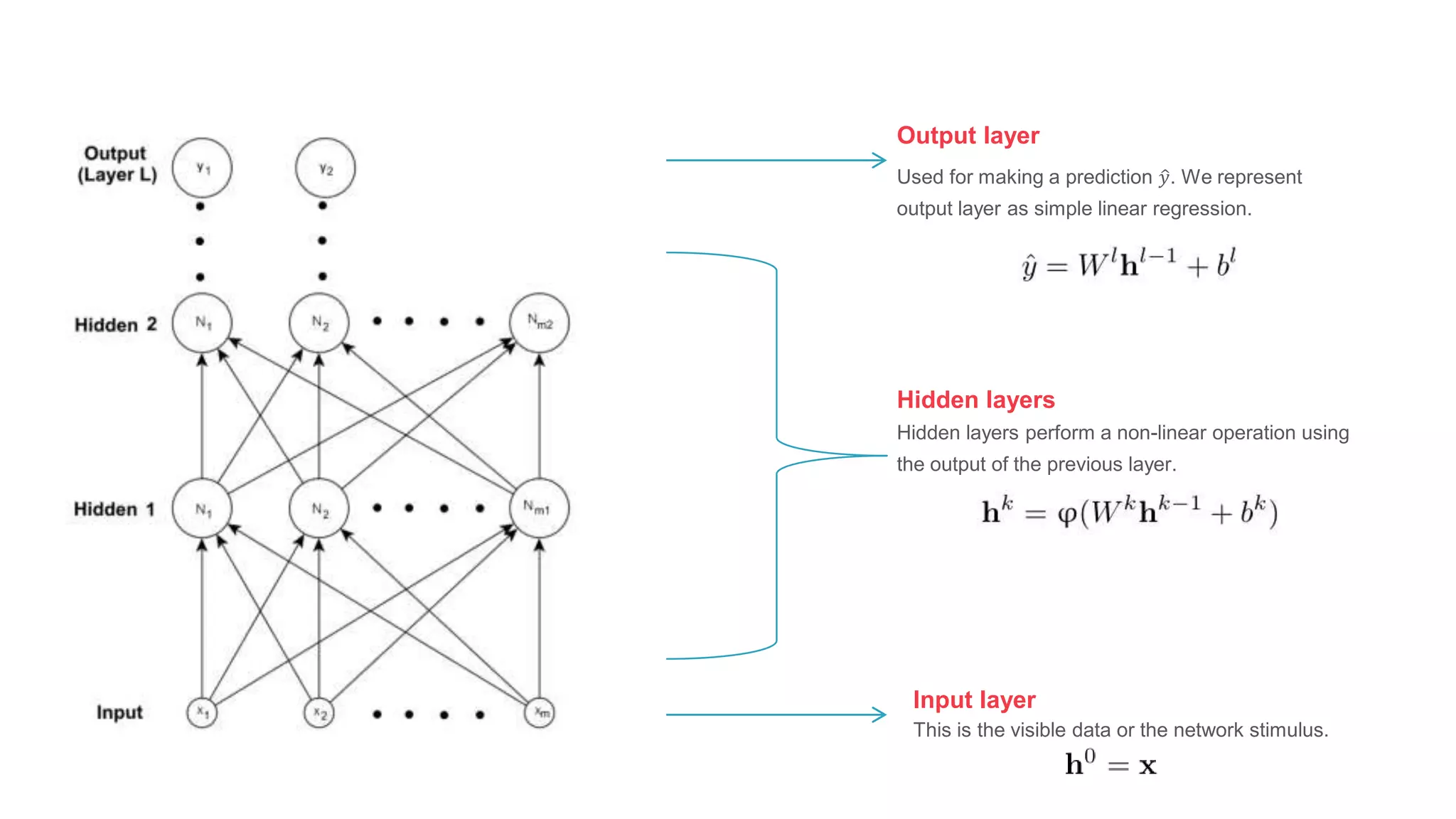

This document introduces core machine learning concepts: tasks, experience, and performance measures. It gives the definitions of machine learning by Arthur Samuel and Tom Mitchell, describes common tasks such as classification, regression, and clustering, treats supervised and unsupervised learning as two kinds of experience, and gives examples of performance measures for different tasks. It closes with a worked example: applying machine learning to the MNIST handwritten digit classification problem.

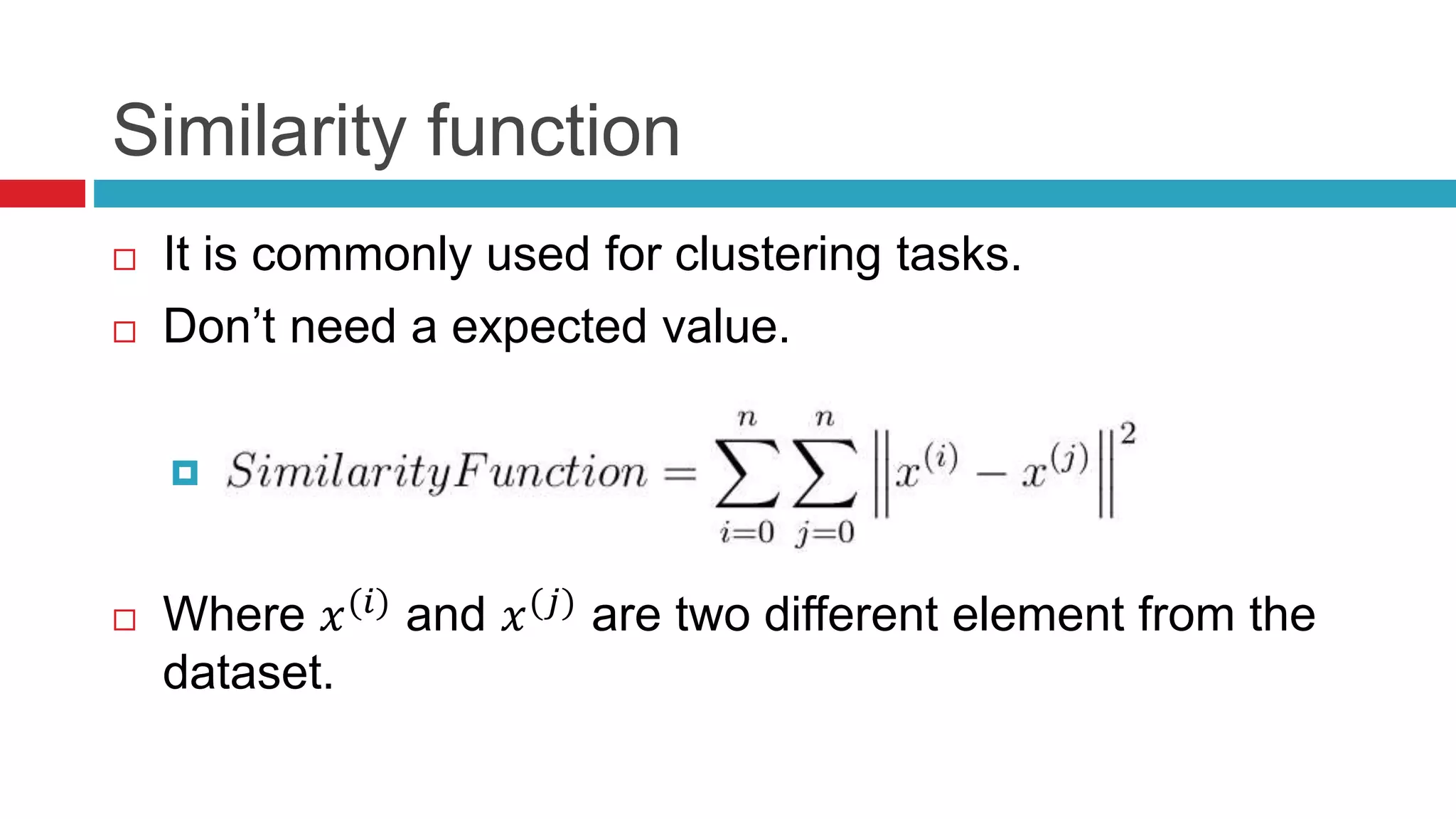

![Input: a gray-scale image of 28x28 pixels. Output: classification of the image in the range [0,9].](https://image.slidesharecdn.com/seminariodemachinelearning-180721143847/75/Machine-Learning-Seminar-36-2048.jpg)
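
The input/output contract on this slide, together with a performance measure for it, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`flatten`, `classify`, `accuracy`) are hypothetical, the nearest-centroid rule is a stand-in chosen for brevity rather than the method used in the seminar, and the centroids and images are placeholder data, not values learned from MNIST.

```python
IMAGE_SIDE = 28    # each input is a 28x28 gray-scale image
NUM_CLASSES = 10   # each output is a digit label in [0, 9]


def flatten(image):
    """Turn a 28x28 list of rows into a flat list of 784 pixel values."""
    assert len(image) == IMAGE_SIDE
    assert all(len(row) == IMAGE_SIDE for row in image)
    return [pixel for row in image for pixel in row]


def classify(image, centroids):
    """Return the label in [0, 9] whose centroid is closest to the image."""
    pixels = flatten(image)

    def squared_distance(label):
        return sum((p - c) ** 2 for p, c in zip(pixels, centroids[label]))

    return min(range(NUM_CLASSES), key=squared_distance)


def accuracy(predictions, labels):
    """Performance measure: fraction of images classified correctly."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Placeholder centroids (NOT learned from MNIST): class k is modelled as a
# uniform image of brightness k / 9, purely to make the sketch runnable.
centroids = [[k / 9] * (IMAGE_SIDE * IMAGE_SIDE) for k in range(NUM_CLASSES)]

# Two synthetic inputs: an all-black and an all-white image.
dark_image = [[0.0] * IMAGE_SIDE for _ in range(IMAGE_SIDE)]
bright_image = [[1.0] * IMAGE_SIDE for _ in range(IMAGE_SIDE)]

predictions = [classify(dark_image, centroids), classify(bright_image, centroids)]
print(predictions, accuracy(predictions, [0, 9]))  # prints [0, 9] 1.0
```

In a real setting the centroids (or any other model parameters) would be estimated from labelled training images, which is the supervised experience, and accuracy would be measured on held-out images the model has not seen.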