This document provides an overview of machine learning basics, including:

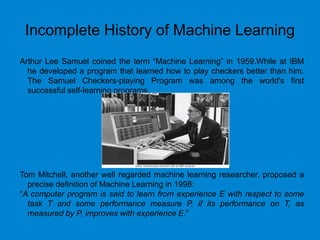

- A brief history of machine learning and definitions of machine learning and artificial intelligence.

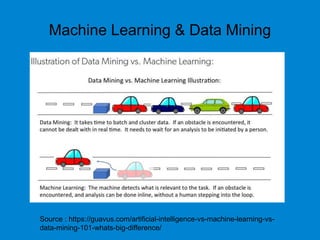

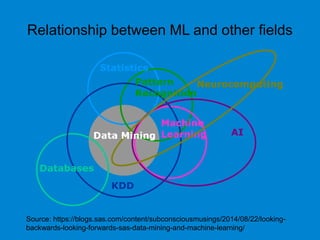

- When machine learning is needed and its relationships to statistics, data mining, and other fields.

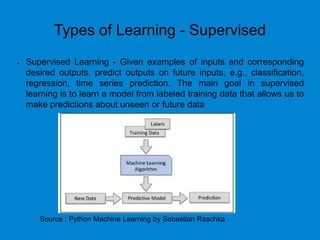

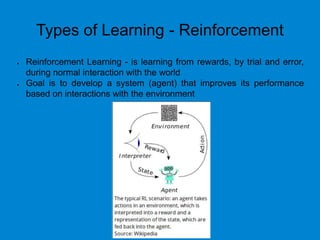

- The main types of learning problems: supervised, unsupervised, and reinforcement learning.

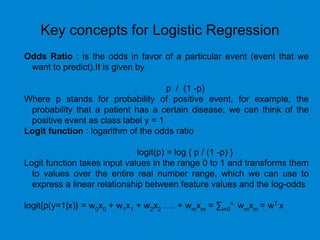

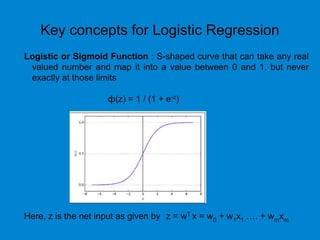

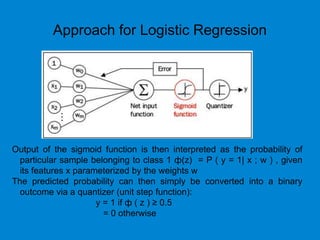

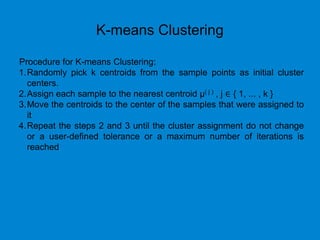

- Common machine learning algorithms and examples of classification, regression, clustering, and dimensionality reduction.

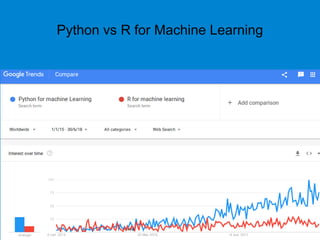

- Popular programming languages for machine learning, such as Python and R.

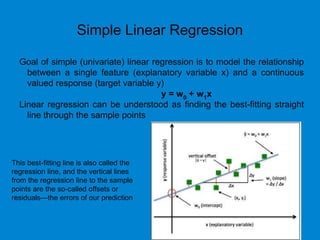

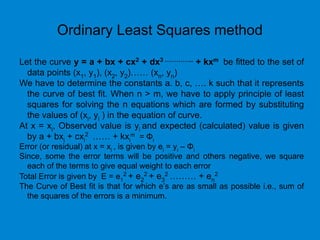

- An introduction to simple linear regression and how it is implemented in scikit-learn.
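
To make the last item concrete, here is a minimal sketch of simple linear regression with scikit-learn's `LinearRegression`. The toy data (points lying exactly on y = 2x + 1) and the variable names are assumptions chosen for illustration and do not come from the original slides.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical toy data: five points lying exactly on y = 2x + 1.
# scikit-learn expects X as a 2-D array of shape (n_samples, n_features).
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y = 2.0 * X.ravel() + 1.0

# Fit an ordinary least squares line y ≈ slope * x + intercept.
model = LinearRegression()
model.fit(X, y)

slope = model.coef_[0]        # fitted coefficient for the single feature
intercept = model.intercept_  # fitted bias term

# Predict the target for an unseen input x = 6.
prediction = model.predict(np.array([[6.0]]))[0]

print(f"slope={slope:.3f}, intercept={intercept:.3f}, prediction={prediction:.3f}")
```

Because the data is noise-free, the fitted slope and intercept should recover 2 and 1 up to floating-point error. With real data the fit would only approximate the underlying relationship.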