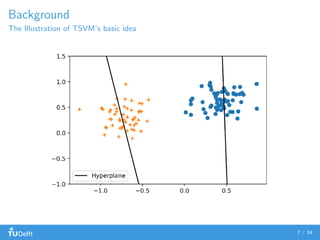

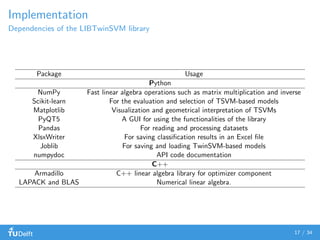

This presentation introduces LIBTwinSVM, an open-source library for Twin Support Vector Machines (TSVM) aimed at efficient classification. Key features include a user-friendly graphical interface, support for multiple kernel types, and integration with popular machine learning frameworks such as scikit-learn. The library is designed for simplicity and speed, and it bundles a range of utility features for machine learning tasks.

![Benchmarks: classification performance (slide 21 of 34)](https://image.slidesharecdn.com/libtwinsvm-tudelft-191129072907/85/LIBTwinSVM-A-Library-for-Twin-Support-Vector-Machine-53-320.jpg)

Experiment details:

- The datasets were obtained from the UCI repository (https://archive.ics.uci.edu/ml/index.php).
- All datasets were normalized to the range [0, 1].
- 5-fold cross-validation was used for the evaluation.
- Grid search was used to find the optimal hyper-parameter values.
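The evaluation protocol on this slide (min-max normalization to [0, 1], 5-fold cross-validation, grid search over hyper-parameters) can be sketched with scikit-learn. This is a minimal illustration under several assumptions, not the benchmark code behind the slides: scikit-learn's `SVC` stands in for a TwinSVM classifier, the bundled breast cancer dataset (which originates from UCI) stands in for the benchmark datasets, and the parameter grid, the stratified shuffled folds, and the helper name `run_protocol` are all hypothetical choices. The scaler is placed inside a pipeline so that it is fitted on the training folds only, which may differ from how the original experiments applied the normalization.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC


def run_protocol(X, y, param_grid, n_splits=5, seed=0):
    """Scale features to [0, 1], then grid-search with k-fold cross-validation."""
    pipeline = Pipeline([
        ("scale", MinMaxScaler(feature_range=(0, 1))),
        # Assumption: a standard RBF-kernel SVM as a stand-in, NOT a TwinSVM.
        ("clf", SVC(kernel="rbf")),
    ])
    # Assumption: stratified, shuffled folds; the slides only say "5-fold".
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    search = GridSearchCV(pipeline, param_grid, cv=cv, scoring="accuracy")
    search.fit(X, y)
    return search


# Illustrative dataset and grid; neither is taken from the slides.
X, y = load_breast_cancer(return_X_y=True)
grid = {
    "clf__C": [2.0 ** k for k in (-2, 0, 2)],
    "clf__gamma": [2.0 ** k for k in (-4, -2, 0)],
}
search = run_protocol(X, y, grid)
print("best parameters:", search.best_params_)
print("mean 5-fold accuracy:", round(search.best_score_, 3))
```

Because the pipeline follows the scikit-learn estimator interface, any classifier that implements `fit` and `predict` could replace the `clf` step without changing the rest of the sketch.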