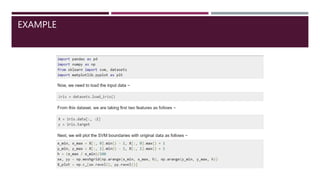

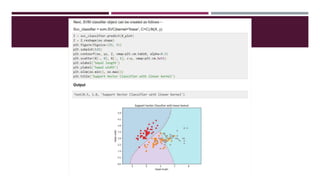

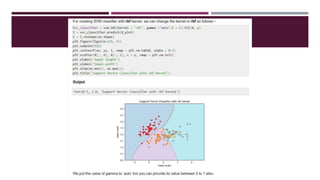

The document discusses the kernels used in support vector machines (SVMs) for classification: linear, polynomial, and radial basis function (RBF). It gives the mathematical formula for each kernel and explains that a kernel implicitly maps the input data into a higher-dimensional space, where a problem that is not linearly separable in the original space can become separable. The document also notes that although SVM classifiers offer high accuracy, their long training times make them a poor fit for large datasets.
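The three kernels named above can be sketched in a few lines of plain Python. This is a minimal illustration using the common textbook forms, not necessarily the exact formulas in the document: linear `x·y`, polynomial `(gamma * x·y + coef0) ** degree`, and RBF `exp(-gamma * ||x - y||^2)`. The parameter names `degree`, `gamma`, and `coef0` and their default values are assumptions chosen for the example.

```python
import math


def dot(x, y):
    """Inner product of two equal-length sequences of numbers."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    """Linear kernel: the plain inner product x . y."""
    return dot(x, y)


def polynomial_kernel(x, y, degree=2, gamma=1.0, coef0=1.0):
    """Polynomial kernel: (gamma * x . y + coef0) ** degree.

    degree, gamma, and coef0 are illustrative defaults (assumed here).
    """
    return (gamma * dot(x, y) + coef0) ** degree


def rbf_kernel(x, y, gamma=0.5):
    """RBF kernel: exp(-gamma * squared Euclidean distance between x and y).

    gamma is an illustrative default (assumed here).
    """
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


x = [1.0, 2.0]
y = [3.0, 4.0]
print(linear_kernel(x, y))      # 11.0
print(polynomial_kernel(x, y))  # 144.0
print(rbf_kernel(x, y))         # exp(-4), since the squared distance is 8
```

Each function returns a similarity score computed directly from the original inputs, which is why the higher-dimensional mapping never has to be built explicitly. The RBF value is 1 for identical points and decays toward 0 as the points move apart.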