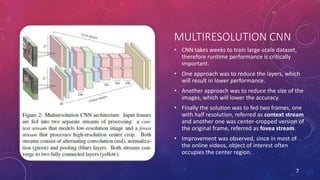

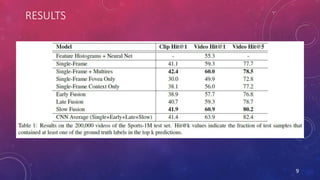

This document discusses the application of convolutional neural networks (CNNs) to large-scale video classification, using a dataset of approximately 1 million YouTube videos. It compares several ways of extending a CNN's connectivity in the time domain, introduces a multiresolution architecture that improves training speed and runtime performance, and reports accuracy gains over strong feature-based baselines. The findings demonstrate the potential of CNNs for video classification and point to avenues for future work, including the use of recurrent neural networks to produce better video-level predictions.
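One way to see why a multiresolution design can speed things up is to look at how it splits each input frame. The sketch below assumes two streams: a "context" stream that sees the whole frame at half resolution, and a "fovea" stream that sees a full-resolution center crop of half the frame size per side. The function names, the 2×2 average pooling used for downsampling, and the plain-list frame representation are illustrative assumptions, not the original work's exact preprocessing.

```python
from typing import List, Tuple

# Hypothetical representation: a grayscale frame as a list of pixel rows.
Frame = List[List[float]]


def context_stream_input(frame: Frame) -> Frame:
    """Downsample the full frame to half resolution (assumed: 2x2 average pooling)."""
    h, w = len(frame), len(frame[0])
    assert h % 2 == 0 and w % 2 == 0, "this sketch assumes even frame dimensions"
    return [
        [
            (frame[r][c] + frame[r][c + 1] + frame[r + 1][c] + frame[r + 1][c + 1]) / 4.0
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


def fovea_stream_input(frame: Frame) -> Frame:
    """Take the central region at full resolution, half the frame size per side."""
    h, w = len(frame), len(frame[0])
    ch, cw = h // 2, w // 2
    top, left = (h - ch) // 2, (w - cw) // 2
    return [row[left:left + cw] for row in frame[top:top + ch]]


def split_multiresolution(frame: Frame) -> Tuple[Frame, Frame]:
    """Return (context, fovea) inputs; both are half the original size per side."""
    return context_stream_input(frame), fovea_stream_input(frame)


if __name__ == "__main__":
    frame = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    context, fovea = split_multiresolution(frame)
    print(context)  # whole frame, coarse
    print(fovea)    # center, full detail
```

Each stream processes a quarter of the original pixels, so the two streams together touch about half as many pixels per frame as a single full-resolution network would, while the fovea stream keeps full detail where the subject is most likely to be.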