For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/09/vision-language-models-on-the-edge-a-presentation-from-hugging-face/

Cyril Zakka, Health Lead at Hugging Face, presents the “Vision-language Models on the Edge” tutorial at the May 2025 Embedded Vision Summit.

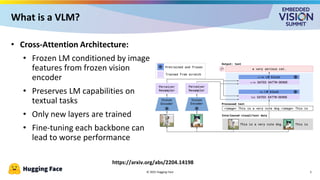

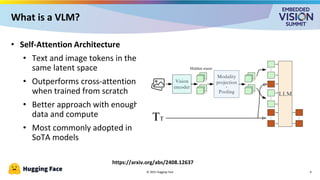

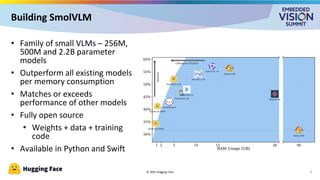

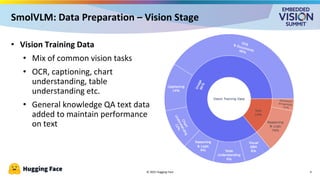

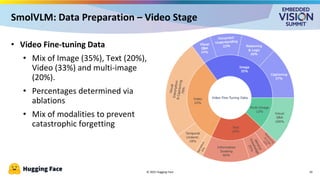

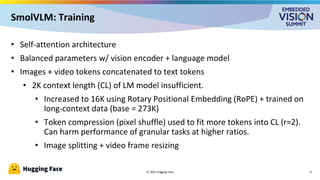

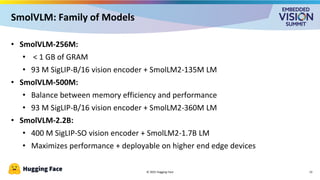

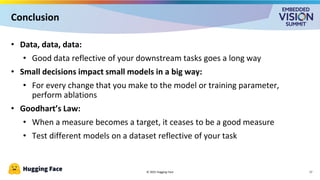

In this presentation, Zakka provides an overview of vision-language models (VLMs) and their deployment on edge devices using Hugging Face’s recently released SmolVLM as an example. He examines the training process of VLMs, including data preparation, alignment techniques and optimization methods necessary for embedding visual understanding capabilities within resource-constrained environments.

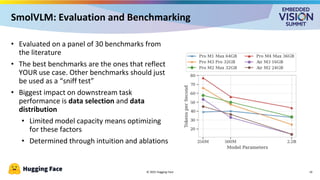

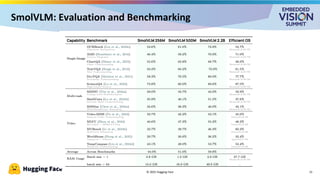

Zakka explains practical evaluation approaches, emphasizing how to benchmark these models beyond accuracy metrics to ensure real-world viability. To illustrate how these concepts play out in practice, he shares data from recent work implementing SmolVLM on an edge device.