PIC Tier-1 (LHCP Conference / Barcelona)

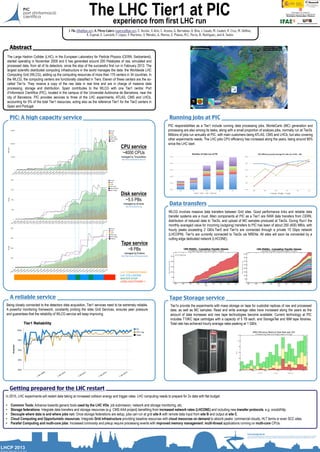

J. Flix (jflix@pic.es), A. Pérez-Calero (aperez@pic.es), E. Acción, V. Acin, C. Acosta, G. Bernabeu, A. Bria, J. Casals, M. Caubet, R. Cruz, M. Delfino, X. Espinal, E. Lanciotti, F. López, F. Martinez, V. Méndez, G. Merino, E. Planas, M.C. Porto, B. Rodríguez, and A. Sedov

The LHC Tier1 at PIC: experience from first LHC run

Abstract

The Large Hadron Collider (LHC), at the European Laboratory for Particle Physics (CERN, Switzerland), started operating in November 2009 and, by the end of its successful first run in February 2013, had generated around 200 petabytes of raw, simulated and processed data from all of its detectors. The data are managed by the largest scientific distributed computing infrastructure in the world: the Worldwide LHC Computing Grid (WLCG), which combines the computing resources of more than 170 centers in 34 countries. In the WLCG, the computing centers are functionally classified in Tiers. Eleven of these centers are the so-called Tier1s. They receive a copy of the raw data in real time and are in charge of massive data processing, storage and distribution. Spain contributes to the WLCG with one Tier1 centre: Port d’Informació Científica (PIC), located on the campus of the Universitat Autònoma de Barcelona, near the city of Barcelona. PIC provides services to three of the LHC experiments, ATLAS, CMS and LHCb, accounting for 5% of the total Tier1 resources, and also acts as the reference Tier1 for the Tier2 centers in Spain and Portugal.

PIC: A high capacity service

• CPU service: ~4000 CPUs managed by Torque/Maui (http://www.clusterresources.com)
• Disk service: ~5.5 PB managed by dCache (http://www.dcache.org)
• Tape service: ~8 PB managed by Enstore (http://www-ccf.fnal.gov/enstore)

A reliable service

Being closely connected to the detectors' data acquisition, Tier1 services need to be extremely reliable. A powerful monitoring framework, constantly probing the sites' Grid services, creates peer pressure and ensures that the reliability of the WLCG service keeps improving.

Running jobs at PIC

PIC responsibilities as a Tier1 include running data processing jobs. Monte Carlo (MC) generation and processing are also among its tasks, along with a small proportion of analysis jobs, which normally run at Tier2s. Millions of jobs run annually at PIC. The main customers are ATLAS, CMS and LHCb, but the needs of other experiments are covered as well. The CPU efficiency of LHC jobs has increased over the years and has been around 90% since the LHC start.

Data transfers

WLCG involves massive data transfers between Grid sites. High-performance links and reliable data transfer systems are a must. The main transfer flows at PIC as a Tier1 are RAW data transfers from CERN, distribution of reduced data to Tier2s, and upload of MC samples produced at Tier2s. During Run1 the monthly averaged rate for incoming (outgoing) transfers at PIC was about 250 (400) MB/s, with hourly peaks exceeding 2 GB/s. The Tier0 and the Tier1s are connected through a private 10 Gbps network (LHCOPN). Tier1s are currently connected to Tier2s via NRENs. All sites will soon be connected by a cutting-edge dedicated network (LHCONE).

Tape storage service

Tier1s provide the experiments with mass storage on tape for custodial replicas of raw and processed data, as well as MC samples. Average read and write rates have increased over the years as the amount of data grows and new tape technologies become available. Current technology at PIC includes T10KC tape cartridges with a capacity of 5 TB each, and StorageTek and IBM tape libraries. The total rate has reached hourly averages peaking at 1 GB/s.

Getting prepared for the LHC restart

In 2015, the LHC experiments will restart data taking at increased collision energy and trigger rates. LHC computing needs to prepare for twice the data on a flat budget:

• Common tools: Advance towards generic tools used by the LHC VOs: job submission, network and storage monitoring, etc.
• Storage federations: Integrate data transfers and storage resources (e.g. the CMS AAA project), benefiting from increased network rates (LHCONE) and including new transfer protocols, e.g. xrootd/http.
• Decouple where data is and where jobs run: Once storage federations are set up, jobs can run at grid site A with remote data input from site B and output at site C.
• Cloud computing and opportunistic resources: Integrate the Grid infrastructure, which provides baseline resources, with on-demand cloud resources to absorb peaks: commercial clouds, HLT farms or even SCC sites.
• Parallel computing and multi-core jobs: Increased luminosity and pileup require processing events with improved memory management: multi-threaded applications running on multi-core CPUs.

Acknowledgements

This work was partially supported by, and makes use of results produced by, the project “Implantación del Sistema de Computación Tier1 Español para el Large Hadron Collider Fase III”, funded by the Ministry of Science and Innovation of Spain under reference FPA2010-21816-C02-00.

LHCP 2013 Barcelona, Spain, May 13-18th, 2013
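
As a rough cross-check of the Run1 transfer figures quoted in the data transfers text, the monthly averaged rates can be converted into approximate monthly volumes. The sketch below is only illustrative: the helper name `volume_tb`, the 30-day month and the use of decimal units (1 TB = 10^6 MB) are assumptions made here, not figures or tools from the poster.

```python
SECONDS_PER_DAY = 86_400


def volume_tb(rate_mb_per_s: float, days: float = 30.0) -> float:
    """Volume in TB moved at a sustained rate (decimal units: 1 TB = 1e6 MB).

    Hypothetical helper for illustration; a 30-day month is assumed by default.
    """
    return rate_mb_per_s * SECONDS_PER_DAY * days / 1e6


# Monthly averaged Run1 rates quoted in the text (MB/s).
incoming_tb = volume_tb(250)   # transfers into PIC
outgoing_tb = volume_tb(400)   # transfers out of PIC

# Nominal capacity of one 10 Gbps link, expressed in GB/s (8 bits per byte).
link_gb_per_s = 10 / 8

print(f"incoming: ~{incoming_tb:.0f} TB per 30 days")
print(f"outgoing: ~{outgoing_tb:.0f} TB per 30 days")
print(f"one 10 Gbps link: {link_gb_per_s:.2f} GB/s nominal")
```

Under these assumptions, 250 MB/s sustained corresponds to roughly 0.65 PB per month and 400 MB/s to roughly 1 PB per month.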