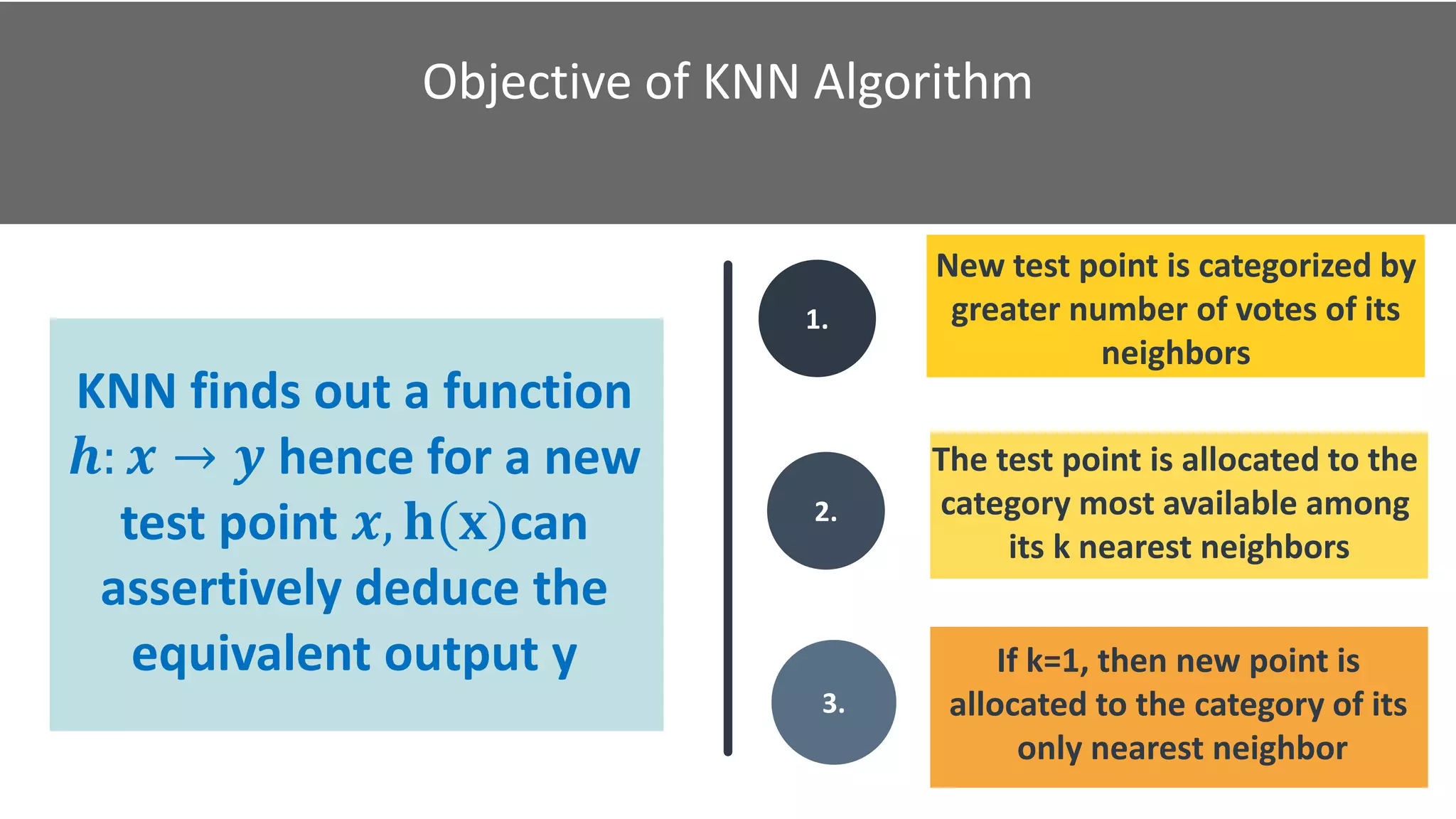

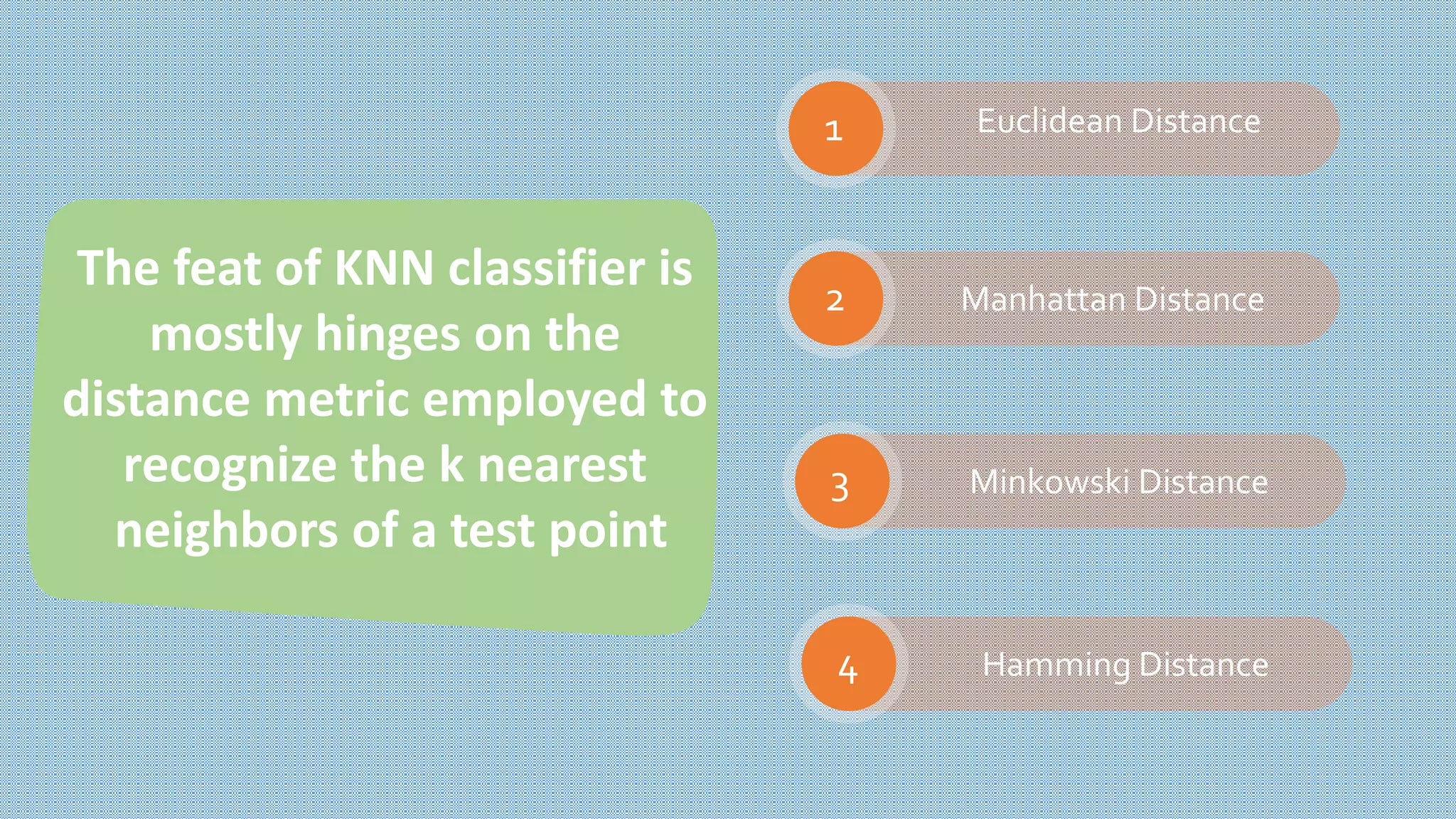

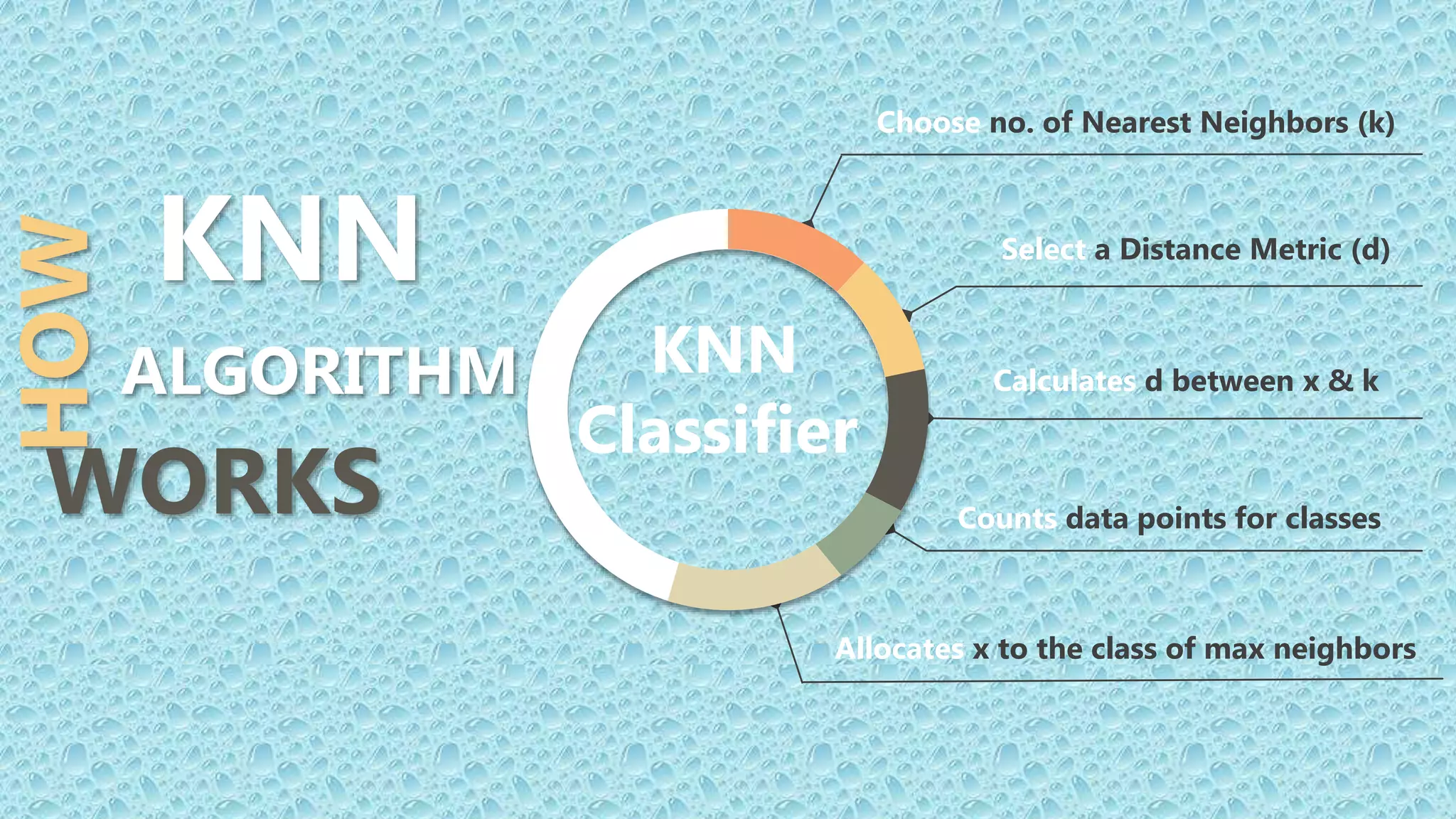

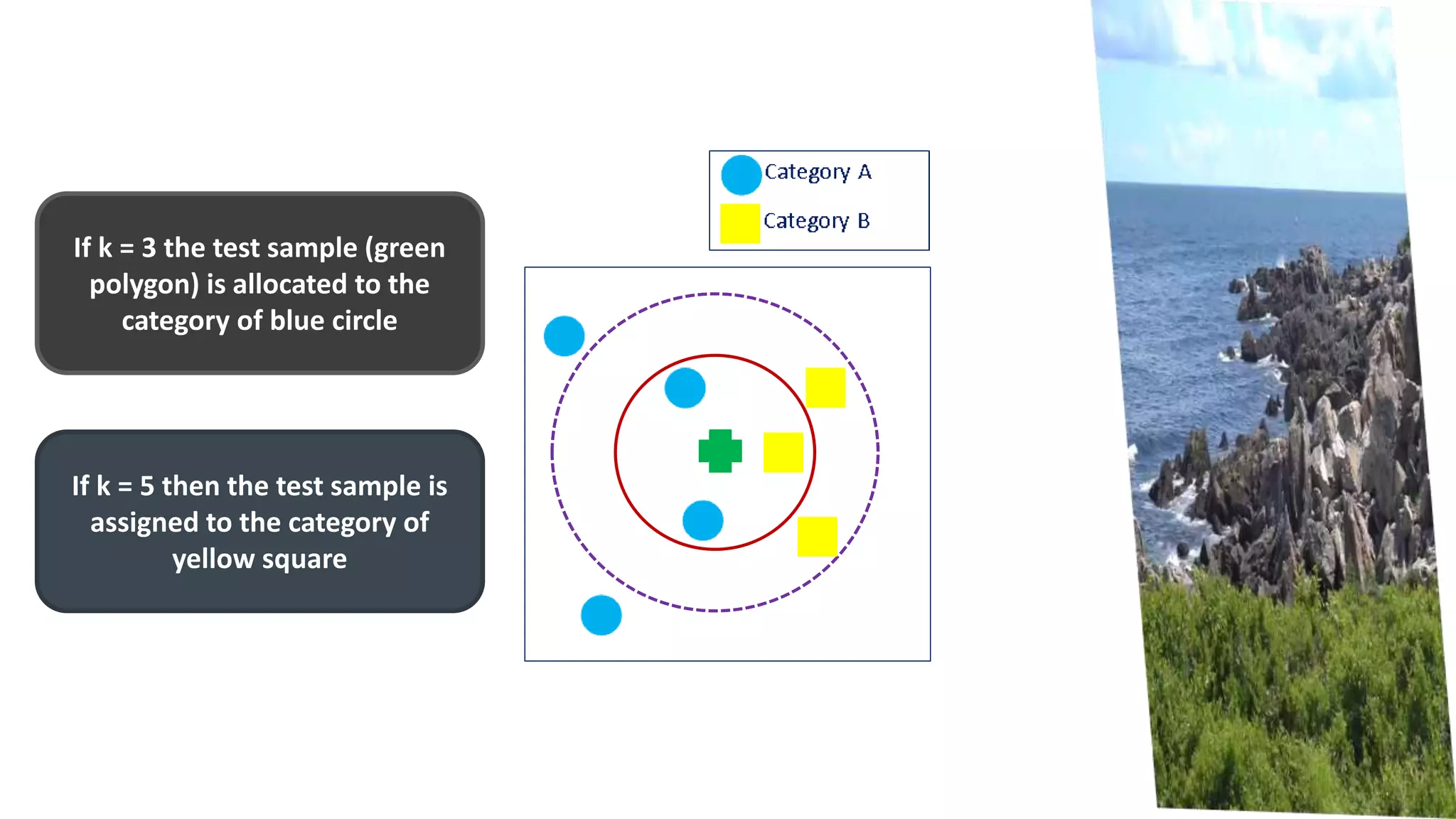

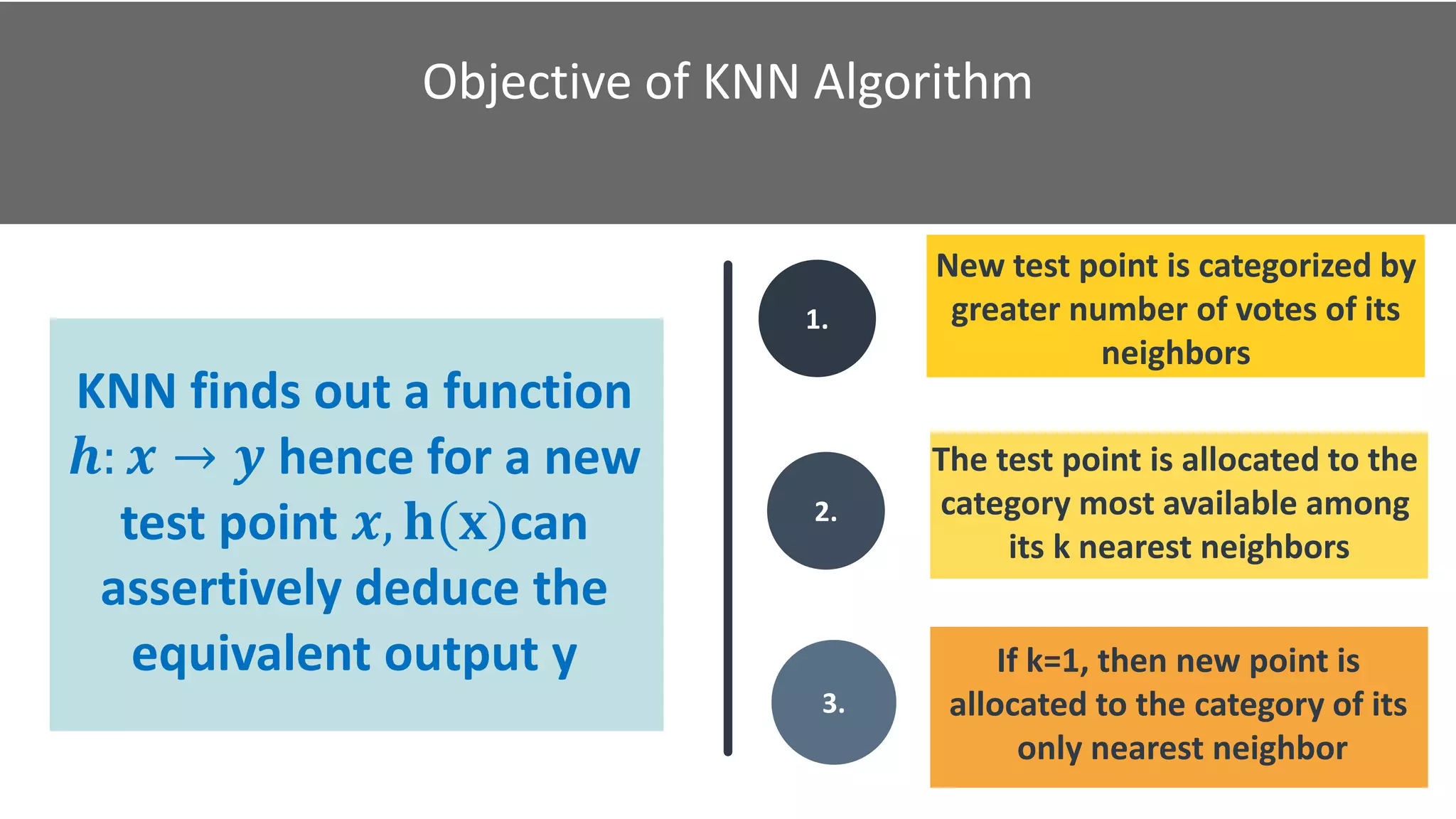

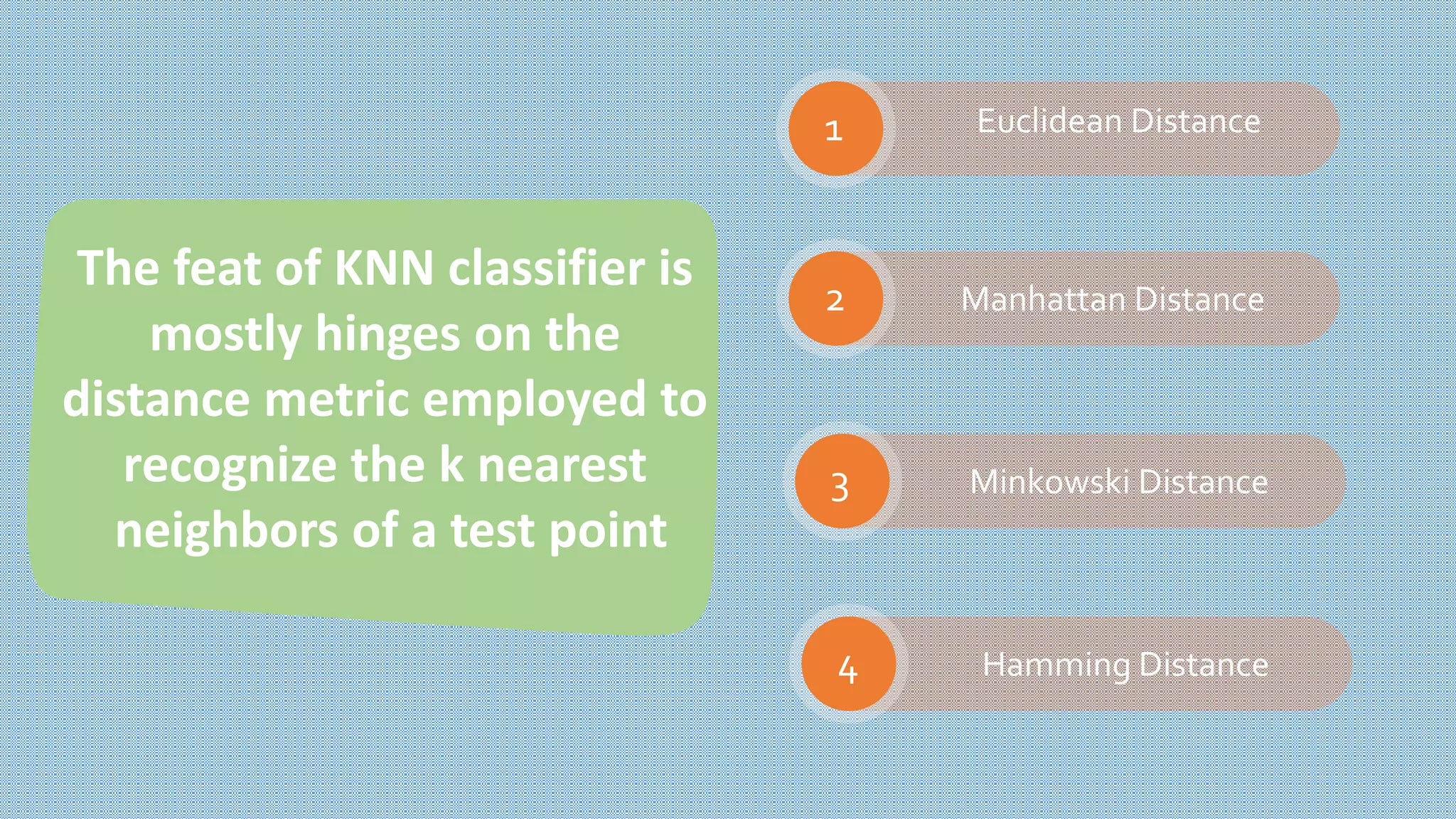

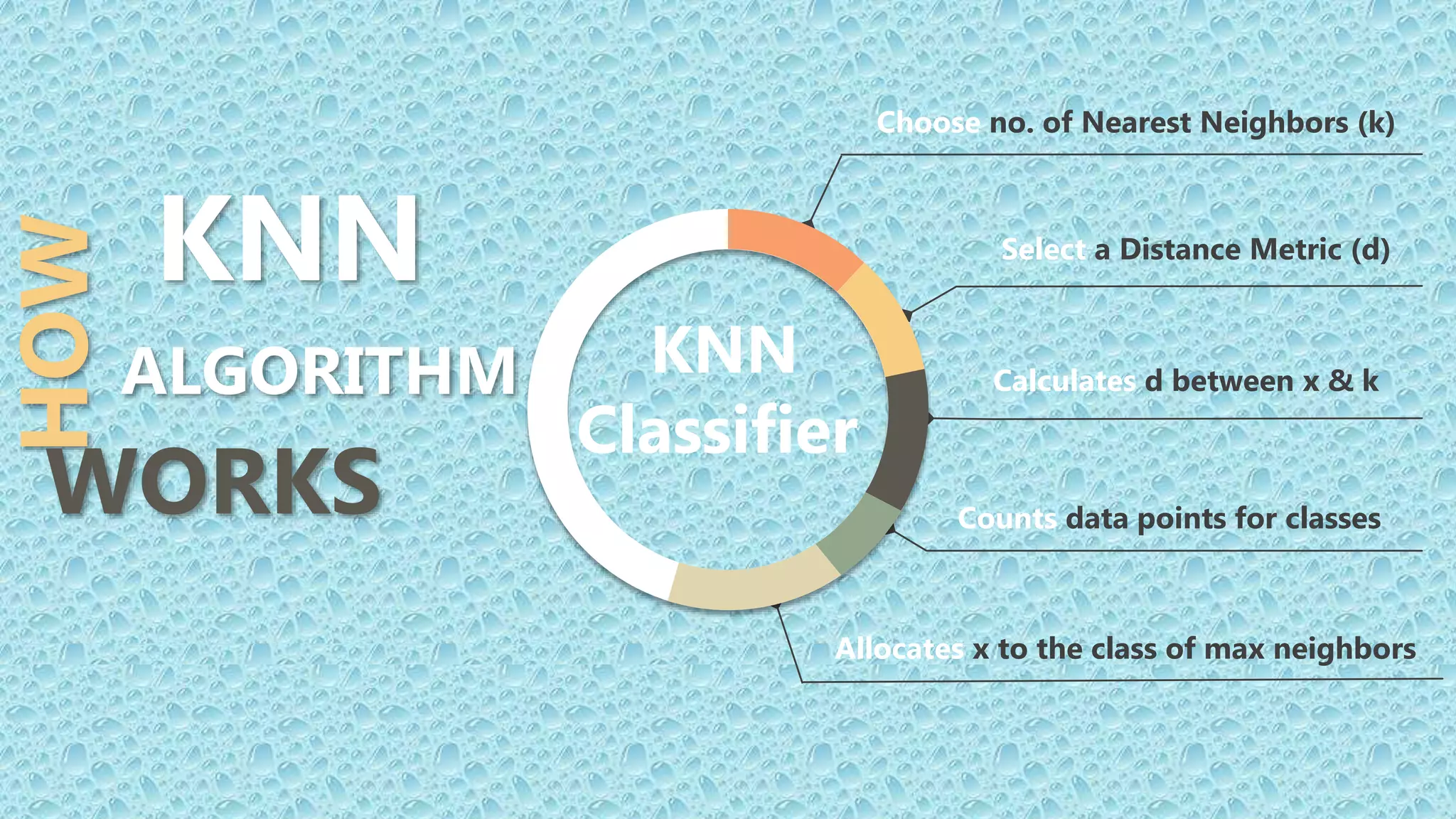

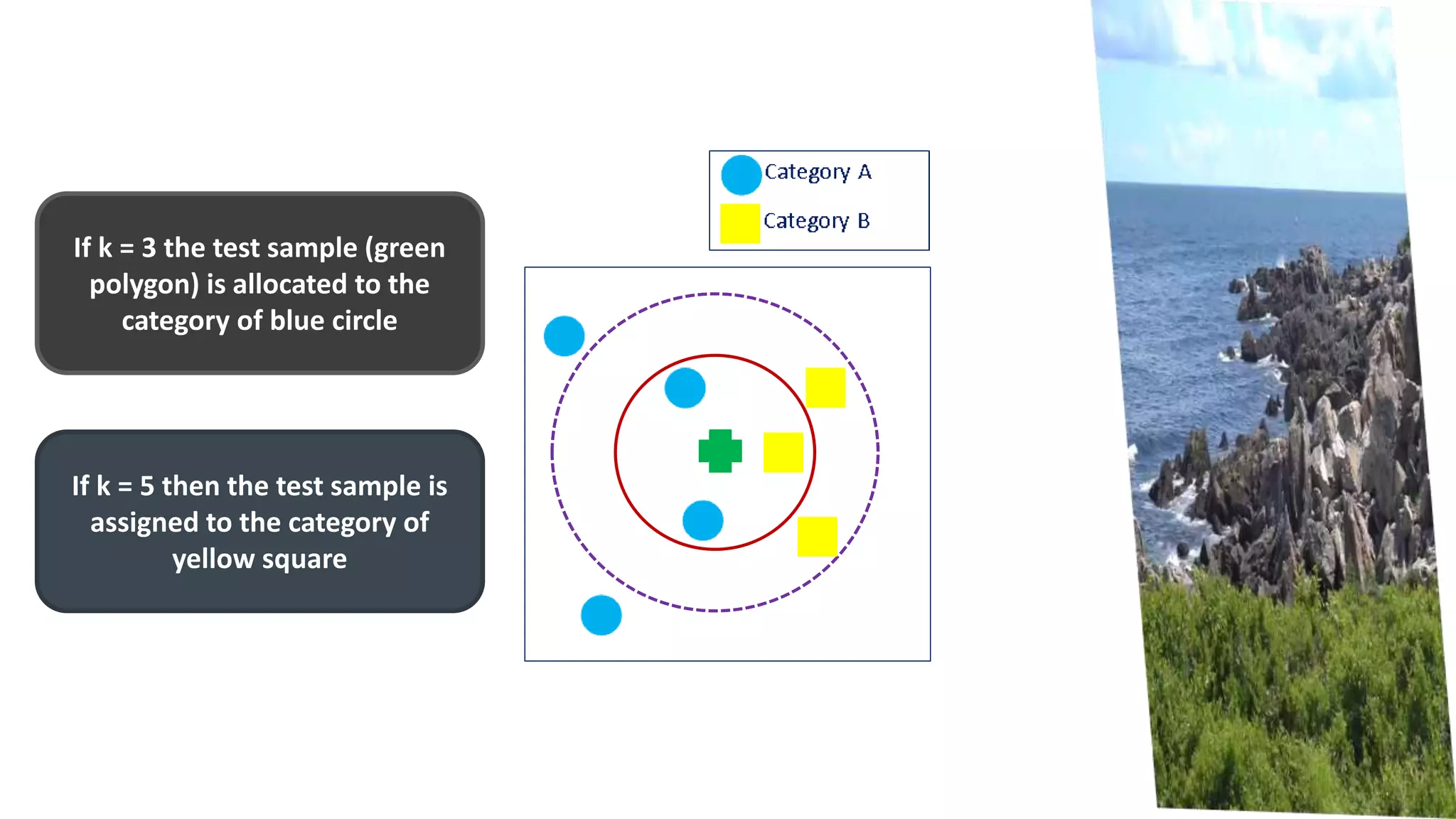

K-Nearest Neighbors (KNN) is a supervised machine learning algorithm that classifies a new data point according to the majority class among its k nearest neighbors. To classify a point, it finds the k closest training examples in the feature space, counts how many of those neighbors belong to each class, and assigns the new point to the most frequent class. As with other supervised methods, the objective is a function that maps inputs to outputs. KNN represents this function implicitly, through similarity to the stored training examples, rather than by fitting explicit parameters. It works well when the distance between points is a meaningful measure of similarity, for example Euclidean distance on features with comparable scales. To apply it, choose the number of neighbors k and a distance metric, then use them to find the closest training points and classify new data.
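The procedure above can be sketched from scratch in Python. This is a minimal illustration, assuming numeric feature vectors and Euclidean distance. The function names `euclidean` and `knn_classify` and the toy dataset are hypothetical and do not come from the presentation.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train_X, train_y, query, k=3, distance=euclidean):
    """Hypothetical helper: predict the label of `query` by majority vote
    among its k nearest training examples under `distance`."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training examples")
    # Rank every training example by its distance to the query point (brute force).
    ranked = sorted(zip(train_X, train_y), key=lambda pair: distance(pair[0], query))
    # Count how many of the k closest examples belong to each class.
    votes = Counter(label for _, label in ranked[:k])
    # Return the most frequent class. On a tie, most_common keeps insertion order,
    # so the tied label whose first occurrence is nearest to the query wins.
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated clusters labelled "A" and "B".
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (6.5, 7.0), (7.0, 6.0)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_classify(train_X, train_y, (1.8, 1.5), k=3))  # A
print(knn_classify(train_X, train_y, (6.2, 6.4), k=3))  # B
```

This version compares the query against every training point, so each prediction costs time proportional to the size of the training set. In practice, a library implementation such as scikit-learn's `KNeighborsClassifier` would typically be used instead.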