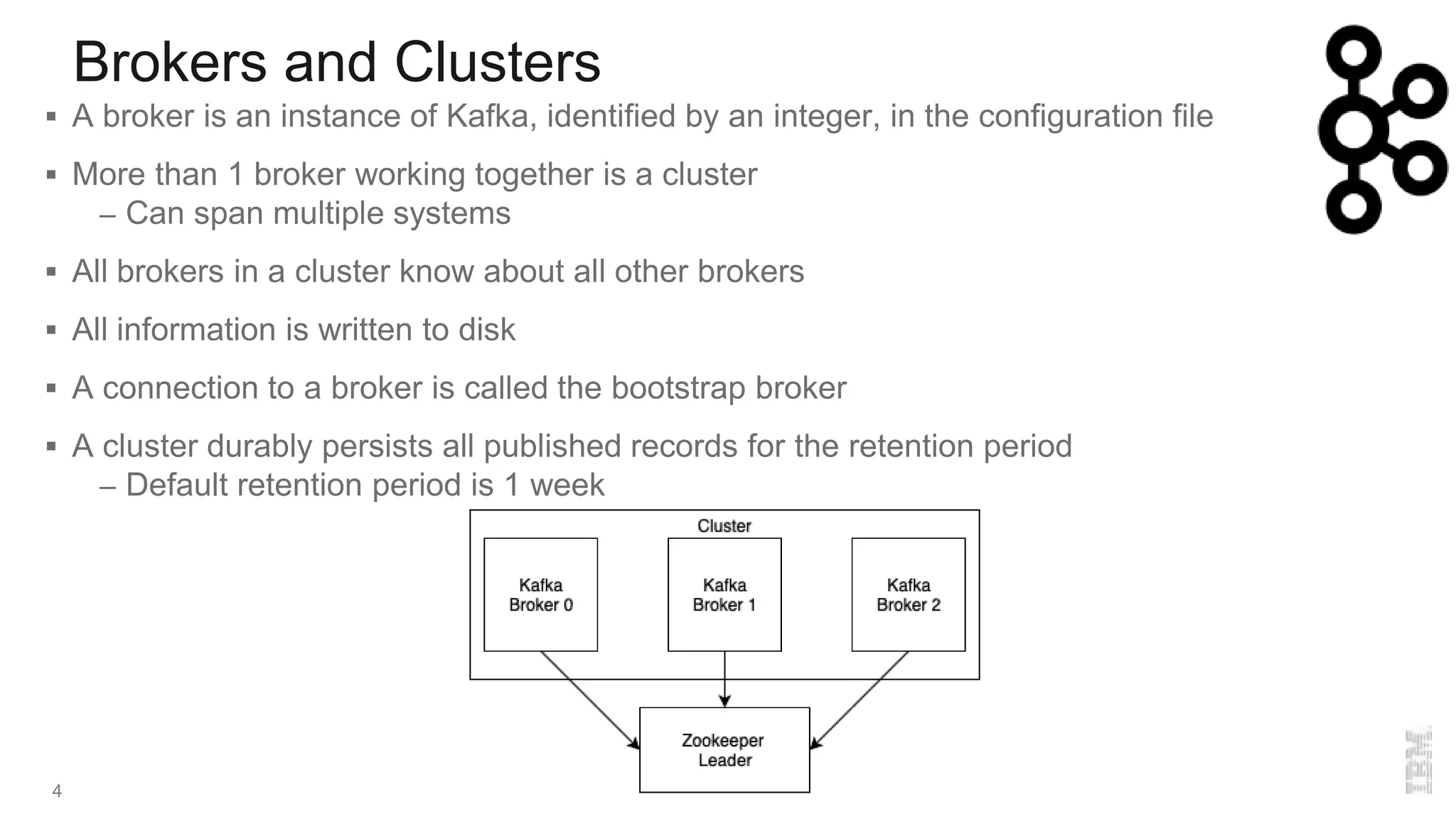

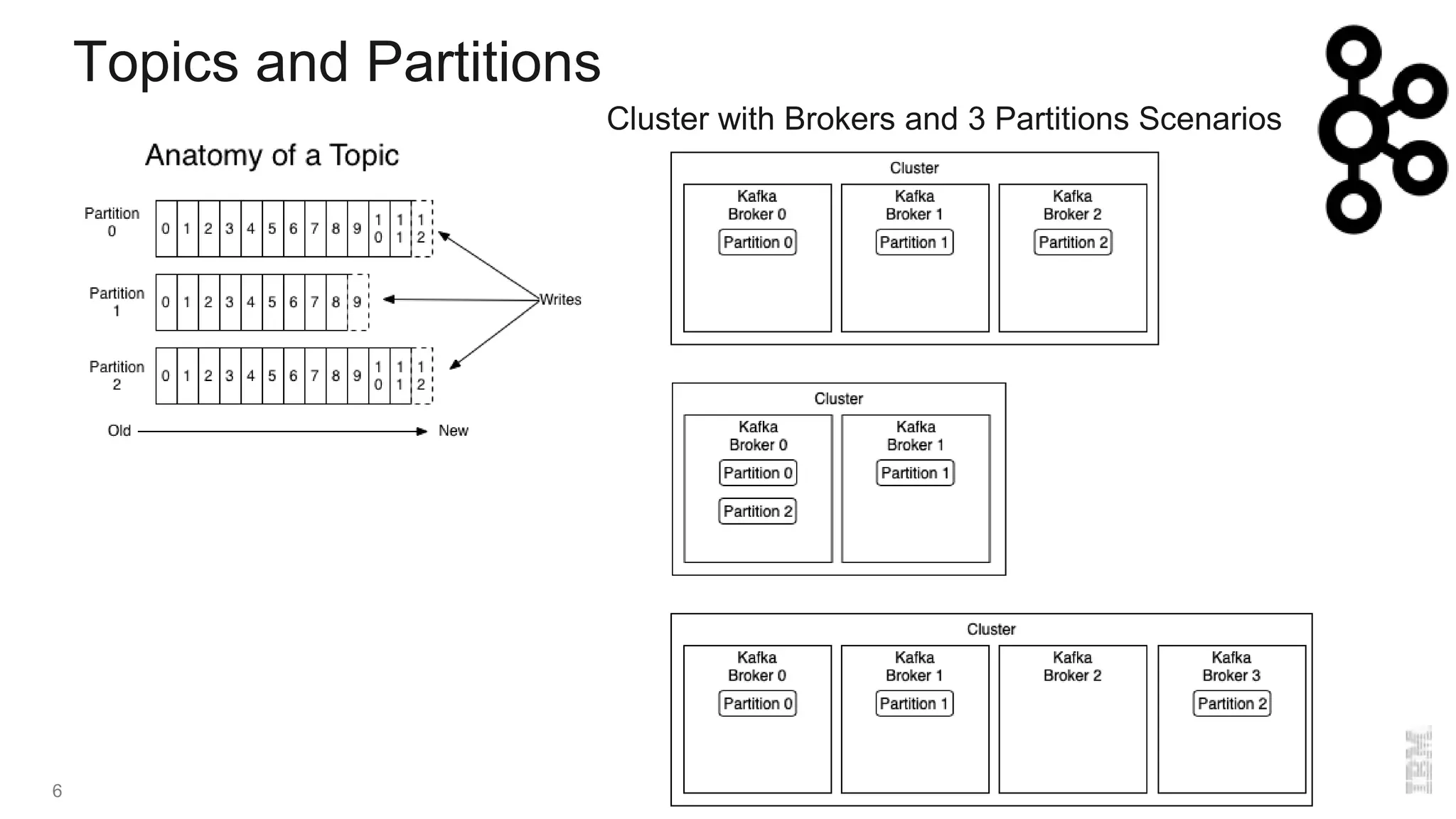

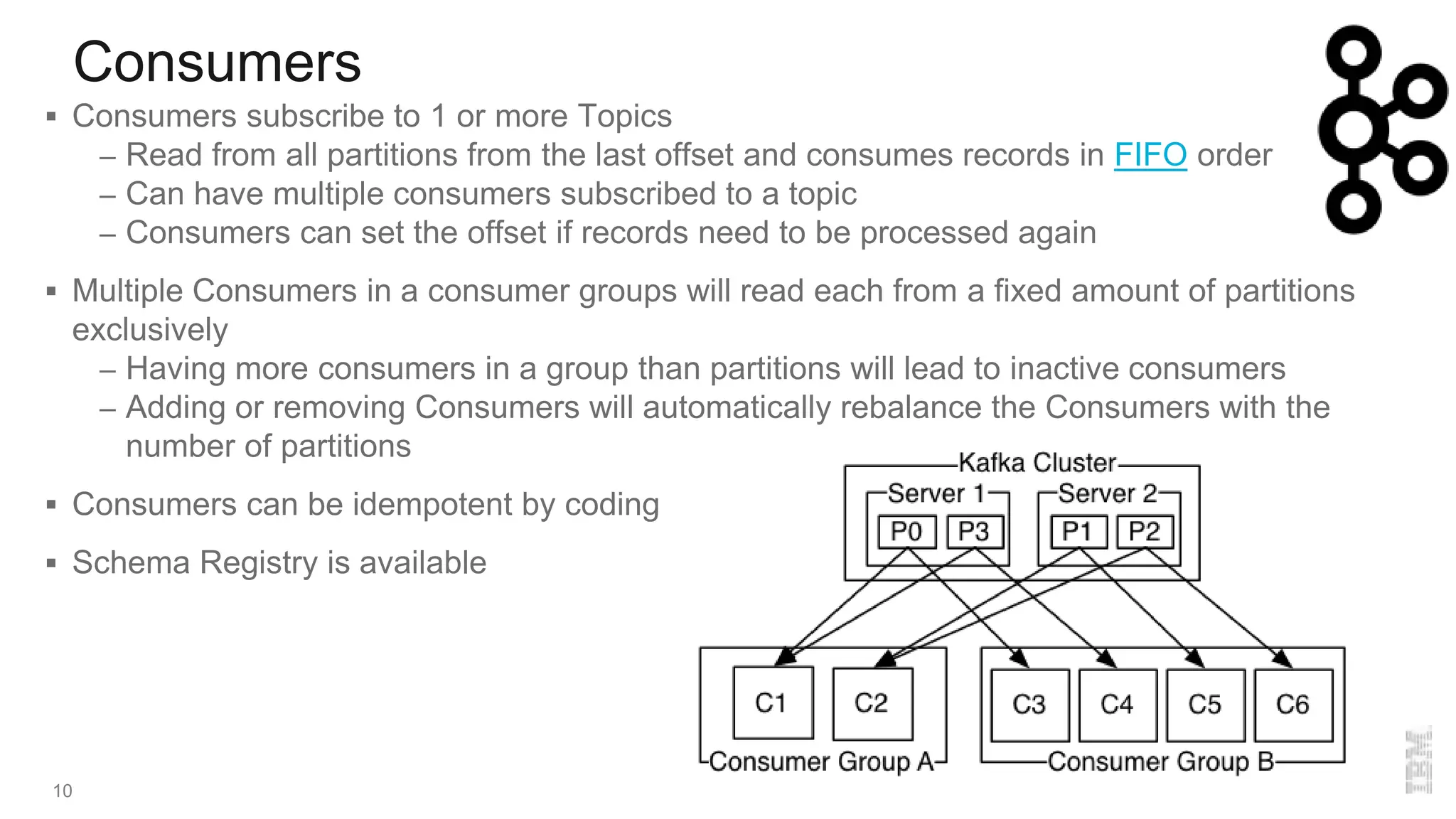

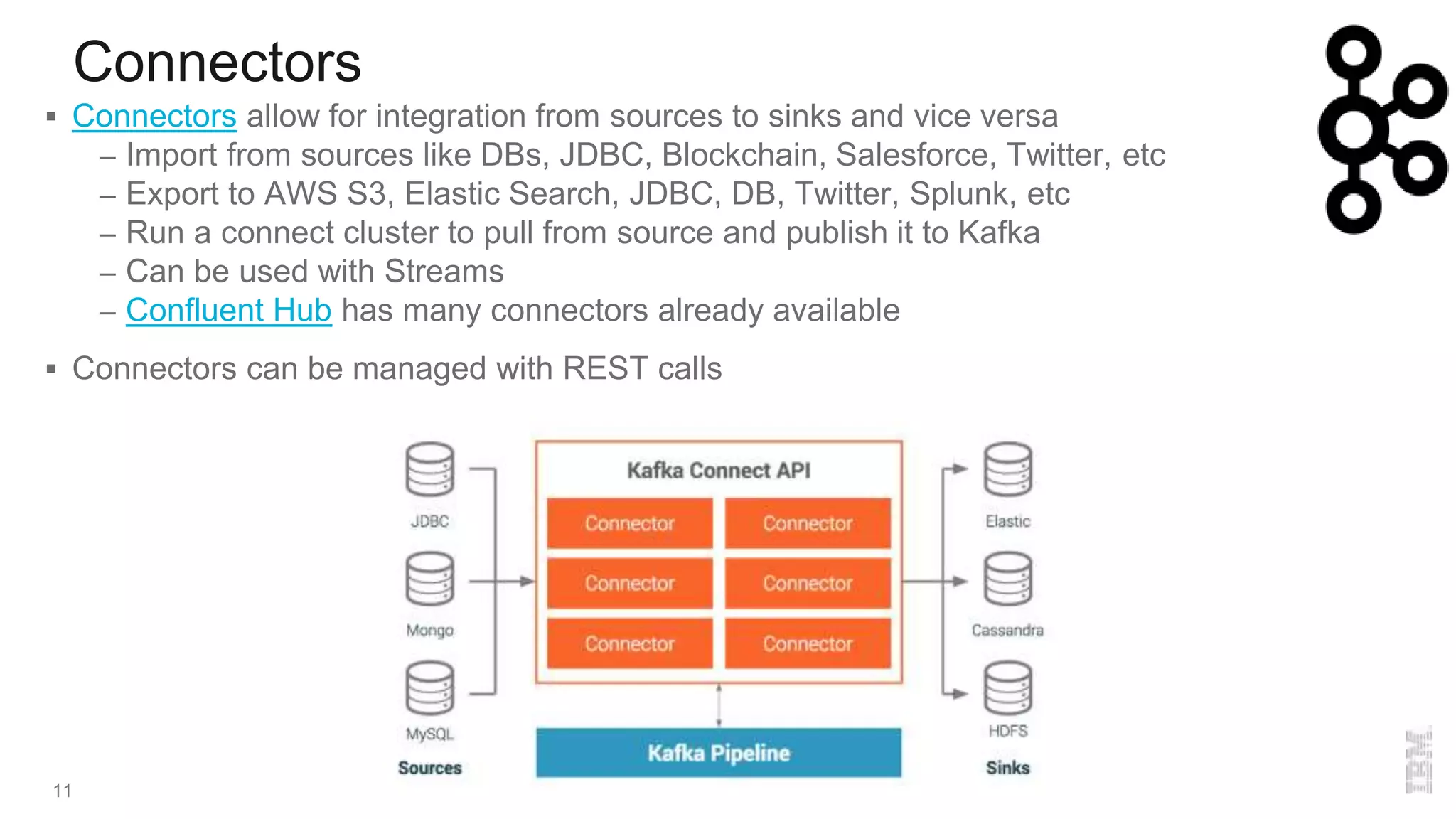

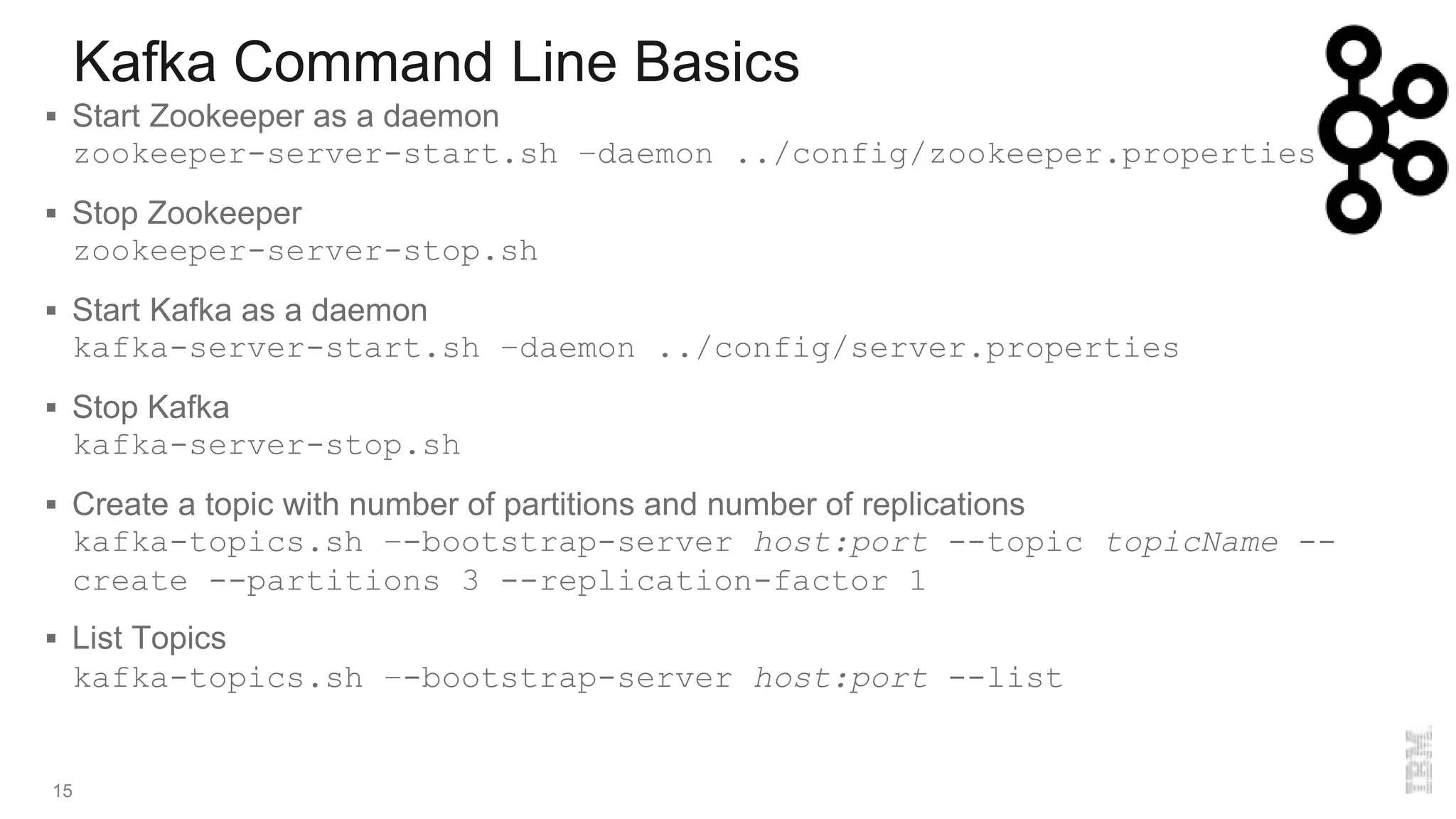

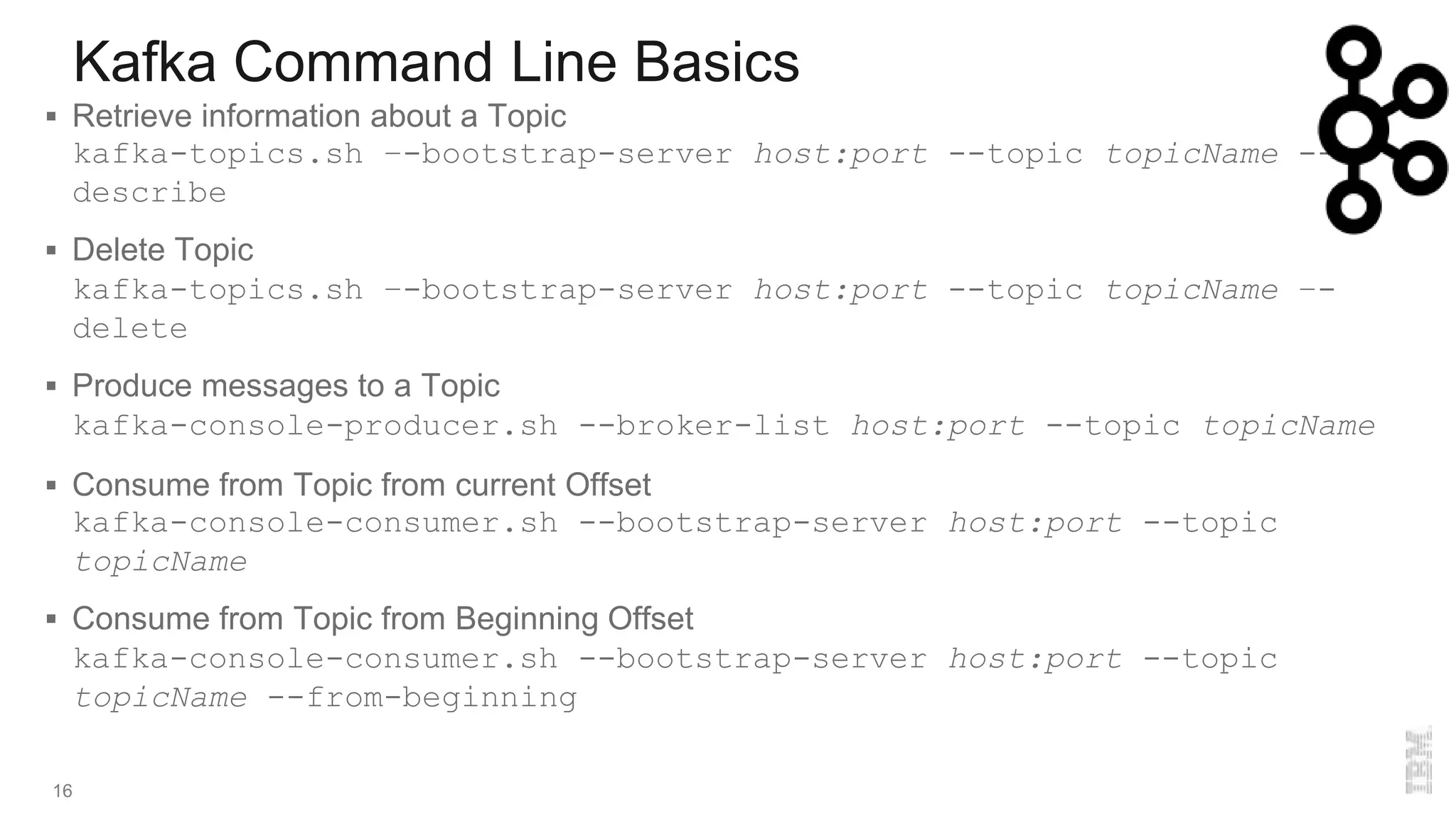

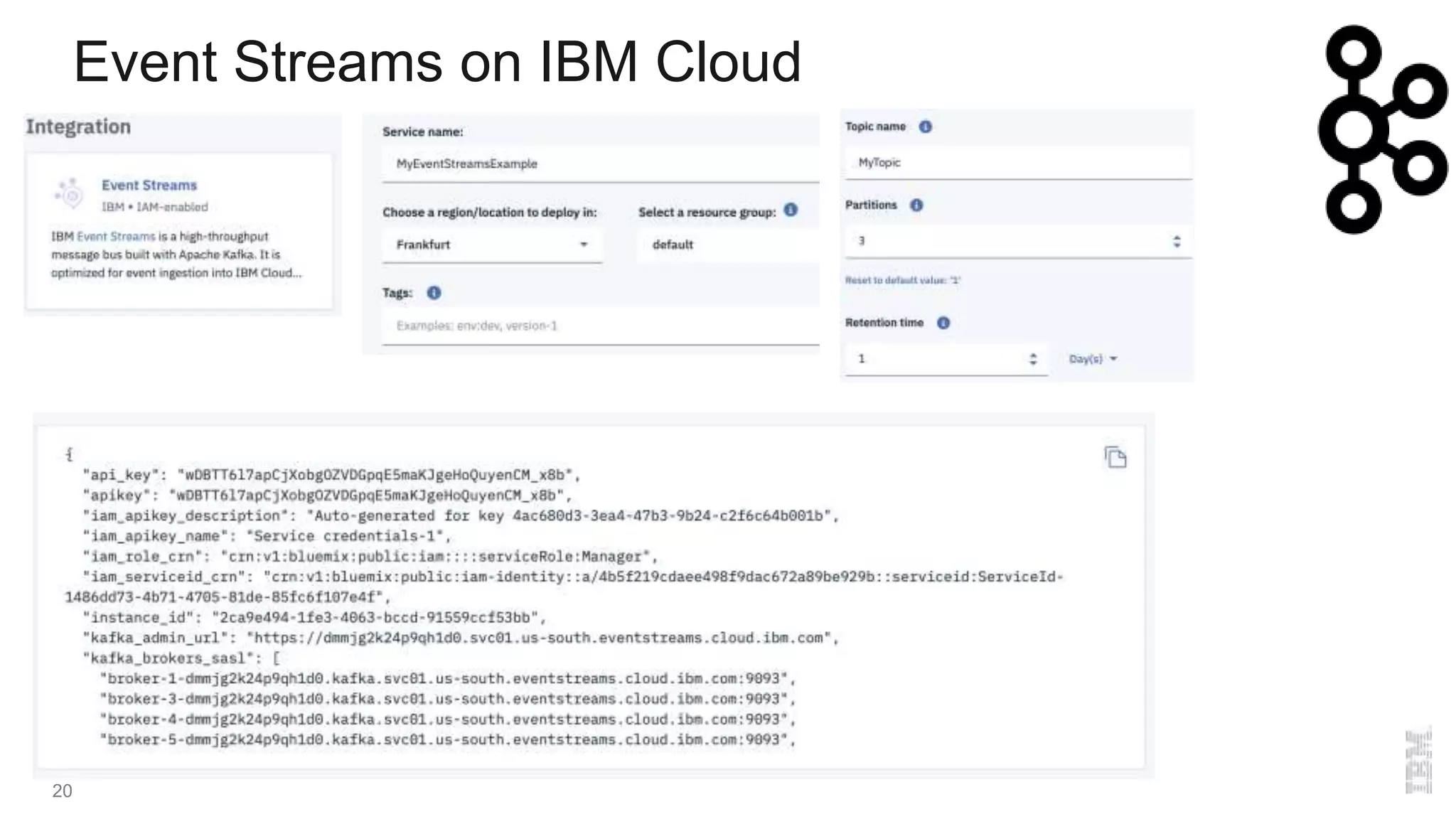

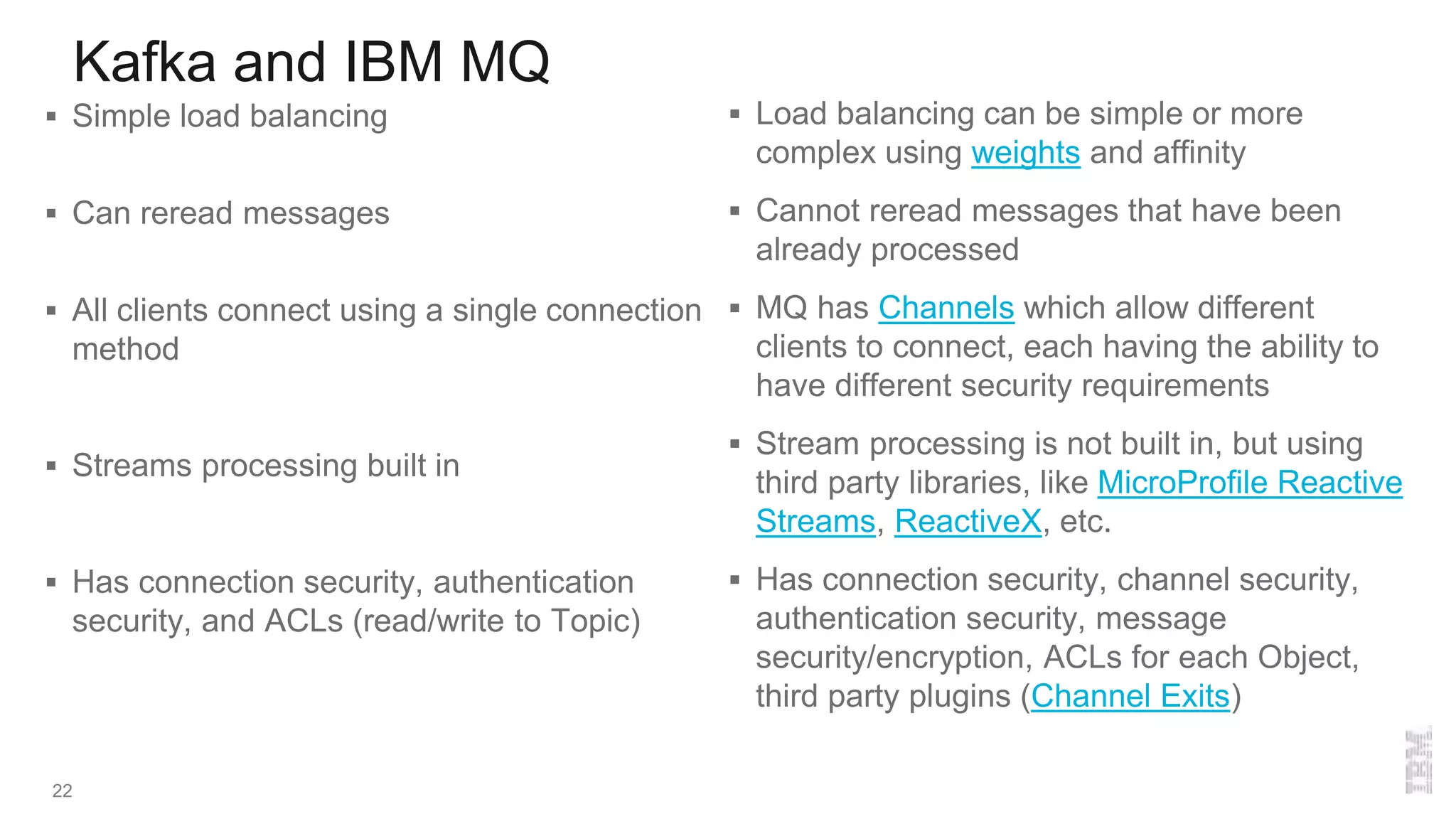

The document provides an overview of Kafka, a distributed publish-subscribe middleware originally developed at LinkedIn. It describes Kafka's architecture, its core components (brokers, topics, and partitions), and the features that make it fault tolerant and scalable. It also covers producer and consumer functionality, integration with external systems through connectors, and supporting tools such as the schema registry. Finally, it introduces IBM Event Streams, which builds on Kafka with enterprise features and is available on IBM Cloud in several deployment plans, with options for support.
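To make the topic and partition terminology concrete, the following is a minimal sketch of a hypothetical in-memory model. It is not the Kafka client API and does not model brokers or replication. It assumes a topic is split into a fixed number of partitions, each an append-only log, and that a record's key picks its partition. The `Topic` class and its methods are illustrative names only. `zlib.crc32` is a deterministic stand-in for Kafka's own key hashing, which uses murmur2 in the Java client.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical toy topic: each partition is an append-only list of (key, value) records."""
    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in hash (crc32), chosen only so the example is deterministic;
        # Kafka's default partitioner uses a different hash function.
        return zlib.crc32(key.encode()) % self.num_partitions

    def produce(self, key: str, value: str) -> tuple[int, int]:
        # Records with the same key always land in the same partition,
        # so their relative order is preserved within that partition.
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def consume(self, partition: int, offset: int) -> list:
        # A consumer tracks its own position (offset) per partition
        # and reads forward from there; reading does not delete records.
        return self.partitions[partition][offset:]


if __name__ == "__main__":
    orders = Topic("orders", num_partitions=3)
    for i in range(5):
        partition, _ = orders.produce("customer-42", f"order-{i}")
    print([value for _, value in orders.consume(partition, 0)])
```

Because every record above shares the key `customer-42`, all five land in one partition in production order. This illustrates the per-partition (not per-topic) ordering guarantee that partitioning trades for parallelism.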