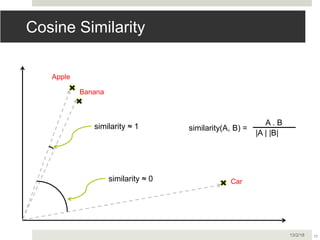

The document discusses word2vec, a tool for natural language processing, focusing on its functionalities and internal mechanisms. It covers various aspects, such as word representation, similarities, and methodologies (CBOW and Skip-gram) for predicting words in context. Additionally, it highlights the importance of word embeddings in tasks like sentiment analysis, categorization, and machine translation.

![Vector Lookup - Python

2113/2/18

array([ 2.3184156e+00, -3.7887740e+00, -1.5767757e+00, 2.0226068e+00,

-6.2421966e-01, -1.9068855e+00, 4.3609171e+00, 5.2191928e-02,

-2.1188414e+00, -2.3100405e+00, 5.8612001e-01, 1.3959754e+00,

-1.3236507e+00, 5.3390409e-05, -3.2201216e-01, 2.6455419e+00,

4.5713658e+00, 4.3059942e-01, -9.1906101e-01, -1.8534625e-02,

-2.7166015e-01, -2.2993209e+00, 7.8024969e-02, -3.2237511e+00,

3.3045592e+00, -1.0334913e+00, 1.4119573e+00, 3.7495871e+00,

2.8075716e+00, 1.0440959e-01, -3.9444833e+00, -2.2009590e-01,

-2.9403548e+00, -1.4462236e+00, 2.4799142e+00, 7.7769762e-01,

5.4318172e-01, -2.6818683e+00, -3.0701482e+00, 4.3109632e+00,

-7.7415538e-01, 1.9786474e+00, 1.1503514e+00, 2.6723063e+00,

-1.5133847e+00, 1.4275682e-01, 3.5057294e-01, 6.3898432e-01,

9.9464995e-01, 1.7852293e+00, 9.5475733e-01, 2.9222999e+00,

-3.5561893e+00, 3.1446383e+00, -4.4377699e+00, -4.3674165e-01,

-1.9084896e-01, 2.8170996e+00, -3.0291042e+00, -1.1227336e+00,

-3.6801448e+00, -1.2687838e+00, 1.7091125e-01, -8.1778312e-01,

2.1771207e+00, -2.6653576e+00, -1.5208750e+00, -1.8047930e-01,

8.8296290e-03, -2.7885602e+00, -1.5657809e+00, -2.3738770e+00,

8.7824135e+00, -9.4801110e-01, 2.1755044e+00, -2.1538272e+00,

-5.9697658e-01, -8.5682195e-01, 2.5586643e+00, -4.3383533e-01,

-1.3269461e+00, 3.8761835e+00, -8.1207365e-02, -1.6046954e+00,

-4.4856617e-01, -3.2454314e+00, 2.5956264e+00, -3.6466745e-01,

7.7527708e-01, -7.4778008e+00, 2.3812482e+00, -4.6497111e+00,

-2.4220943e+00, 1.5012804e-01, -1.5416908e+00, -3.4357128e+00,

3.7048867e+00, -4.2515426e+00, 5.9101069e-01, -1.0800831e+00],

dtype=float32)

]’[’بيانmodel](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-21-320.jpg)

![Vector Most Similar - Python

2213/2/18

model.most_similar([')]'بيان](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-22-320.jpg)

![Vector Most Similar - Python

2313/2/18

model.most_similar([')]'متر](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-23-320.jpg)

![Vector Most Similar - Python

2413/2/18

model.most_similar([')]'كندا](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-24-320.jpg)

![CBOW Architecture

Given a sentence (X) predict

what is the missing word (y).

P(y|X)

P(y=“is” | X = [“The”, “cat”,

“eating”, “dinner”]

V: Vocab Size ~170k

N: Vector embedding size

W: Weights

2813/2/18

…………………………………………

………

……………

Hidden Layer Output Layer

Input Layer

W’NxV

WNxV

WNxV

WNxV

N-dim

C x V-dim

V-dim

x1

x2

xc

yh](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-28-320.jpg)

![SkipGram Architecture

2913/2/18

Given a word (x) predict what

the rest of the sentence (Y).

P(Y|x)

P(Y = [“The”, “is”, “eating”,

“dinner”] | x=“cat”)

V: Vocab Size ~170k

N: Vector embedding size

W: Weights

…………………………………………

………

……………

Hidden Layer

Input Layer

WNxV

N-dim

C x V-dim

V-dim

y1

y2

x

W’NxV

W’NxV

W’NxV

Output Layer

yc

h](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-29-320.jpg)

![Forward Feed

3013/2/18

x = one_hot_representation

W1 = tf.Variable(tf.random_normal([vocab_size, embedding_size]))

b1 = tf.Variable(tf.zeros([embedding_size], dtype=tf.float64))

Y1 = tf.add(tf.matmul(x, W1), b1)

W2 = tf.Variable(tf.random_normal([embedding_size, vocab_size]))

b2 = tf.Variable(tf.zeros([output_length], dtype=tf.float64))

pred = tf.nn.softmax(tf.add(tf.matmul(Y1, W2), b2))

x = OneHot[“dog”]

h = x * W1 W1 is a [V * N] matrix

Y = h * W2 W2 is a [N * V] matrix

P = Softmax(Y)

…………………………………………

………

……………

Hidden Layer

Input Layer

WNxV

N-dim

C x V-dim

V-dim

y1

y2

x

W’NxV

W’NxV

W’NxV

yc

h](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-30-320.jpg)

![Medical Embeddings

V[Heart Failure] – V[Furosemide] ≈ V[Acute Renal Failure] – V[Sodium Cholride]

4513/2/18

Farhan, Wael, et al. "A predictive model for medical events based on contextual embedding of temporal sequences." JMIR medical informatics 4.4 (2016).](https://image.slidesharecdn.com/word2vec4-180214165708/85/JOSA-TechTalks-Word-Embedding-and-Word2Vec-Explained-45-320.jpg)