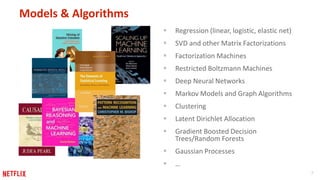

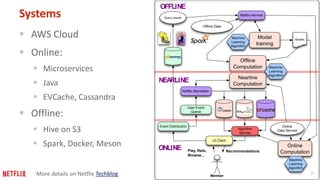

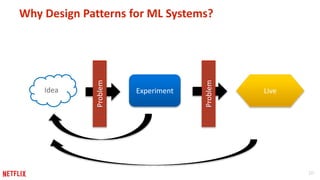

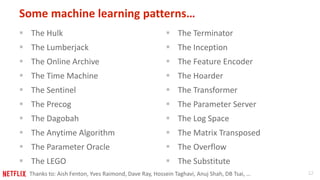

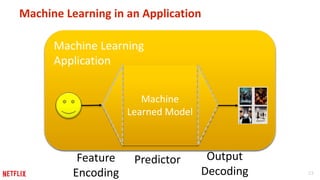

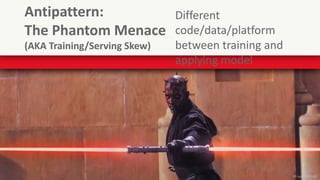

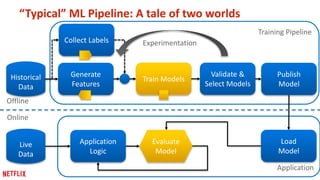

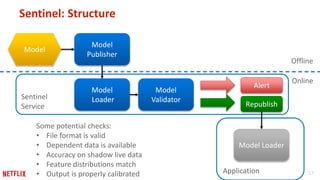

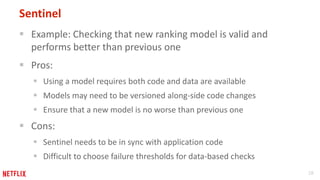

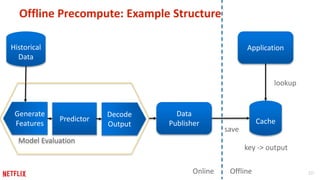

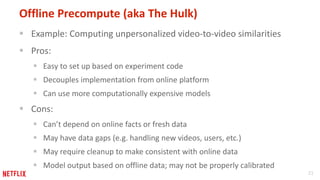

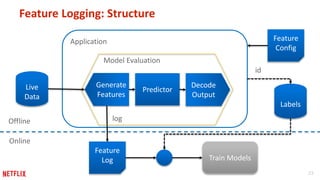

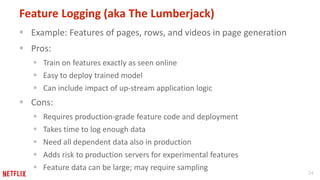

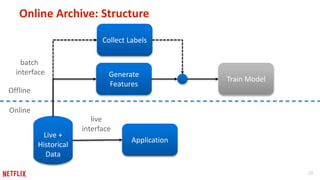

The document presents design patterns for building real-world machine learning systems, in the context of Netflix's operations. It names common solutions to recurring problems, such as the 'Sentinel' for model validation and the 'Hulk' for offline model training and evaluation, and offers them as a menu of reusable abstractions for ML implementations. It emphasizes that a shared set of design patterns makes ML systems easier to discuss and helps streamline machine learning processes.
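To make the 'Sentinel' idea concrete, here is a minimal sketch of a model-validation gate: a candidate model's offline metrics are checked against minimum thresholds before the model is allowed to proceed. The summary above does not describe how the pattern is actually implemented, so everything in the code (`sentinel_check`, `ValidationResult`, the metric names and the thresholds) is a hypothetical illustration, not Netflix's design.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ValidationResult:
    """Outcome of a validation gate (hypothetical type for illustration)."""
    passed: bool
    failures: Dict[str, float]  # metric name -> observed value that failed


def sentinel_check(metrics: Dict[str, float],
                   thresholds: Dict[str, float]) -> ValidationResult:
    """Compare a candidate model's metrics against minimum thresholds.

    Assumption: every metric is "higher is better". A metric that is
    missing from `metrics` is treated as a failure.
    """
    failures = {}
    for name, minimum in thresholds.items():
        observed = metrics.get(name, float("-inf"))
        if observed < minimum:
            failures[name] = observed
    return ValidationResult(passed=not failures, failures=failures)


# Example with made-up numbers: recall is below its threshold,
# so the candidate model is blocked.
candidate = {"auc": 0.80, "recall": 0.40}
minimums = {"auc": 0.75, "recall": 0.50}
result = sentinel_check(candidate, minimums)
```

Keeping the gate as a pure function of metrics and thresholds means the same check can run in any pipeline stage; what counts as a sensible threshold is left to the team that owns the model.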