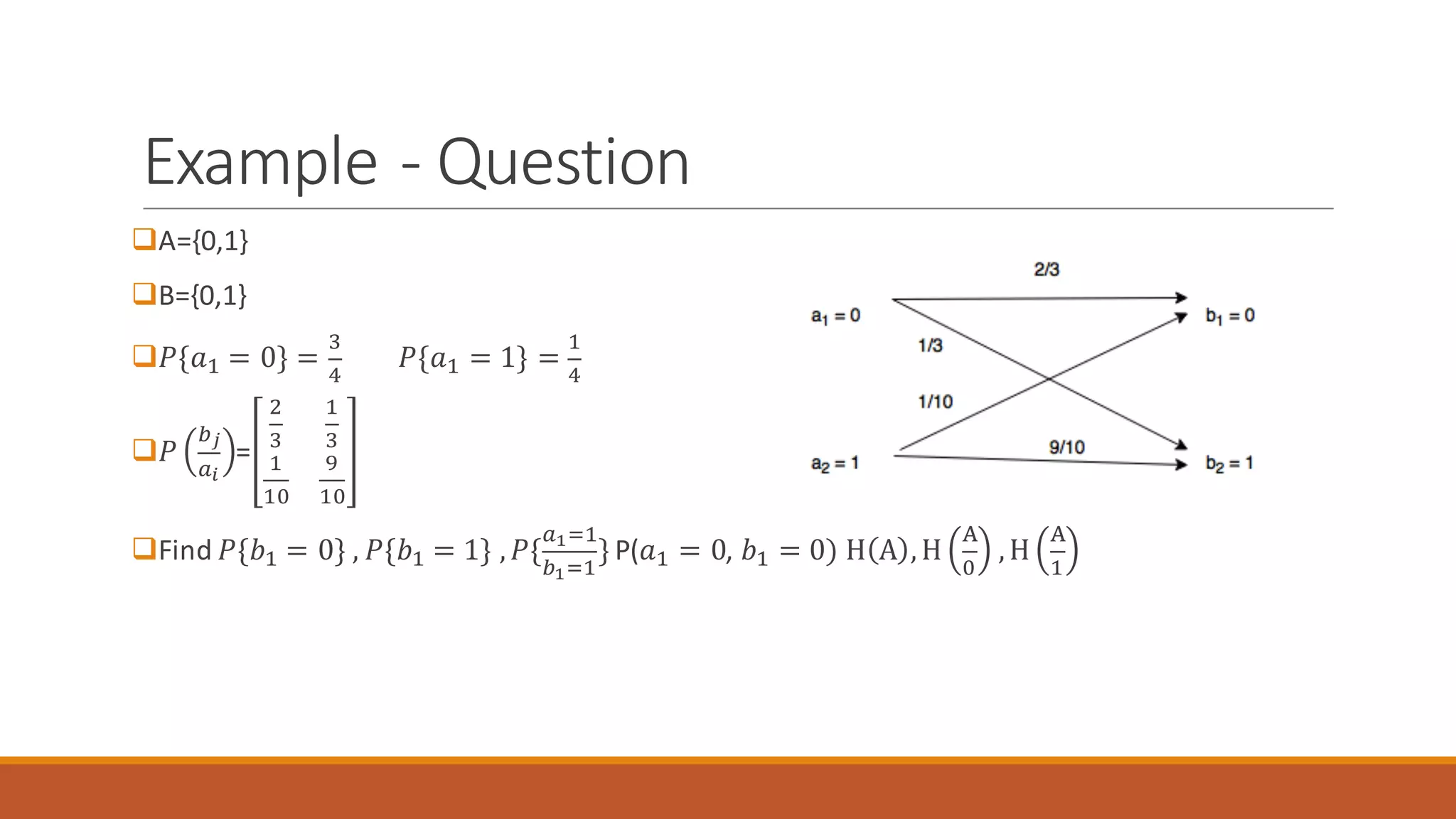

This document provides an introduction to information channels. It defines an information channel by an input alphabet, an output alphabet, and the conditional probabilities relating input and output symbols. It shows how to represent a channel in matrix form and how to compute the associated probabilities. It also covers zero-memory channels, extensions of a channel to blocks of input and output symbols, entropy, and mutual information, using the binary symmetric channel as an example.

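![Binary Symmetric Channel](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-14-2048.jpg)

- Channel matrix form:

$$
P_{BSC} =
\begin{bmatrix}
\bar{p} & p \\
p & \bar{p}
\end{bmatrix}
$$

- Second extension of the binary symmetric channel:

$$
\Pi =
\begin{bmatrix}
\bar{p}^2 & \bar{p}p & p\bar{p} & p^2 \\
\bar{p}p & \bar{p}^2 & p^2 & p\bar{p} \\
p\bar{p} & p^2 & \bar{p}^2 & \bar{p}p \\
p^2 & p\bar{p} & \bar{p}p & \bar{p}^2
\end{bmatrix}
$$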
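The second-extension matrix is the Kronecker product of the single-symbol channel matrix with itself. A minimal sketch in plain Python follows; the helper names `bsc_matrix` and `kronecker` and the value `p = 0.1` are illustrative assumptions, not taken from the slides.

```python
def bsc_matrix(p):
    """Channel matrix of a binary symmetric channel with error probability p."""
    q = 1.0 - p  # p-bar, the probability of correct reception
    return [[q, p], [p, q]]


def kronecker(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [x * y for x in row_a for y in row_b]
        for row_a in a
        for row_b in b
    ]


p = 0.1  # assumed error probability, for illustration only
P = bsc_matrix(p)
P2 = kronecker(P, P)  # rows and columns ordered 00, 01, 10, 11

for row in P2:
    print(["%.2f" % v for v in row])
```

Each row of `P2` still sums to 1, as every channel matrix row must, and the entries follow the same pattern of powers of p and 1 - p as the matrix for the second extension.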

![Generalization of Shannon's First Theorem](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-25-2048.jpg)

- Generalized Shannon's first theorem, where $n$ is the order of the extension and the sums run over the input and output symbols:

$$
\sum_{B} P(b)\, H(A \mid b) \;\le\; \frac{\bar{L}_n}{n} \;<\; \sum_{B} P(b)\, H(A \mid b) + \frac{1}{n}
$$

- For a single source:

$$
H(S) \;\le\; \frac{\bar{L}_n}{n} \;<\; H(S) + \frac{1}{n}
$$

- Valid only for instantaneous codes, which are uniquely decodable.
- Average number of binits needed to encode an input symbol from $A$ when the corresponding output symbol $b$ is already known.
- $\sum_{B} P(b)\, H(A \mid b) = H(A \mid B)$ is the equivocation of $A$ with respect to $B$.
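As a short worked step (standard notation, with $\bar{L}_n$ the average code length for blocks of $n$ symbols), the two bounds differ only by $1/n$, so letting $n$ grow squeezes the average number of binits per input symbol onto the equivocation:

$$
H(A \mid B) \;\le\; \frac{\bar{L}_n}{n} \;<\; H(A \mid B) + \frac{1}{n}
\quad\Longrightarrow\quad
\lim_{n \to \infty} \frac{\bar{L}_n}{n} = H(A \mid B)
$$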

![Channel Equivocation](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-26-2048.jpg)

$$
H(A \mid B) = \sum_{A} \sum_{B} P(a, b) \log \frac{1}{P(a \mid b)}
$$
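The equivocation can be computed directly from the input probabilities and the channel matrix. The sketch below assumes base-2 logarithms (bits) and a matrix layout `channel[i][j] = P(b_j | a_i)`; the function name `equivocation` and the example numbers are illustrative assumptions.

```python
from math import log2


def equivocation(p_a, channel):
    """H(A|B) in bits, for input probabilities p_a and channel[i][j] = P(b_j | a_i)."""
    rows, cols = len(channel), len(channel[0])
    # Joint probabilities P(a_i, b_j) = P(a_i) * P(b_j | a_i)
    joint = [[p_a[i] * channel[i][j] for j in range(cols)] for i in range(rows)]
    # Output probabilities P(b_j) = sum over i of P(a_i, b_j)
    p_b = [sum(joint[i][j] for i in range(rows)) for j in range(cols)]
    h = 0.0
    for i in range(rows):
        for j in range(cols):
            if joint[i][j] > 0:
                # P(a|b) = P(a,b) / P(b), so log 1/P(a|b) = log P(b)/P(a,b)
                h += joint[i][j] * log2(p_b[j] / joint[i][j])
    return h


# Binary symmetric channel with error probability 0.1 and equiprobable inputs
h_bsc = equivocation([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]])
print(h_bsc)  # about 0.469 bits
```

A noiseless channel gives an equivocation of 0, and a binary symmetric channel with error probability 0.5 and equiprobable inputs gives the full 1 bit of input entropy.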

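![Mutual Information of Channel](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-27-2048.jpg)

- Mutual information as the reduction in uncertainty about the input:

$$
I(A; B) = H(A) - H(A \mid B)
$$

On average, observing a single output symbol provides this many bits of information about the input. This quantity is also called the uncertainty resolved.

- Expressed in terms of the joint and marginal probabilities:

$$
I(A; B) = \sum_{A} \sum_{B} P(a, b) \log \frac{P(a, b)}{P(a)\, P(b)}
$$

- For the $n$th extension:

$$
I(A^n; B^n) = n\, I(A; B)
$$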
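The additivity over extensions can be checked numerically for n = 2. This sketch assumes a zero-memory channel whose input symbols are chosen independently, so that both the input probabilities and the channel matrix of the second extension are products of the single-symbol ones. The function names and example numbers are illustrative assumptions.

```python
from math import log2


def mutual_information(p_a, channel):
    """I(A;B) in bits from input probabilities and channel[i][j] = P(b_j | a_i)."""
    rows, cols = len(channel), len(channel[0])
    joint = [[p_a[i] * channel[i][j] for j in range(cols)] for i in range(rows)]
    p_b = [sum(joint[i][j] for i in range(rows)) for j in range(cols)]
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            if joint[i][j] > 0:
                total += joint[i][j] * log2(joint[i][j] / (p_a[i] * p_b[j]))
    return total


def second_extension(p_a, channel):
    """Input probabilities and channel matrix for pairs of independent symbols."""
    p_a2 = [x * y for x in p_a for y in p_a]
    channel2 = [
        [u * v for u in r1 for v in r2]
        for r1 in channel
        for r2 in channel
    ]
    return p_a2, channel2


p_a = [0.3, 0.7]                # assumed input probabilities
bsc = [[0.9, 0.1], [0.1, 0.9]]  # assumed BSC with error probability 0.1

i1 = mutual_information(p_a, bsc)
i2 = mutual_information(*second_extension(p_a, bsc))
print(i1, i2)  # i2 equals 2 * i1 up to rounding
```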

![Joint Entropy](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-29-2048.jpg)

$$
H(A, B) = \sum_{A} \sum_{B} P(a, b) \log \frac{1}{P(a, b)}
$$

$$
H(A, B) = H(A) + H(B) - I(A; B)
$$

$$
H(A, B) = H(A) + H(B \mid A)
$$

$$
H(A, B) = H(B) + H(A \mid B)
$$
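The decomposition into an entropy plus a conditional entropy follows in a few steps from the product rule $P(a, b) = P(a)\, P(b \mid a)$. A short derivation, using only that rule and the definitions of the entropies:

$$
\begin{aligned}
H(A, B) &= \sum_{A} \sum_{B} P(a, b) \log \frac{1}{P(a)\, P(b \mid a)} \\
&= \sum_{A} \sum_{B} P(a, b) \log \frac{1}{P(a)} + \sum_{A} \sum_{B} P(a, b) \log \frac{1}{P(b \mid a)} \\
&= \sum_{A} P(a) \log \frac{1}{P(a)} + H(B \mid A) \\
&= H(A) + H(B \mid A)
\end{aligned}
$$

Exchanging the roles of $A$ and $B$ gives $H(A, B) = H(B) + H(A \mid B)$, and substituting $H(A \mid B) = H(A) - I(A; B)$ into that form gives $H(A, B) = H(A) + H(B) - I(A; B)$.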

![Example: Binary Symmetric Channel](https://image.slidesharecdn.com/itcinformationchannel-180321052032-181113144326/75/Introduction-to-Information-Channel-30-2048.jpg)

Given data

- Channel matrix:

$$
P_{BSC} =
\begin{bmatrix}
\bar{p} & p \\
p & \bar{p}
\end{bmatrix},
\qquad \text{where } \bar{p} = 1 - p
$$

- Probabilities of transmitting the input symbols:

$$
P(a = 1) = \omega, \qquad P(a = 0) = \omega'
$$
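With the given data, the mutual information of the binary symmetric channel can be evaluated as I(A;B) = H(B) - H(B|A). The sketch below assumes that the probability of sending 0 is 1 - ω (the two input probabilities must sum to 1) and uses base-2 logarithms. The function names and example values are illustrative assumptions, not taken from the slides.

```python
from math import log2


def binary_entropy(x):
    """H(x) = x log2(1/x) + (1 - x) log2(1/(1 - x)), with H(0) = H(1) = 0."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * log2(x) - (1.0 - x) * log2(1.0 - x)


def bsc_mutual_information(omega, p):
    """I(A;B) in bits for a BSC with P(a=1) = omega and error probability p."""
    # P(b=1): a 1 received correctly, or a 0 received in error
    p_b1 = omega * (1.0 - p) + (1.0 - omega) * p
    # H(B) is the entropy of the output; H(B|A) = H(p) for every input symbol
    return binary_entropy(p_b1) - binary_entropy(p)


i_bsc = bsc_mutual_information(0.5, 0.1)
print(i_bsc)  # about 0.531 bits
```

For a fixed p, this expression is largest at ω = 0.5, where the output symbols are equiprobable; at p = 0.5 it drops to 0 for every ω, because the output is then independent of the input.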