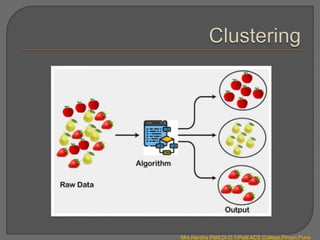

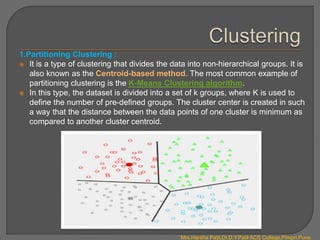

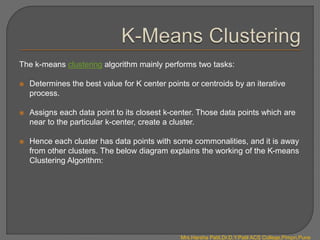

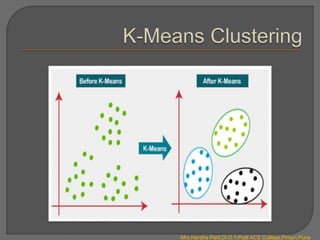

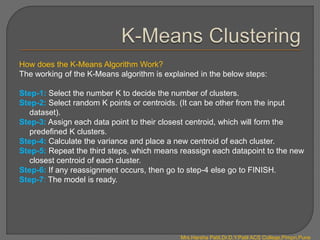

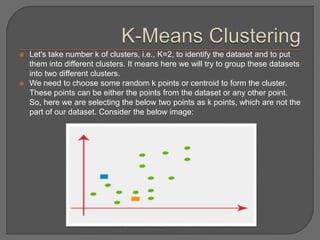

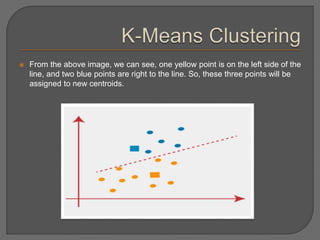

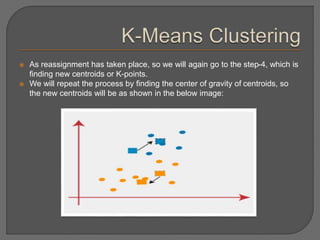

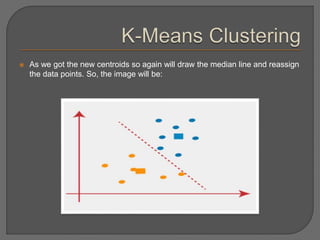

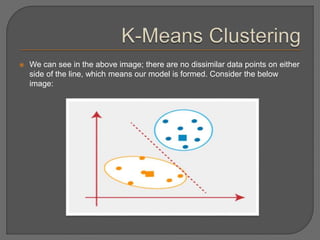

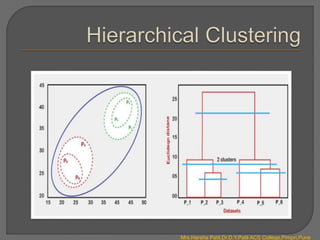

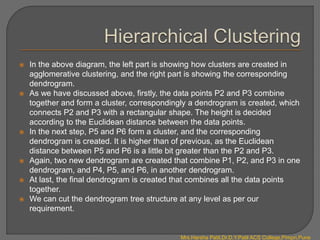

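Clustering is an unsupervised machine learning technique that groups unlabeled data points into clusters so that points within a cluster are more similar to one another than to points in other clusters. It is used for tasks such as market segmentation, image segmentation, and anomaly detection. The k-means algorithm is a common partitioning method: it divides the data into a predefined number of clusters, k, by iteratively assigning each point to its nearest centroid and recomputing each centroid as the mean of its assigned points, which reduces the total squared distance between points and their cluster centroids.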
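
To make the k-means description concrete, here is a minimal pure-Python sketch of the usual assign-then-update loop (Lloyd's algorithm). It is an illustration under stated assumptions, not a reference implementation: the function name `kmeans`, the `max_iters` parameter, the toy `points` data, and the choice to seed the centroids with the first k points are all hypothetical simplifications. Practical implementations typically use a more careful initialisation such as k-means++.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, max_iters=100):
    """Cluster `points` (a list of numeric tuples) into k groups.

    Assumption for this sketch: the first k points serve as the
    initial centroids, which keeps the example deterministic.
    """
    centroids = list(points[:k])
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so we have converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, labels


# Toy data: two visibly separated groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

On this toy data the first three points end up in one cluster and the last three in the other, even though both initial centroids start inside the same group. That outcome is not guaranteed in general: k-means only finds a local minimum, so results can depend on the initial centroids and on the choice of k.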