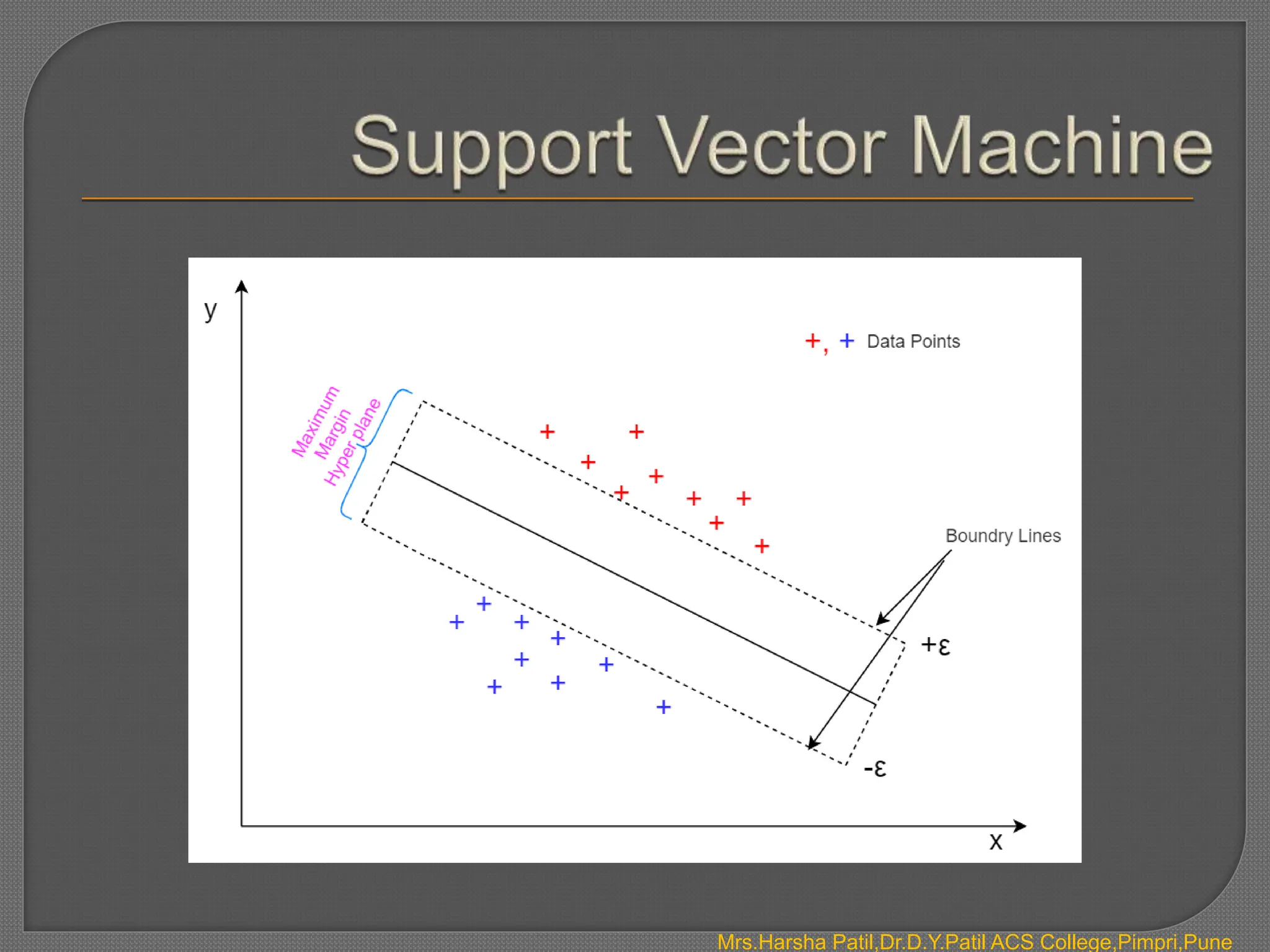

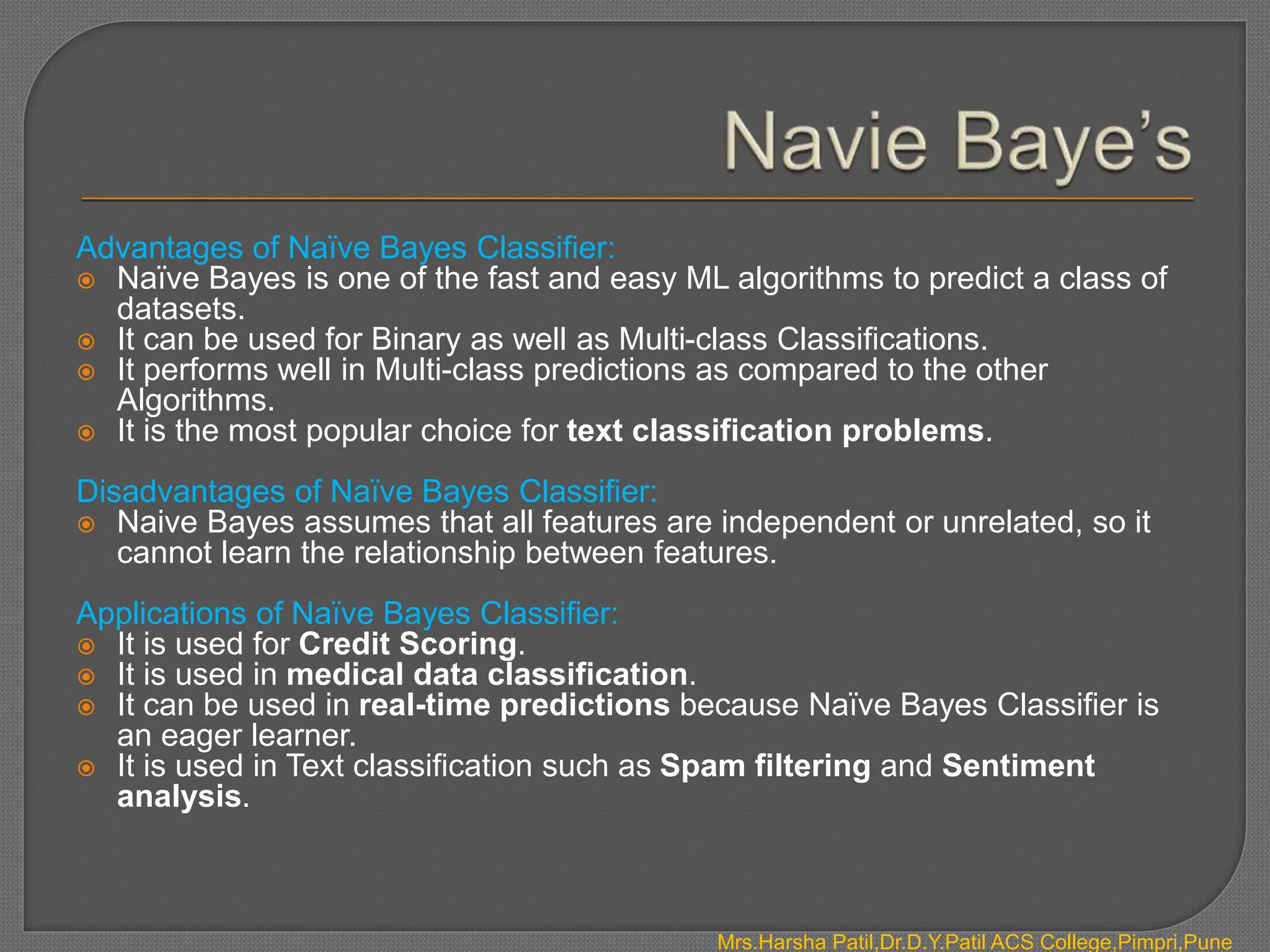

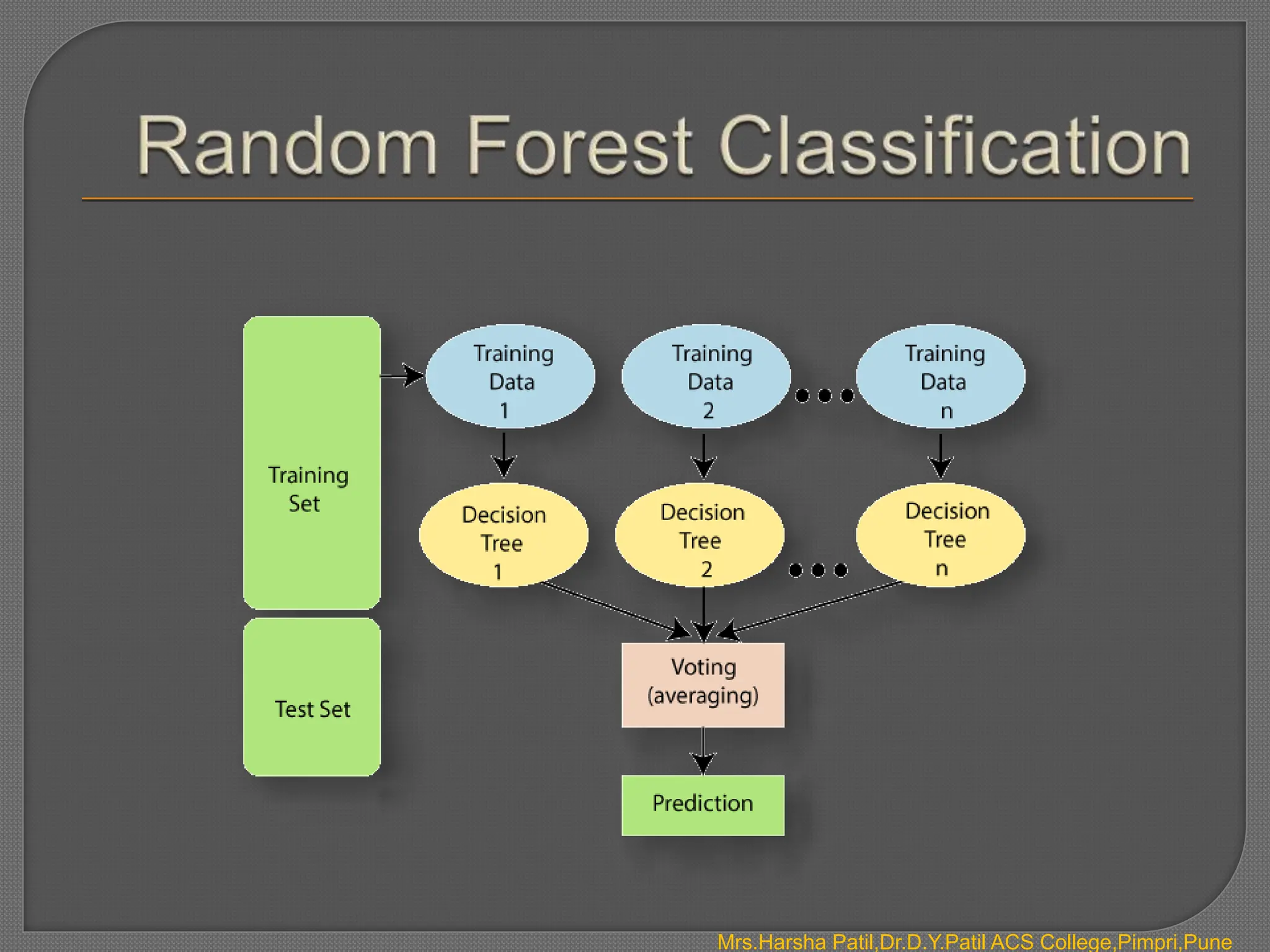

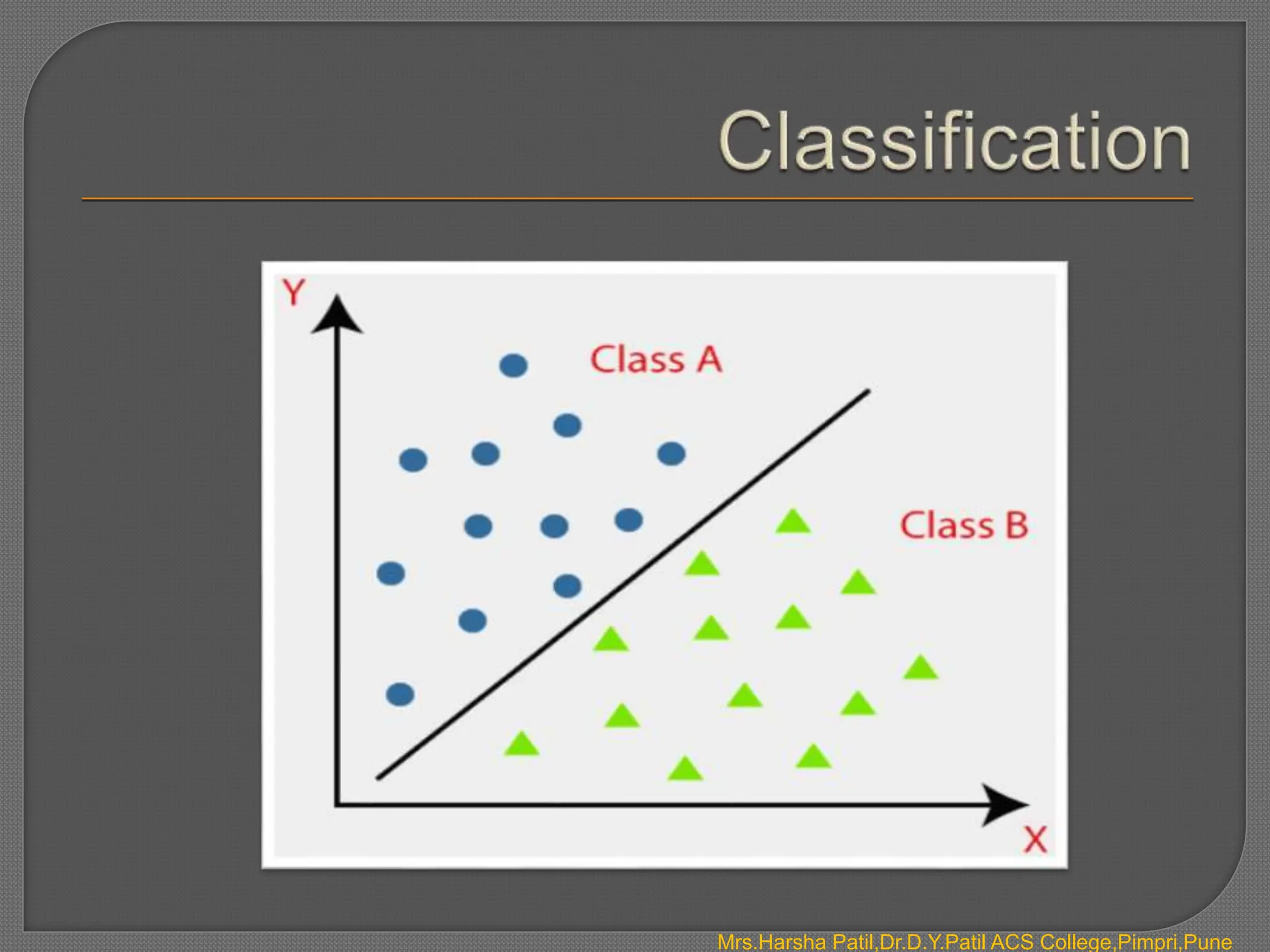

The document discusses classification algorithms, supervised machine learning techniques that learn from labeled training data in order to assign new observations to one of a finite number of classes or categories. It gives examples of classification, including spam detection and categorizing images as cats or dogs. It describes key aspects of classification, such as binary and multi-class classification, and discusses specific algorithms, such as logistic regression and support vector machines (SVM).

![ To avoid this problem, the log-odds function, also called the logit function, is used.

Logistic regression can therefore be expressed in terms of the logit function as:

$$\log\left(\frac{P(x)}{1 - P(x)}\right) = b_0 + b_1 x$$

where the left-hand side is called the logit, or log-odds, function and $P(x)/(1 - P(x))$ is called the odds.

The odds are the ratio of the probability of success, $P(x)$, to the probability of failure, $1 - P(x)$. In logistic regression, therefore, a linear combination of the inputs is mapped to the log-odds of the output being equal to 1.

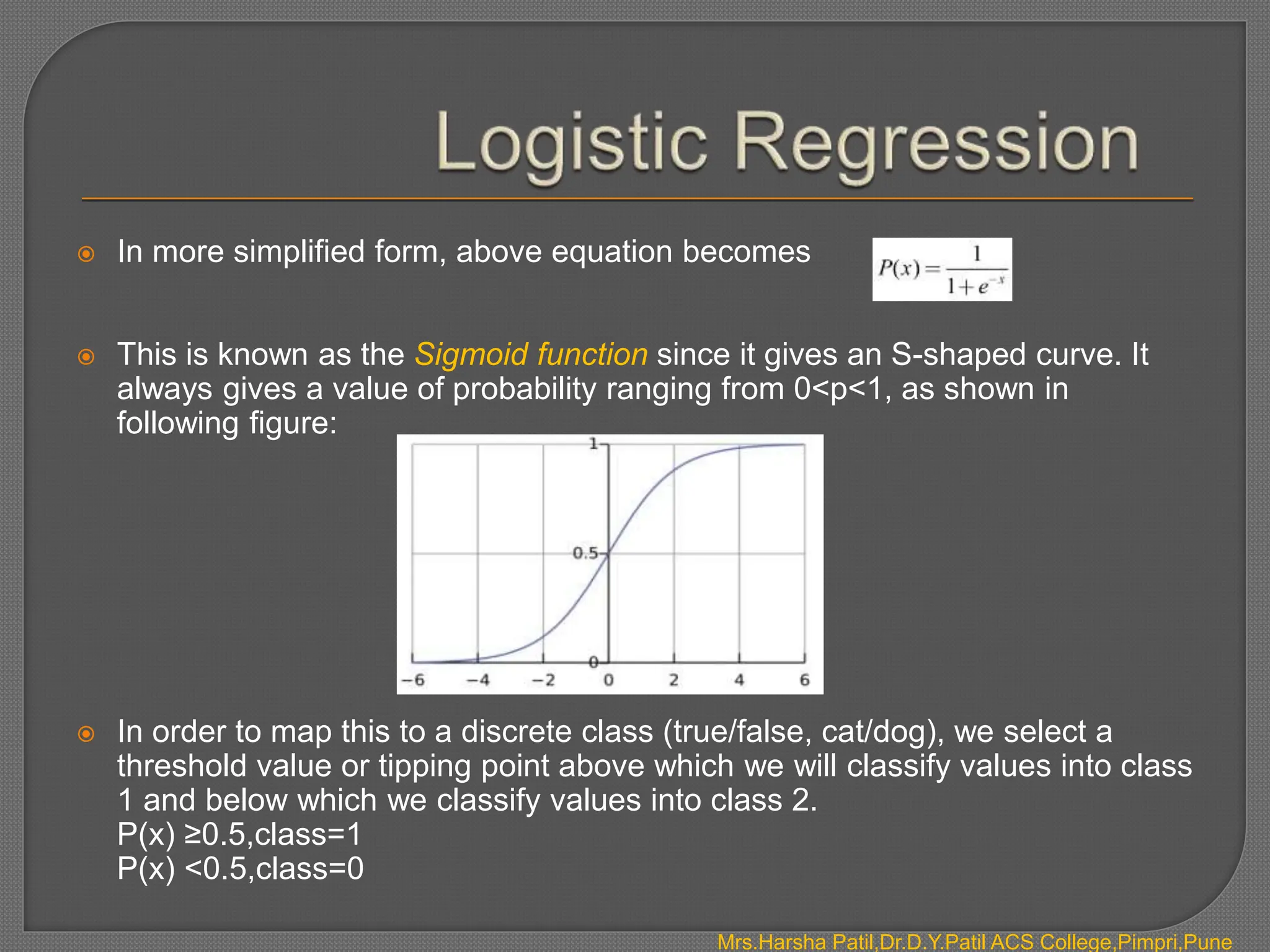

If we take the inverse of the above function, we get:

$$P(x) = \frac{e^{b_0 + b_1 x}}{1 + e^{b_0 + b_1 x}} = \frac{1}{1 + e^{-(b_0 + b_1 x)}}$$

Mrs. Harsha Patil, Dr. D. Y. Patil ACS College, Pimpri, Pune](https://image.slidesharecdn.com/introductiontoclassification-240407070920-ae4b4e54/75/Introduction-to-Classification-pptx-11-2048.jpg)
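The relationship between the odds, the logit, and its inverse on this slide can be checked numerically. The following is a minimal sketch in plain Python. The coefficient values `b0 = -1.0` and `b1 = 2.0` and the helper names `logit`, `sigmoid`, and `predict_proba` are assumptions chosen for illustration and do not come from the slides.

```python
import math


def logit(p):
    """Log-odds of a probability p, valid for 0 < p < 1."""
    return math.log(p / (1.0 - p))


def sigmoid(z):
    """Inverse of the logit: maps a log-odds value z back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Illustrative coefficients only (assumed, not taken from the slides).
b0, b1 = -1.0, 2.0


def predict_proba(x):
    """P(x) for one input x under the model logit(P(x)) = b0 + b1 * x."""
    return sigmoid(b0 + b1 * x)


x = 0.5
p = predict_proba(x)      # b0 + b1 * x is 0 here, so p is 0.5
odds = p / (1.0 - p)      # success and failure equally likely, so odds are 1

print(f"P(x) = {p}, odds = {odds}, log-odds = {logit(p)}")
```

Applying `logit` to the output of `predict_proba` recovers the linear term `b0 + b1 * x`, which is the sense in which the two functions are inverses of each other.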