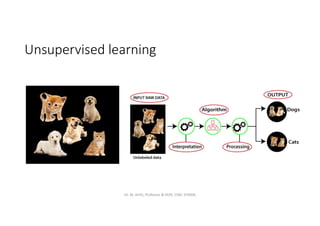

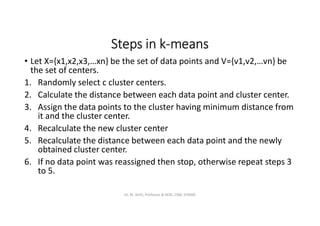

This document introduces unsupervised learning and clustering algorithms. It explains how unsupervised learning finds patterns in unlabeled data and presents clustering as a common unsupervised technique that groups similar data points together. The clustering algorithms covered include k-means, k-medoids, hierarchical clustering, density-based clustering, and grid-based clustering. The document also compares the two partitioning algorithms, k-means and k-medoids.
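The central difference between the two partitioning algorithms can be illustrated with a small sketch. It makes several assumptions: Euclidean distance, fixed initial centers supplied by the caller, and the simple alternating ("Voronoi iteration") form of k-medoids rather than the PAM swap procedure. The function names `k_means` and `k_medoids` are hypothetical illustrations and do not come from the document. In k-means each center is the mean of its cluster, while in k-medoids each center is always an actual data point.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]  # a point of any dimension, e.g. (x,) or (x, y)


def _nearest(p: Point, centers: Sequence[Point]) -> int:
    """Index of the center closest to p (Euclidean distance; first wins on ties)."""
    return min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))


def _assign(points: Sequence[Point], centers: Sequence[Point]) -> List[List[Point]]:
    """Group every point with its nearest center."""
    clusters: List[List[Point]] = [[] for _ in centers]
    for p in points:
        clusters[_nearest(p, centers)].append(p)
    return clusters


def k_means(points: Sequence[Point], init: Sequence[Point], max_iter: int = 100) -> List[Point]:
    """Lloyd-style k-means: each center moves to the coordinate-wise mean of its cluster."""
    centers = list(init)
    for _ in range(max_iter):
        clusters = _assign(points, centers)
        new_centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[i]
            for i, cl in enumerate(clusters)  # an empty cluster keeps its old center
        ]
        if new_centers == centers:  # assignments have stabilized
            break
        centers = new_centers
    return centers


def k_medoids(points: Sequence[Point], init: Sequence[Point], max_iter: int = 100) -> List[Point]:
    """Alternating k-medoids: each center becomes the cluster member with the
    smallest total distance to the other members, so it is always a data point."""
    medoids = list(init)
    for _ in range(max_iter):
        clusters = _assign(points, medoids)
        new_medoids = [
            min(cl, key=lambda m: sum(math.dist(m, q) for q in cl)) if cl else medoids[i]
            for i, cl in enumerate(clusters)  # an empty cluster keeps its old medoid
        ]
        if new_medoids == medoids:  # no medoid changed
            break
        medoids = new_medoids
    return medoids


if __name__ == "__main__":
    # Two groups of 1-D points (written as 1-tuples) plus one outlier at 100.
    data = [(1.0,), (2.0,), (3.0,), (10.0,), (11.0,), (12.0,), (13.0,), (100.0,)]
    init = [data[0], data[3]]
    print(k_means(data, init))    # the outlier captures a center of its own
    print(k_medoids(data, init))  # prints [(2.0,), (12.0,)]
```

With these starting centers, k-means ends with one center at roughly 7.43, where the two real groups have merged, and a second center on the outlier at 100. k-medoids keeps the two groups apart, with medoids 2 and 12. This is one concrete way to see why k-medoids is usually described as less sensitive to outliers. Both results depend on the chosen initial centers.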