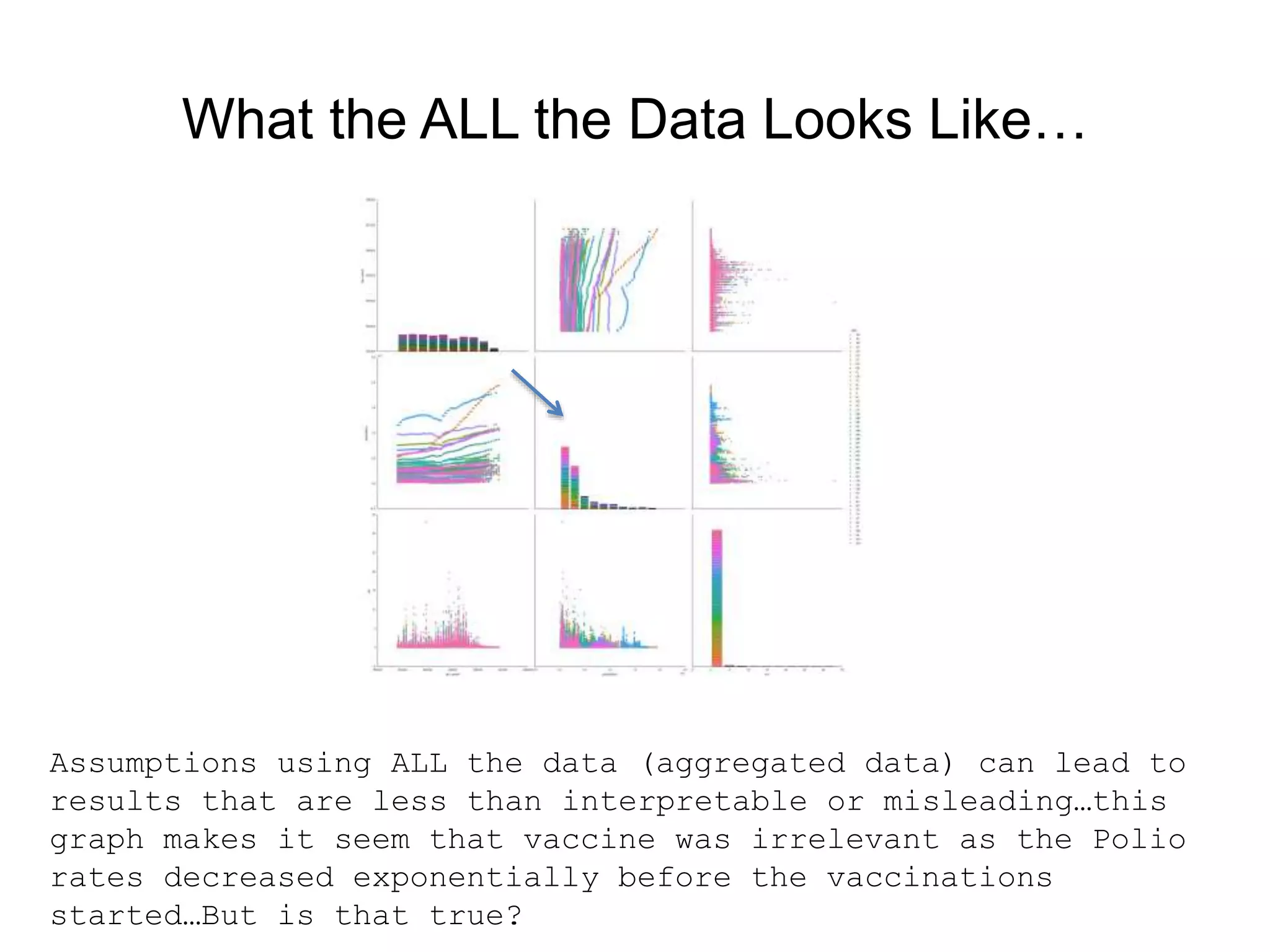

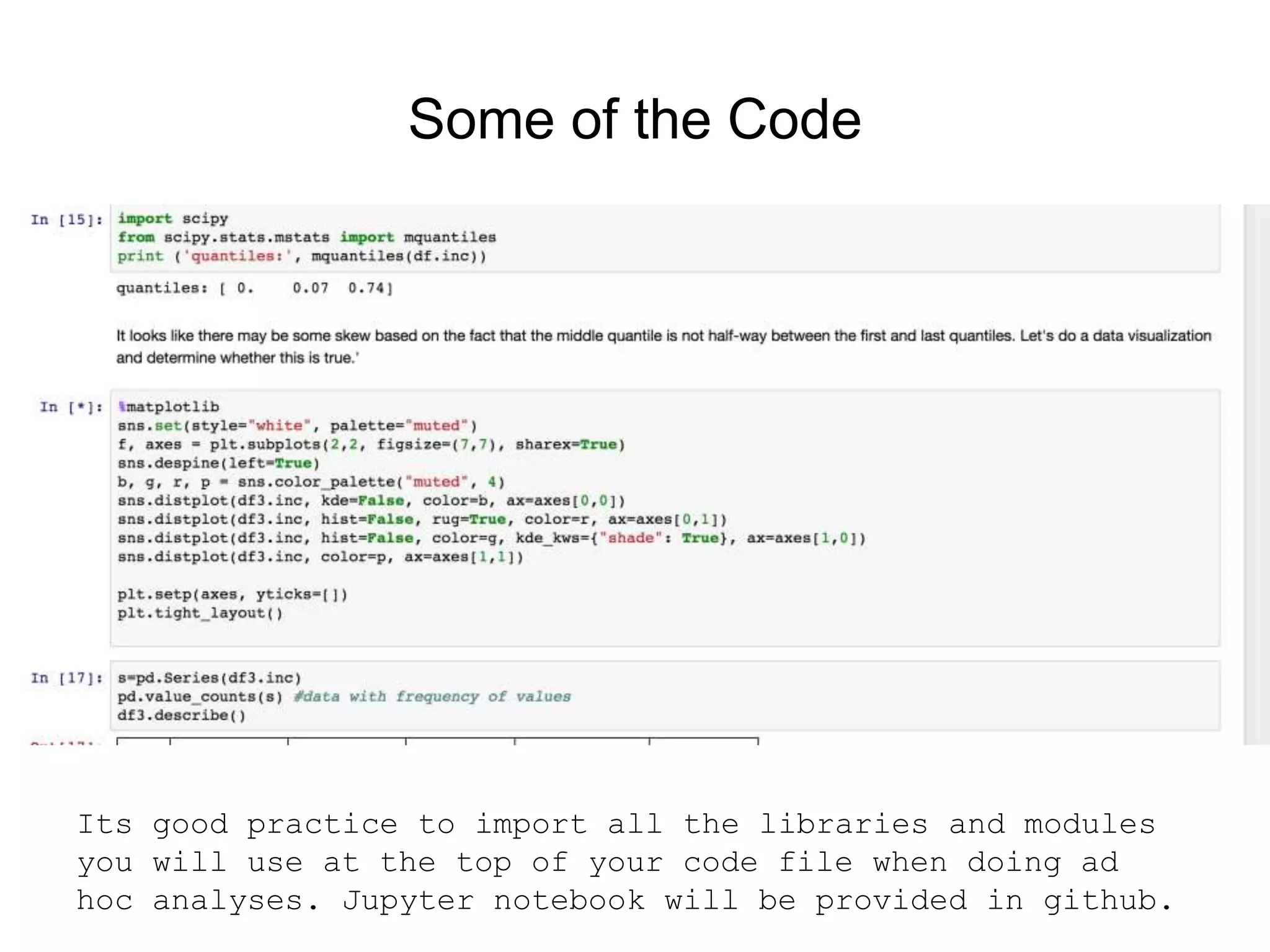

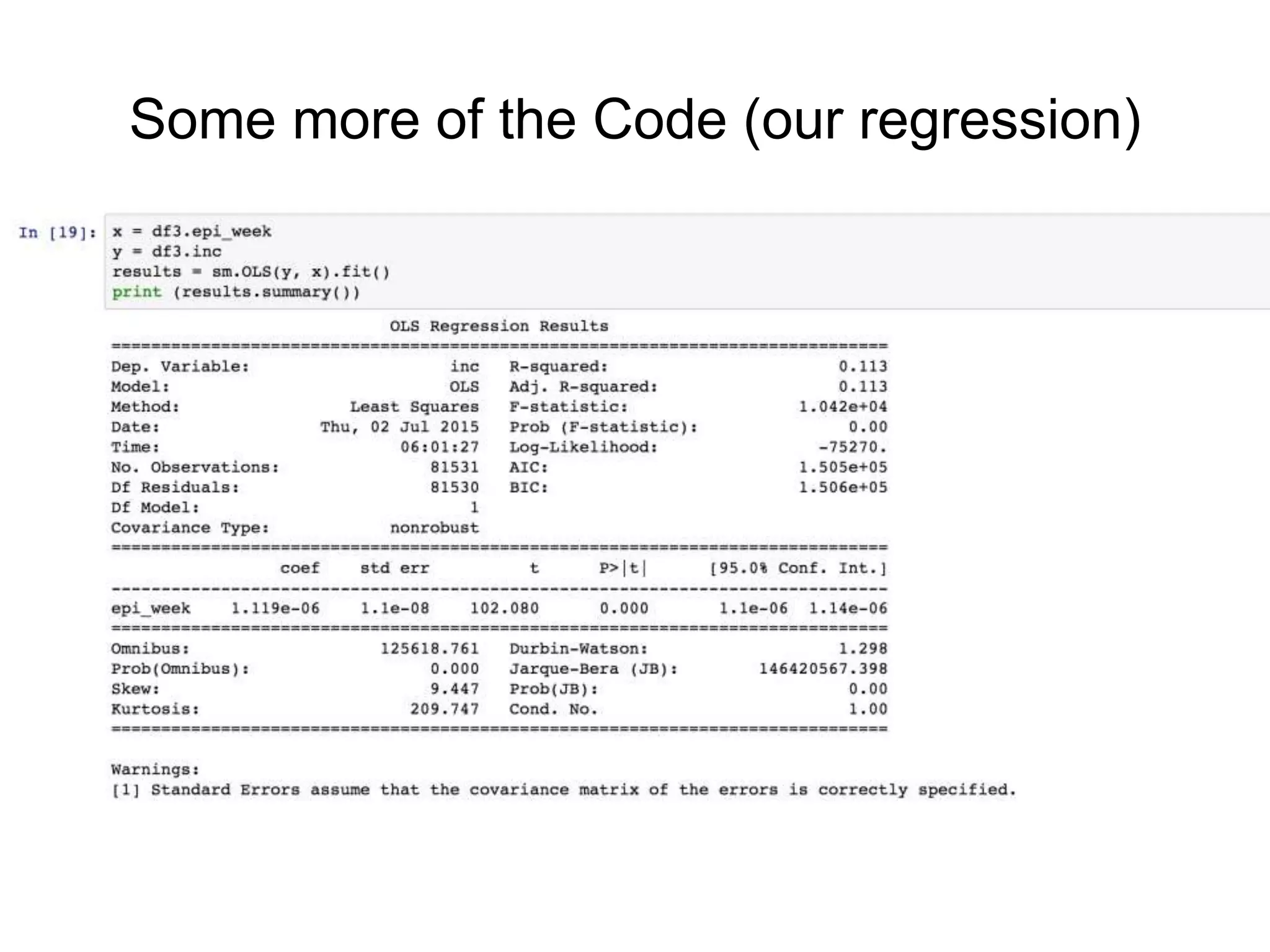

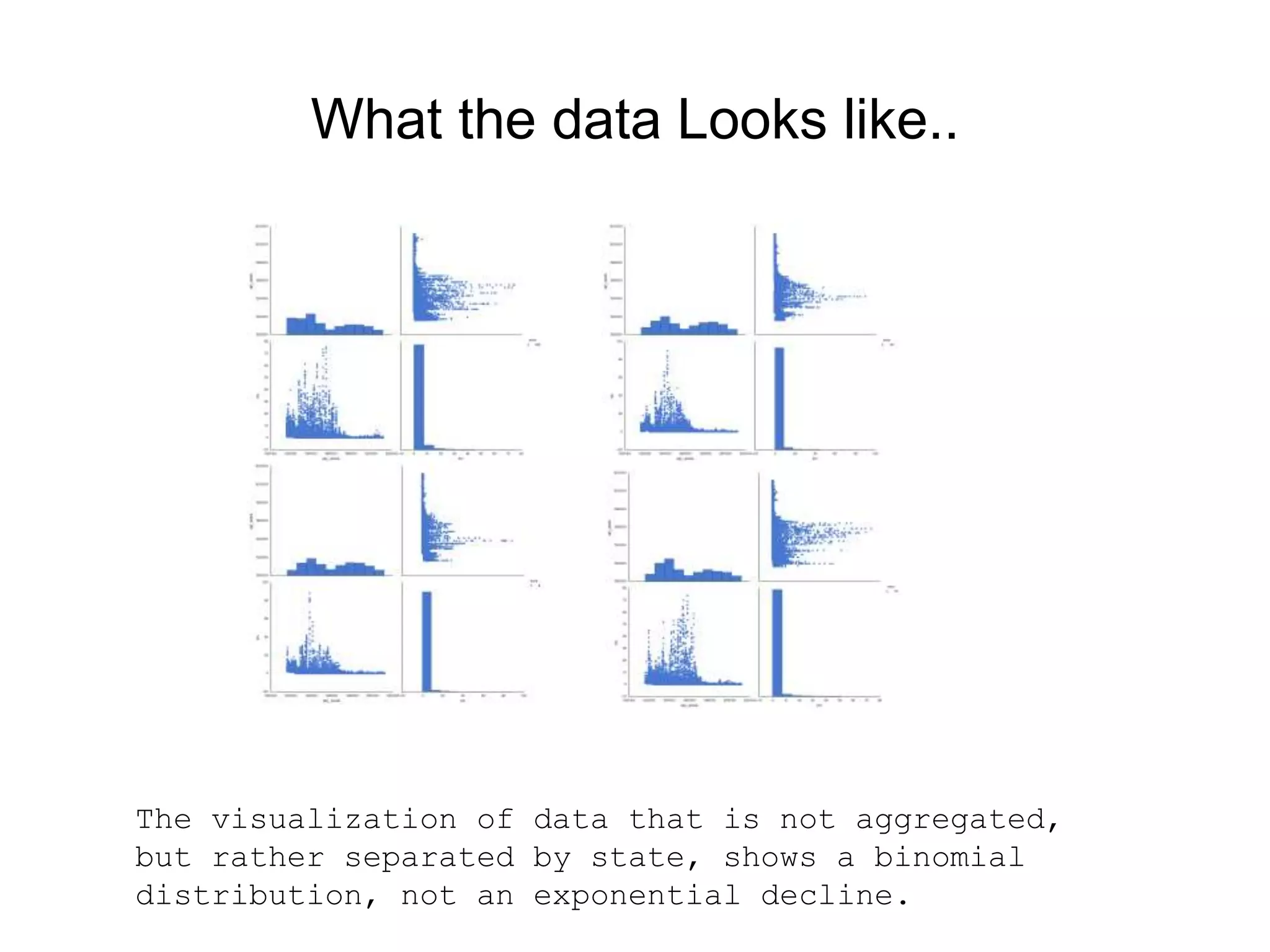

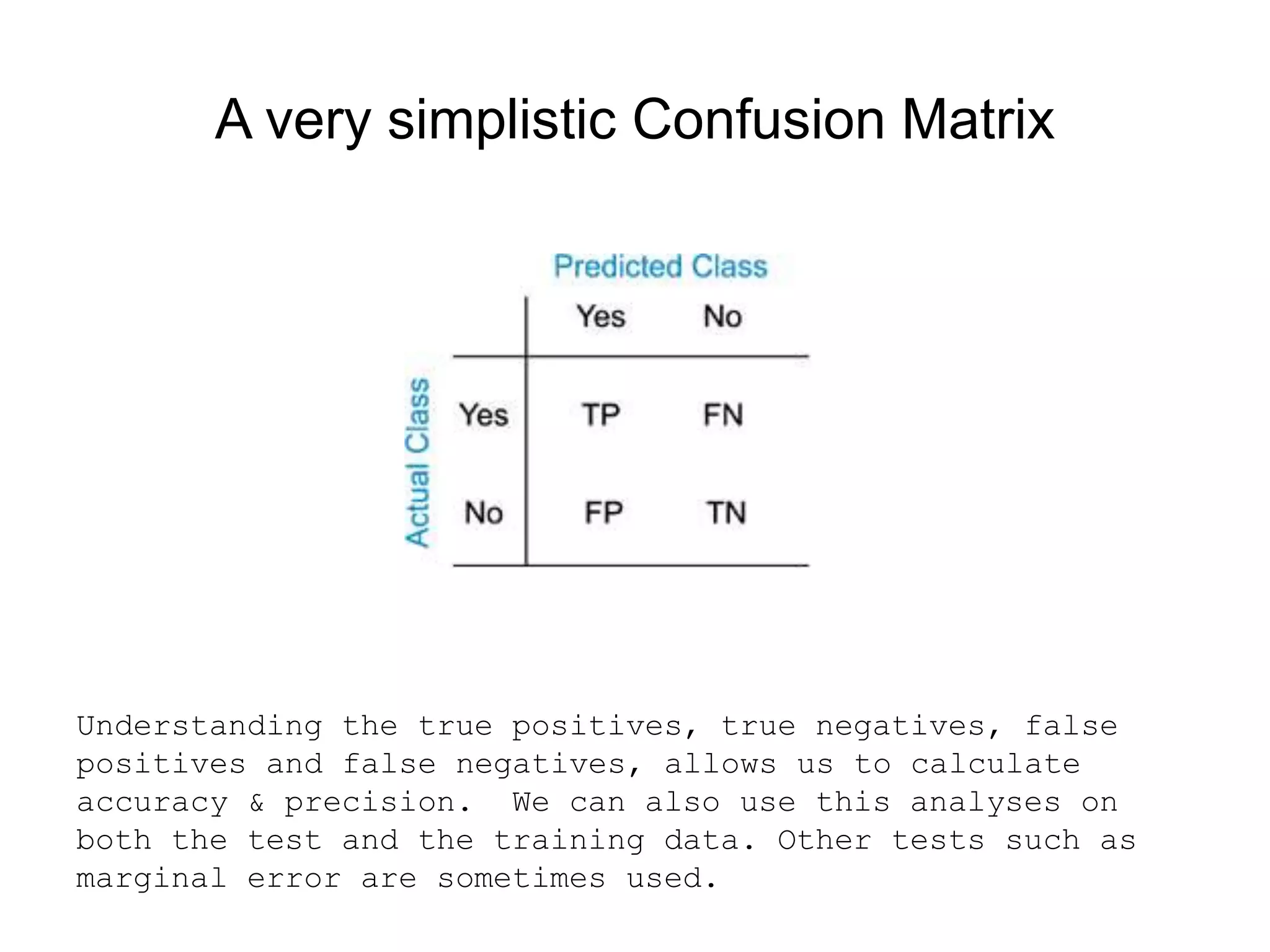

This document introduces Scikit-Learn and StatsModels for machine learning and statistical analysis in Python. It outlines popular algorithms in each library, the history and development of the two projects, and how machine learning and statistics relate. As a use case, it analyzes public health data on polio rates in the US from 1916 to 1979 to evaluate the impact of vaccination. The analysis finds that polio rates declined at different speeds from state to state, and that an initial dip was followed by later rises before the disease was eventually wiped out.