The document provides an overview of Hadoop and its interaction with the Hadoop Distributed File System (HDFS), highlighting its need in managing big data through commodity hardware and data replication. It outlines the components of HDFS such as Namenode and Datanodes, the functionalities of Jobtracker and Tasktrackers, and differences between Hadoop 1.0 and 2.0. Additionally, it covers various HDFS commands for file management, directory operations, and administrative tasks.

![→ Hadoop ←

❏ Open source software

- a Java framework

- initial release: December 10, 2011

❏ It provides both,

❏ Storage → [HDFS]

❏ Processing → [MapReduce]

❏ HDFS: Hadoop Distributed File System

3](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-3-320.jpg)

![→ Before we start ←

❏ Command:

■ hdfs

❏ Usage:

■ hdfs [--config confdir] COMMAND

❏ Example:

■ hdfs dfs

■ hdfs dfsadmin

■ hdfs fsck

■ hdfs namenode

■ hdfs datanode

21](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-21-320.jpg)

![→ In general Syntax for `dfs` commands ←

hdfs

dfs

-<COMMAND>

-[OPTIONS]

<PARAMETERS>

e.g.

hdfs dfs -ls -R /user/USERNAME/demo/data/

23](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-23-320.jpg)

![0. Do It yourself

❏ Syntax:

■ hdfs dfs -help [COMMAND … ]

■ hdfs dfs -usage [COMMAND … ]

❏ Example:

■ hdfs dfs -help cat

■ hdfs dfs -usage cat

24](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-24-320.jpg)

![1. List the file/directory

❏ Syntax:

■ hdfs dfs -ls [-d] [-h] [-R] <hdfs-dir-path>

❏ Example:

■ hdfs dfs -ls

■ hdfs dfs -ls /

■ hdfs dfs -ls /user/USERNAME/demo/list-dir-example

■ hdfs dfs -ls -R /user/USERNAME/demo/list-dir-example

25](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-25-320.jpg)

![2. Creating a directory

❏ Syntax:

■ hdfs dfs -mkdir [-p] <hdfs-dir-path>

❏ Example:

■ hdfs dfs -mkdir /user/USERNAME/demo/create-dir-example

■ hdfs dfs -mkdir -p /user/USERNAME/demo/create-dir-

example/dir1/dir2/dir3

26](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-26-320.jpg)

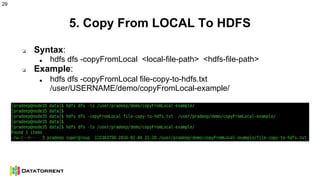

![3. Create a file on local & put it on HDFS

❏ Syntax:

■ vi filename.txt

■ hdfs dfs -put [options] <local-file-path> <hdfs-dir-path>

❏ Example:

■ vi file-copy-to-hdfs.txt

■ hdfs dfs -put file-copy-to-hdfs.txt /user/USERNAME/demo/put-

example/

27](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-27-320.jpg)

![4. Get a file from HDFS to local

❏ Syntax:

■ hdfs dfs -get <hdfs-file-path> [local-dir-path]

❏ Example:

■ hdfs dfs -get /user/USERNAME/demo/get-example/file-copy-from-

hdfs.txt ~/demo/

28](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-28-320.jpg)

![10. Merge files on HDFS

❏ Syntax:

■ hdfs dfs -getmerge [-nl] <hdfs-dir-path> <local-file-path>

❏ Examples:

■ hdfs dfs -getmerge -nl /user/USERNAME/demo/merge-example/

/path/to/all-files.txt

34](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-34-320.jpg)

![12. Remove files/dirs from HDFS

❏ Syntax:

■ hdfs dfs -rm [options] <hdfs-file-path>

❏ Examples:

■ hdfs dfs -rm /user/USERNAME/demo/remove-example/remove-file.txt

■ hdfs dfs -rm -R /user/USERNAME/demo/remove-example/

■ hdfs dfs -rm -R -skipTrash /user/USERNAME/demo/remove-example/

36](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-36-320.jpg)

![13. Change file/dir properties

❏ Syntax:

■ hdfs dfs -chgrp [-R] <NewGroupName> <hdfs-file-path>

■ hdfs dfs -chmod [-R] <permissions> <hdfs-file-path>

■ hdfs dfs -chown [-R] <NewOwnerName> <hdfs-file-path>

❏ Examples:

■ hdfs dfs -chmod -R 777 /user/USERNAME/demo/data/file-change-

properties.txt

37](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-37-320.jpg)

![16. File test operations

❏ Syntax:

■ hdfs dfs -test -[defsz] <hdfs-file-path>

❏ Examples:

■ hdfs dfs -test -e /user/USERNAME/demo/data/file.txt

❏ echo $?

40](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-40-320.jpg)

![17. Get FileSystem Statistics

❏ Syntax:

■ hdfs dfs -stat [format] <hdfs-file-path>

❏ Format Options:

■ %b - file size in blocks, %g - group name of owner

■ %n - filename %o - block size

■ %r - replication %u - user name of owner

■ %y - modification date

41](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-41-320.jpg)

![18. Get File/Dir Counts

❏ Syntax:

■ hdfs dfs -count [-q] [-h] [-v] <hdfs-file-path>

❏ Example:

■ hdfs dfs -count -v /user/USERNAME/demo/

42](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-42-320.jpg)

![22. HDFS Admin Commands: fsck

❏ Syntax:

❏ hdfs fsck <hdfs-file-path>

❏ Options:

[-list-corruptfileblocks |

[-move | -delete | -openforwrite]

[-files [-blocks [-locations | -racks]]]

[-includeSnapshots]

47](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-47-320.jpg)

![23. HDFS Admin Commands: dfsadmin

❏ Syntax:

■ hdfs dfsadmin

❏ Options:

[-report [-live] [-dead] [-decommissioning]]

[-safemode enter | leave | get | wait]

[-refreshNodes]

[-refresh <host:ipc_port> <key> [arg1..argn]]

[-shutdownDatanode <datanode:port> [upgrade]]

[-getDatanodeInfo <datanode_host:ipc_port>]

[-help [cmd]]

❏ Examples:

■ hdfs dfsadmin -report -live

49](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-49-320.jpg)

![24. HDFS Admin Commands: namenode

❏ Syntax:

■ hdfs namenode

❏ Options:

[-checkpoint] |

[-format [-clusterid cid ] [-force] [-nonInteractive] ] |

[-upgrade [-clusterid cid] ] |

[-rollback] |

[-recover [-force] ] |

[-metadataVersion ]

❏ Examples:

■ hdfs namenode -help

51](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-51-320.jpg)

![25. HDFS Admin Commands: getconf

❏ Syntax:

■ hdfs getconf [-options]

❏ Options:

[ -namenodes ] [ -secondaryNameNodes ]

[ -backupNodes ] [ -includeFile ]

[ -excludeFile ] [ -nnRpcAddresses ]

[ -confKey [key] ]

52](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-52-320.jpg)

![Again,,, THE most important command !!

❏ Syntax:

■ hdfs dfs -help [options]

■ hdfs dfs -usage [options]

❏ Examples:

■ hdfs dfs -help help

■ hdfs dfs -usage usage

53](https://image.slidesharecdn.com/interacting-with-hdfs-160215125015/85/Interacting-with-hdfs-53-320.jpg)