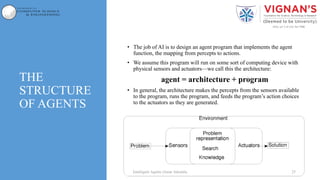

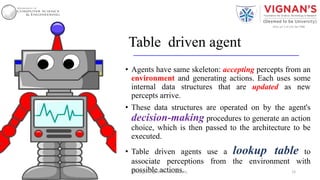

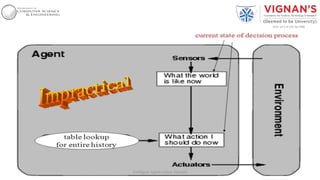

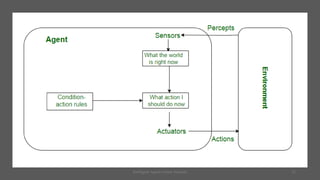

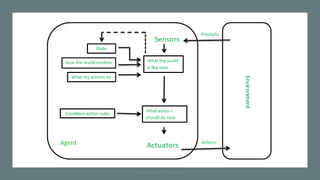

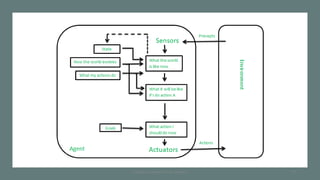

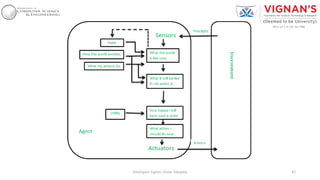

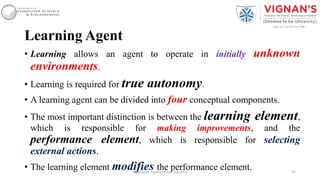

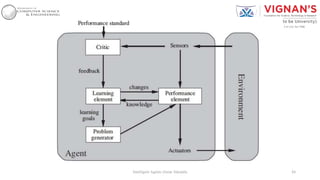

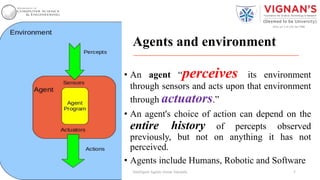

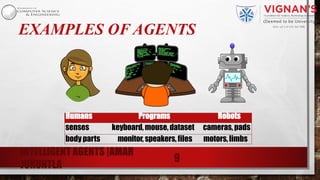

The document provides an overview of intelligent agents, detailing their definitions, types, and architectures. It explains key concepts such as perception, action, autonomy, and rationality in the context of agents operating within various environments. Different types of agents, including simple reflex, model-based, goal-based, and utility-based agents, are discussed, along with the importance of learning and adaptability.

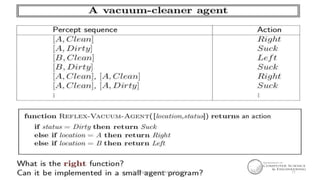

Example: Vacuum-Cleaner

• Percepts: location and contents, e.g., [A, Dirty]

• Actions: Left, Right, Suck, NoOp

![Intelligent Agents, slide 10 | Amar Jukuntla](https://image.slidesharecdn.com/agents2-190120183243/85/Intelligent-Agents-10-320.jpg)
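
The vacuum-cleaner example can be sketched as a simple reflex agent: a function that maps the current percept (location, contents) directly to one of the four listed actions. The code below is a minimal illustration, not the slide author's implementation. It assumes a two-square world with locations `"A"` and `"B"` and statuses `"Dirty"` / `"Clean"`; the names `reflex_vacuum_agent` and `run` and the rule order are hypothetical choices made for this sketch.

```python
from typing import Dict, List, Tuple

# A percept is (location, contents), e.g. ("A", "Dirty").
Percept = Tuple[str, str]


def reflex_vacuum_agent(percept: Percept) -> str:
    """Pick an action from the current percept only (no memory, no model)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    # Assumption: an unrecognized location means the agent does nothing.
    return "NoOp"


def run(world: Dict[str, str], location: str, steps: int) -> List[str]:
    """Simulate the assumed two-square world; returns the actions taken."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


if __name__ == "__main__":
    world = {"A": "Dirty", "B": "Dirty"}
    print(run(world, "A", 4))
    print(world)
```

Because the agent looks only at the current percept, it keeps moving back and forth even after both squares are clean. Avoiding that would require internal state, which is where model-based agents come in.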