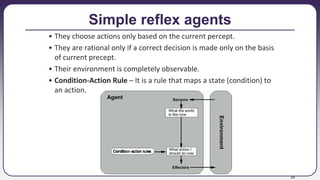

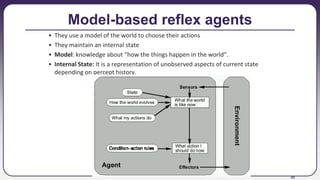

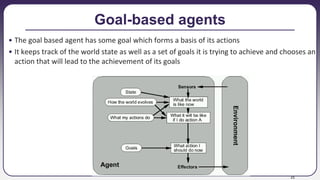

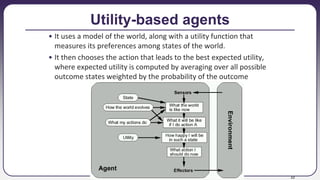

The document discusses different types of intelligent agents and their characteristics. It defines an agent as anything that perceives its environment through sensors and acts upon that environment through effectors. Examples include human agents, robotic agents, and software agents. The document uses a windshield-wiper agent as an example and introduces agent terminology such as goals, percepts, sensors, effectors, and actions. Later sections discuss rational agents, which are designed to choose, at each step, the action expected to maximize their performance measure given their percept sequence so far and their built-in knowledge. The agent types introduced include simple reflex agents, model-based reflex agents, goal-based agents, and utility-based agents. The document also covers the properties of task environments and the structure of agents.
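To make the distinction between agent types concrete, here is a minimal Python sketch built on the windshield-wiper example. It is an illustration under stated assumptions, not the document's own design: the `Percept` type, the 0.0 to 1.0 rain-intensity scale, the `off`/`slow`/`fast` actions, the rule thresholds, the smoothing window, and the `wiper_utility` weights are all hypothetical. The sketch shows three of the listed types: a simple reflex agent that reacts only to the current percept, a model-based reflex agent that keeps internal state derived from the percept sequence, and a utility-based agent that picks the action with the highest utility.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical percept: a rain-sensor reading on an assumed 0.0 (dry) to 1.0 (heavy rain) scale.
@dataclass(frozen=True)
class Percept:
    rain_intensity: float

# Hypothetical actions available to the wiper's effector (the motor).
OFF, SLOW, FAST = "off", "slow", "fast"

def simple_reflex_wiper(percept: Percept) -> str:
    """Simple reflex agent: condition-action rules on the current percept only."""
    if percept.rain_intensity < 0.1:   # assumed threshold
        return OFF
    if percept.rain_intensity < 0.6:   # assumed threshold
        return SLOW
    return FAST

@dataclass
class ModelBasedWiper:
    """Model-based reflex agent: keeps internal state built from the percept sequence."""
    history: List[float] = field(default_factory=list)
    window: int = 3  # assumed number of recent readings to average

    def __call__(self, percept: Percept) -> str:
        self.history.append(percept.rain_intensity)
        recent = self.history[-self.window:]
        # Averaging recent readings keeps one noisy reading from toggling the wiper.
        smoothed = sum(recent) / len(recent)
        return simple_reflex_wiper(Percept(smoothed))

def wiper_utility(action: str, percept: Percept) -> float:
    """Hypothetical utility: visibility kept minus motor wear (weights are assumptions)."""
    speed = {OFF: 0.0, SLOW: 0.5, FAST: 1.0}[action]
    visibility = 1.0 - max(percept.rain_intensity - speed, 0.0)
    wear = 0.2 * speed
    return visibility - wear

def utility_based_wiper(percept: Percept) -> str:
    """Utility-based agent: choose the action whose utility is highest for this percept."""
    return max((OFF, SLOW, FAST), key=lambda a: wiper_utility(a, percept))

def run(agent: Callable[[Percept], str], readings: List[float]) -> List[str]:
    """Feed each sensor reading to the agent in order and collect the chosen actions."""
    return [agent(Percept(r)) for r in readings]

if __name__ == "__main__":
    readings = [0.0, 0.8, 0.0, 0.7]
    print(run(simple_reflex_wiper, readings))  # ['off', 'fast', 'off', 'fast']
    print(run(ModelBasedWiper(), readings))    # ['off', 'slow', 'slow', 'slow']
    print(run(utility_based_wiper, readings))  # ['off', 'fast', 'off', 'fast']
```

On the same alternating readings, the simple reflex agent flips between `off` and `fast` because it sees only the latest percept. The model-based agent settles on `slow` because its internal state summarizes the recent percept sequence. The utility-based agent makes its choice by weighing a trade-off between visibility and wear rather than following fixed rules. A goal-based agent would instead search for actions that reach an explicit goal state. It is omitted here to keep the sketch short.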