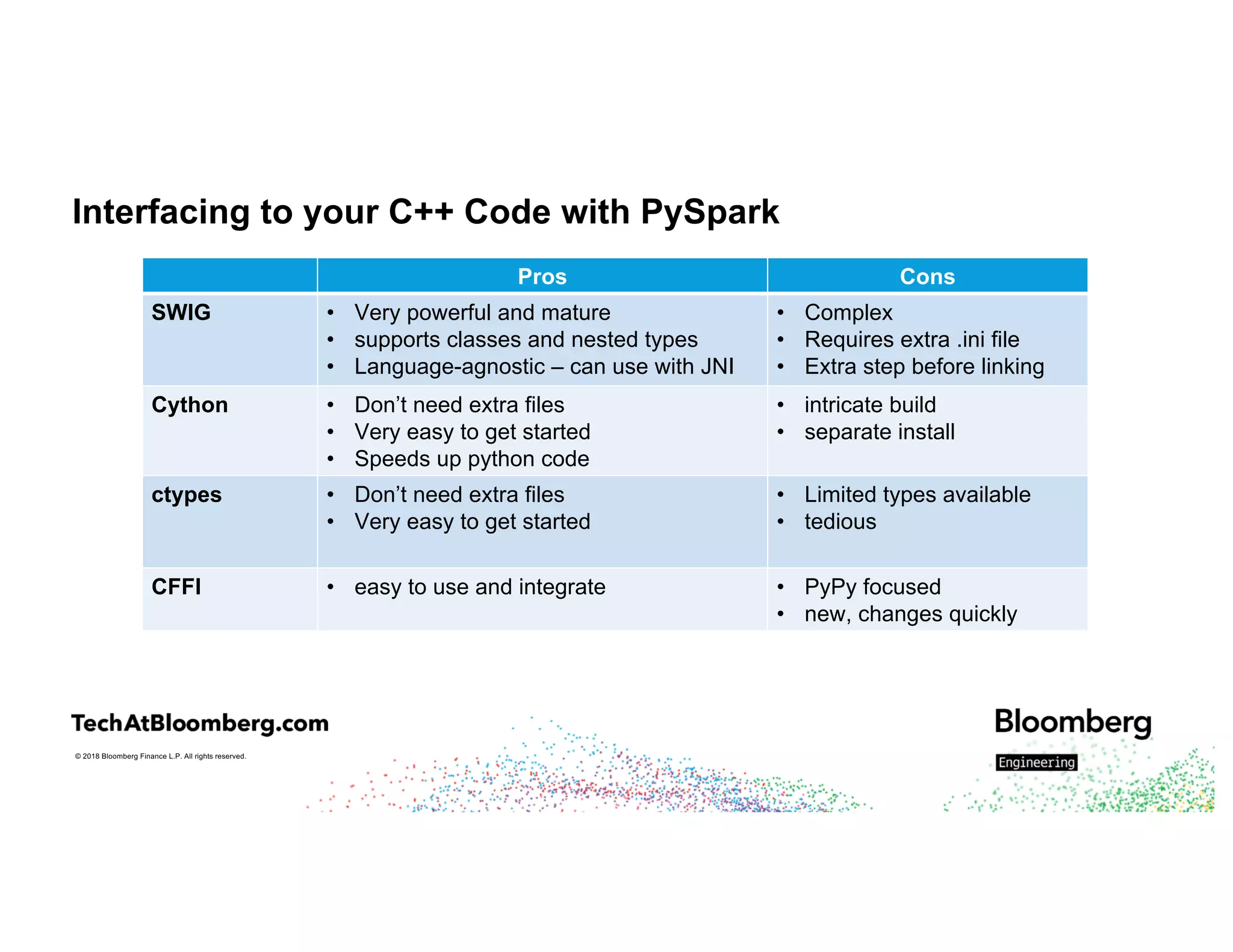

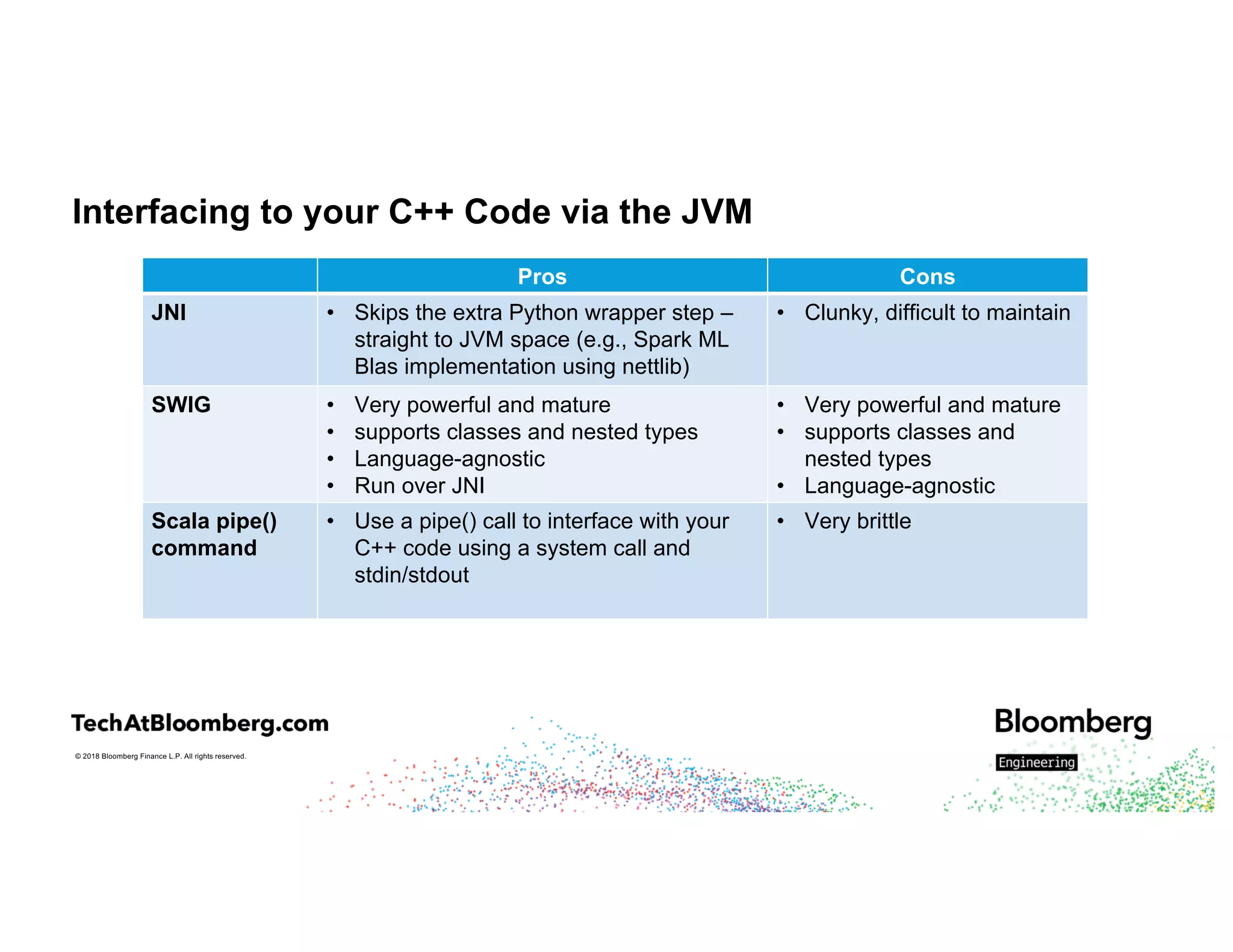

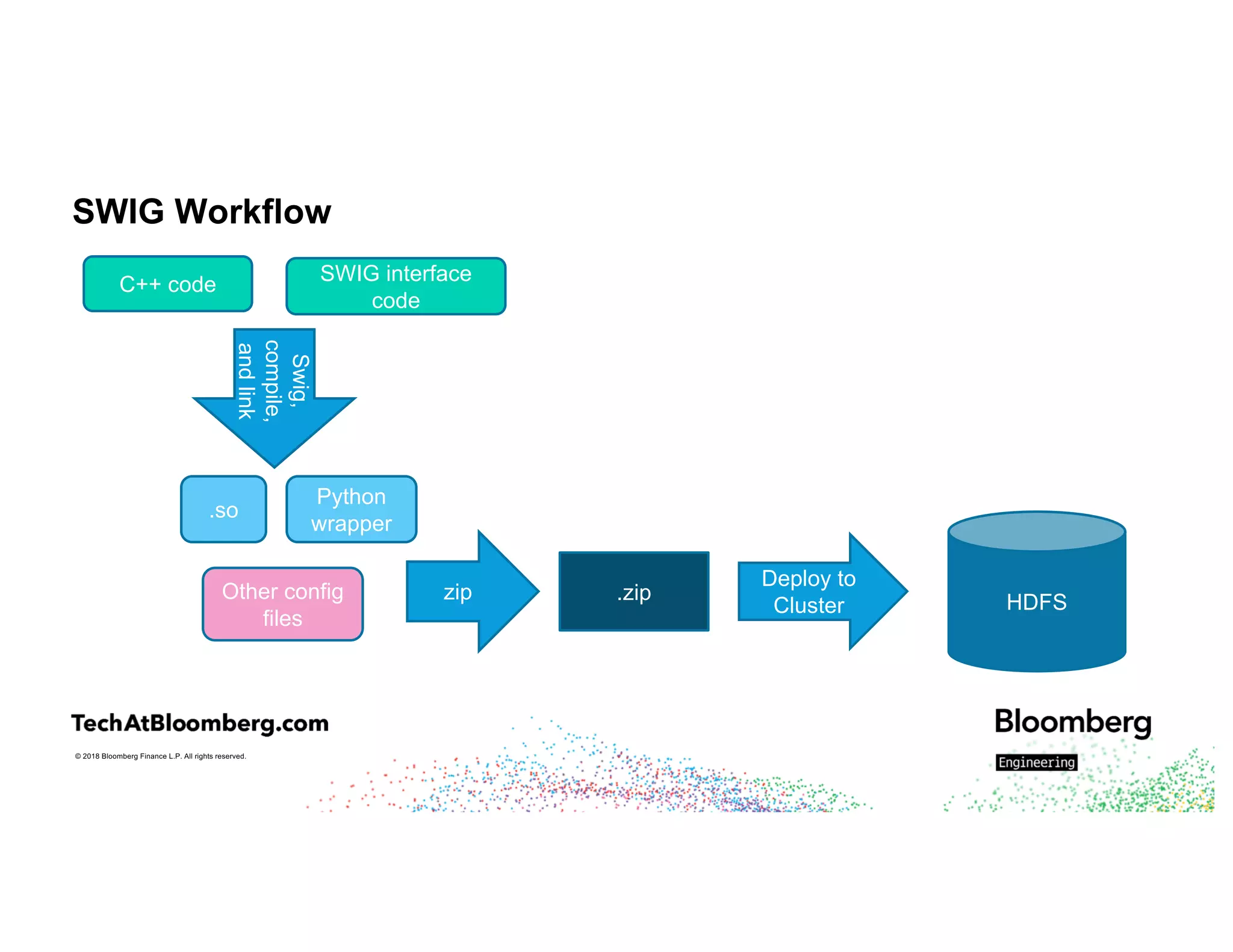

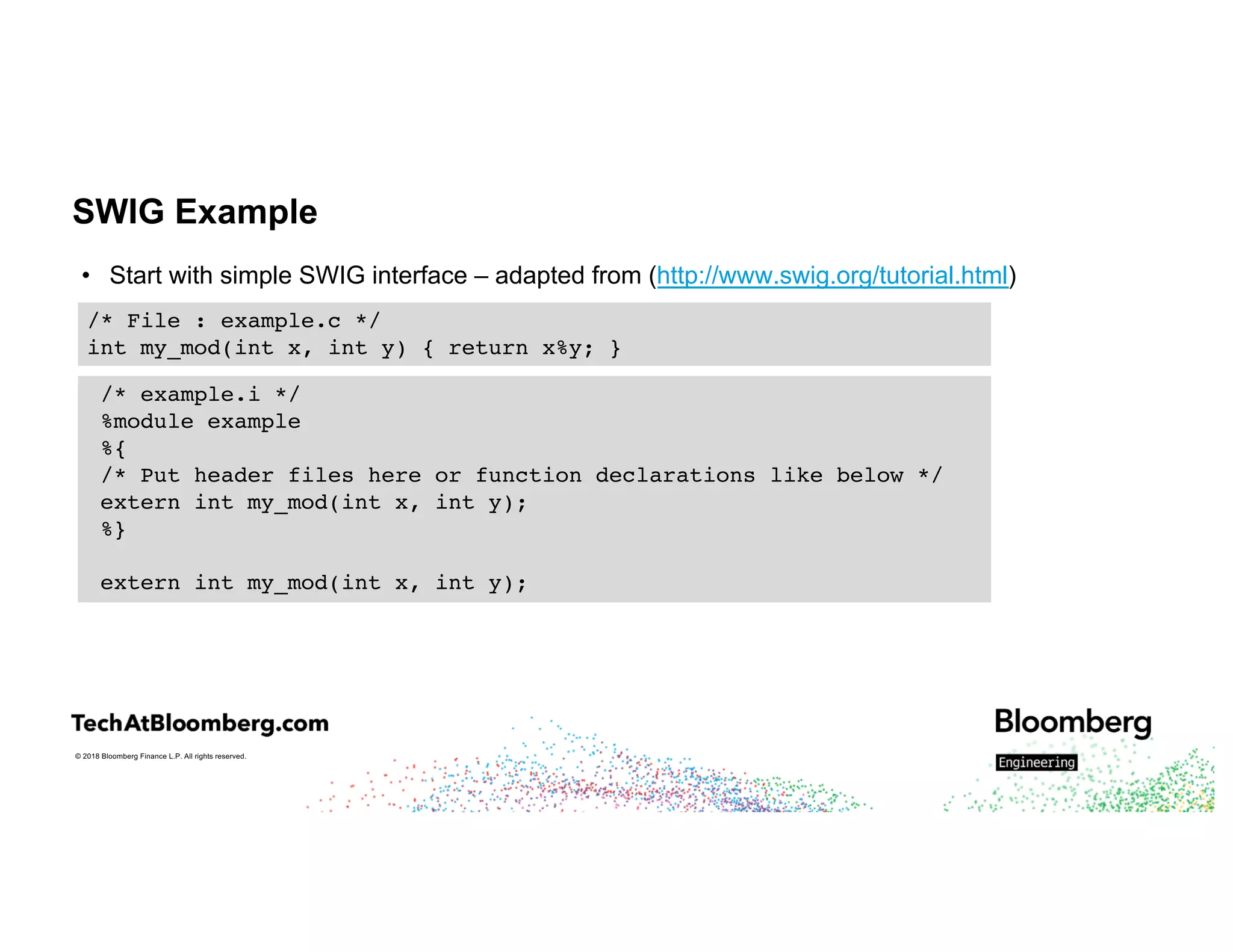

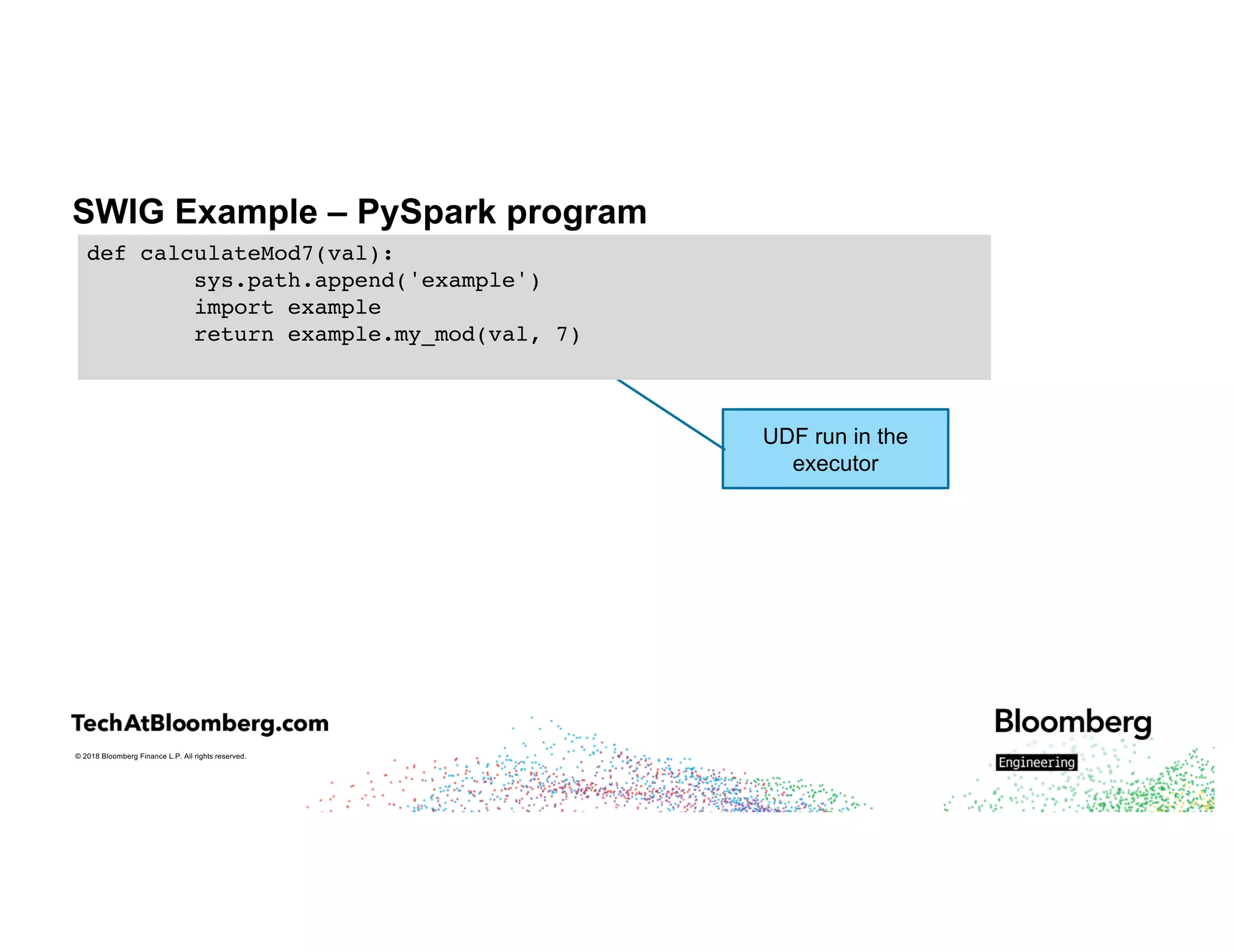

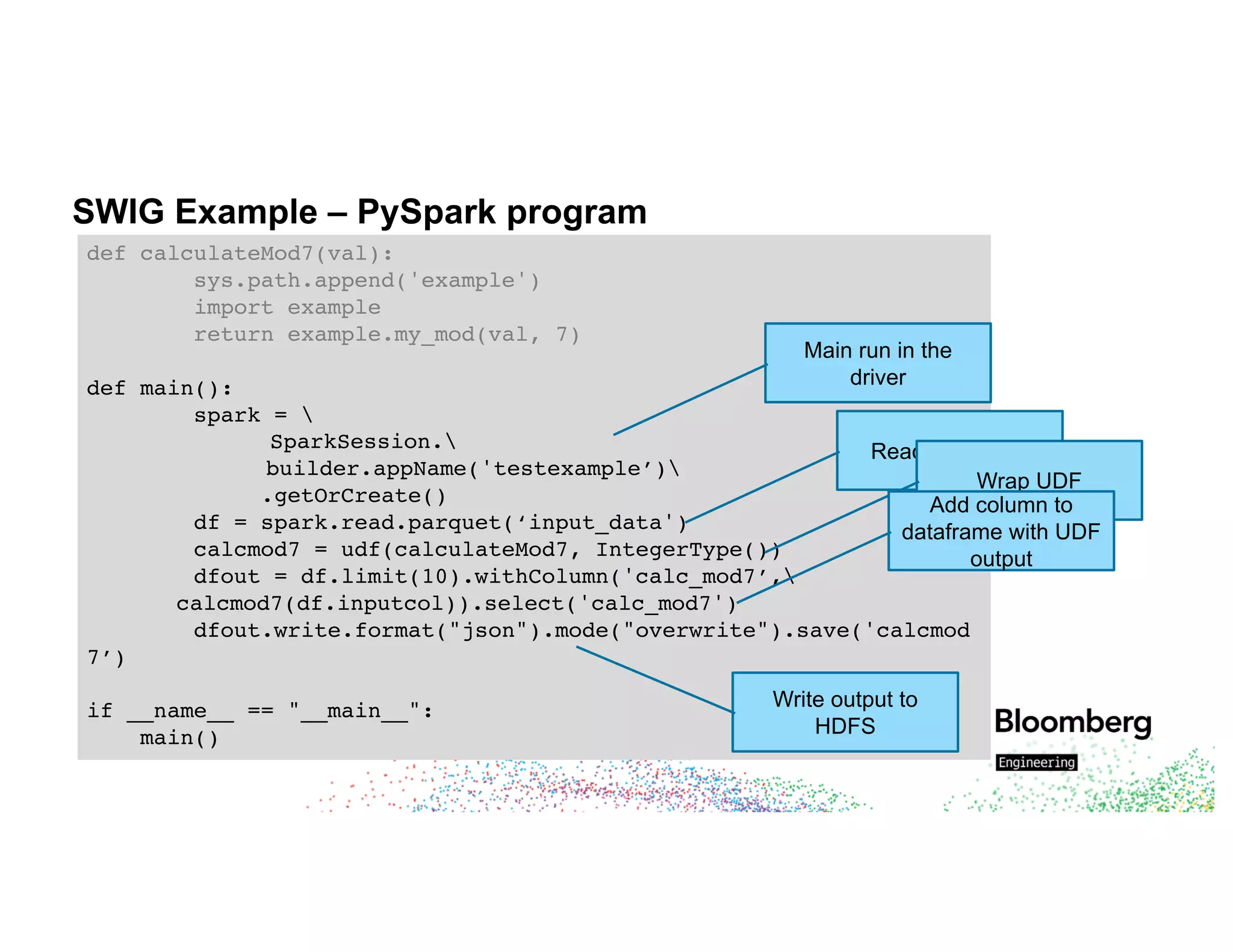

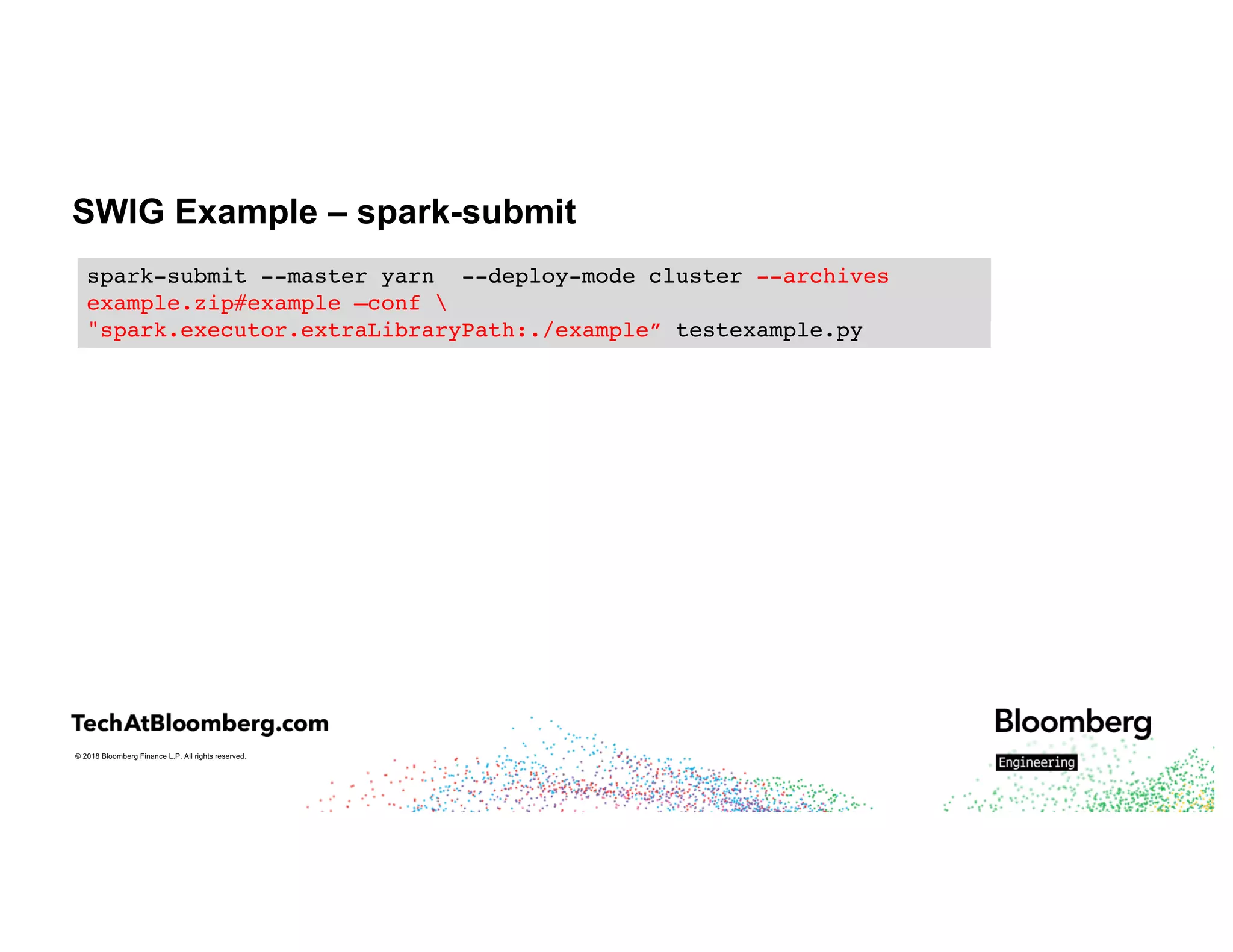

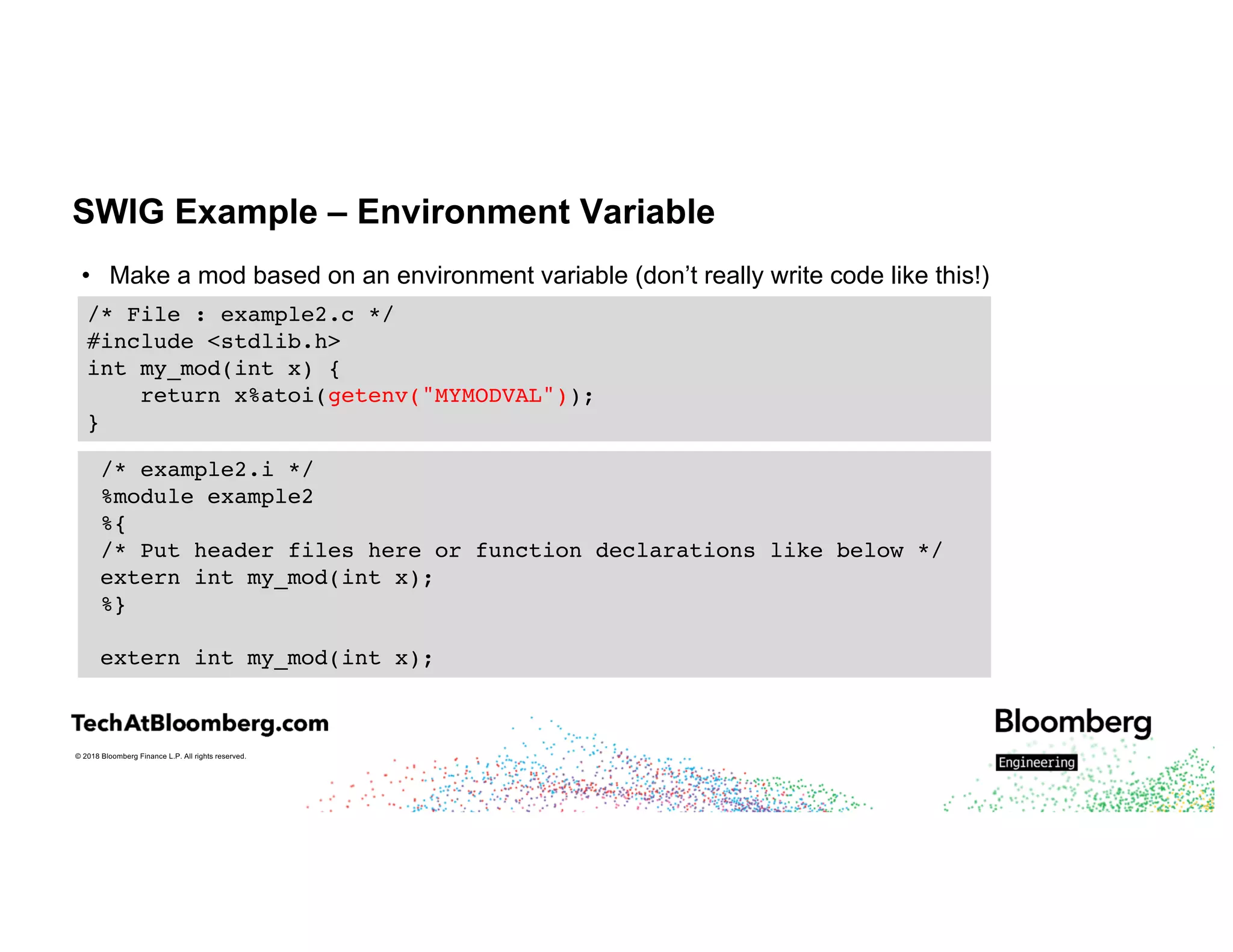

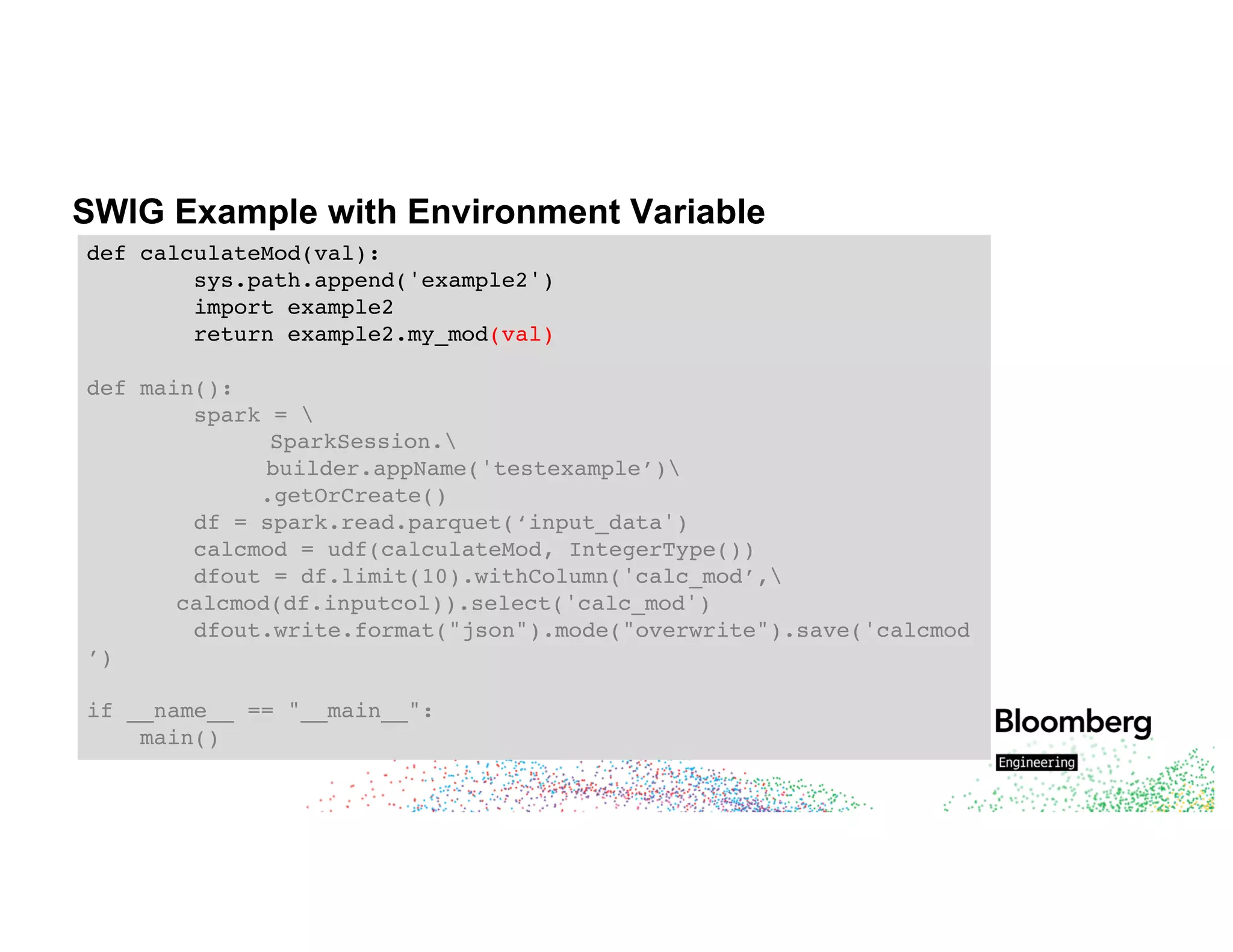

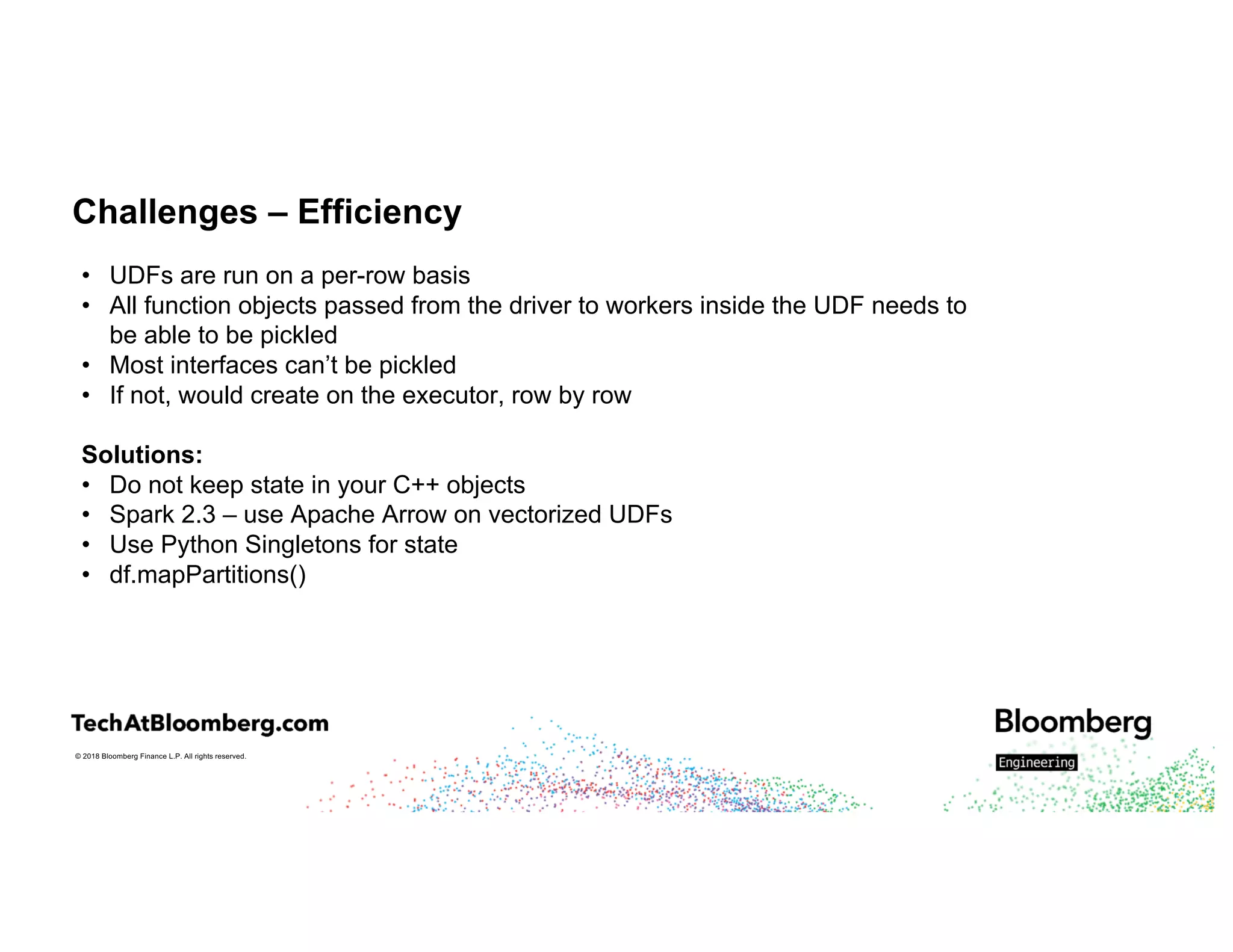

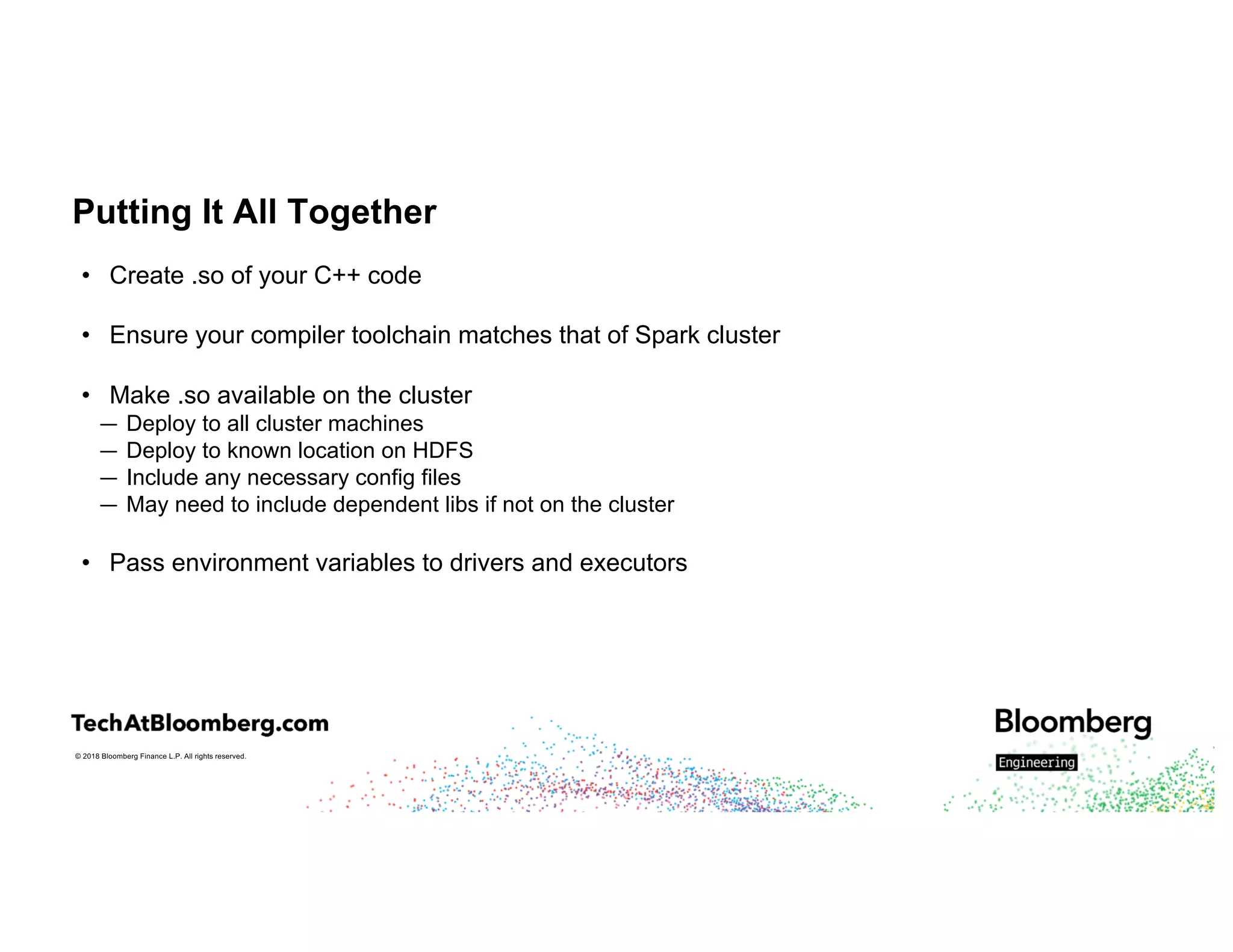

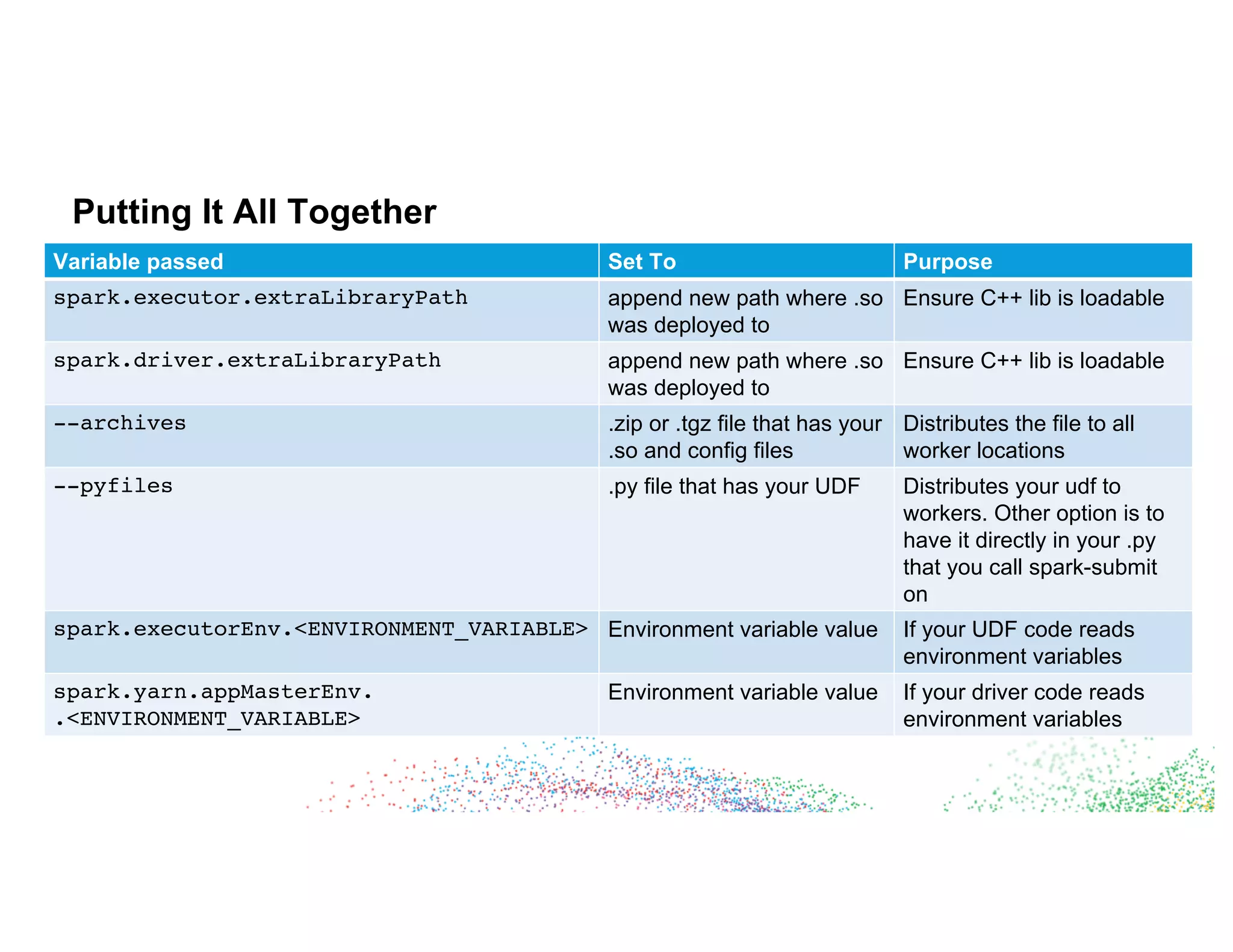

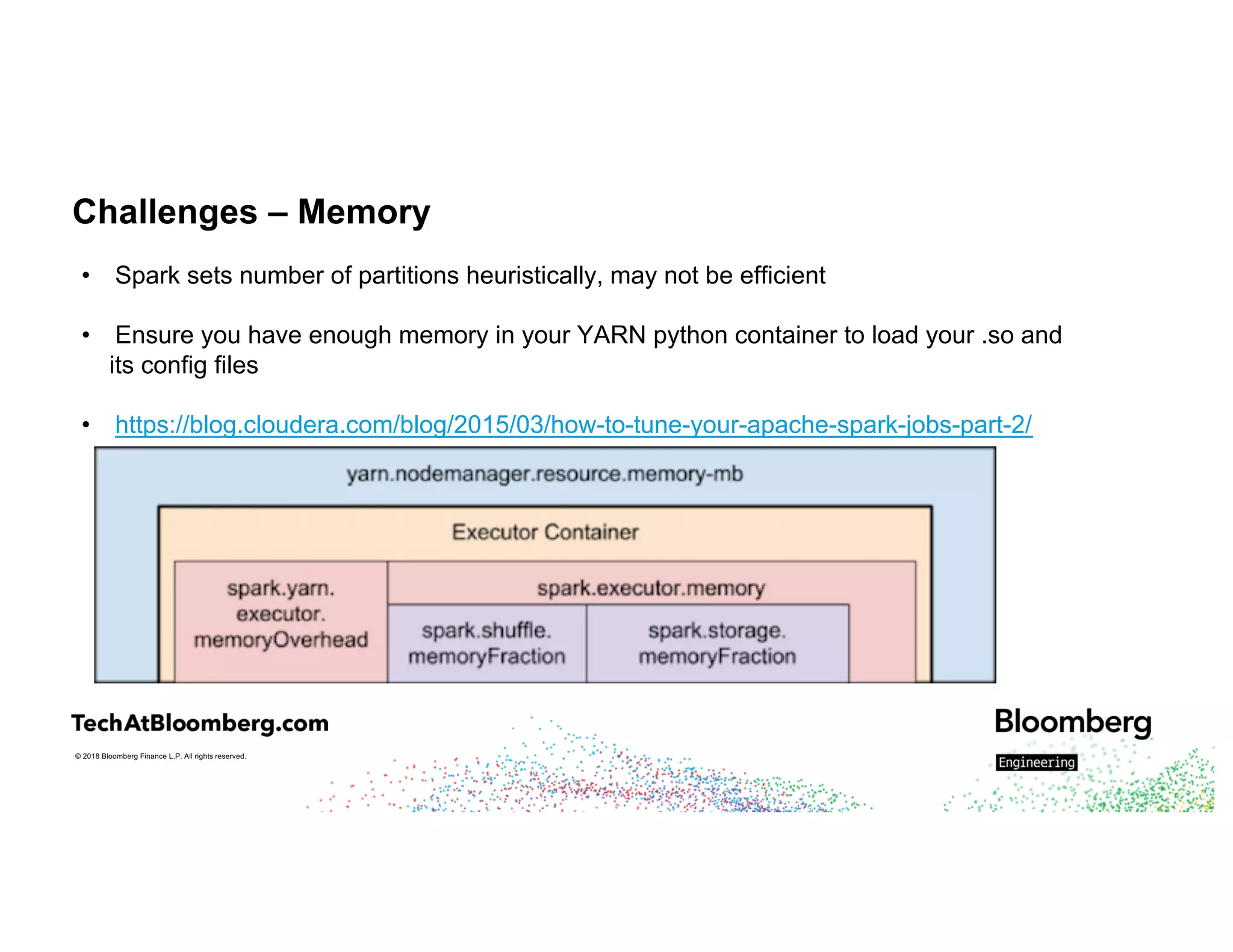

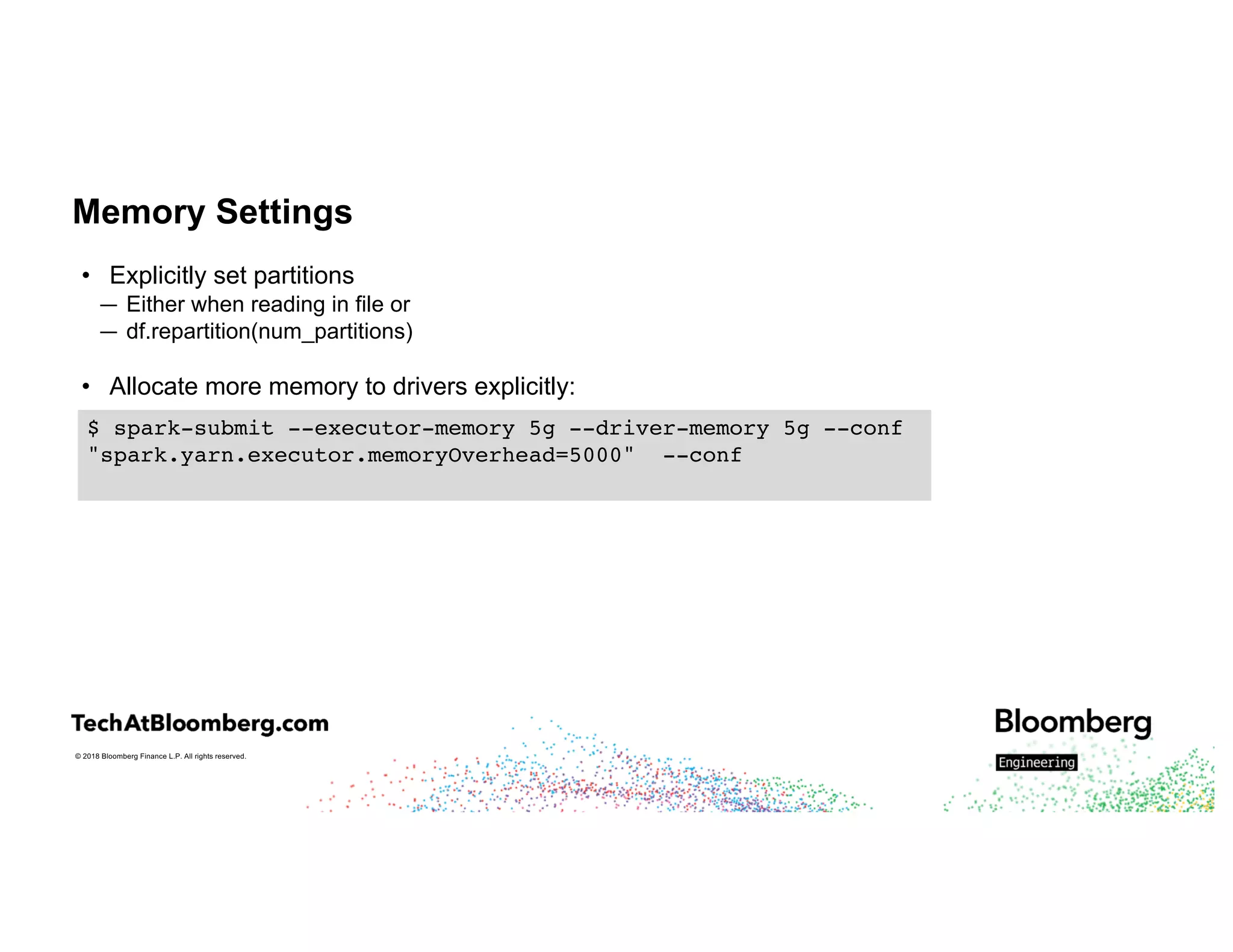

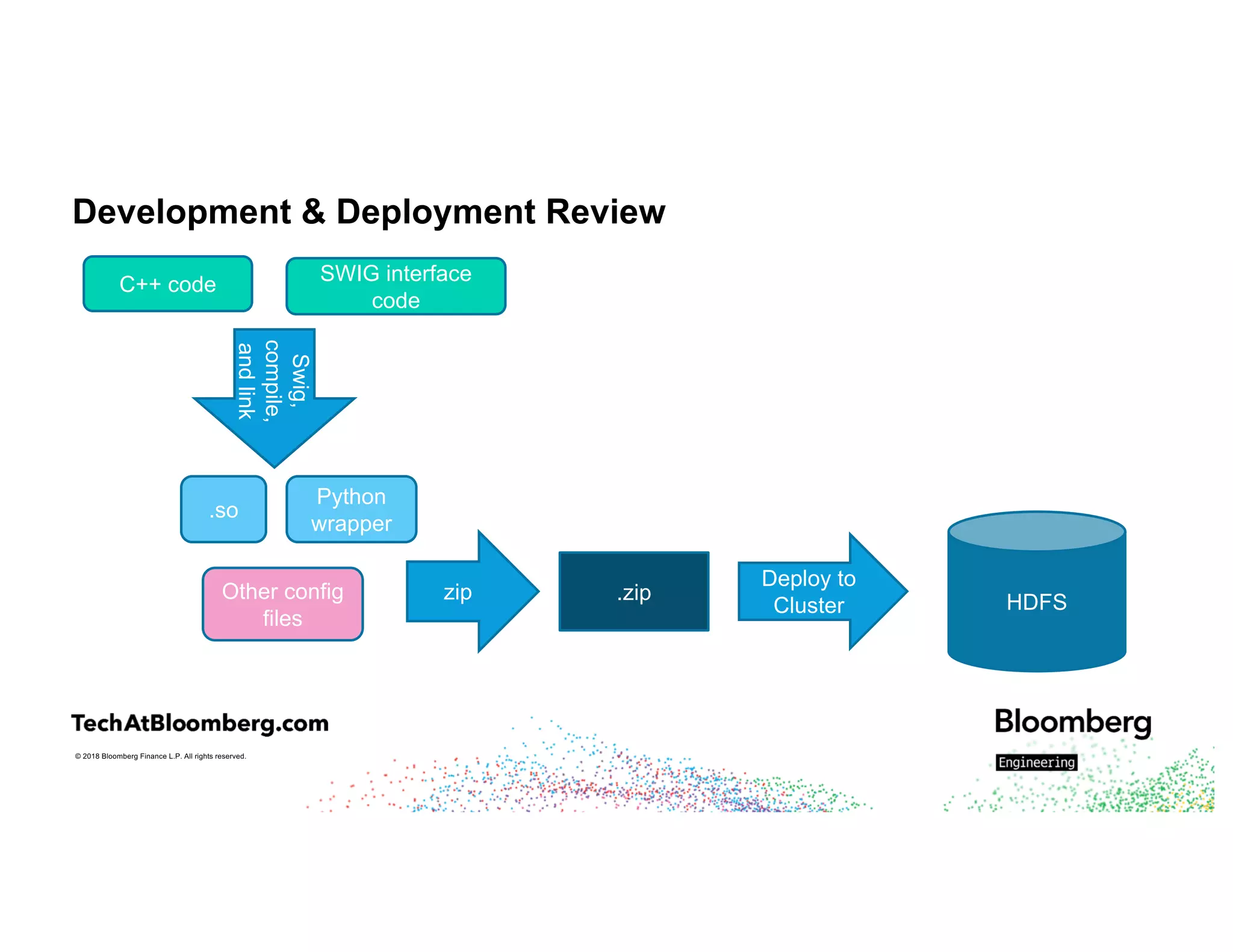

The document discusses integrating existing C++ libraries into PySpark for real-time sentiment analysis of news stories, emphasizing the importance of efficiency and quick response times. It outlines the process of interfacing C++ with PySpark, the use of different wrappers (like SWIG and ctypes), challenges faced during execution, and strategies for effective resource management. Key takeaways include the ability to run historical data backfills using C++ code in Spark and the need for careful deployment and configuration to ensure smooth operations.
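As a rough illustration of the ctypes route mentioned above, the sketch below binds a C function from Python. It is not the talk's code: the C library's `strlen` stands in for a function exported from a C++ library, and `c_length` is a hypothetical helper name. It assumes a POSIX system, where `ctypes.CDLL(None)` exposes symbols already loaded into the process. A real C++ library would typically need its entry points declared `extern "C"` and would be loaded by path instead.

```python
import ctypes

# Assumption: POSIX only. CDLL(None) gives access to symbols already loaded
# in the current process, which includes the C library.
libc = ctypes.CDLL(None)

# Declare the C signature explicitly so ctypes converts arguments and the
# return value correctly: size_t strlen(const char *s);
strlen = libc.strlen
strlen.argtypes = [ctypes.c_char_p]
strlen.restype = ctypes.c_size_t


def c_length(text: str) -> int:
    """Hypothetical wrapper: encode to bytes and call the C function."""
    return strlen(text.encode("utf-8"))


if __name__ == "__main__":
    print(c_length("breaking news"))
```

Declaring `argtypes` and `restype` up front matters more with a real library, since ctypes otherwise assumes `int` return values and cannot check argument types.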

![Python UDFs](https://image.slidesharecdn.com/7estherkundin-180611220022/75/Integrating-Existing-C-Libraries-into-PySpark-with-Esther-Kundin-9-2048.jpg)

© 2018 Bloomberg Finance L.P. All rights reserved.

Python UDFs

• Native Python code

• Function objects are pickled and passed to workers

• Row data is passed to Python workers one row at a time

• Code passes from the Python runtime -> JVM runtime -> Python runtime and back

• [SPARK-22216] [SPARK-21187]: vectorized UDF support with the Arrow format (see Li Jin’s talk)
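The difference between a row-at-a-time Python UDF and an Arrow-backed vectorized UDF can be sketched as follows. This is a minimal sketch, not the speaker's code: `score_text` is a hypothetical toy scorer standing in for the real sentiment logic, the word lists are invented for illustration, and `run_demo` assumes PySpark 3.x with pandas and pyarrow installed.

```python
# Hypothetical word lists, for illustration only.
POSITIVE = {"good", "gain", "rally"}
NEGATIVE = {"bad", "loss", "weak"}


def score_text(text):
    """Toy sentiment score: (positive - negative) / number of words."""
    if not text:
        return 0.0
    words = text.lower().split()
    if not words:
        return 0.0
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return (pos - neg) / len(words)


def run_demo():
    """Compare both UDF styles. Assumes PySpark 3.x, pandas and pyarrow."""
    import pandas as pd
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import pandas_udf, udf
    from pyspark.sql.types import DoubleType

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("udf-sketch")
        .getOrCreate()
    )
    df = spark.createDataFrame(
        [("markets rally on good earnings",), ("bad quarter, weak outlook",)],
        ["headline"],
    )

    # Row-at-a-time UDF: the function object is pickled, shipped to the
    # Python workers, and called once per row.
    row_udf = udf(score_text, DoubleType())

    # Vectorized UDF: each call receives a whole pandas Series, transferred
    # from the JVM in Arrow format.
    @pandas_udf(DoubleType())
    def batch_udf(headlines: pd.Series) -> pd.Series:
        return headlines.map(score_text)

    df.select(
        "headline",
        row_udf(df["headline"]).alias("row_score"),
        batch_udf(df["headline"]).alias("batch_score"),
    ).show(truncate=False)
    spark.stop()
```

Calling `run_demo()` on a machine with Spark available should show the same score in both columns. The vectorized version mainly reduces per-row serialization and call overhead. The Python function itself still runs once per element inside `Series.map`, so the gain depends on how much of the work can be expressed as whole-batch operations.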