Slides for keynote at PyData Paris. Abstract:

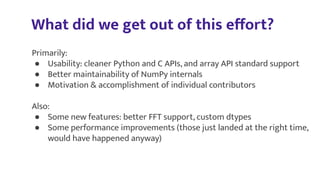

Behind every technical leap in scientific Python lies a human ecosystem of volunteers, companies, and institutions working in tension and collaboration. This keynote explores how innovation actually happens in open source, through the lens of recent and ongoing initiatives that aim to move the needle on performance and usability - from the ideas that went into NumPy 2.0 and its relatively smooth rollout to the ongoing efforts to leverage the performance GPUs offer without sacrificing maintainability and usability.

Takeaways for the audience: Whether you’re an ML engineer tired of debugging GPU-CPU inconsistencies, a researcher pushing Python to its limits, or an open-source maintainer seeking sustainable funding, this keynote will equip you with both practical solutions and a clear vision of where scientific Python is headed next.

![$ uv venv venv-pandas --python=3.12

$ source venv-pandas/bin/activate

$ uv pip install ipython pandas==2.1.1

$ ipython

...

In [1]: import pandas as pd

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

...

File interval.pyx:1, in init pandas._libs.interval()

ValueError: numpy.dtype size changed, may indicate binary incompatibility. Expected 96 from C header, got 88 from PyObject

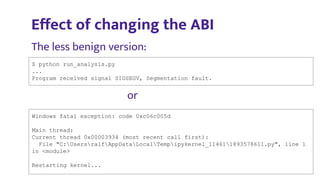

Effect of changing the ABI

The more benign version:](https://image.slidesharecdn.com/pydatapariskeynote20250930-251104141818-cb3eb944/85/PyData-Paris-keynote-Big-ideas-shaping-scientific-Python-the-quest-for-performance-and-usability-8-320.jpg)
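The error on this slide encodes two numbers: the `numpy.dtype` struct size the extension saw at compile time (96 bytes, from the C header) and the size reported by the NumPy imported at runtime (88 bytes). In the session shown, this presumably happens because the resolver installed a NumPy 2.x release next to a pandas 2.1.1 wheel built against NumPy 1.x. The following is a minimal sketch of how a tool could recognise this message and turn it into a more actionable hint. The helper names `parse_abi_mismatch` and `import_with_abi_hint` are hypothetical, and the regular expression assumes the exact wording from the traceback above, which other NumPy or Cython versions may phrase differently.

```python
import importlib
import re

# Assumption: the message wording matches the traceback on the slide.
_ABI_MISMATCH = re.compile(
    r"numpy\.dtype size changed.*?"
    r"Expected (\d+) from C header, got (\d+) from PyObject",
    re.DOTALL,
)


def parse_abi_mismatch(message):
    """Return (compile_time_size, runtime_size), or None if there is no match."""
    match = _ABI_MISMATCH.search(message)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def import_with_abi_hint(module_name):
    """Hypothetical helper: import a module and add a hint on an ABI mismatch."""
    try:
        return importlib.import_module(module_name)
    except ValueError as exc:
        sizes = parse_abi_mismatch(str(exc))
        if sizes is None:
            raise  # Some other ValueError: leave it untouched.
        compiled, runtime = sizes
        raise ImportError(
            f"{module_name} was compiled against a NumPy whose dtype struct "
            f"is {compiled} bytes, but the installed NumPy reports {runtime} "
            f"bytes. Upgrade {module_name} or pin NumPy to a compatible version."
        ) from exc
```

Calling `import_with_abi_hint("pandas")` in the environment from the slide would be expected to raise the `ImportError` with the two sizes filled in. In a consistent environment it simply returns the imported module.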