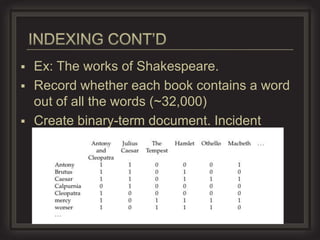

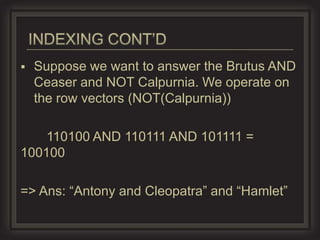

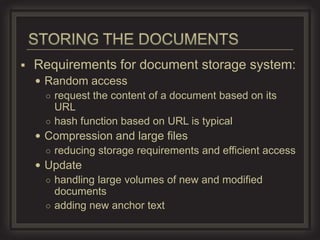

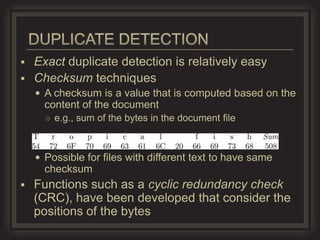

This document provides an overview of key concepts in information retrieval. It discusses crawler problems such as getting stuck in loops, along with storage issues. It also covers the basics of indexing documents, including building an inverted index to speed up retrieval, and summarizes common techniques for detecting duplicate and near-duplicate documents.
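
To make the inverted index idea concrete, here is a minimal sketch in Python. It is not taken from the document itself: the function names `build_inverted_index` and `search`, the whitespace tokenization, and the three sample documents are all assumptions chosen for illustration. The sketch maps each term to the ids of the documents that contain it, so a query only has to look up its terms rather than scan every document.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercased, whitespace-separated term to the sorted ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search(index, query):
    """Return ids of documents that contain every query term (boolean AND)."""
    terms = query.lower().split()
    if not terms:
        return []
    # Start from the posting list of the first term, then intersect with the rest.
    result = set(index.get(terms[0], []))
    for term in terms[1:]:
        result &= set(index.get(term, []))
    return sorted(result)


# Hypothetical sample collection, used only for illustration.
docs = {
    1: "web crawlers follow links",
    2: "an inverted index maps terms to documents",
    3: "crawlers feed documents to the index",
}
index = build_inverted_index(docs)
```

With this sample data, `search(index, "crawlers")` finds documents 1 and 3, and `search(index, "documents index")` finds documents 2 and 3. A production index would typically add normalization such as stemming and stop-word removal, which this sketch leaves out.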