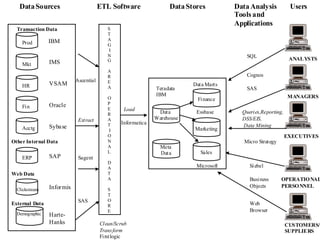

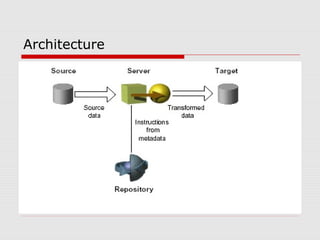

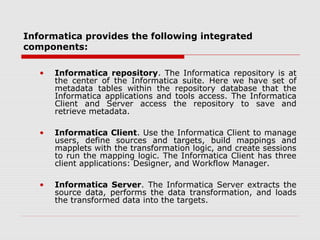

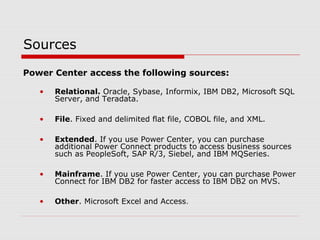

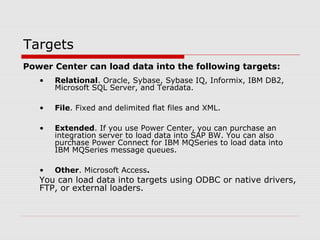

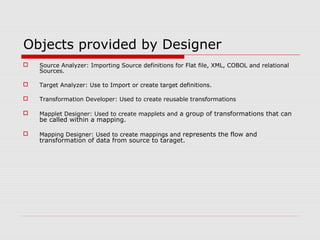

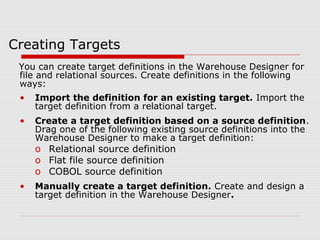

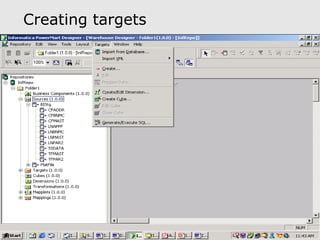

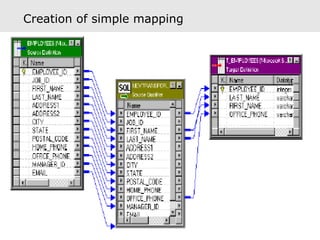

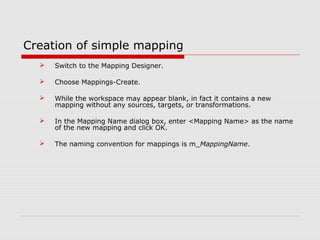

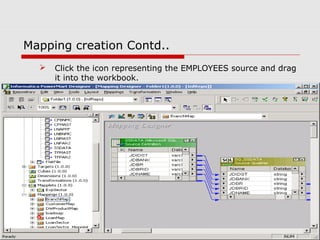

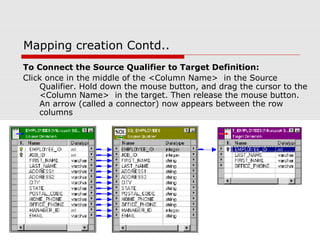

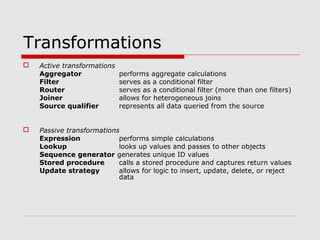

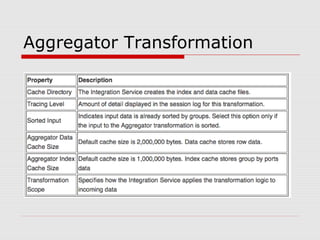

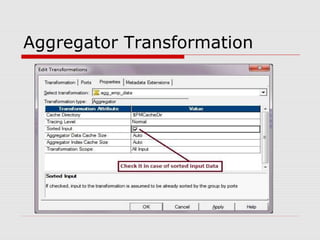

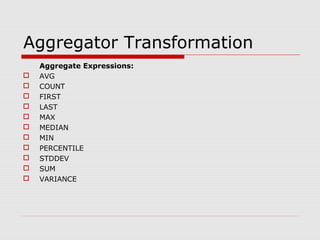

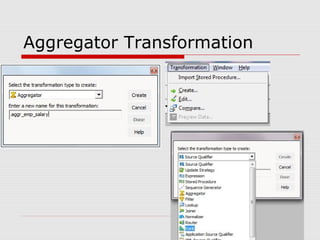

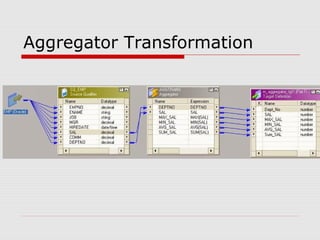

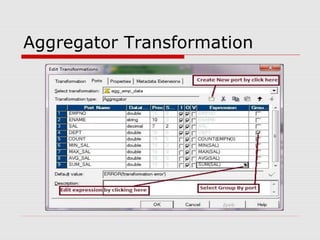

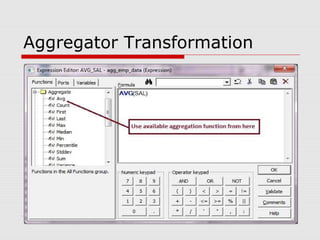

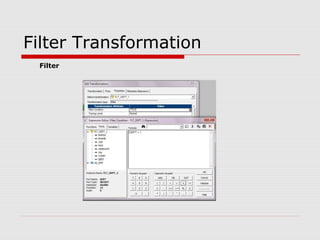

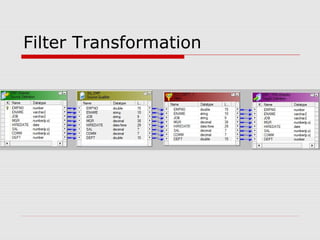

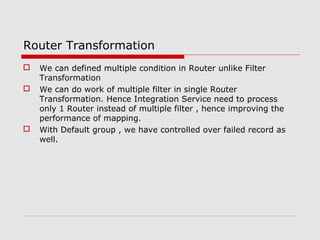

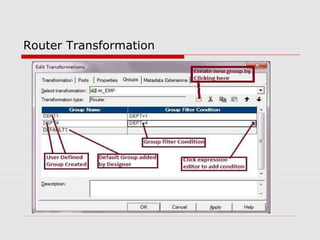

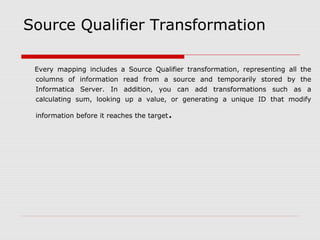

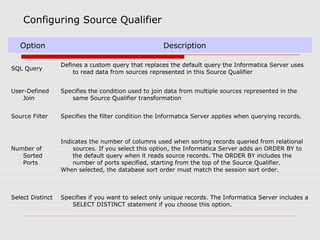

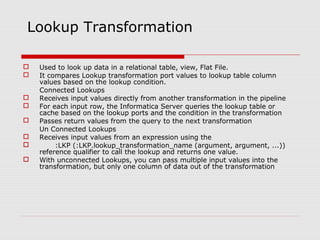

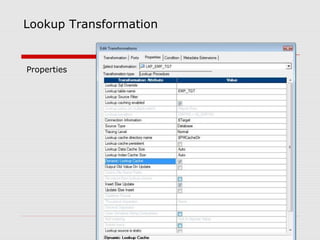

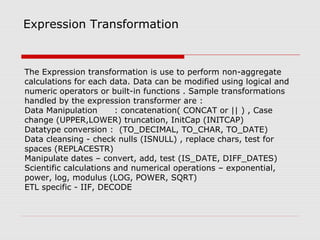

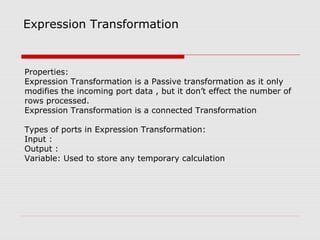

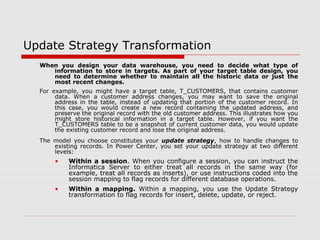

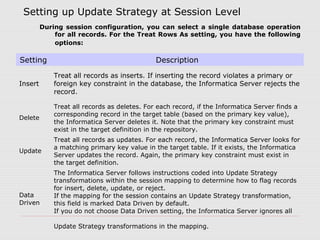

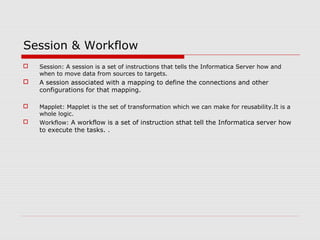

This document gives an overview of Informatica, an ETL tool. ETL stands for Extraction, Transformation, and Loading: extracting data from source systems, transforming it, and loading it into a data warehouse or other target. The document describes Informatica's key components (the repository, client, and server), transformations such as filters and aggregators, and how mappings move data from sources to targets. It closes with examples of creating simple mappings in Informatica Designer.
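
As a rough illustration of the extract, filter, aggregate, and load flow summarized above, here is a minimal sketch in plain Python. It does not use Informatica or any of its APIs; the function names (`extract`, `filter_rows`, `aggregate_sum`, `load`, `run_mapping`), the `region` and `amount` fields, and the sample rows are all hypothetical and chosen only to mirror the idea of a mapping that passes source rows through a filter and an aggregator before writing them to a target.

```python
from collections import defaultdict


def extract(rows):
    """Extract: yield a copy of each record from the source (an in-memory list here)."""
    for row in rows:
        yield dict(row)


def filter_rows(rows, predicate):
    """Filter step: pass through only the rows that satisfy the predicate."""
    for row in rows:
        if predicate(row):
            yield row


def aggregate_sum(rows, group_key, value_key):
    """Aggregator step: sum value_key per distinct group_key, sorted by group."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_key]] += row[value_key]
    return [{group_key: key, "total": total} for key, total in sorted(totals.items())]


def load(rows, target):
    """Load: append the transformed rows to the target and return how many were written."""
    rows = list(rows)
    target.extend(rows)
    return len(rows)


def run_mapping(source, target):
    """A hypothetical 'mapping': source -> filter -> aggregator -> target."""
    extracted = extract(source)
    kept = filter_rows(extracted, lambda r: r["amount"] > 0)  # drop non-positive amounts
    summarized = aggregate_sum(kept, "region", "amount")
    return load(summarized, target)


# Hypothetical sample data standing in for a source table.
SOURCE = [
    {"region": "east", "amount": 100.0},
    {"region": "west", "amount": 50.0},
    {"region": "east", "amount": -20.0},
    {"region": "west", "amount": 25.0},
]

warehouse = []  # stands in for the target table
loaded = run_mapping(SOURCE, warehouse)
print(loaded, warehouse)
```

In this sketch the filter discards the negative `east` row before aggregation, so the target ends up with one summed row per region. A real Informatica mapping is built graphically in the Designer rather than written as code; the sketch only mirrors the data flow.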