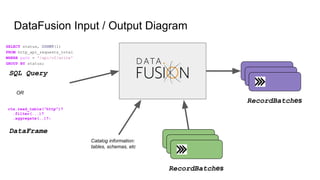

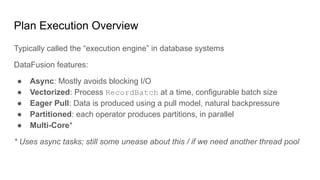

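The document discusses updates to InfluxDB IOx, a new columnar time series database. It covers changes and improvements to the API, the CLI, query capabilities, and the path to open-source builds. Key points include moving to gRPC for management, adding PostgreSQL string functions to queries, optimizing functions for scalar values and columns, and monitoring internal systems as the first step toward releasing open-source builds. The slides below cover the query engine design: how a query written in SQL or built with the DataFrame API becomes a `LogicalPlan` in DataFusion (the Rust-based query engine in Apache Arrow), how `EXPLAIN` shows that plan and its optimizations, how results are held as Arrow arrays and record batches, and how the logical plan is turned into a physical `ExecutionPlan`.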

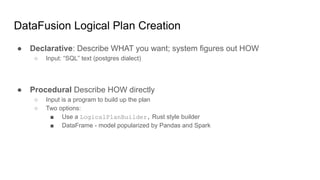

![EXPLAIN Plan](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-23-320.jpg)

EXPLAIN Plan: `EXPLAIN` gets a textual representation of the `LogicalPlan`.

`> explain SELECT status, COUNT(1) FROM http_api_requests_total WHERE path = '/api/v2/write' GROUP BY status;`

| plan_type | plan |
|---|---|
| logical_plan | `Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]` |
| | `Selection: #path Eq Utf8("/api/v2/write")` |
| | `TableScan: http_api_requests_total projection=None` |
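To make the layout of the `logical_plan` output concrete, here is a minimal Rust sketch of a plan tree that prints itself one node per line, with each child indented under its parent. The `Plan` enum, `explain`, and `example_plan` are hypothetical names for illustration only. This is not DataFusion's `LogicalPlan` type or its actual formatting code.

```rust
// Hypothetical toy plan type: node names mirror the EXPLAIN output above,
// but this is not DataFusion's actual `LogicalPlan`.
#[derive(Debug)]
enum Plan {
    TableScan { table: String, projection: Option<Vec<usize>> },
    Selection { predicate: String, input: Box<Plan> },
    Aggregate { group_by: Vec<String>, aggr: Vec<String>, input: Box<Plan> },
}

impl Plan {
    /// Renders one node per line, each child indented two spaces deeper than its parent.
    fn explain(&self) -> String {
        let mut out = String::new();
        self.write_indented(0, &mut out);
        out
    }

    fn write_indented(&self, depth: usize, out: &mut String) {
        let pad = "  ".repeat(depth);
        match self {
            Plan::TableScan { table, projection } => {
                out.push_str(&format!("{}TableScan: {} projection={:?}\n", pad, table, projection));
            }
            Plan::Selection { predicate, input } => {
                out.push_str(&format!("{}Selection: {}\n", pad, predicate));
                input.write_indented(depth + 1, out);
            }
            Plan::Aggregate { group_by, aggr, input } => {
                out.push_str(&format!(
                    "{}Aggregate: groupBy=[[{}]], aggr=[[{}]]\n",
                    pad,
                    group_by.join(", "),
                    aggr.join(", ")
                ));
                input.write_indented(depth + 1, out);
            }
        }
    }
}

/// The plan for: SELECT status, COUNT(1) FROM http_api_requests_total
/// WHERE path = '/api/v2/write' GROUP BY status;
fn example_plan() -> Plan {
    Plan::Aggregate {
        group_by: vec!["#status".to_string()],
        aggr: vec!["COUNT(UInt8(1))".to_string()],
        input: Box::new(Plan::Selection {
            predicate: r#"#path Eq Utf8("/api/v2/write")"#.to_string(),
            input: Box::new(Plan::TableScan {
                table: "http_api_requests_total".to_string(),
                projection: None,
            }),
        }),
    }
}

fn main() {
    print!("{}", example_plan().explain());
}
```

The root of the tree is printed first, so the operator that produces the final result appears on top and the scan appears at the bottom.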

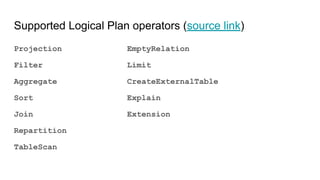

![Plans as DataFlow graphs](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-24-320.jpg)

Plans as DataFlow graphs: the same plan drawn as a tree, where data flows up from the leaves to the root of the tree.

- Step 1: Parquet file is read (`TableScan: http_api_requests_total projection=None`)
- Step 2: Predicate is applied (`Filter: #path Eq Utf8("/api/v2/write")`)
- Step 3: Data is aggregated (`Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]`)
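The bottom-up flow can be sketched with plain Rust iterators. Each operator consumes its child's output, so rows move from the scan (leaf) through the filter to the aggregate (root). This is an analogy under stated assumptions: `Row`, `table_scan`, `filter`, `aggregate_count`, `run`, and the sample rows are all made up. A real engine processes Arrow record batches read from Parquet, not individual in-memory rows.

```rust
use std::collections::BTreeMap;

// Hypothetical row type holding only the two columns this query touches.
struct Row {
    path: &'static str,
    status: &'static str,
}

// Step 1 (leaf): scan the "table"; an in-memory slice stands in for the Parquet file.
fn table_scan<'a>(rows: &'a [Row]) -> impl Iterator<Item = &'a Row> + 'a {
    rows.iter()
}

// Step 2: apply the predicate `path = wanted` to whatever the child produces.
fn filter<'a>(
    input: impl Iterator<Item = &'a Row> + 'a,
    wanted: &'a str,
) -> impl Iterator<Item = &'a Row> + 'a {
    input.filter(move |row| row.path == wanted)
}

// Step 3 (root): COUNT(1) GROUP BY status.
fn aggregate_count<'a>(input: impl Iterator<Item = &'a Row>) -> BTreeMap<&'static str, u64> {
    let mut counts = BTreeMap::new();
    for row in input {
        *counts.entry(row.status).or_insert(0) += 1;
    }
    counts
}

// Wire the operators together as the tree is drawn: scan -> filter -> aggregate.
fn run(rows: &[Row]) -> BTreeMap<&'static str, u64> {
    aggregate_count(filter(table_scan(rows), "/api/v2/write"))
}

// Made-up sample data, not numbers from the talk.
fn sample_rows() -> Vec<Row> {
    vec![
        Row { path: "/api/v2/write", status: "2XX" },
        Row { path: "/api/v2/query", status: "2XX" },
        Row { path: "/api/v2/write", status: "4XX" },
        Row { path: "/api/v2/write", status: "2XX" },
    ]
}

fn main() {
    let rows = sample_rows();
    println!("{:?}", run(&rows));
}
```

Because iterators are lazy, nothing is read until the root (`aggregate_count`) starts pulling rows. That pull-from-the-root behavior is the sense in which data "flows up" the tree.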

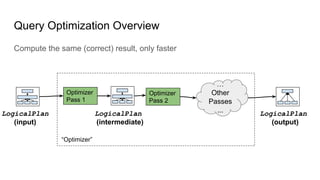

![More than initially meets the eye](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-25-320.jpg)

More than initially meets the eye: use `EXPLAIN VERBOSE` to see the optimizations applied.

`> EXPLAIN VERBOSE SELECT status, COUNT(1) FROM http_api_requests_total WHERE path = '/api/v2/write' GROUP BY status;`

| plan_type | plan |
|---|---|
| logical_plan | `Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]` |
| | `Selection: #path Eq Utf8("/api/v2/write")` |
| | `TableScan: http_api_requests_total projection=None` |
| projection_push_down | `Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]` |
| | `Selection: #path Eq Utf8("/api/v2/write")` |
| | `TableScan: http_api_requests_total projection=Some([6, 8])` |
| type_coercion | `Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]` |
| | `Selection: #path Eq Utf8("/api/v2/write")` |
| | `TableScan: http_api_requests_total projection=Some([6, 8])` |
| ... | |

The optimizer "pushed" down the projection, so only the `status` and `path` columns were read from the Parquet file.
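Here is a minimal sketch of the projection push-down idea. It assumes the optimizer has already collected the column names referenced above the scan, then maps those names to their positions in the table schema so the scan reads only those columns. The function `push_down_projection` and the seven-column schema are hypothetical. The resulting indices therefore differ from the `[6, 8]` on the slide, and DataFusion's actual optimizer rule is more involved.

```rust
use std::collections::BTreeSet;

/// Hypothetical projection push-down: given the table schema (column names in
/// file order) and the columns referenced anywhere above the scan, return the
/// column indices the scan must read. `None` means "read every column",
/// matching the `projection=None` shown before the optimizer ran.
fn push_down_projection(schema: &[&str], referenced: &[&str]) -> Option<Vec<usize>> {
    let wanted: BTreeSet<&str> = referenced.iter().copied().collect();
    let indices: Vec<usize> = schema
        .iter()
        .enumerate()
        .filter(|(_, name)| wanted.contains(*name))
        .map(|(i, _)| i)
        .collect();
    if indices.len() == schema.len() {
        None
    } else {
        Some(indices)
    }
}

fn main() {
    // Made-up schema; the real table on the slide has more columns.
    let schema = ["time", "host", "method", "path", "status", "user_agent", "value"];
    // Columns used by the Selection (`#path`) and by the Aggregate (`#status`).
    let referenced = ["status", "path"];
    println!("projection={:?}", push_down_projection(&schema, &referenced));
}
```

Indices come back in schema order regardless of the order in which the columns were referenced, which is the order a columnar file reader would fetch them in.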

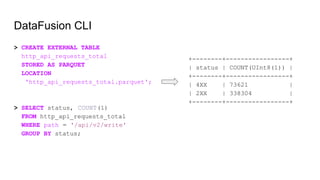

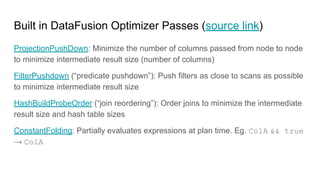

![Array + Record Batches + Schema](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-27-320.jpg)

Array + Record Batches + Schema: the query result

| status | COUNT |
|---|---|
| 4XX | 73621 |
| 2XX | 338304 |
| 5XX | 42 |
| 1XX | 3 |

is held as two `RecordBatch`es, each with its own `cols` and a reference to the same `schema`:

- Schema: `fields[0]: "status", Utf8` and `fields[1]: "COUNT()", UInt64`
- RecordBatch 1 cols: `StringArray` [4XX, 2XX, 5XX] and `UInt64Array` [73621, 338304, 42]
- RecordBatch 2 cols: `StringArray` [1XX] and `UInt64Array` [3]

\* The StringArray representation is somewhat misleading, as it actually has a fixed-length portion and the character data in different locations.
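The layout above can be sketched with simplified Rust types. The names echo the Rust `arrow` crate (`StringArray`, `RecordBatch`, `Schema`), but these are hypothetical stand-ins that omit null bitmaps and buffer alignment. The `StringArray` illustrates the footnote's point: a fixed-width offsets buffer, stored separately from one contiguous buffer of character data.

```rust
use std::sync::Arc;

// Hypothetical, simplified stand-ins for Arrow types: no null bitmaps, no alignment.
#[derive(Debug, Clone, Copy, PartialEq)]
enum DataType { Utf8, UInt64 }

#[derive(Debug)]
struct Field { name: String, data_type: DataType }

#[derive(Debug)]
struct Schema { fields: Vec<Field> }

/// Strings as a fixed-width offsets buffer (len + 1 entries) plus one contiguous
/// byte buffer; value i is the byte range values[offsets[i]..offsets[i + 1]].
#[derive(Debug)]
struct StringArray { offsets: Vec<i32>, values: Vec<u8> }

impl StringArray {
    fn from_strs(strs: &[&str]) -> Self {
        let mut offsets = vec![0i32];
        let mut values = Vec::new();
        for s in strs {
            values.extend_from_slice(s.as_bytes());
            offsets.push(values.len() as i32);
        }
        StringArray { offsets, values }
    }

    fn len(&self) -> usize {
        self.offsets.len() - 1
    }

    fn value(&self, i: usize) -> &str {
        let (start, end) = (self.offsets[i] as usize, self.offsets[i + 1] as usize);
        std::str::from_utf8(&self.values[start..end]).expect("built from valid UTF-8")
    }
}

/// One column: a typed array.
#[derive(Debug)]
enum Array { Utf8(StringArray), UInt64(Vec<u64>) }

/// A chunk of rows stored column by column; all batches of a result share one schema.
#[derive(Debug)]
struct RecordBatch { schema: Arc<Schema>, columns: Vec<Array> }

impl RecordBatch {
    fn num_rows(&self) -> usize {
        match self.columns.first() {
            Some(Array::Utf8(a)) => a.len(),
            Some(Array::UInt64(v)) => v.len(),
            None => 0,
        }
    }
}

fn make_batch(schema: &Arc<Schema>, status: &[&str], count: Vec<u64>) -> RecordBatch {
    RecordBatch {
        schema: Arc::clone(schema),
        columns: vec![Array::Utf8(StringArray::from_strs(status)), Array::UInt64(count)],
    }
}

/// The four-row result from the slide, split into a 3-row and a 1-row batch.
fn example_batches() -> Vec<RecordBatch> {
    let schema = Arc::new(Schema {
        fields: vec![
            Field { name: "status".to_string(), data_type: DataType::Utf8 },
            Field { name: "COUNT()".to_string(), data_type: DataType::UInt64 },
        ],
    });
    vec![
        make_batch(&schema, &["4XX", "2XX", "5XX"], vec![73621, 338304, 42]),
        make_batch(&schema, &["1XX"], vec![3]),
    ]
}

fn main() {
    let batches = example_batches();
    for f in &batches[0].schema.fields {
        println!("field {}: {:?}", f.name, f.data_type);
    }
    for batch in &batches {
        if let (Array::Utf8(status), Array::UInt64(count)) = (&batch.columns[0], &batch.columns[1]) {
            for i in 0..batch.num_rows() {
                println!("{} | {}", status.value(i), count[i]);
            }
        }
    }
}
```

Sharing the schema through an `Arc` means each batch only pays for a pointer, and every batch is guaranteed to describe the same columns.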

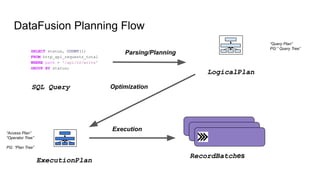

![SQL → LogicalPlan](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-31-320.jpg)

SQL → LogicalPlan: the SQL Parser turns the SQL query into a parsed statement, and the Planner turns that statement into a `LogicalPlan`.

SQL query: `SELECT status, COUNT(1) FROM http_api_requests_total WHERE path = '/api/v2/write' GROUP BY status;`

Parsed statement (excerpt): `Query { ctes: [], body: Select(Select { distinct: false, top: None, projection: [UnnamedExpr(Identifier(Ident { value: "status", quote_style: None })), ...`
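The Planner step can be illustrated with a toy function. It walks a simplified parsed SELECT and builds the plan bottom-up in SQL's logical evaluation order: FROM, then WHERE, then GROUP BY and aggregates. `ParsedSelect`, `LogicalPlan`, `plan_select`, and `example_statement` are hypothetical and much simpler than sqlparser's AST or DataFusion's SQL planner. Spotting aggregates by a `COUNT(` prefix is purely for brevity.

```rust
// Hypothetical, much-simplified stand-in for a parsed SELECT statement.
// Expressions are kept as plain strings to keep the sketch short.
struct ParsedSelect {
    projection: Vec<String>,
    from: String,
    selection: Option<String>, // the WHERE predicate, if any
    group_by: Vec<String>,
}

#[derive(Debug, PartialEq)]
enum LogicalPlan {
    TableScan { table: String },
    Filter { predicate: String, input: Box<LogicalPlan> },
    Aggregate { group_by: Vec<String>, aggr: Vec<String>, input: Box<LogicalPlan> },
}

/// Builds the plan bottom-up: FROM -> WHERE -> GROUP BY / aggregates.
/// No projection node is added: for this query the SELECT list already matches
/// the aggregate's output, as in the EXPLAIN output earlier in the deck.
fn plan_select(stmt: &ParsedSelect) -> LogicalPlan {
    let mut plan = LogicalPlan::TableScan { table: stmt.from.clone() };
    if let Some(predicate) = &stmt.selection {
        plan = LogicalPlan::Filter { predicate: predicate.clone(), input: Box::new(plan) };
    }
    // Toy rule for brevity: treat any COUNT(...) in the SELECT list as an aggregate.
    let aggr: Vec<String> = stmt
        .projection
        .iter()
        .filter(|e| e.starts_with("COUNT("))
        .cloned()
        .collect();
    if !stmt.group_by.is_empty() || !aggr.is_empty() {
        plan = LogicalPlan::Aggregate { group_by: stmt.group_by.clone(), aggr, input: Box::new(plan) };
    }
    plan
}

/// The example query from the slide, written out as a ParsedSelect.
fn example_statement() -> ParsedSelect {
    ParsedSelect {
        projection: vec!["status".to_string(), "COUNT(1)".to_string()],
        from: "http_api_requests_total".to_string(),
        selection: Some("path = '/api/v2/write'".to_string()),
        group_by: vec!["status".to_string()],
    }
}

fn main() {
    println!("{:#?}", plan_select(&example_statement()));
}
```

Each clause simply wraps the plan built so far in a new parent node. That is why the resulting tree has the same Aggregate → Filter → TableScan shape as the EXPLAIN output.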

![“DataFrame” → Logical Plan](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-32-320.jpg)

"DataFrame" → Logical Plan: Rust code using the DataFrame (builder) API produces the same kind of `LogicalPlan` directly, without going through SQL:

`let df = ctx.read_table("http_api_requests_total")?.filter(col("path").eq(lit("/api/v2/write")))?.aggregate(vec![col("status")], vec![count(lit(1))])?;`
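The builder pattern behind this chain can be sketched with hypothetical types. Each method wraps the current plan in a new parent node and returns a `Result`, which is why every call in the chain ends in `?`. `Context`, `DataFrame`, `Expr`, `Scalar`, `col`, `lit`, `count`, `build`, and `example_context` mimic the names on the slide but are not DataFusion's real definitions or signatures.

```rust
use std::collections::HashSet;

// Hypothetical mini versions of the names on the slide; not DataFusion's definitions.
#[derive(Debug, Clone, PartialEq)]
enum Scalar {
    Utf8(String),
    Int32(i32),
}

impl From<&str> for Scalar {
    fn from(v: &str) -> Self { Scalar::Utf8(v.to_string()) }
}

impl From<i32> for Scalar {
    fn from(v: i32) -> Self { Scalar::Int32(v) }
}

#[derive(Debug, Clone, PartialEq)]
enum Expr {
    Column(String),
    Literal(Scalar),
    Eq(Box<Expr>, Box<Expr>),
    Count(Box<Expr>),
}

fn col(name: &str) -> Expr { Expr::Column(name.to_string()) }
fn lit(v: impl Into<Scalar>) -> Expr { Expr::Literal(v.into()) }
fn count(e: Expr) -> Expr { Expr::Count(Box::new(e)) }

impl Expr {
    /// Builds an equality expression (an inherent method, so it takes priority
    /// over `PartialEq::eq` when called on an owned `Expr`).
    fn eq(self, other: Expr) -> Expr { Expr::Eq(Box::new(self), Box::new(other)) }
}

#[derive(Debug, PartialEq)]
enum LogicalPlan {
    TableScan { table: String },
    Filter { predicate: Expr, input: Box<LogicalPlan> },
    Aggregate { group_by: Vec<Expr>, aggr: Vec<Expr>, input: Box<LogicalPlan> },
}

struct Context { tables: HashSet<String> }

#[derive(Debug)]
struct DataFrame { plan: LogicalPlan }

impl Context {
    /// Starts a DataFrame with a scan of a registered table.
    fn read_table(&self, name: &str) -> Result<DataFrame, String> {
        if self.tables.contains(name) {
            Ok(DataFrame { plan: LogicalPlan::TableScan { table: name.to_string() } })
        } else {
            Err(format!("table '{}' not found", name))
        }
    }
}

impl DataFrame {
    // Each builder call wraps the current plan in a new parent node. These toy
    // versions never fail, but return Result so the chain can use `?`.
    fn filter(self, predicate: Expr) -> Result<DataFrame, String> {
        Ok(DataFrame { plan: LogicalPlan::Filter { predicate, input: Box::new(self.plan) } })
    }

    fn aggregate(self, group_by: Vec<Expr>, aggr: Vec<Expr>) -> Result<DataFrame, String> {
        Ok(DataFrame { plan: LogicalPlan::Aggregate { group_by, aggr, input: Box::new(self.plan) } })
    }
}

/// The chain from the slide, written against these toy types.
fn build(ctx: &Context) -> Result<DataFrame, String> {
    let df = ctx
        .read_table("http_api_requests_total")?
        .filter(col("path").eq(lit("/api/v2/write")))?
        .aggregate(vec![col("status")], vec![count(lit(1))])?;
    Ok(df)
}

fn example_context() -> Context {
    let mut tables = HashSet::new();
    tables.insert("http_api_requests_total".to_string());
    Context { tables }
}

fn main() {
    match build(&example_context()) {
        Ok(df) => println!("{:#?}", df.plan),
        Err(e) => eprintln!("error: {}", e),
    }
}
```

Returning `Result` from each step lets an error, here an unknown table, stop the chain at the first failing call instead of producing a half-built plan.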

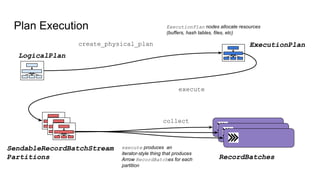

![create_physical_plan](https://image.slidesharecdn.com/influxdbioxtechtalks-march2021-210310175018/85/InfluxDB-IOx-Tech-Talks-Query-Engine-Design-and-the-Rust-Based-DataFusion-in-Apache-Arrow-51-320.jpg)

create_physical_plan: the `LogicalPlan` (`Aggregate: groupBy=[[#status]], aggr=[[COUNT(UInt8(1))]]` over `Filter: #path Eq Utf8("/api/v2/write")` over `TableScan: http_api_requests_total projection=None`) is converted into an `ExecutionPlan`. From the root down:

- `HashAggregateExec` (1 partition), `AggregateMode::Final`: `SUM(1), GROUP BY status`
- `MergeExec` (1 partition)
- `HashAggregateExec` (2 partitions), `AggregateMode::Partial`: `COUNT(1), GROUP BY status`
- `FilterExec` (2 partitions): `path = "/api/v2/write"`
- `ParquetExec` (2 partitions): `files = file1, file2`
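The Partial → Merge → Final split can be sketched in a few lines. Each partition counts its own groups, the partial results are gathered into one stream, and the final stage combines them by summing the partial counts per group. This matches the `SUM` shown in the Final stage on the slide. The function names and sample partitions are hypothetical. The partitions are also processed sequentially here, whereas an engine would run the partial stage on each partition in parallel.

```rust
use std::collections::HashMap;

/// Partial stage (cf. `AggregateMode::Partial`): COUNT(1) per status within one partition.
fn partial_count(partition: &[&str]) -> HashMap<String, u64> {
    let mut counts = HashMap::new();
    for status in partition {
        *counts.entry(status.to_string()).or_insert(0) += 1;
    }
    counts
}

/// Merge stage (cf. `MergeExec`): gather every partition's partial rows into one stream.
fn merge(partials: Vec<HashMap<String, u64>>) -> Vec<(String, u64)> {
    partials.into_iter().flat_map(|m| m.into_iter()).collect()
}

/// Final stage (cf. `AggregateMode::Final`): SUM the partial counts per status.
fn final_sum(rows: Vec<(String, u64)>) -> HashMap<String, u64> {
    let mut totals = HashMap::new();
    for (status, partial) in rows {
        *totals.entry(status).or_insert(0) += partial;
    }
    totals
}

/// Runs Partial -> Merge -> Final over already-filtered partitions.
fn execute(partitions: &[Vec<&str>]) -> HashMap<String, u64> {
    let partials: Vec<HashMap<String, u64>> =
        partitions.iter().map(|p| partial_count(p)).collect();
    final_sum(merge(partials))
}

fn main() {
    // Made-up filtered `status` values from two partitions (e.g. file1 and file2).
    let partitions = vec![vec!["2XX", "4XX", "2XX"], vec!["2XX", "5XX"]];
    let mut totals: Vec<(String, u64)> = execute(&partitions).into_iter().collect();
    totals.sort();
    println!("{:?}", totals);
}
```

Counting is split this way because a COUNT cannot simply be recomputed over partial results. Each partition's count has to be added up, so the final stage sums rather than counts.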