This document provides an overview of Independent Component Analysis (ICA). Its main points are:

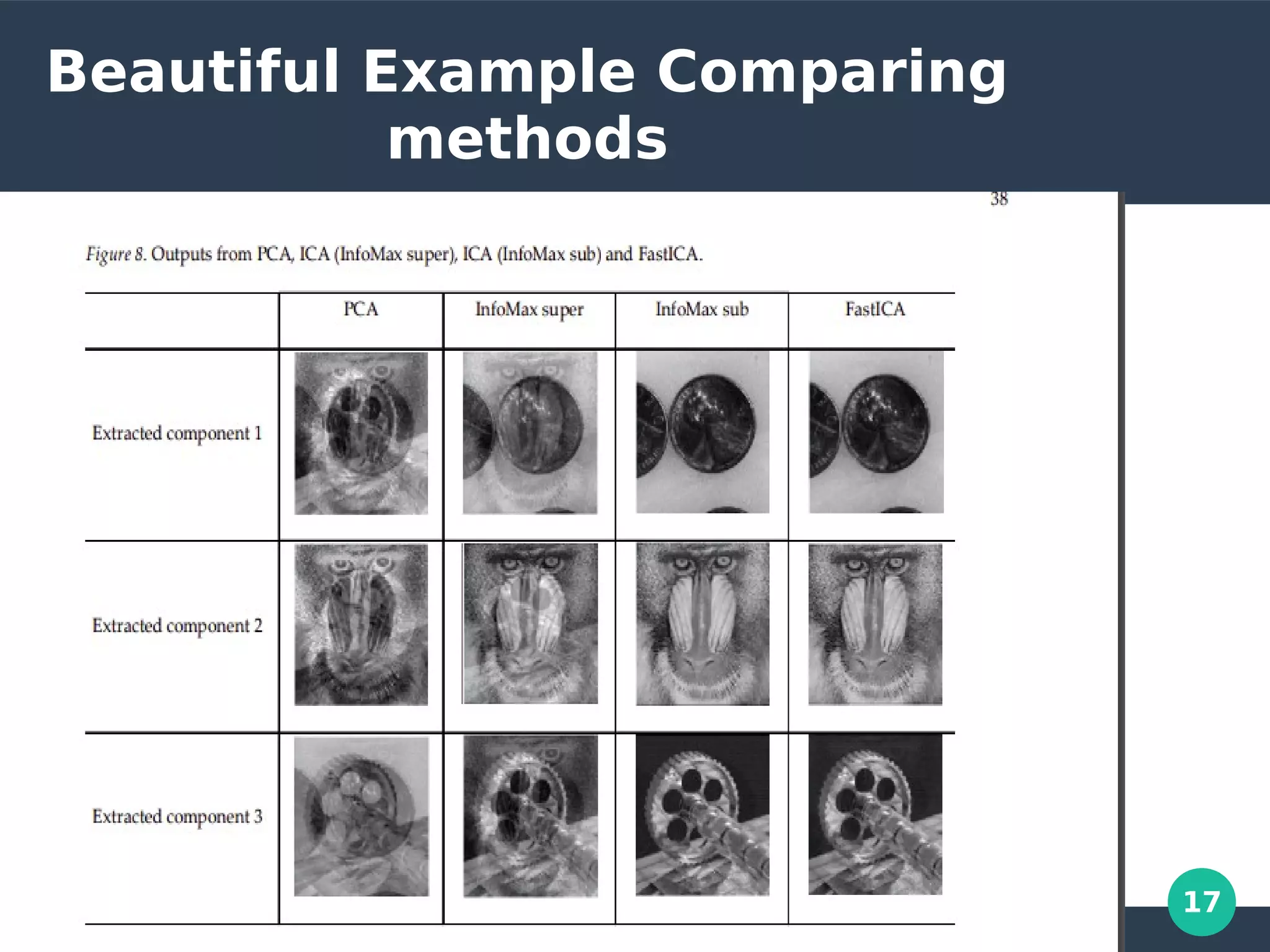

1. ICA is a technique that decomposes linear mixtures of signals into their underlying independent components.

2. The ICA model assumes the observed data is generated by independent source signals that are linearly combined by a mixing matrix. ICA estimates the inverse of this mixing matrix (the unmixing matrix) to retrieve the original source signals.

3. For ICA to work, the source signals must be independent and non-Gaussian. The document demonstrates ICA on toy signals and verifies that the recovered components meet these criteria.
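
The three points above can be illustrated with a minimal NumPy sketch. It is not the document's own demonstration: the toy sources (a sine wave, uniform noise, and Laplace noise), the mixing matrix values, and the helper names `whiten`, `sym_decorrelate`, and `fast_ica` are all assumptions chosen for illustration. The summary does not name an estimation algorithm, so the sketch uses a FastICA-style fixed-point iteration with a tanh nonlinearity as one common choice.

```python
import numpy as np

def whiten(X):
    """Center X (n_signals, n_samples) and rescale it to identity covariance."""
    Xc = X - X.mean(axis=1, keepdims=True)
    cov = Xc @ Xc.T / Xc.shape[1]
    eigvals, eigvecs = np.linalg.eigh(cov)
    K = (eigvecs / np.sqrt(eigvals)).T  # whitening matrix D^{-1/2} E^T
    return K @ Xc, K

def sym_decorrelate(W):
    """Symmetric decorrelation: W <- (W W^T)^{-1/2} W, making W orthogonal."""
    eigvals, eigvecs = np.linalg.eigh(W @ W.T)
    return (eigvecs / np.sqrt(eigvals)) @ eigvecs.T @ W

def fast_ica(X, n_iter=200, tol=1e-6, seed=0):
    """FastICA-style fixed-point iteration (tanh nonlinearity) on whitened data.

    Returns the estimated sources and the unmixing matrix applied to centered X.
    """
    Z, K = whiten(X)
    n, m = Z.shape
    rng = np.random.default_rng(seed)
    W = sym_decorrelate(rng.standard_normal((n, n)))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        # Row-wise update: E[z g(w^T z)] - E[g'(w^T z)] w, with g = tanh
        W_new = G @ Z.T / m - np.diag((1.0 - G**2).mean(axis=1)) @ W
        W_new = sym_decorrelate(W_new)
        # Converged when every row only changes by a sign
        delta = np.max(np.abs(np.abs(np.diag(W_new @ W.T)) - 1.0))
        W = W_new
        if delta < tol:
            break
    return W @ Z, W @ K

# Toy sources (assumed for illustration): independent and non-Gaussian
rng = np.random.default_rng(42)
n_samples = 5000
t = np.linspace(0.0, 8.0, n_samples)
S = np.vstack([
    np.sin(2.0 * t),                       # sine wave
    rng.uniform(-1.0, 1.0, n_samples),     # uniform noise
    rng.laplace(0.0, 1.0, n_samples),      # Laplace noise
])

# Hypothetical mixing matrix; the observations are linear mixtures X = A S
A = np.array([[1.0, 1.0, 1.0],
              [0.5, 2.0, 1.0],
              [1.5, 1.0, 2.0]])
X = A @ S

S_hat, W_unmix = fast_ica(X)

# Absolute correlation between each true source (rows) and each estimate (columns)
match = np.abs(np.corrcoef(S, S_hat)[:3, 3:])
print(np.round(match, 2))
```

If the separation worked, each row of `match` should contain one entry close to 1. The large entries need not lie on the diagonal, and the estimates may be sign-flipped or rescaled, because ICA recovers sources only up to their order and scale.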