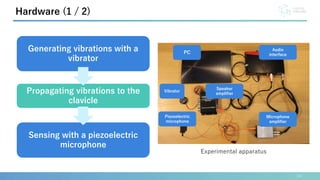

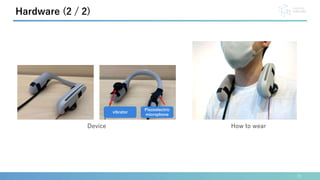

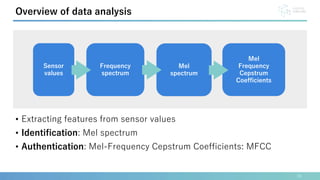

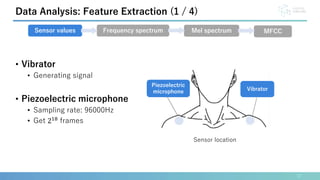

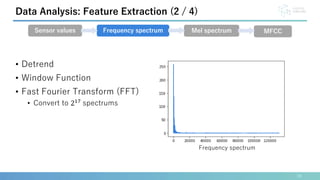

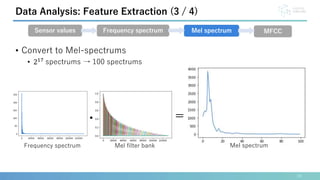

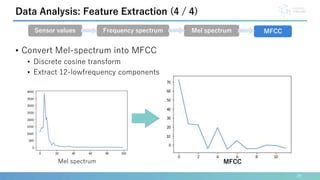

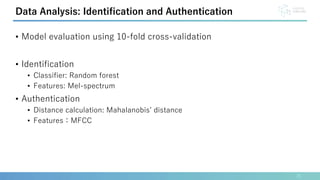

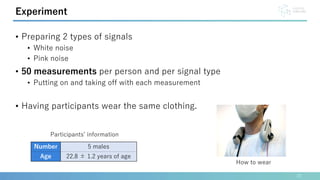

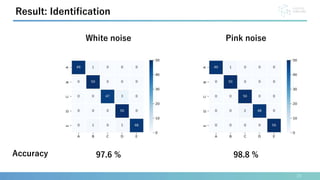

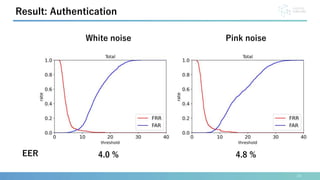

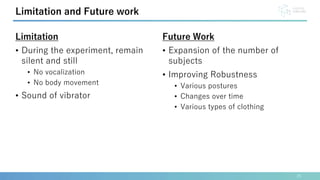

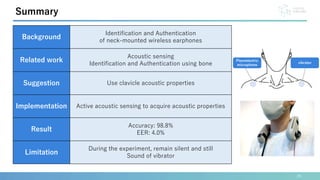

This document presents a method for identification and authentication that uses the acoustic properties of the clavicle, aimed at neck-mounted devices such as wireless earphones. The proposed system uses a vibrator and a piezoelectric microphone and achieves a user-identification accuracy of 98.8% and an equal error rate (EER) of 4.0% for authentication. One limitation is that participants had to remain silent and still during the experiments; future work includes improving robustness and recruiting a more diverse set of subjects.
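The 4.0% figure above is an equal error rate (EER): the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). The following is a minimal sketch of how an EER can be estimated from matching scores, assuming that a higher score means a closer match to the claimed user. The score lists are made up for illustration and are not data from this study.

```python
def equal_error_rate(genuine, impostor):
    """Estimate the EER by sweeping a threshold over every observed score.

    Assumption: a higher score means the sample is more likely to come from
    the claimed user; a claim is accepted when score >= threshold.
    """
    best_gap, best_eer = float("inf"), 1.0
    for t in sorted(set(genuine) | set(impostor)):
        # FAR: fraction of impostor attempts that would be accepted.
        far = sum(s >= t for s in impostor) / len(impostor)
        # FRR: fraction of genuine attempts that would be rejected.
        frr = sum(s < t for s in genuine) / len(genuine)
        # Keep the threshold where FAR and FRR are closest; report their mean.
        if abs(far - frr) < best_gap:
            best_gap, best_eer = abs(far - frr), (far + frr) / 2
    return best_eer


# Hypothetical scores, for illustration only.
genuine_scores = [0.9, 0.8, 0.85, 0.4]
impostor_scores = [0.1, 0.2, 0.5, 0.3]
print(equal_error_rate(genuine_scores, impostor_scores))  # prints 0.25
```

Sweeping only the observed scores gives a step-wise estimate. With few samples, FAR and FRR rarely cross exactly, so the sketch reports their mean at the closest point.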

![2

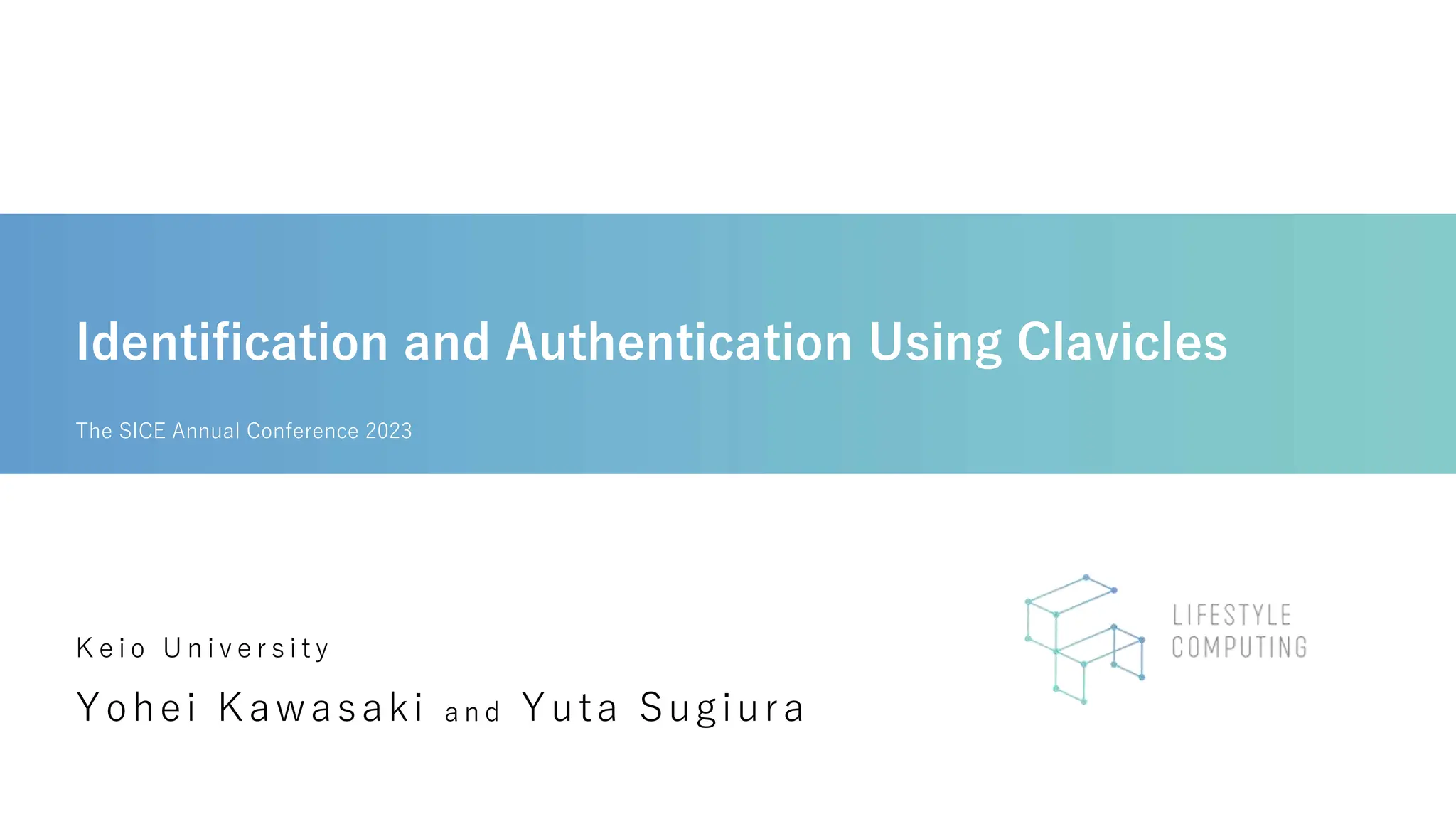

Background

• Use of neck-mounted device

THINKLET [3]

wireless earphones [1] wireless speaker [2]](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-2-320.jpg)

![5

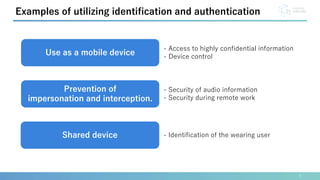

Related Work: Acoustic Sensing

Passive Acoustic Sensing

Teeth Gesture recognition

[Sun, 2021]

Touch location estimation

[Paradiso, 2002]

Contact method estimation

[Murray-Smith, 2008]

Active Acoustic Sensing

Hand pose estimation

[Kato, 2016]

Grasp posture estimation

[Ono, 2013]

Touch location estimation on arm

[Mujibiya, 2013]

Acoustic barcodes

[Harrison, 2012]

Gesture recognition on clothing

[Amesaka, 2022]

• Use only microphone • Use microphone and vibrator](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-5-320.jpg)
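Active acoustic sensing drives a vibrator with a known signal and records, at the microphone, how the body or object alters it. The following is a minimal sketch of one common way to summarize that alteration. It assumes a linear sine sweep as the excitation and a per-bin magnitude ratio as the frequency-response estimate. The actual signal, sampling rate, and features used in this work are not given here. `simulated_body` is a hypothetical FIR filter that stands in for the vibrator-to-microphone path, and the pure-Python DFT keeps the example free of dependencies.

```python
import cmath
import math


def linear_sweep(f0, f1, duration, fs):
    """Linear sine sweep (chirp) from f0 Hz to f1 Hz; a hypothetical excitation signal."""
    n = round(duration * fs)
    rate = (f1 - f0) / duration  # sweep rate in Hz per second
    return [
        math.sin(2 * math.pi * (f0 * (i / fs) + 0.5 * rate * (i / fs) ** 2))
        for i in range(n)
    ]


def dft(x):
    """Naive O(n^2) discrete Fourier transform; adequate for short illustrative signals."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def frequency_response(sent, received, eps=1e-12):
    """Per-bin magnitude ratio |R(f)| / |S(f)| from 0 Hz up to the Nyquist bin."""
    spec_s, spec_r = dft(sent), dft(received)
    half = len(sent) // 2 + 1
    return [abs(spec_r[k]) / (abs(spec_s[k]) + eps) for k in range(half)]


def simulated_body(signal, taps):
    """Hypothetical stand-in for the vibrator -> body -> microphone path (an FIR filter)."""
    return [
        sum(taps[j] * signal[i - j] for j in range(len(taps)) if i - j >= 0)
        for i in range(len(signal))
    ]


fs = 1000  # sampling rate in Hz (assumed)
sweep = linear_sweep(50, 400, 0.256, fs)
mic = simulated_body(sweep, [1.0, 0.5, 0.25])
response = frequency_response(sweep, mic)
print(len(response))  # 129 bins from 0 Hz to 500 Hz
```

In practice a library FFT would replace the naive DFT. The ratio is also unreliable in bins where the sweep carries little energy, which is why the `eps` guard is included.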

![• Measuring bone acoustic properties using active acoustic sensing

7

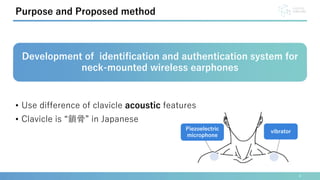

Related Work: identification and authentication using the

acoustic properties of bones

Bone of nose acoustic properties

[Isobe, 2021]

Skull acoustic properties

[Schneegass, 2016]

Bone of wrist acoustic properties

[Sehrt, 2022]](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-7-320.jpg)
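These works identify a person by comparing a measured acoustic signature with signatures enrolled beforehand. The following is a minimal sketch of that matching step. It assumes that each measurement has already been reduced to a fixed-length feature vector and uses a 1-nearest-neighbour matcher with a hypothetical distance threshold. The cited works and this study may use different features and classifiers, and the user names, vectors, and threshold are illustrative only.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_distance(sample, user_templates):
    """Distance from the sample to the closest enrolled template of one user."""
    return min(euclidean(sample, t) for t in user_templates)


def identify(sample, templates):
    """Identification: return the enrolled user with the closest template (1-NN)."""
    return min(templates, key=lambda user: nearest_distance(sample, templates[user]))


def authenticate(sample, claimed_user, templates, threshold):
    """Authentication: accept the claim if the claimed user's closest template is near enough."""
    return nearest_distance(sample, templates[claimed_user]) <= threshold


# Hypothetical enrolled feature vectors (e.g. response magnitudes at a few frequencies).
templates = {
    "user_a": [[1.0, 0.2, 0.1], [0.9, 0.25, 0.1]],
    "user_b": [[0.2, 0.8, 0.6]],
}
probe = [0.95, 0.2, 0.12]
print(identify(probe, templates))  # prints user_a
print(authenticate(probe, "user_b", templates, threshold=0.1))  # prints False
```

Identification asks "who is this?" across all enrolled users. Authentication only checks one claimed identity against a threshold, and sweeping that threshold trades false acceptances against false rejections.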

![• Individual differences in ear acoustics

• Depends on internal environment

• Some use ultrasound

8

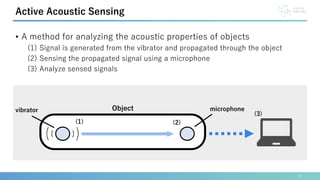

Related Work: Identification and Authentication in

Hearable Devices

[Gao, 2019]

[Grabham, 2013]

[Akkermans, 2005]](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-8-320.jpg)

References

• [Paradiso, 2002] Joseph A. Paradiso, Che King Leo, Nisha Checka, and Kaijen Hsiao. 2002. Passive acoustic knock tracking for interactive windows. In CHI '02 Extended Abstracts on Human Factors in Computing Systems (CHI EA '02). Association for Computing Machinery, New York, NY, USA, 732–733.

• [Murray-Smith, 2008] Roderick Murray-Smith, John Williamson, Stephen Hughes, and Torben Quaade. 2008. Stane: synthesized surfaces for tactile input. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '08). Association for Computing Machinery, New York, NY, USA, 1299–1302.

• [Harrison, 2012] Chris Harrison, Robert Xiao, and Scott Hudson. 2012. Acoustic barcodes: passive, durable and inexpensive notched identification tags. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology (UIST '12). Association for Computing Machinery, New York, NY, USA, 563–568.

• [Sun, 2021] Wei Sun, Franklin Mingzhe Li, Benjamin Steeper, Songlin Xu, Feng Tian, and Cheng Zhang. 2021. TeethTap: Recognizing Discrete Teeth Gestures Using Motion and Acoustic Sensing on an Earpiece. In 26th International Conference on Intelligent User Interfaces (IUI '21). Association for Computing Machinery, New York, NY, USA, 161–169.

• [Ono, 2013] Makoto Ono, Buntarou Shizuki, and Jiro Tanaka. 2013. Touch & activate: adding interactivity to existing objects using active acoustic sensing. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology (UIST '13). Association for Computing Machinery, New York, NY, USA, 31–40.

• [Kato, 2016] Hiroyuki Kato and Kentaro Takemura. 2016. Hand pose estimation based on active bone-conducted sound sensing. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct (UbiComp '16). Association for Computing Machinery, New York, NY, USA, 109–112.

• [Mujibiya, 2013] Adiyan Mujibiya, Xiang Cao, Desney S. Tan, Dan Morris, Shwetak N. Patel, and Jun Rekimoto. 2013. The sound of touch: on-body touch and gesture sensing based on transdermal ultrasound propagation. In Proceedings of the 2013 ACM International Conference on Interactive Tabletops and Surfaces (ITS '13). Association for Computing Machinery, New York, NY, USA, 189–198.

• [Amesaka, 2022] Takashi Amesaka, Hiroki Watanabe, Masanori Sugimoto, and Buntarou Shizuki. 2022. Gesture Recognition Method Using Acoustic Sensing on Usual Garment. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 6, 2, Article 41 (July 2022), 27 pages.

• [Schneegass, 2016] Stefan Schneegass, Youssef Oualil, and Andreas Bulling. 2016. SkullConduct: Biometric User Identification on Eyewear Computers Using Bone Conduction Through the Skull. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI '16). Association for Computing Machinery, New York, NY, USA, 1379–1384.

![Slide 27: References](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-27-320.jpg)

References (continued)

• [Isobe, 2021] Kaito Isobe and Kazuya Murao. 2021. Person-identification Method using Active Acoustic Sensing Applied to Nose. In 2021 International Symposium on Wearable Computers (ISWC '21). Association for Computing Machinery, New York, NY, USA, 138–140.

• [Sehrt, 2022] Jessica Sehrt, Feng Yi Lu, Leonard Husske, Anton Roesler, and Valentin Schwind. 2022. WristConduct: Biometric User Authentication Using Bone Conduction at the Wrist. In Proceedings of Mensch und Computer 2022 (MuC '22). Association for Computing Machinery, New York, NY, USA, 371–375.

• [Akkermans, 2005] A. H. M. Akkermans, T. A. M. Kevenaar, and D. W. E. Schobben. 2005. Acoustic ear recognition for person identification. In Fourth IEEE Workshop on Automatic Identification Advanced Technologies (AutoID '05), 219–223.

• [Grabham, 2013] N. J. Grabham et al. 2013. An Evaluation of Otoacoustic Emissions as a Biometric. IEEE Transactions on Information Forensics and Security 8, 1 (Jan. 2013), 174–183.

• [Gao, 2019] Yang Gao, Wei Wang, Vir V. Phoha, Wei Sun, and Zhanpeng Jin. 2019. EarEcho: Using Ear Canal Echo for Wearable Authentication. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 3, 3, Article 81 (September 2019), 24 pages.

• [1] +Style, "Shut out the surrounding noise and create a music space all your own: dyplay ANC 30 Bluetooth Headphone - +Style Shopping", https://plusstyle.jp/shopping/item?id=364 (Accessed on 2022/11/10)

• [2] SONY, "SRS-WS1 | Active Speakers/Neck Speakers | Sony", https://www.sony.jp/active-speaker/products/SRS-WS1/ (Accessed on 2022/11/24)

• [3] Fairy Devices, "Connected Worker Solution", https://fairydevices.jp/cws (Accessed on 2022/11/24)

![Slide 28: References](https://image.slidesharecdn.com/sice2023kawasakinomemo-231030070047-8f127344/85/Identification-and-Authentication-Using-Clavicles-28-320.jpg)