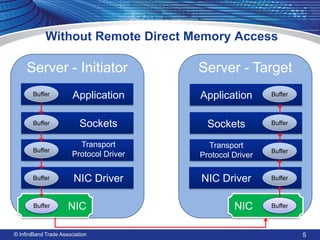

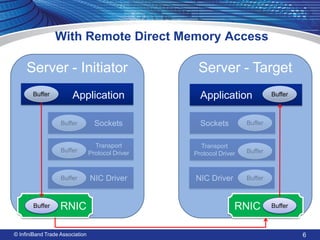

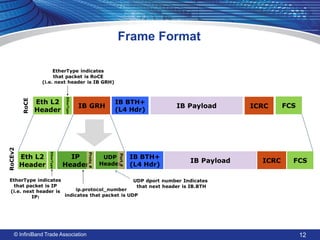

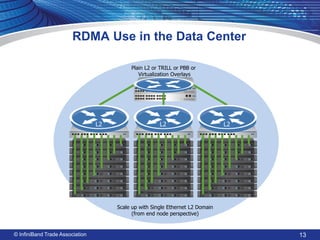

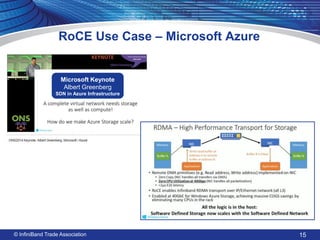

The InfiniBand Trade Association (IBTA) has released the RoCEv2 specification, which extends RDMA over Converged Ethernet (RoCE) for enterprise data centers that are growing toward hyperscale. RoCE uses RDMA to move data with low latency and minimal CPU involvement, which improves network performance. RoCEv2 adds Layer 3 routing by carrying RoCE traffic in IP/UDP headers, so packets can cross IP subnets while remaining transparent to applications and the underlying network infrastructure.
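
To make the UDP encapsulation concrete, here is a minimal Python sketch of how a RoCEv2 packet might be laid out above the IP layer: a UDP header addressed to port 4791 (the port registered for RoCEv2), followed by the 12-byte InfiniBand Base Transport Header (BTH), the payload, and a trailing 4-byte invariant CRC. The helper names `build_bth` and `build_udp_segment` are hypothetical, and the sketch makes simplifying assumptions: the ICRC is a zero-filled placeholder rather than a computed value, the UDP checksum is left at zero, and the queue pair and sequence numbers are arbitrary. It illustrates the layering only and is not a working RoCE implementation.

```python
import struct

ROCEV2_UDP_DPORT = 4791   # UDP destination port registered for RoCEv2
RC_SEND_ONLY = 0x04       # BTH opcode: Reliable Connection, SEND Only


def build_bth(opcode, dest_qp, psn, pkey=0xFFFF, ack_req=False):
    """Pack a 12-byte Base Transport Header (hypothetical helper).

    Layout: opcode (8 bits) | SE, M, PadCnt, TVer (8 bits) | P_Key (16 bits)
            reserved (8 bits) | destination QP (24 bits)
            AckReq (1 bit) | reserved (7 bits) | PSN (24 bits)
    """
    flags = 0  # assumption: SE=0, M=0, PadCnt=0, TVer=0
    qp_word = dest_qp & 0xFFFFFF
    psn_word = ((1 if ack_req else 0) << 31) | (psn & 0xFFFFFF)
    return struct.pack("!BBHII", opcode, flags, pkey, qp_word, psn_word)


def build_udp_segment(src_port, bth, payload):
    """Wrap BTH + payload in a UDP header (hypothetical helper)."""
    icrc_placeholder = b"\x00" * 4  # assumption: real ICRC not computed here
    body = bth + payload + icrc_placeholder
    length = 8 + len(body)          # UDP length covers header and body
    checksum = 0                    # assumption: checksum left unset
    header = struct.pack("!HHHH", src_port, ROCEV2_UDP_DPORT, length, checksum)
    return header + body


bth = build_bth(RC_SEND_ONLY, dest_qp=0x12, psn=1)
segment = build_udp_segment(49152, bth, b"hello")
```

Because everything from the BTH onward rides inside an ordinary UDP datagram, standard IP routers can forward the packet without any knowledge of RDMA, which is what lets RoCEv2 traffic be routed at Layer 3.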