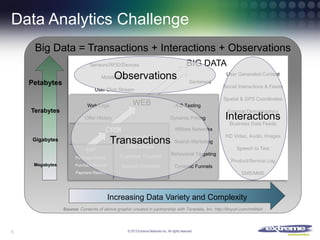

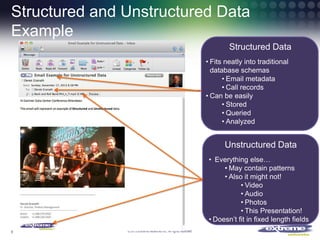

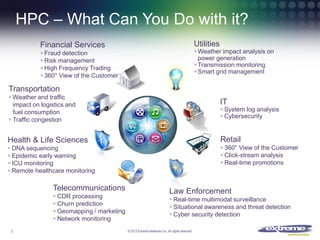

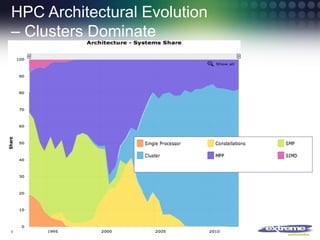

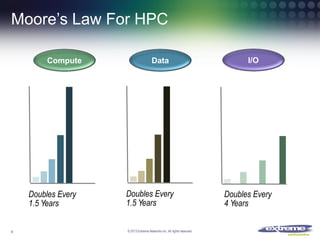

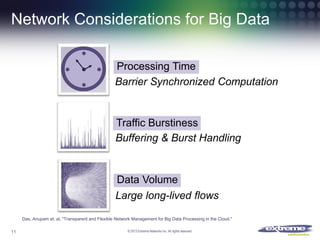

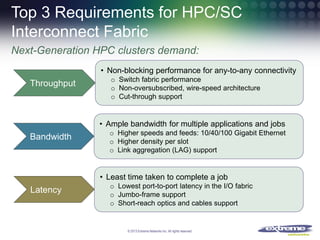

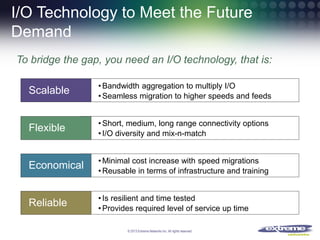

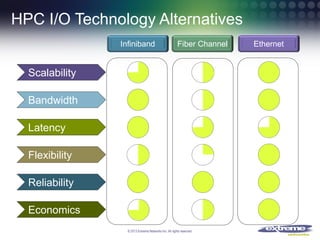

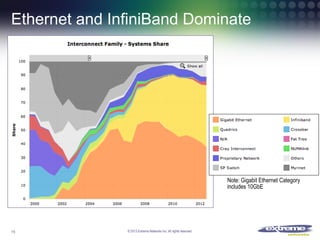

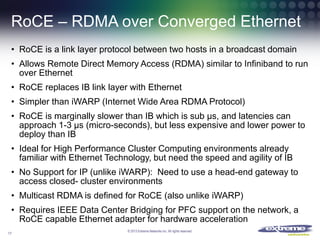

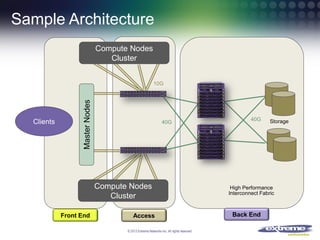

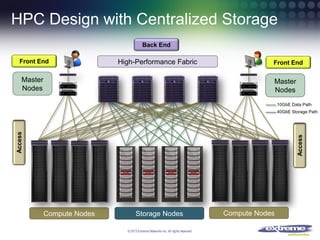

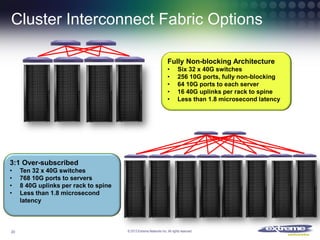

The document discusses the transformation of data into actionable information and the challenges of interconnecting high-performance computing (HPC) systems using Ethernet. It highlights the exponential growth of data and the need for efficient architectures, and it presents Ethernet as a viable interconnect because it is more scalable, flexible, and cost-effective than alternatives such as InfiniBand. It also introduces RDMA over Converged Ethernet (RoCE) as a promising technology that brings remote direct memory access to Ethernet networks to meet the demands of high-speed computing.