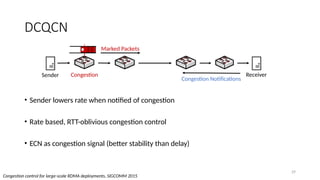

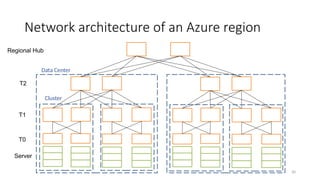

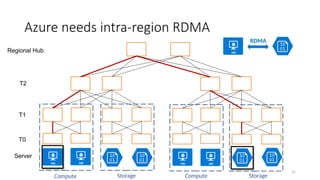

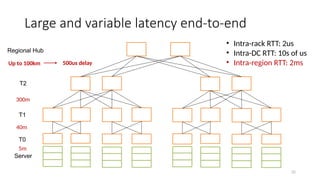

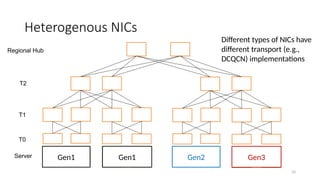

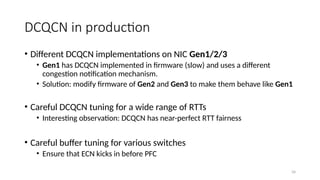

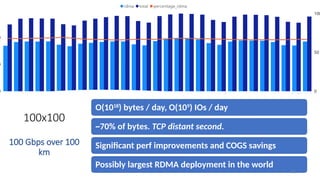

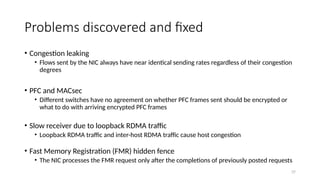

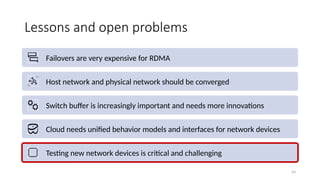

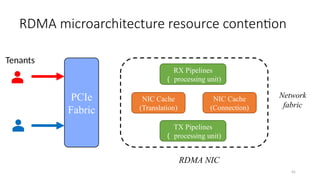

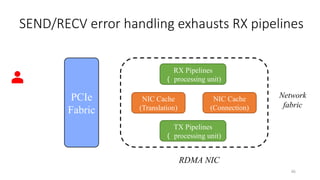

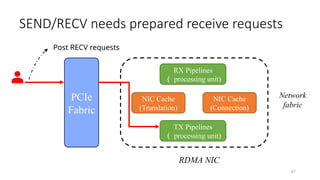

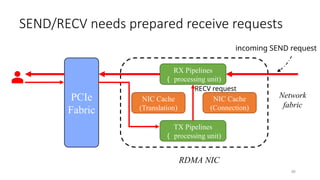

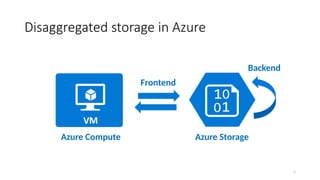

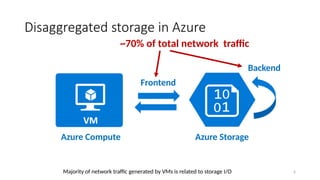

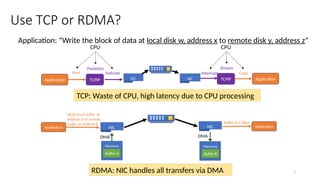

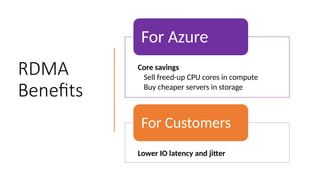

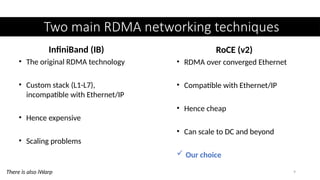

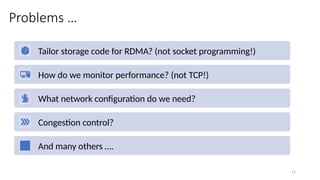

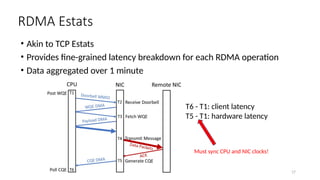

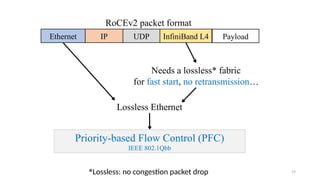

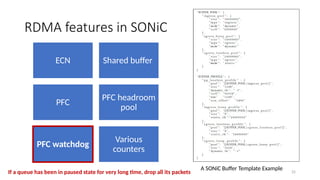

The document discusses the use of RDMA (Remote Direct Memory Access) for Azure storage traffic, emphasizing its efficiency over traditional TCP. RDMA enables speeds exceeding 100 Gbps at reduced latency and accounts for approximately 70% of Azure's total network traffic. The document also highlights innovations, configuration challenges, and the need for congestion control in large-scale deployments.

![27

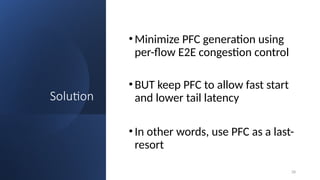

Why do we need congestion control?

PFC operates on a per-port/priority basis, not per-flow.

It can spread congestion, is unfair, and can lead to deadlocks!

Zhu et al. [SIGCOMM’15], Guo et al. [SIGCOMM’16], Hu et al. [HotNets’17]](https://image.slidesharecdn.com/rdma-apnet-240927163852-837d668e/85/RDMA-at-Hyperscale-Experience-and-Future-Directions-27-320.jpg)
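The unfairness point can be illustrated with a toy simulation. This is a minimal sketch under simplifying assumptions, not a model of any real switch: one upstream sender alternates packets between two flows that share a single ingress port and priority, flow A heads to a slow (congested) egress, and flow B heads to an idle one. The function name `simulate`, the thresholds `xoff`/`xon`, and the drain rates are all hypothetical values chosen for illustration. Because the pause decision is made on the shared port/priority buffer rather than per flow, flow B is throttled along with flow A.

```python
from collections import deque


def simulate(ticks=1000, xoff=8, xon=4, slow_drain_every=4, use_pfc=True):
    """Toy model of PFC on one ingress (port, priority) shared by two flows.

    Flow A exits through a congested egress that drains one packet every
    `slow_drain_every` ticks; flow B exits through an idle egress that
    drains one packet per tick. All parameters are illustrative assumptions.
    Returns the number of packets delivered per flow.
    """
    egress = {"A": deque(), "B": deque()}
    delivered = {"A": 0, "B": 0}
    paused = False   # PAUSE state applies to the whole port/priority
    next_flow = "A"  # the upstream sender alternates between the two flows

    for t in range(ticks):
        # Drain the egress queues: B is uncongested, A is slow.
        if egress["B"]:
            egress["B"].popleft()
            delivered["B"] += 1
        if egress["A"] and t % slow_drain_every == 0:
            egress["A"].popleft()
            delivered["A"] += 1

        # Buffer accounting is per (ingress port, priority), not per flow.
        buffered = len(egress["A"]) + len(egress["B"])
        if use_pfc:
            if buffered >= xoff:
                paused = True    # send PAUSE upstream
            elif buffered <= xon:
                paused = False   # send RESUME upstream

        # A paused sender stops BOTH flows, including the innocent flow B.
        if not paused:
            egress[next_flow].append(t)
            next_flow = "B" if next_flow == "A" else "A"

    return delivered


if __name__ == "__main__":
    print("with PFC:   ", simulate(use_pfc=True))
    print("without PFC:", simulate(use_pfc=False))
```

In this sketch, flow B delivers far fewer packets when PFC is enabled even though its own egress is never congested. Disabling PFC restores flow B's throughput, but the buffer for flow A then grows without bound, which a real lossless fabric cannot allow. Per-flow congestion control is meant to slow only the flow that causes the congestion.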