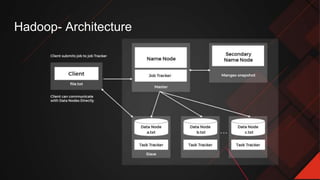

The document provides an overview of high performance computing (HPC), including frameworks like Hadoop and cloud tools from AWS. It discusses the architecture of Hadoop and the advantages of using AWS services such as Amazon EMR, S3, and Redshift for big data processing. A case study on analyzing RNA samples using these technologies highlights their efficiency and cost-effectiveness.

![aws emr create-cluster

--name "Test cluster"

--ami-version 2.4

--applications Name=Hive Name=Pig

--use-default-roles --ec2-attributes KeyName=myKey

--instance-groups

InstanceGroupType=MASTER,InstanceCount=1,InstanceType=m3.xlarge

InstanceGroupType=CORE,InstanceCount=2,InstanceType=m3.xlarge

--steps Type=PIG,Name="Pig Program",ActionOnFailure=CONTINUE,

Args=[-f,s3://mybucket/scripts/pigscript.pig,-p,

INPUT=s3://mybucket/inputdata/,-p,

OUTPUT=s3://mybucket/outputdata/,

$INPUT=s3://mybucket/inputdata/,

$OUTPUT=s3://mybucket/outputdata/]](https://image.slidesharecdn.com/copyofhighperformancecomputinghpcincloud-170707144543/85/High-Performance-Computing-HPC-in-cloud-15-320.jpg)