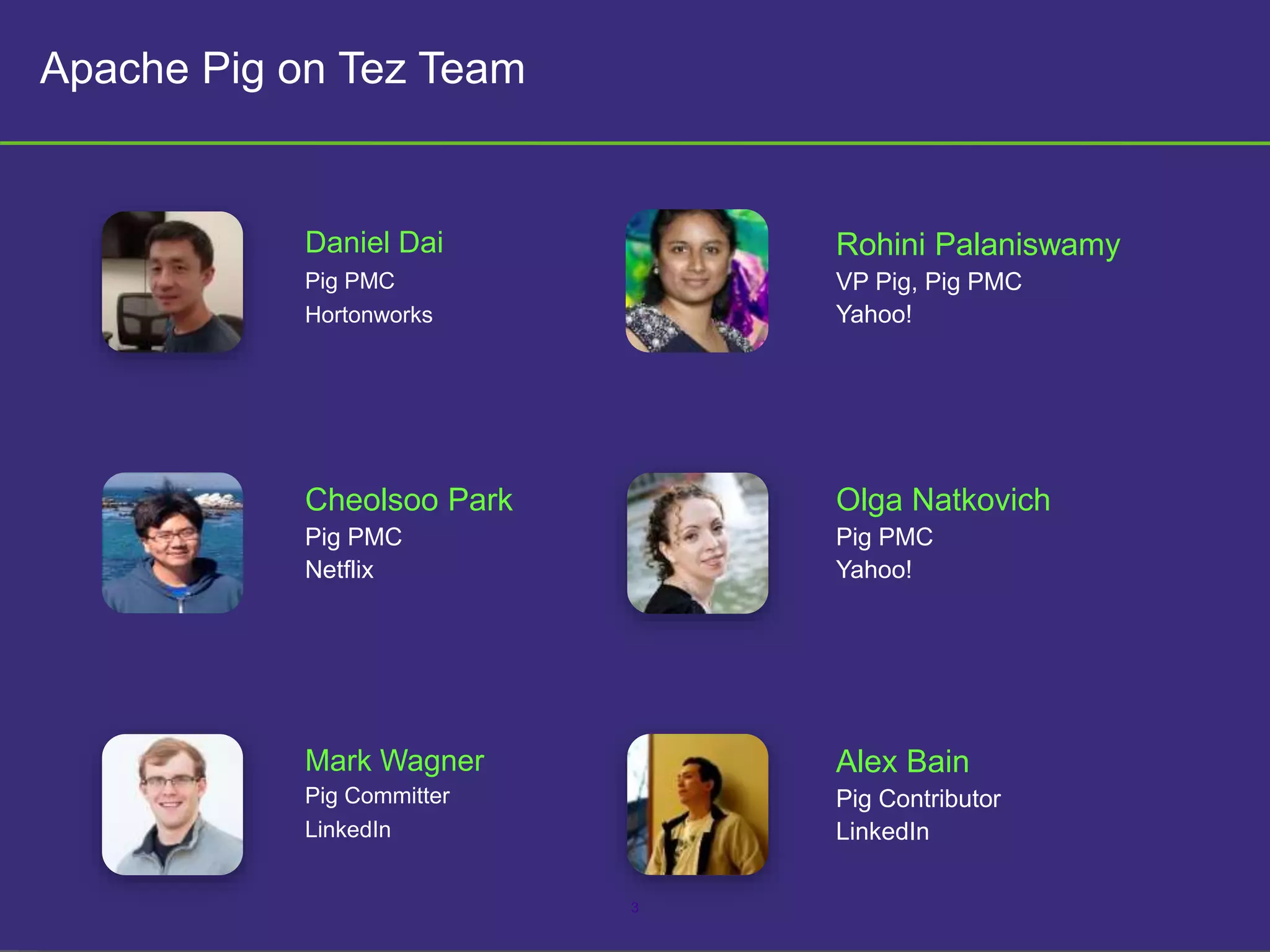

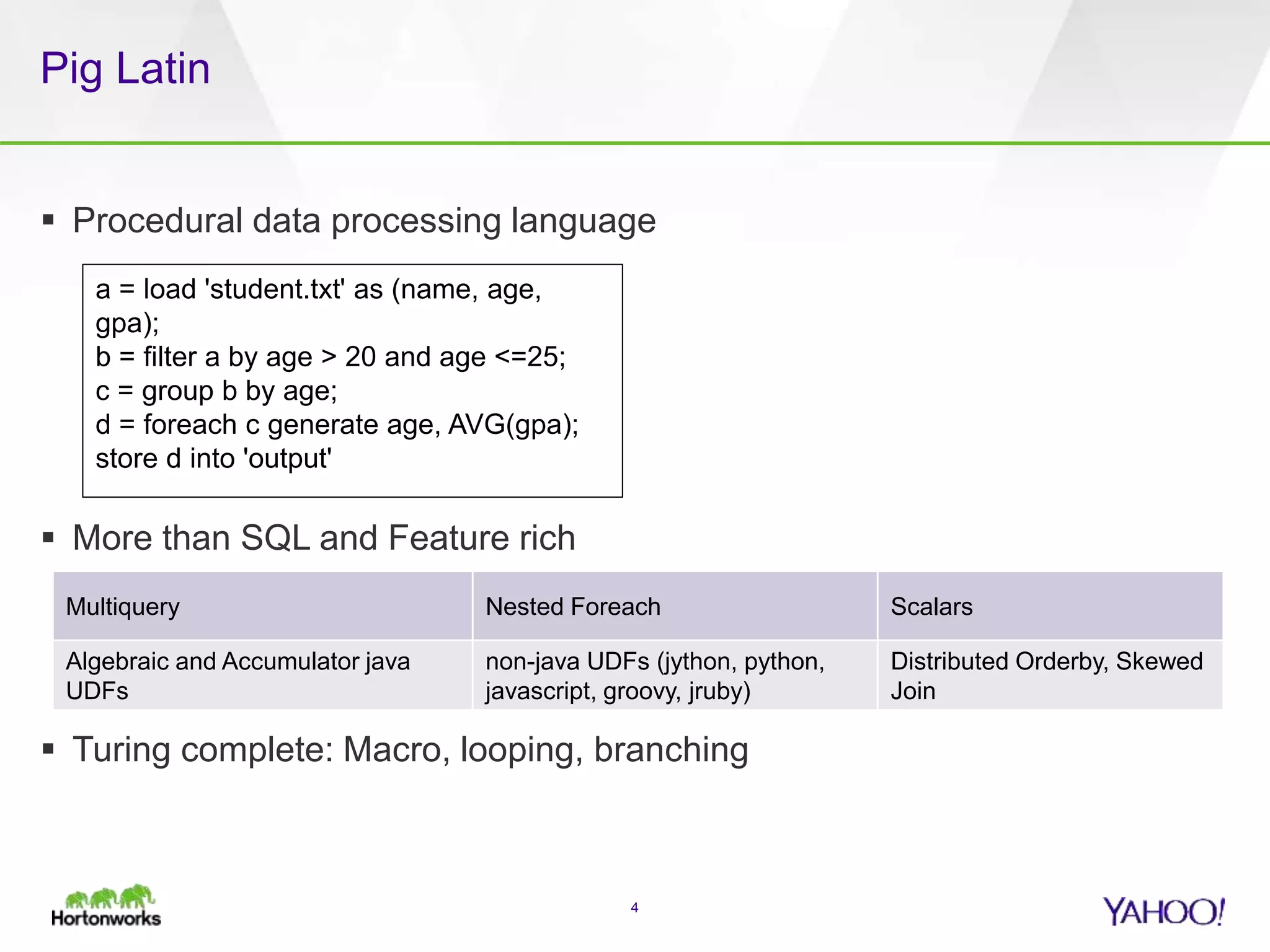

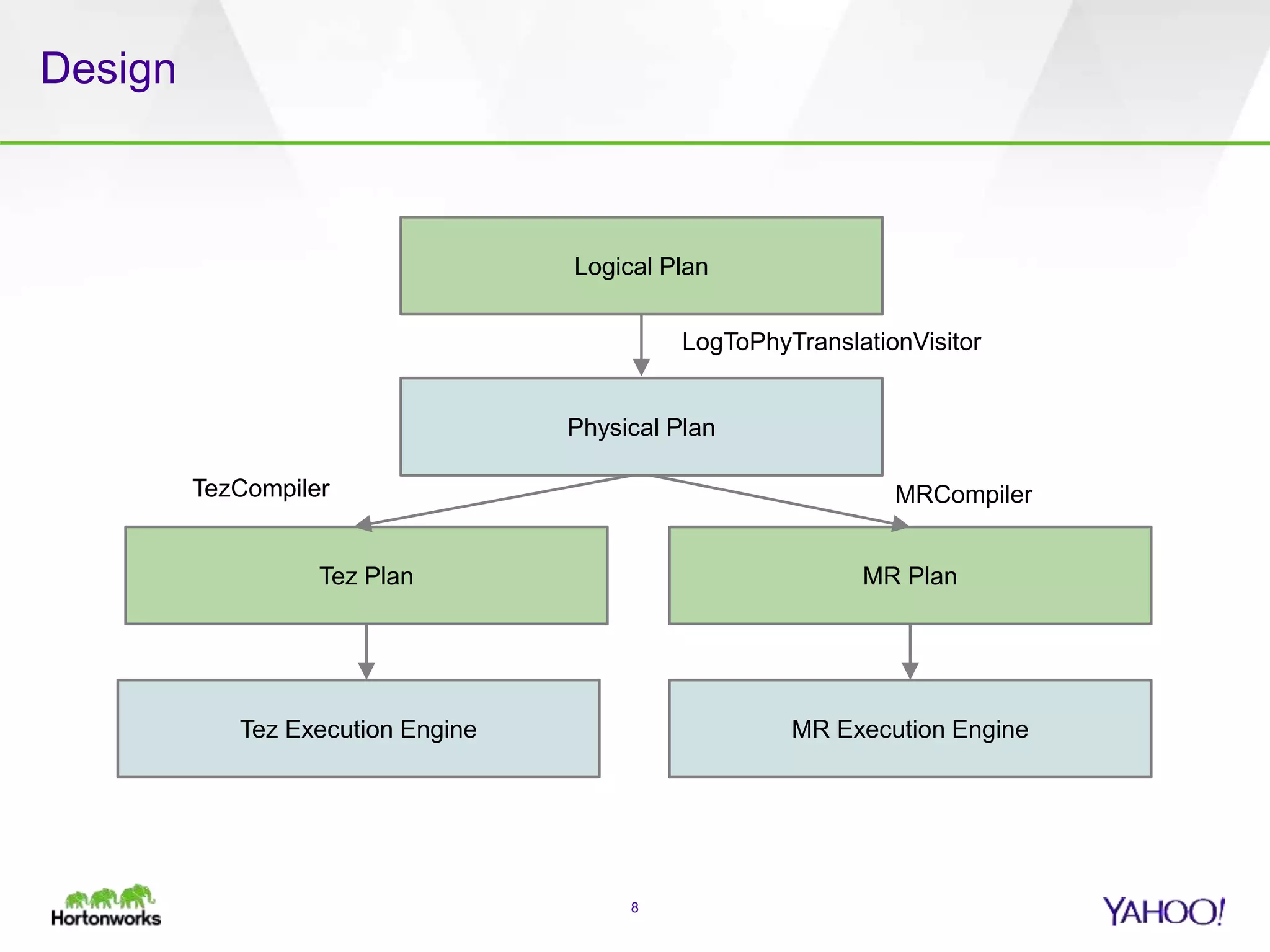

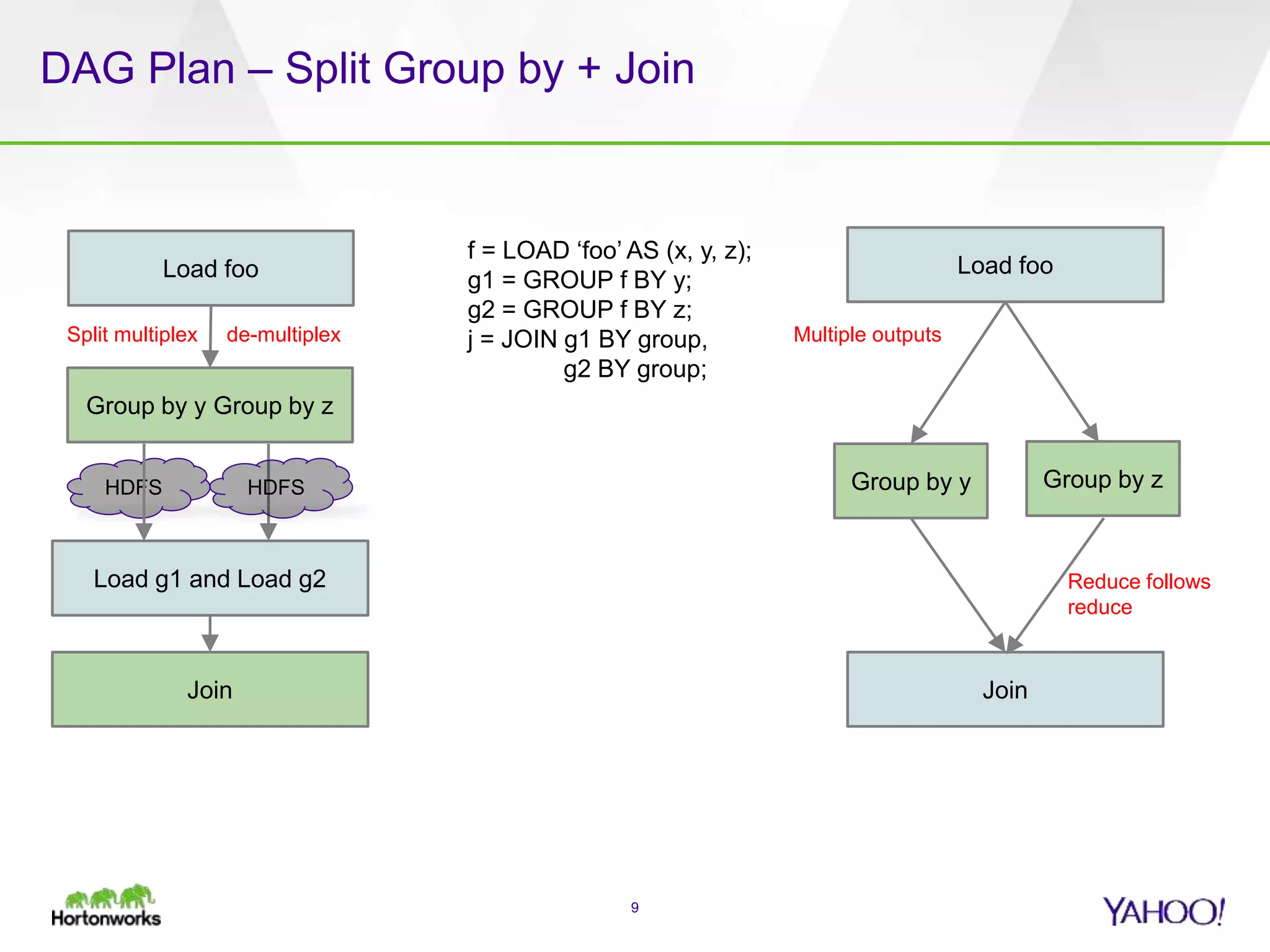

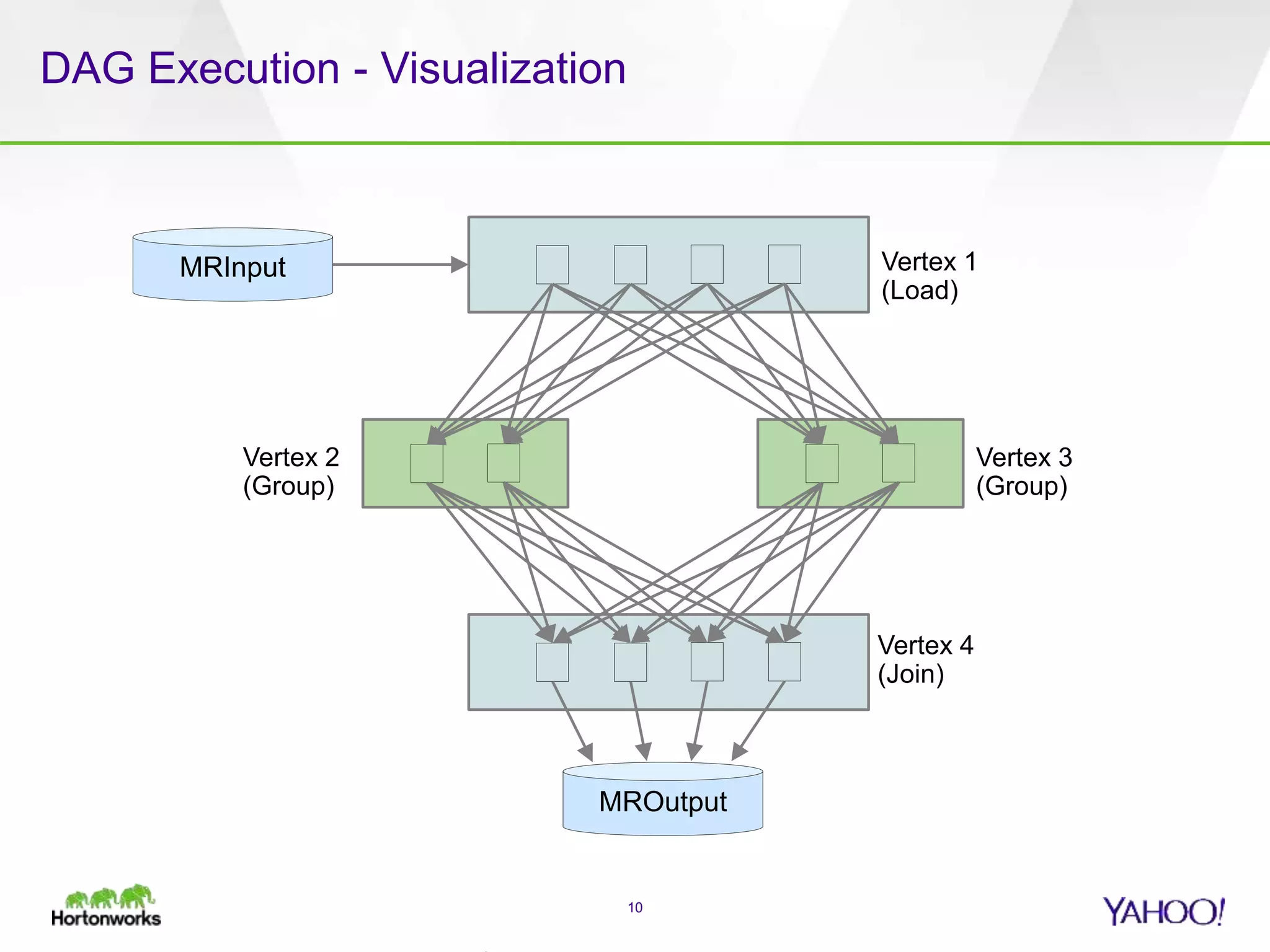

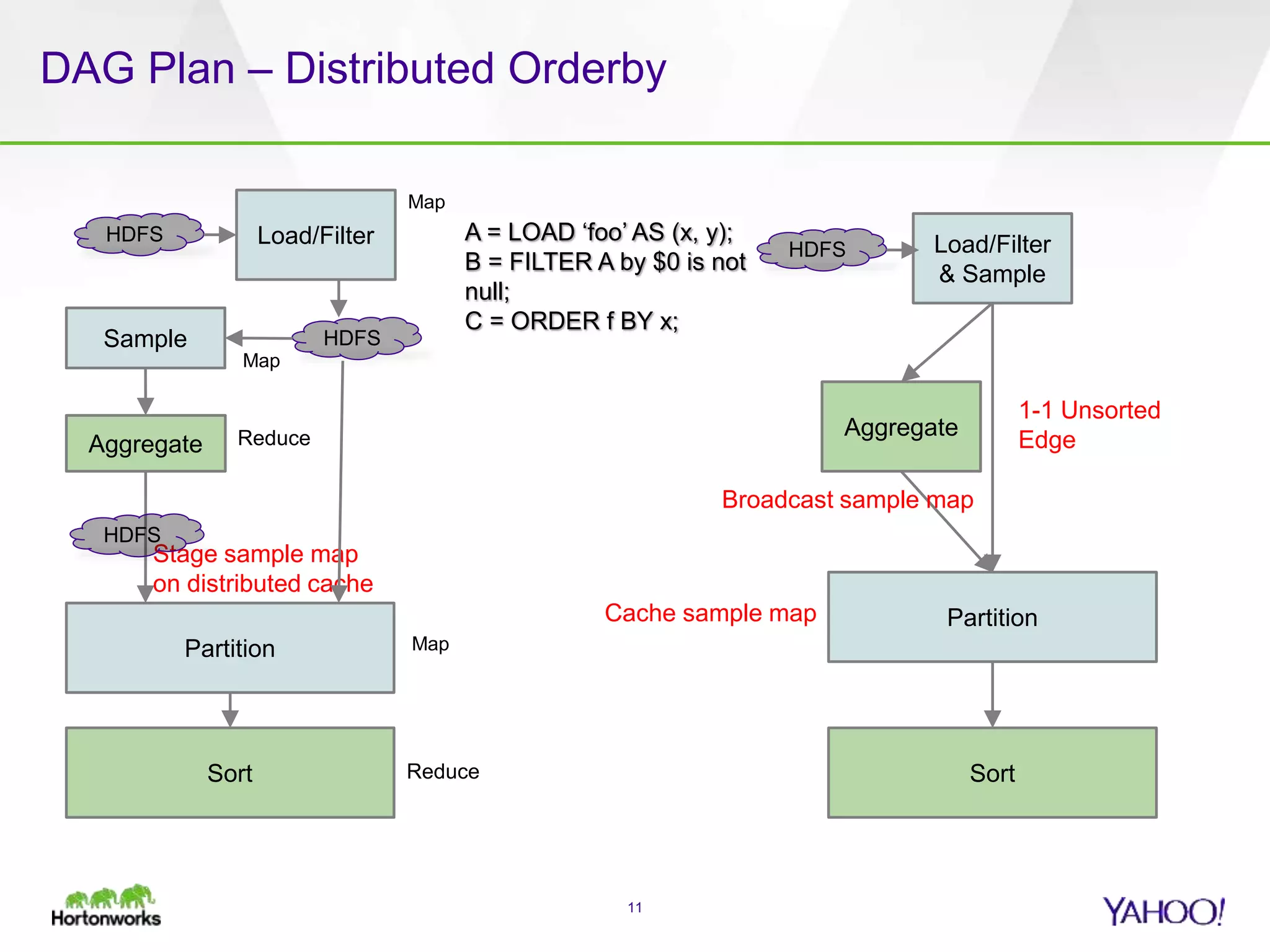

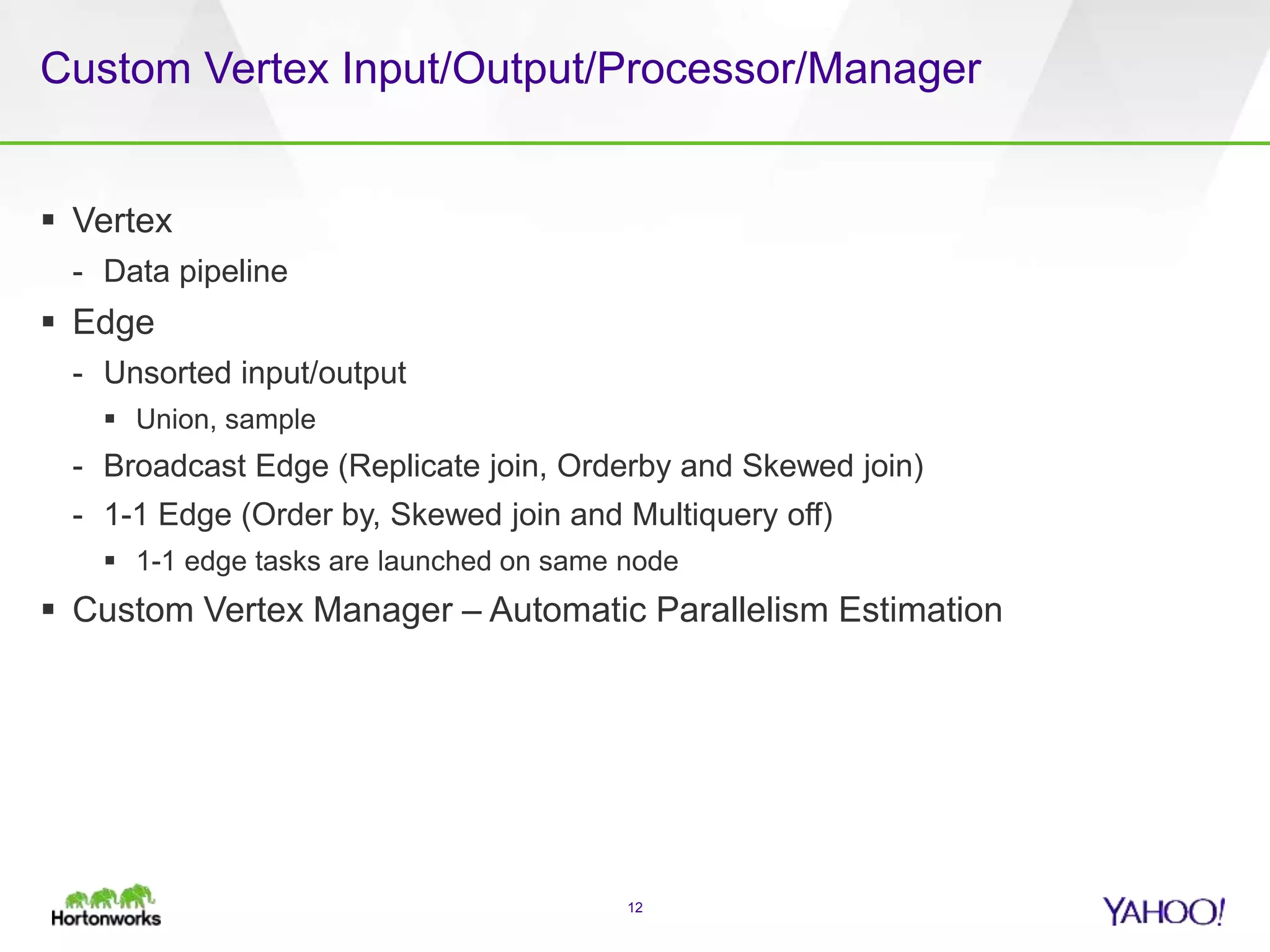

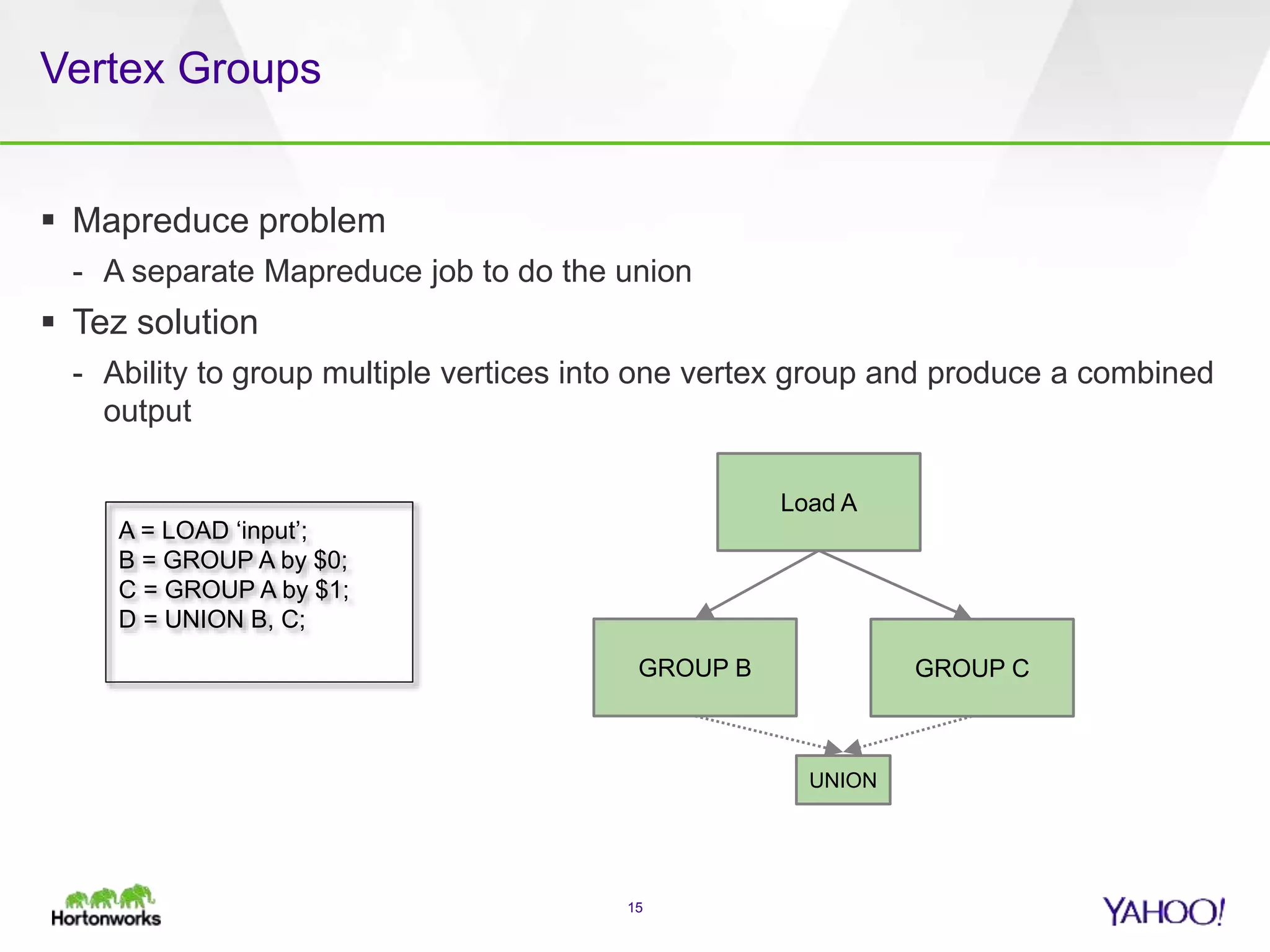

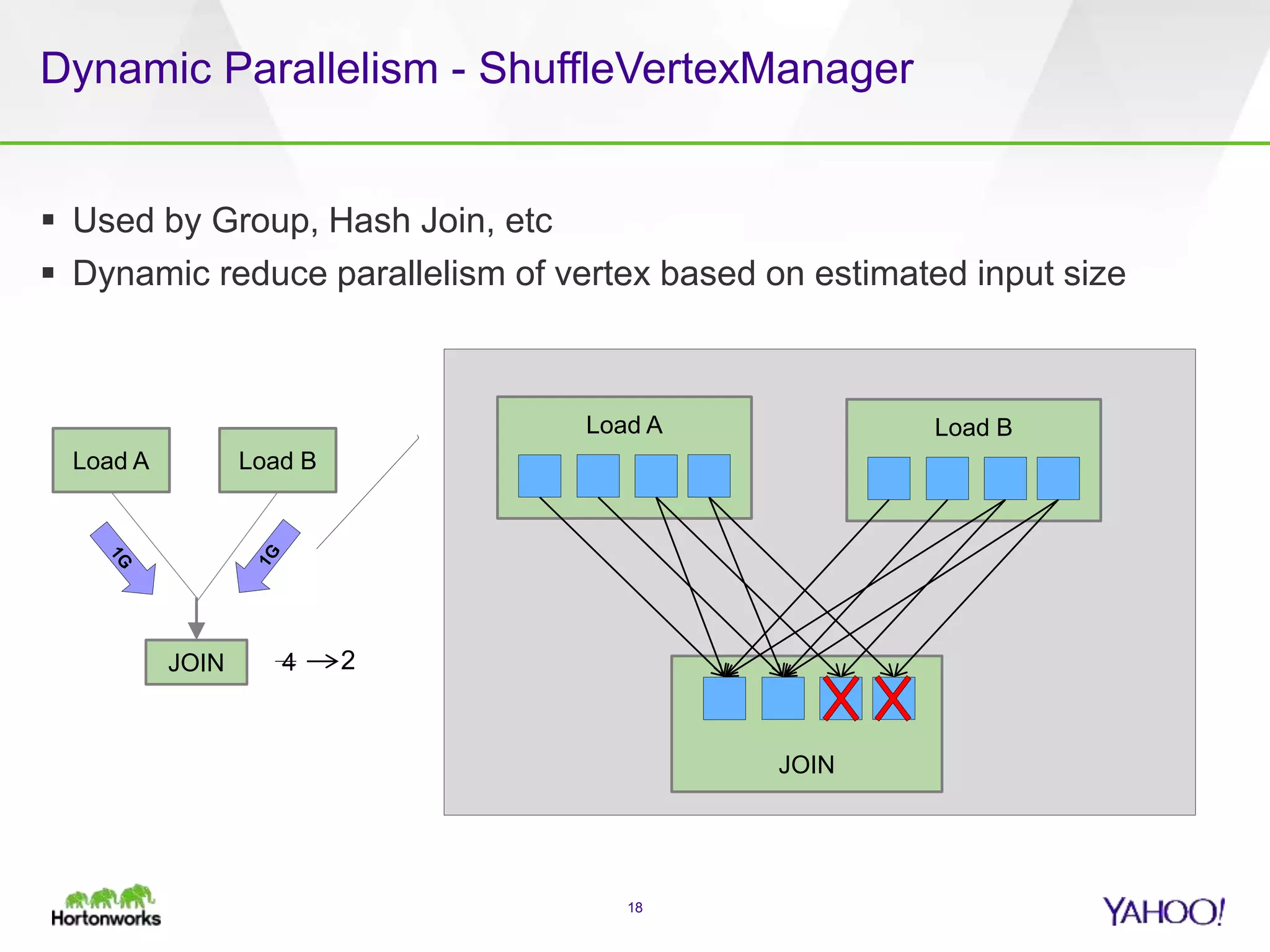

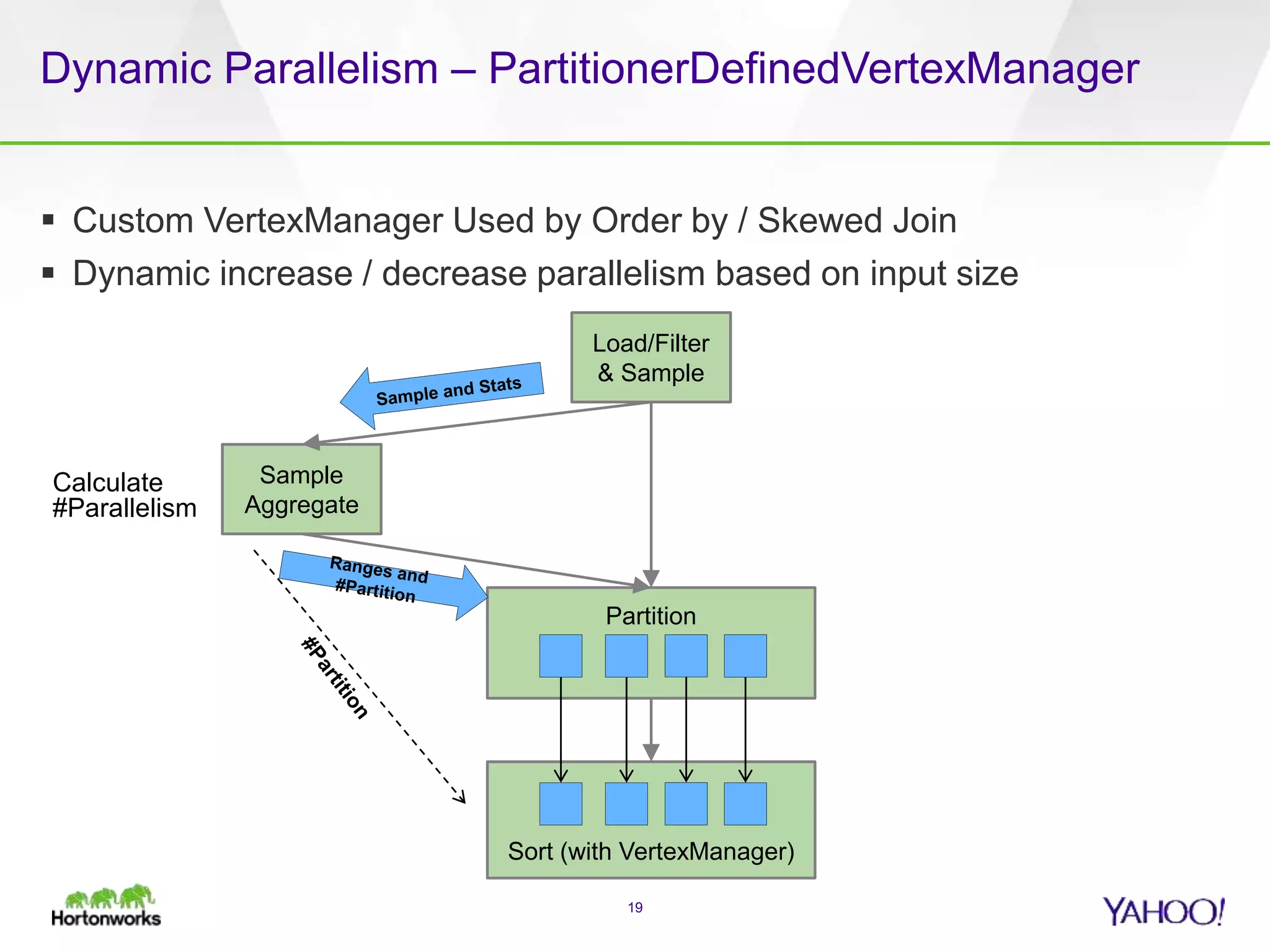

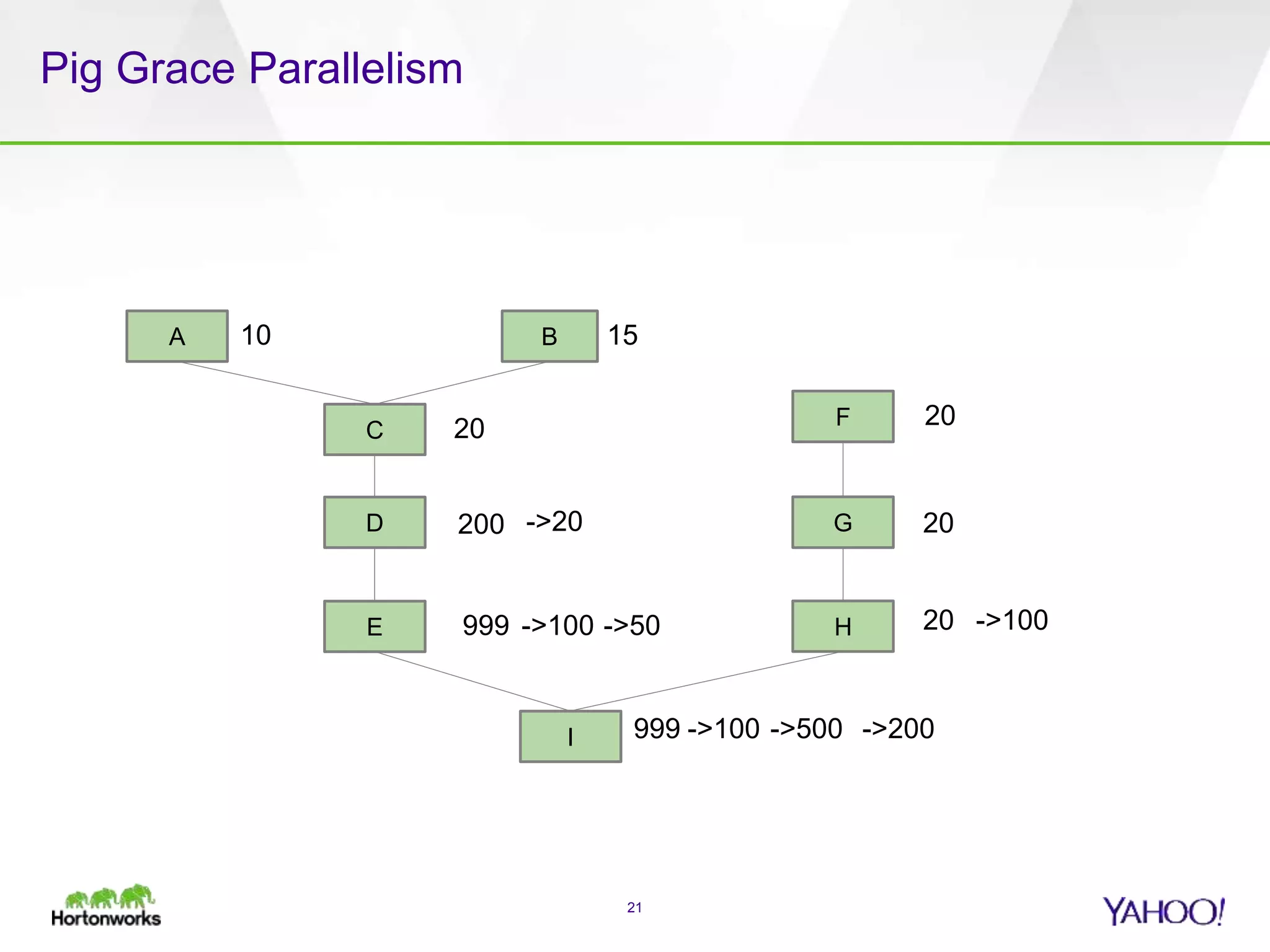

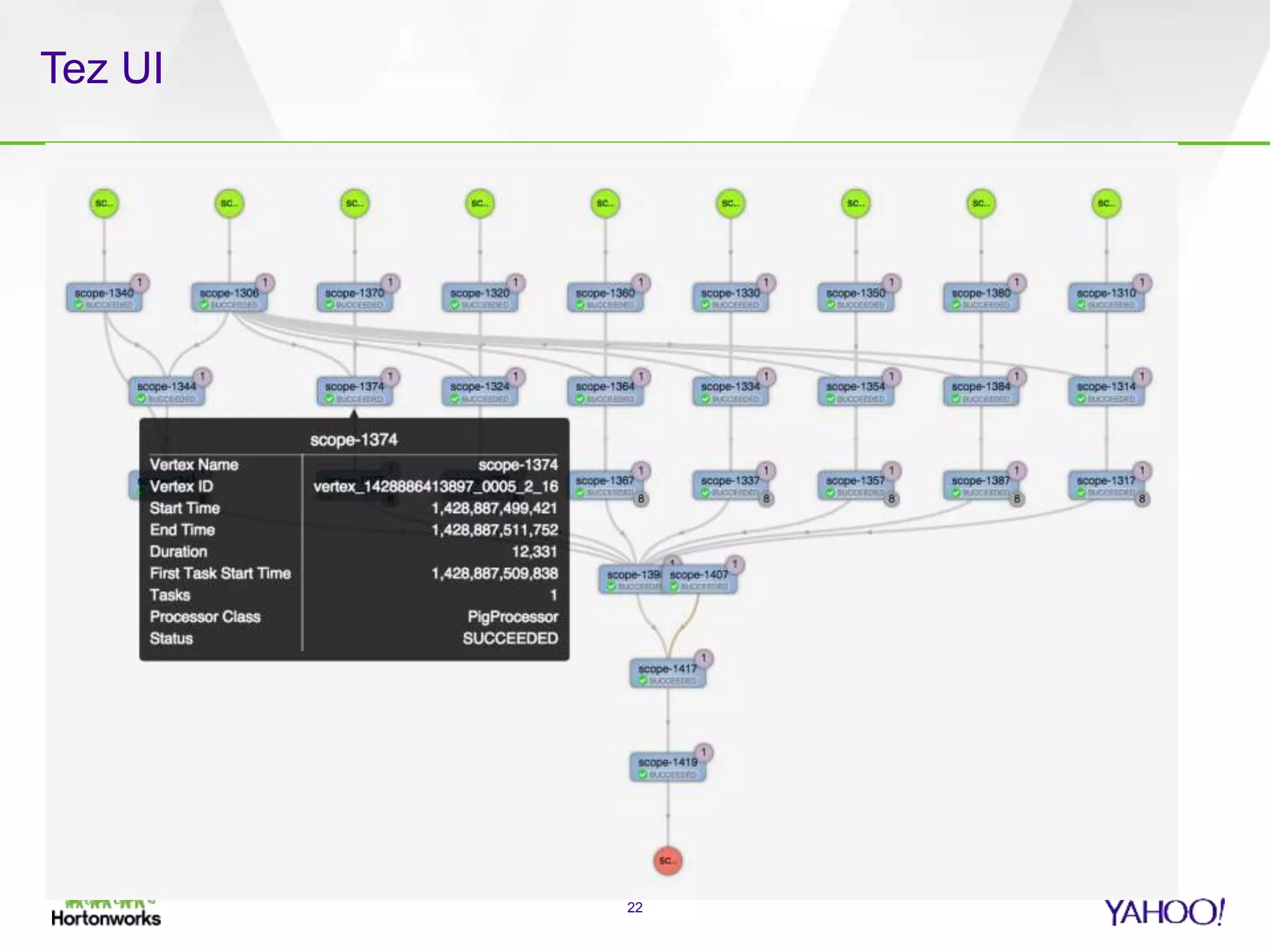

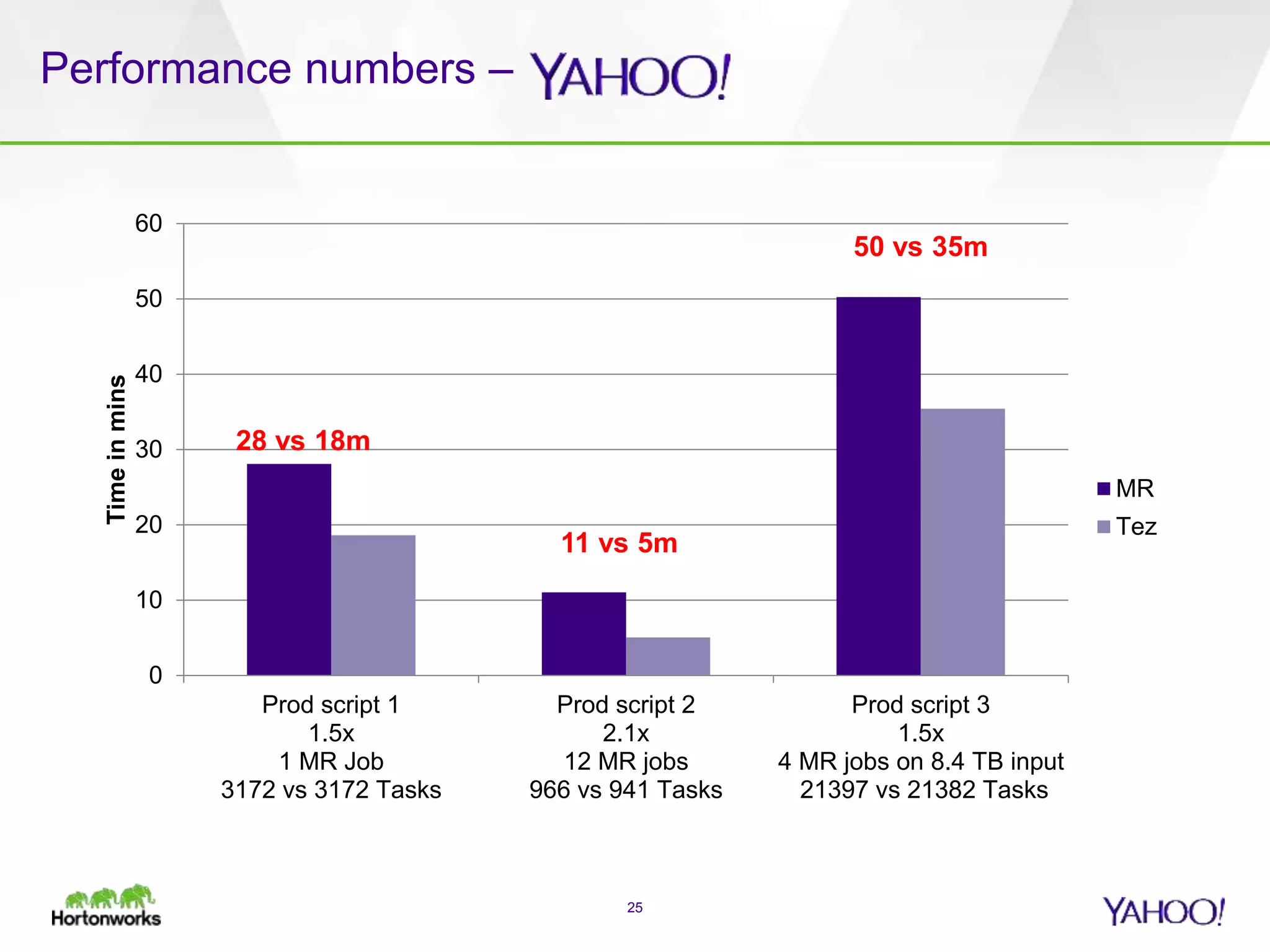

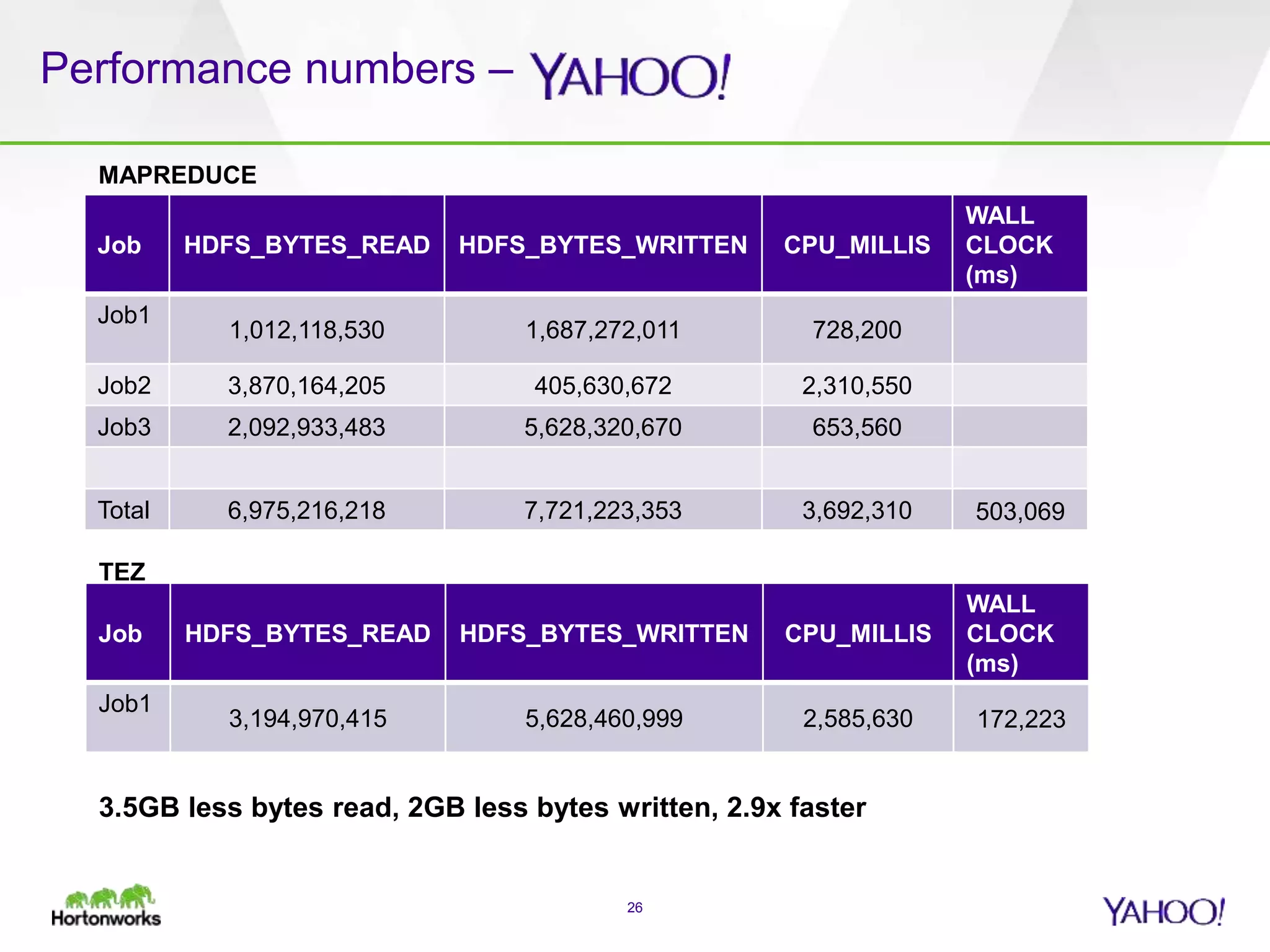

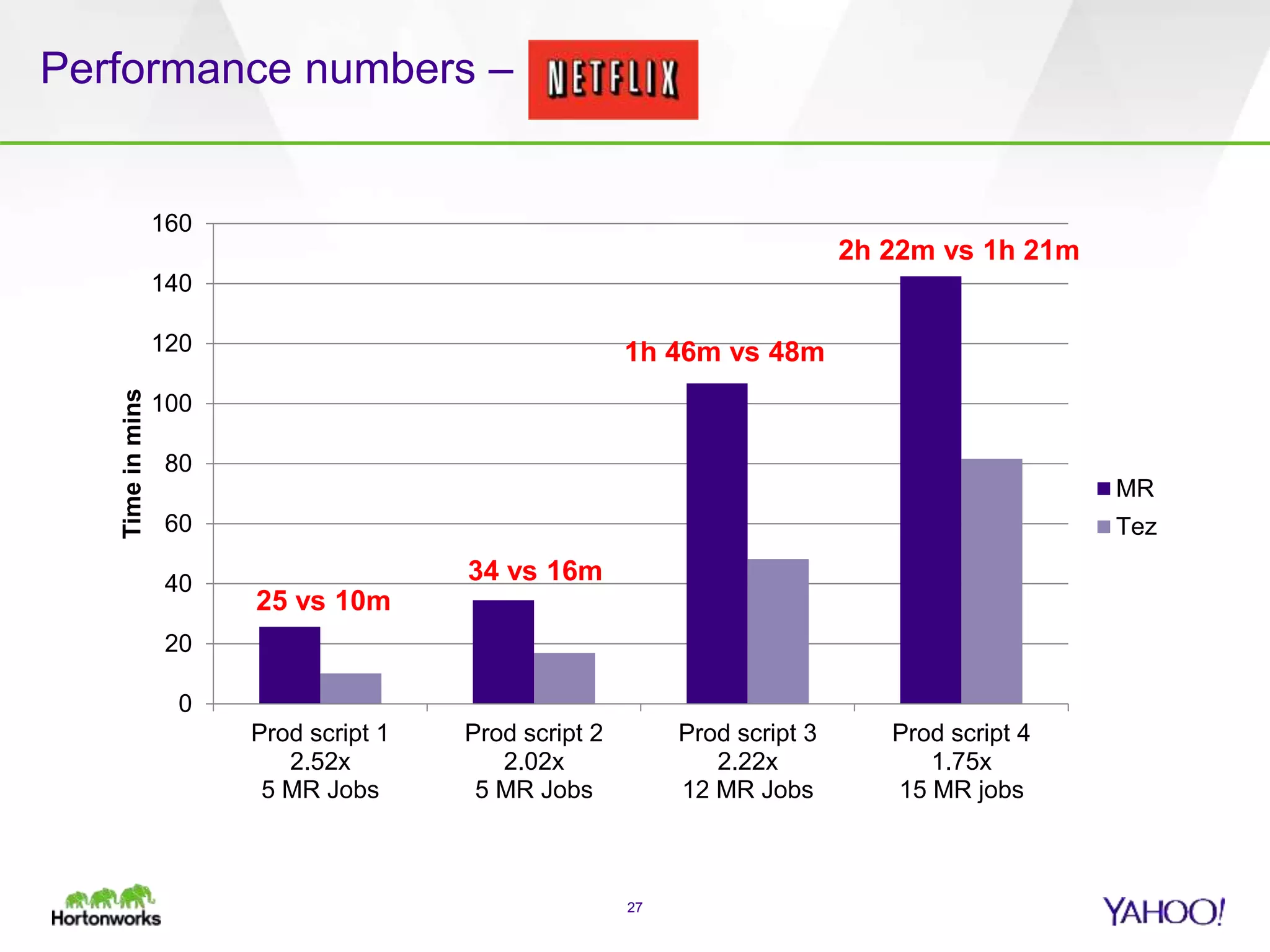

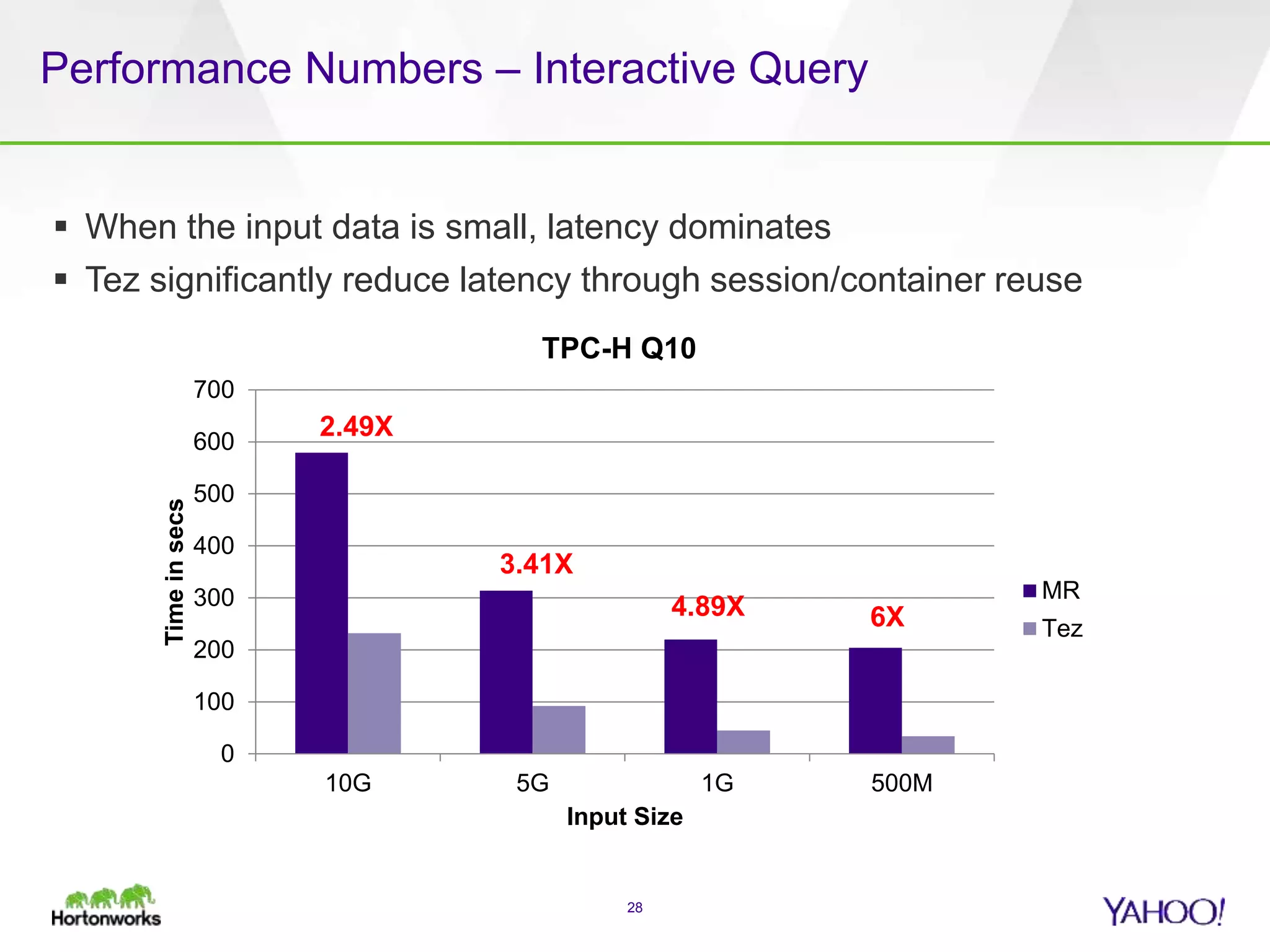

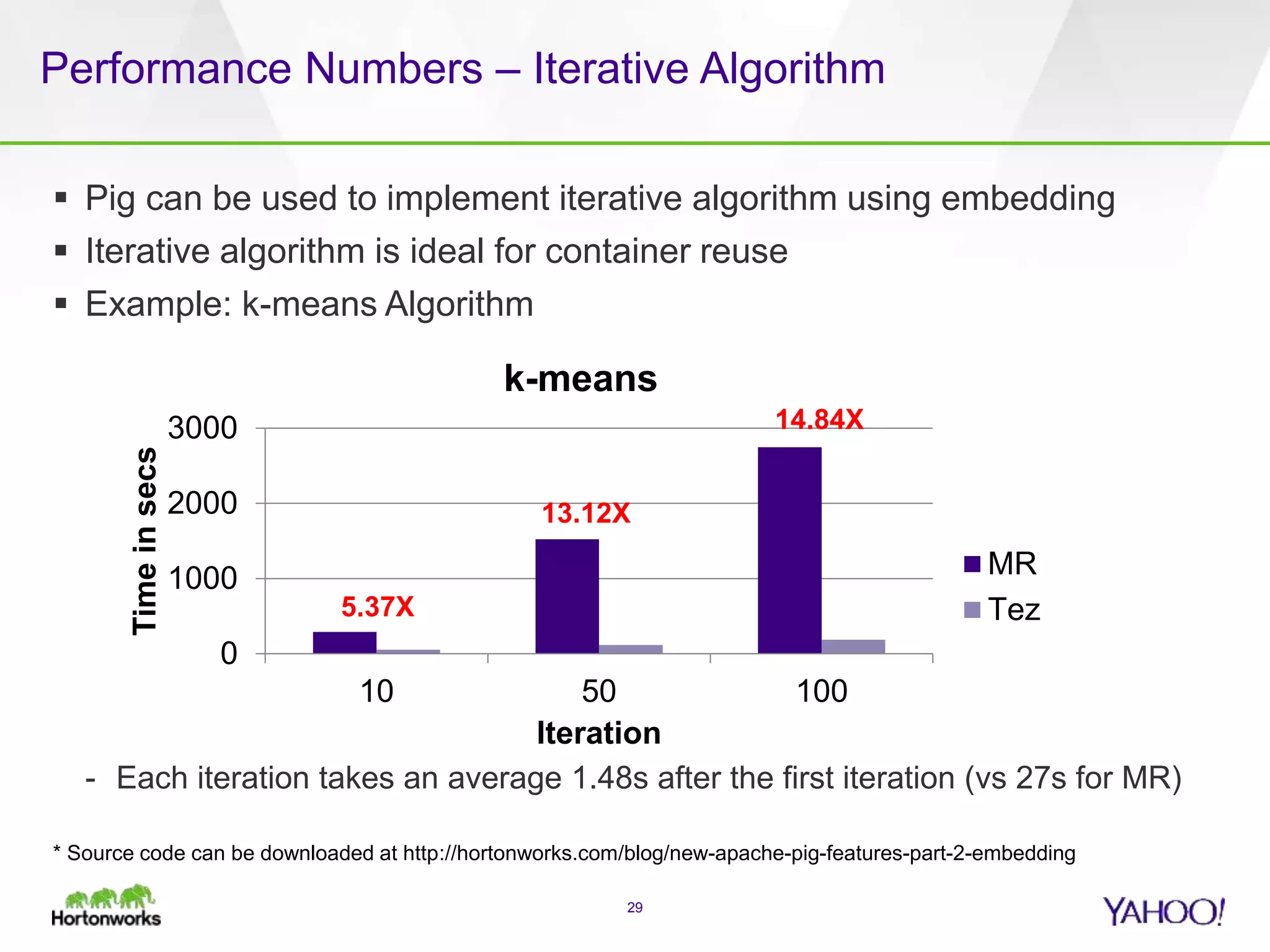

The document covers running Apache Pig on the Tez execution engine for low-latency data processing, including its design benefits, performance improvements, and current user experiences. It outlines how Tez improves efficiency through container reuse, automatic session handling, and dynamic adjustment of parallelism. It also highlights Pig's rich feature set and its widespread use across major organizations, and describes the enhancements planned for both Pig and Tez.