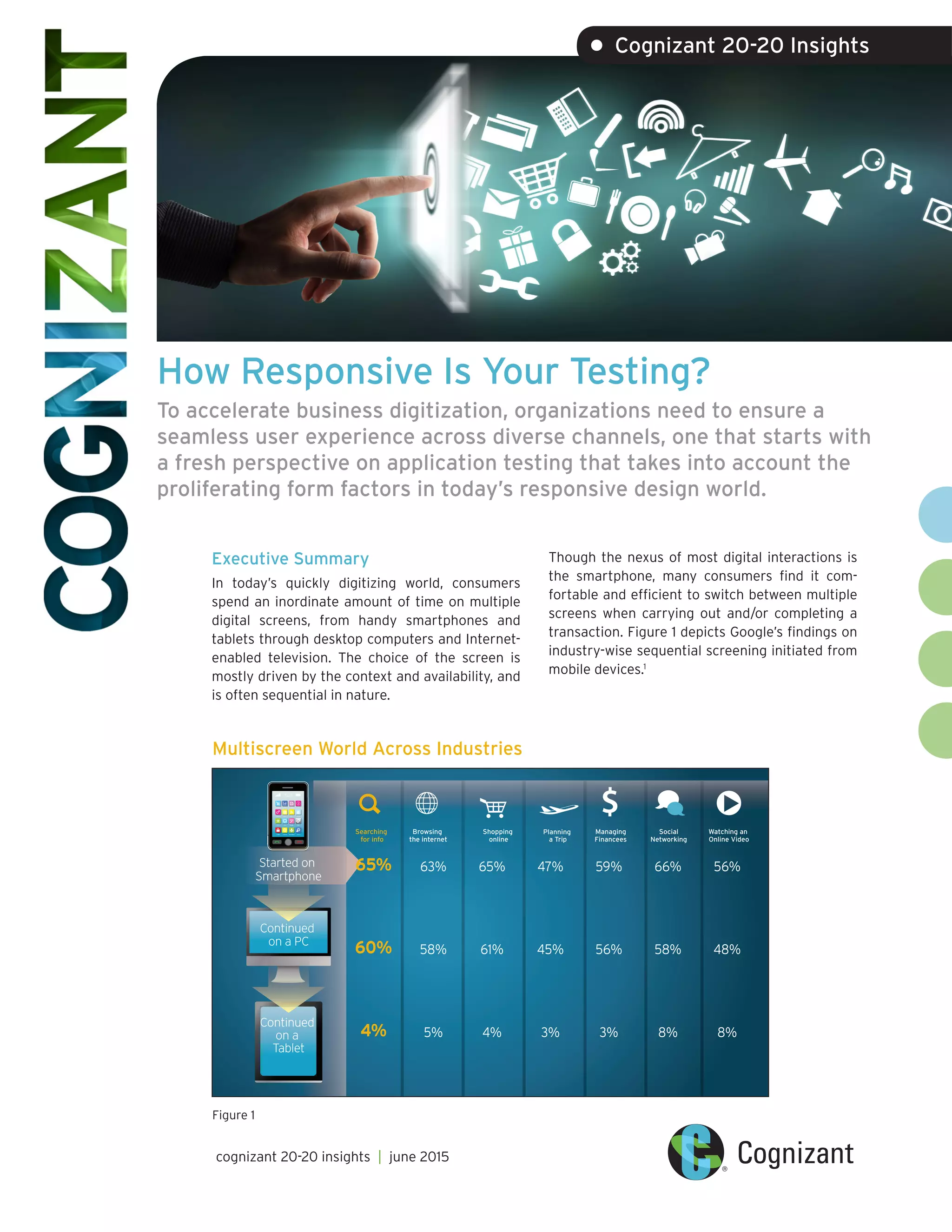

The document discusses why applications built with responsive web design (RWD) must be tested to deliver a seamless user experience across multiple screens. It argues that QA organizations need to adopt new testing approaches, including user interface and integration testing tailored to various screen sizes and devices. It also highlights the challenges of automating tests for RWD applications and the need to select appropriate tools in order to improve efficiency and reduce costs.
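
To make the idea of testing tailored to screen sizes more concrete, here is a minimal sketch of how a QA team might enumerate page and viewport combinations before handing them to whatever automation tool it selects. The breakpoint values, the layout names, and the helpers `layout_for`, `ViewportCase`, and `build_matrix` are all hypothetical and are not taken from the document; a real project would read its breakpoints from its own stylesheets.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical breakpoints: (minimum width in CSS pixels, layout name).
# These values are assumptions for illustration only.
BREAKPOINTS = [(0, "mobile"), (768, "tablet"), (1024, "desktop")]


def layout_for(width: int) -> str:
    """Return the layout expected at a given viewport width."""
    if width < 0:
        raise ValueError("viewport width must be non-negative")
    name = BREAKPOINTS[0][1]
    # Keep the last breakpoint whose minimum width the viewport reaches.
    for min_width, label in BREAKPOINTS:
        if width >= min_width:
            name = label
    return name


@dataclass(frozen=True)
class ViewportCase:
    """One page rendered at one viewport size, with the layout it should show."""
    page: str
    width: int
    height: int
    expected_layout: str


def build_matrix(pages, viewports):
    """Pair every page with every viewport and record the expected layout."""
    return [
        ViewportCase(page, width, height, layout_for(width))
        for page, (width, height) in product(pages, viewports)
    ]


if __name__ == "__main__":
    cases = build_matrix(
        ["/home", "/checkout"],
        [(375, 667), (768, 1024), (1440, 900)],
    )
    for case in cases:
        print(case)
```

The matrix grows as pages multiplied by viewports, which is one way to see why automation and tool selection matter for RWD testing: even two pages and three viewports already yield six UI checks.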