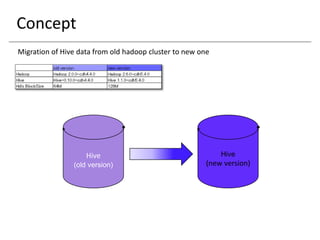

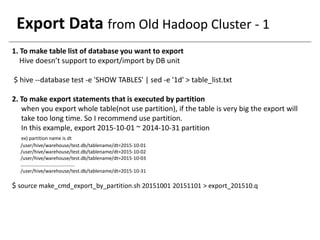

This deck is a step-by-step guide to migrating Hive data from an old Hadoop cluster to a new one in three phases: export, copy, and import. It includes the commands and scripts used to create tables, export data partition by partition, copy the exported files with the `distcp` command, and import them on the new cluster, and it lists references for further reading on Hive import/export.
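As a rough orientation, the three phases can be written out as a dry run that only prints the command for each step. This is a sketch under assumptions: the NameNode URIs (`hdfs://old-namenode:8020`, `hdfs://new-namenode:8020`), the `migration_plan` function, and the `export_201510.q` file name are hypothetical, and running the generated files with `hive -f` is one plausible way to execute them, not necessarily the deck's exact procedure. The two HDFS directories are the ones used in the export and import scripts.

```bash
#!/bin/bash
# Dry-run sketch of the export -> copy -> import sequence (prints, does not execute).
# HYPOTHETICAL: the NameNode URIs below and the export_201510.q file name.
OLD_NN="hdfs://old-namenode:8020"              # hypothetical old-cluster NameNode
NEW_NN="hdfs://new-namenode:8020"              # hypothetical new-cluster NameNode
EXPORT_DIR="/user/hadoop/temp_export_dir"      # target of the EXPORT statements
IMPORT_DIR="/user/bopyo.hong/temp_import_dir"  # source of the IMPORT statements

# Print the command for each phase, in order.
migration_plan() {
  local export_q=$1 import_q=$2
  # 1. On the old cluster: run the generated EXPORT statements.
  echo "hive -f ${export_q}"
  # 2. Copy the exported tree; -update copies the directory's contents into the target.
  echo "hadoop distcp -update ${OLD_NN}${EXPORT_DIR} ${NEW_NN}${IMPORT_DIR}"
  # 3. On the new cluster: run the generated IMPORT statements.
  echo "hive -f ${import_q}"
}

migration_plan export_201510.q import_201510.q
```

The `-update` flag is a deliberate choice: with `-update`, DistCp copies the contents of the source directory into the target rather than the directory itself, so the `${TBL}/dt=...` subdirectories land directly under `temp_import_dir`, which is where the import statements look for them.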

![Export Data from Old Hadoop Cluster - 2

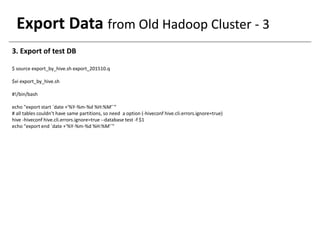

$vi make_cmd_export_by_partition.sh

#!/bin/bash

startdate=$1

enddate=$2

rundate="$startdate"

until [ "$rundate" == "$enddate" ]

do

YEAR=${rundate:0:4}

MONTH=${rundate:4:2}

DAY=${rundate:6:2}

DATE2=${YEAR}'-'${MONTH}'-'${DAY}

TBLS=(`cat table_list.txt`)

for TBL in ${TBLS[@]}

do

echo "export table ${TBL} PARTITION (dt='$DATE2') to '/user/hadoop/temp_export_dir/${TBL}/dt=$DATE2';"

echo

done

rundate=`date --date="$rundate +1 day" +%Y%m%d`

done](https://image.slidesharecdn.com/hivemigration-151105092419-lva1-app6891/85/Hive-data-migration-export-import-5-320.jpg)
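The date loop decides which partitions get exported, so it can be worth previewing the range before generating statements. Below is a sketch that isolates that loop; `date_range` and `to_partition_value` are hypothetical helper names, and GNU `date` is assumed, as in the script itself.

```bash
#!/bin/bash
# Sketch of the date iteration used by make_cmd_export_by_partition.sh.
# Helper names are hypothetical; requires GNU date (--date with "+1 day").

# Print each YYYYMMDD date from $1 (inclusive) to $2 (exclusive).
date_range() {
  local rundate=$1 enddate=$2
  while [ "$rundate" -lt "$enddate" ]; do
    echo "$rundate"
    rundate=$(date --date="$rundate +1 day" +%Y%m%d)
  done
}

# Turn YYYYMMDD into the YYYY-MM-DD form used in PARTITION (dt='...').
to_partition_value() {
  local d=$1
  echo "${d:0:4}-${d:4:2}-${d:6:2}"
}

# Preview the partition values a run would cover across a month boundary.
for d in $(date_range 20151030 20151102); do
  to_partition_value "$d"
done
```

The preview prints `2015-10-30`, `2015-10-31`, and `2015-11-01`; the end date itself is never emitted, which is why an argument pair like `20151001 20151101` covers exactly the 31 days of October 2015.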

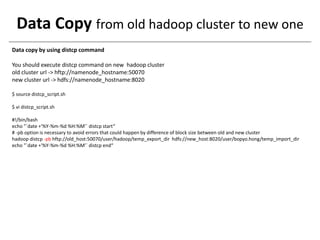

![Import Data to New Hadoop Cluster - 1](https://image.slidesharecdn.com/hivemigration-151105092419-lva1-app6891/85/Hive-data-migration-export-import-8-320.jpg)

1. Generate the import statements, one per table and daily partition. The end date is exclusive, so the arguments below produce statements for 2015-10-01 through 2015-10-31:

```shell
$ source make_cmd_import_by_partition.sh 20151001 20151101 > import_201510.q
$ vi make_cmd_import_by_partition.sh
```

```bash
#!/bin/bash
# Arguments: START and END dates as YYYYMMDD; END is exclusive.
TBLS=($(cat table_list.txt))

startdate=$1
enddate=$2

rundate="$startdate"
# YYYYMMDD strings compare correctly as integers; -lt also ends the loop if END < START.
while [ "$rundate" -lt "$enddate" ]
do
  YEAR=${rundate:0:4}
  MONTH=${rundate:4:2}
  DAY=${rundate:6:2}
  DATE2="${YEAR}-${MONTH}-${DAY}"   # partition value, e.g. 2015-10-01

  for TBL in "${TBLS[@]}"
  do
    echo "import table ${TBL} PARTITION (dt='$DATE2') from '/user/bopyo.hong/temp_import_dir/${TBL}/dt=$DATE2';"
    echo
  done

  # Advance one day (GNU date syntax).
  rundate=$(date --date="$rundate +1 day" +%Y%m%d)
done
```
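Because the import script mirrors the export script, one possible sanity check before running `import_201510.q` is to confirm that both generated files cover the same table/partition pairs. This is a hypothetical helper, not part of the deck: `partition_keys` and `compare_scripts` are illustrative names, the export file name (for example `export_201510.q`) is an assumption, and the check assumes each statement sits on one line in the exact shape the two scripts echo.

```bash
#!/bin/bash
# Hypothetical consistency check between generated export and import files.
# Assumes one statement per line, as echoed by the two generator scripts.

# Print sorted "table dt" pairs found in a generated .q file.
partition_keys() {
  sed -nE "s/^(export|import) table ([^ ]+) PARTITION \(dt='([0-9-]+)'\).*/\2 \3/p" "$1" \
    | LC_ALL=C sort
}

# Print pairs present in only one file (comm -3 drops lines common to both;
# pairs found only in the second file are prefixed with a tab). No output = match.
compare_scripts() {
  LC_ALL=C comm -3 <(partition_keys "$1") <(partition_keys "$2")
}
```

A typical call would be `compare_scripts export_201510.q import_201510.q`; empty output means every exported partition has a matching import statement. Sorting and comparing under `LC_ALL=C` keeps `sort` and `comm` on the same collation order, which `comm` requires.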