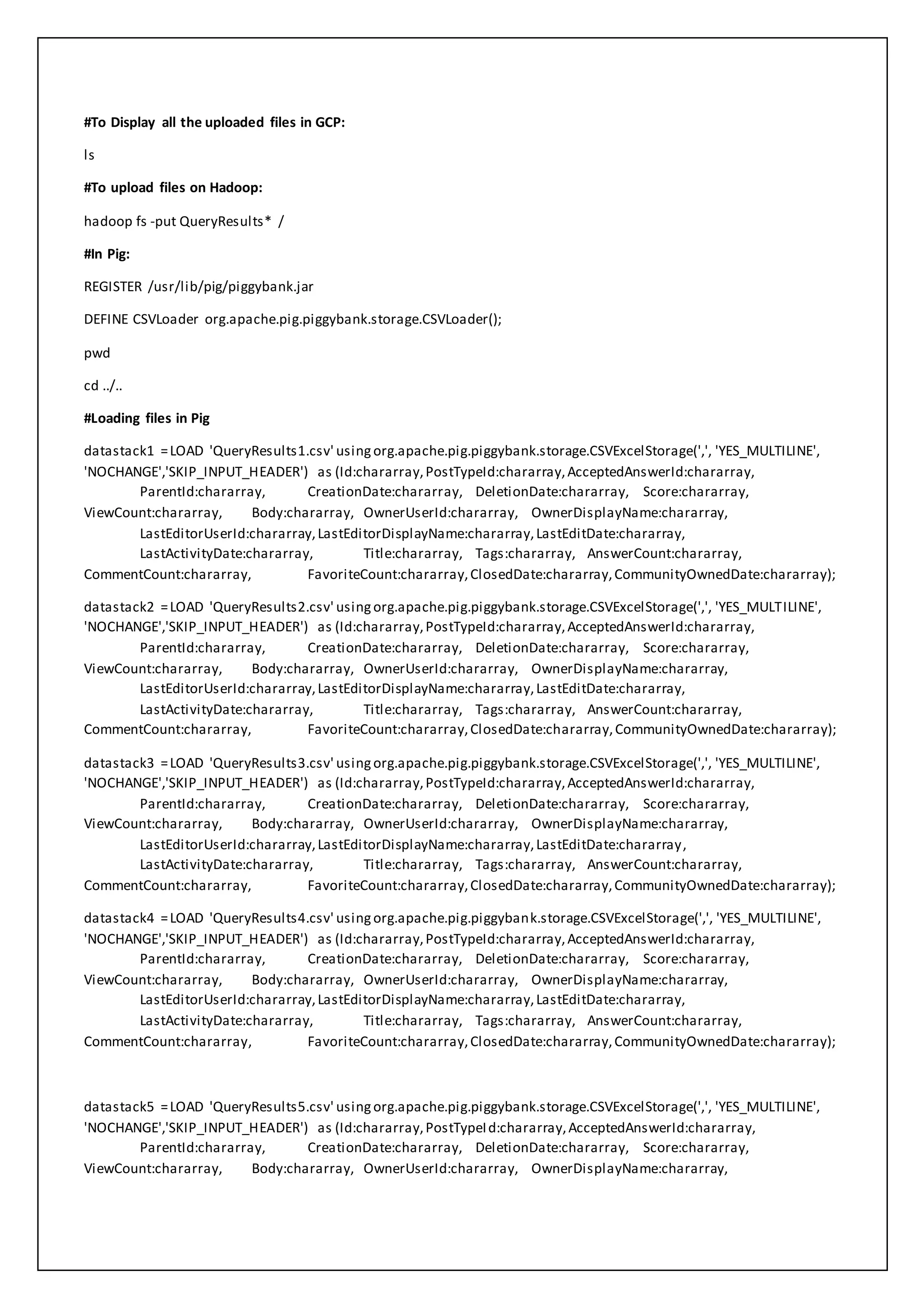

This document describes how to load multiple CSV files into Pig, clean and merge them into a single file, and load the result into Hive tables. Python MapReduce programs then calculate TF-IDF scores for the terms in posts by the top users, identifying the most important terms per user.
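The TF-IDF formula itself is not shown in this part of the document. As a minimal sketch, assuming the common definitions tf = (count of a term in a document) / (terms in that document) and idf = log(N / number of documents containing the term), the scoring step could look like this in Python 3. The function names `tf_idf` and `top_terms` and the in-memory dictionary input are illustrative assumptions, not the author's MapReduce jobs.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return {doc_name: {term: score}} for a dict of doc_name -> list of terms.

    Assumed definitions (not taken from the source):
      tf(t, d) = count of t in d / number of terms in d
      idf(t)   = log(N / number of documents containing t)
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for terms in docs.values():
        doc_freq.update(set(terms))

    scores = {}
    for name, terms in docs.items():
        counts = Counter(terms)
        total = len(terms)
        scores[name] = {
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        }
    return scores


def top_terms(scores, k=3):
    """Return the k highest-scoring terms for each document."""
    return {
        name: sorted(term_scores, key=term_scores.get, reverse=True)[:k]
        for name, term_scores in scores.items()
    }
```

Here each "document" would be the collected posts of one user. A term that appears in every user's posts gets an idf of zero and so drops to the bottom of that user's ranking.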

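![Commands documentation, slide 5: Python mapper script](https://image.slidesharecdn.com/commandsdocumentaion-210617101151/85/Commands-documentaion-5-320.jpg)

    # Mapper (Python 2 syntax): emits "<term>,<doc_name><TAB>1" for every
    # term that is not a stopword. The start of the script is cut off in the
    # source; the imports below are the ones the visible code needs.
    import sys
    from string import punctuation

    stopwords = [
        # ... earlier entries are cut off in the source ...
        'some','than','that','the','their','them','then','there','these',
        'they','this','tis','to','too','twas','us','wants','was','we','were',
        'what','when','where','which','while','who','whom','why','will',
        'with','would','yet','you','your']

    for line in sys.stdin:
        # Remove punctuation, then strip leading and trailing tabs.
        line = line.translate(None, punctuation).strip('\t')
        line_contents = line.split(" ")
        # The first field is the document name; the rest are its terms.
        doc_name = line_contents[0]
        line_contents.remove(doc_name)
        for content in line_contents:
            content = content.lower()
            content = content.rstrip()
            if content not in stopwords:
                key = content + "," + doc_name
                print '%s\t%s' % (key, 1)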
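The mapper above can only be exercised through standard input and relies on Python 2 syntax. As a rough sketch for checking the same logic locally, the version below restates it as a Python 3 function and pairs it with a summing step. The names `map_line`, `reduce_counts`, and the short `STOPWORDS` set are hypothetical, the reducer is an assumption about what a downstream word-count step would do (it is not shown in this part of the source), and fields are split on any whitespace rather than a single space.

```python
import string
from collections import defaultdict

# Hypothetical short stopword set; the source's full list is cut off.
STOPWORDS = {"the", "to", "was", "with", "you"}


def map_line(line, stopwords=STOPWORDS):
    """Yield ("term,doc_name", 1) pairs for one input line.

    Assumes the first whitespace-separated field is the document name.
    """
    # Python 3 equivalent of line.translate(None, punctuation).
    cleaned = line.translate(str.maketrans("", "", string.punctuation)).strip()
    fields = cleaned.split()
    if not fields:
        return
    doc_name, words = fields[0], fields[1:]
    for word in words:
        word = word.lower()
        if word not in stopwords:
            yield word + "," + doc_name, 1


def reduce_counts(pairs):
    """Sum the counts per key, as a word-count style reducer would."""
    totals = defaultdict(int)
    for key, count in pairs:
        totals[key] += count
    return dict(totals)
```

Feeding a few sample lines through `map_line` and then `reduce_counts` gives the per-document term counts that a later term-frequency step would start from. Note that punctuation is stripped from the whole line, so a document name such as `user_1` would become `user1`.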