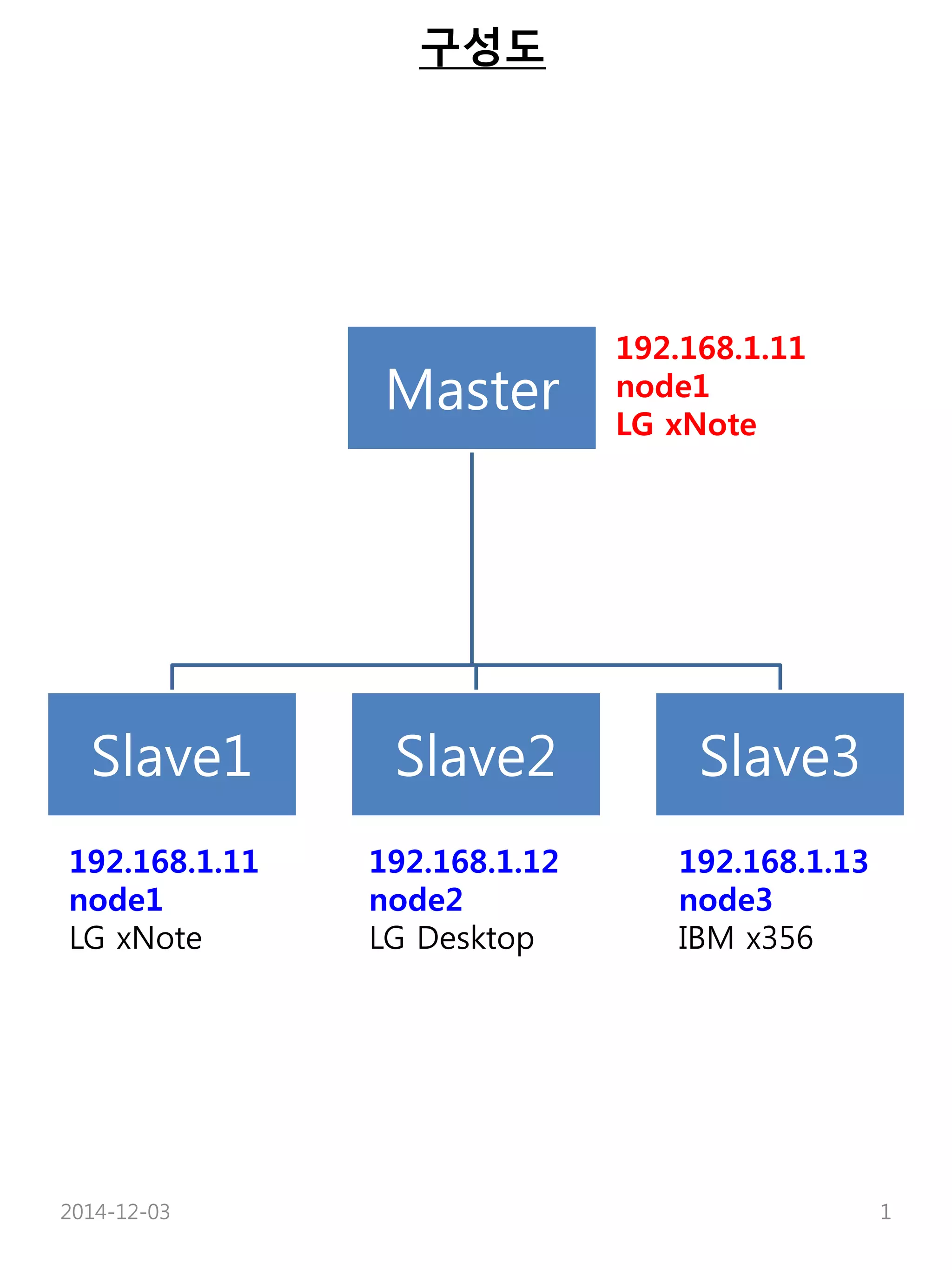

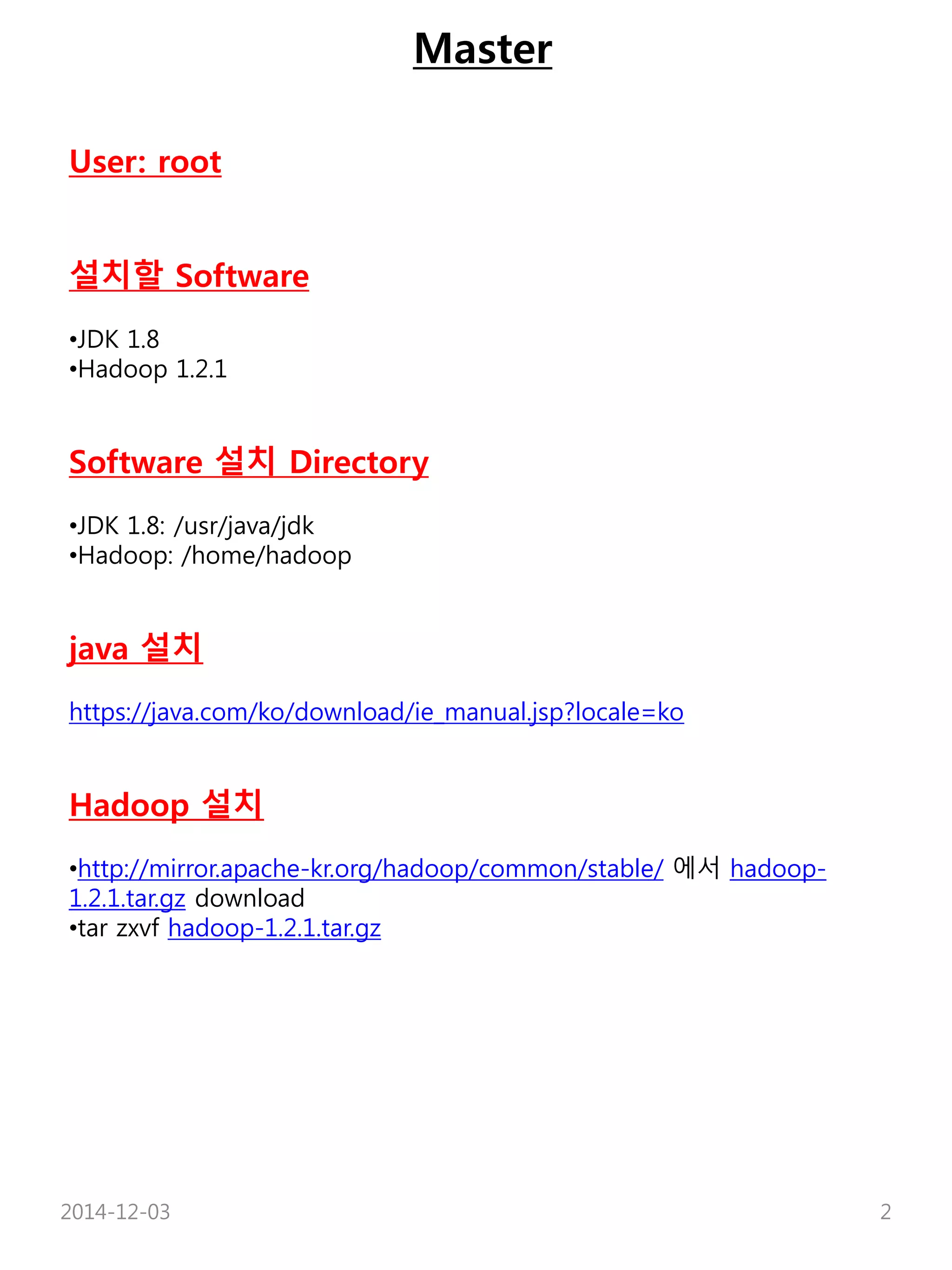

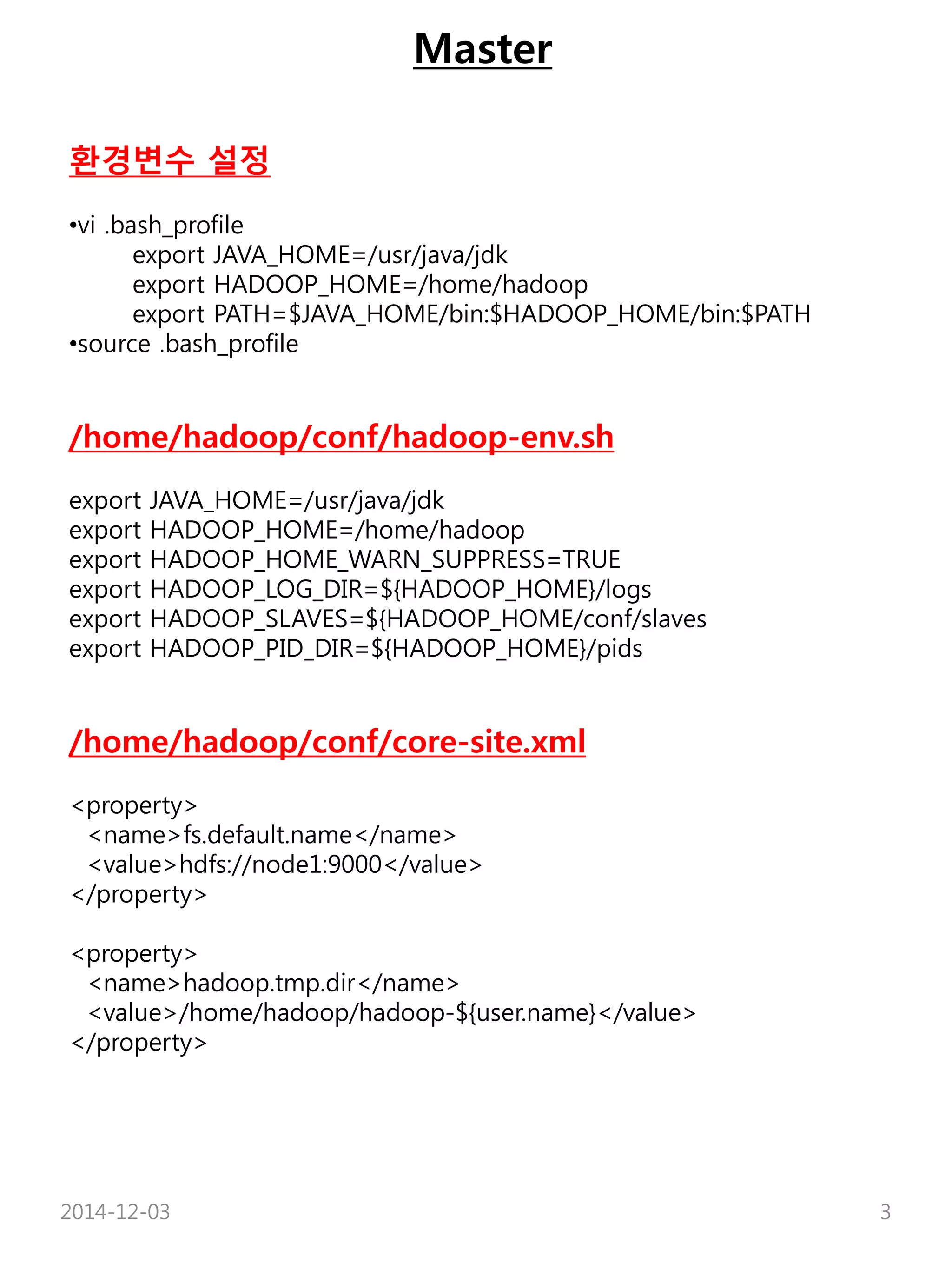

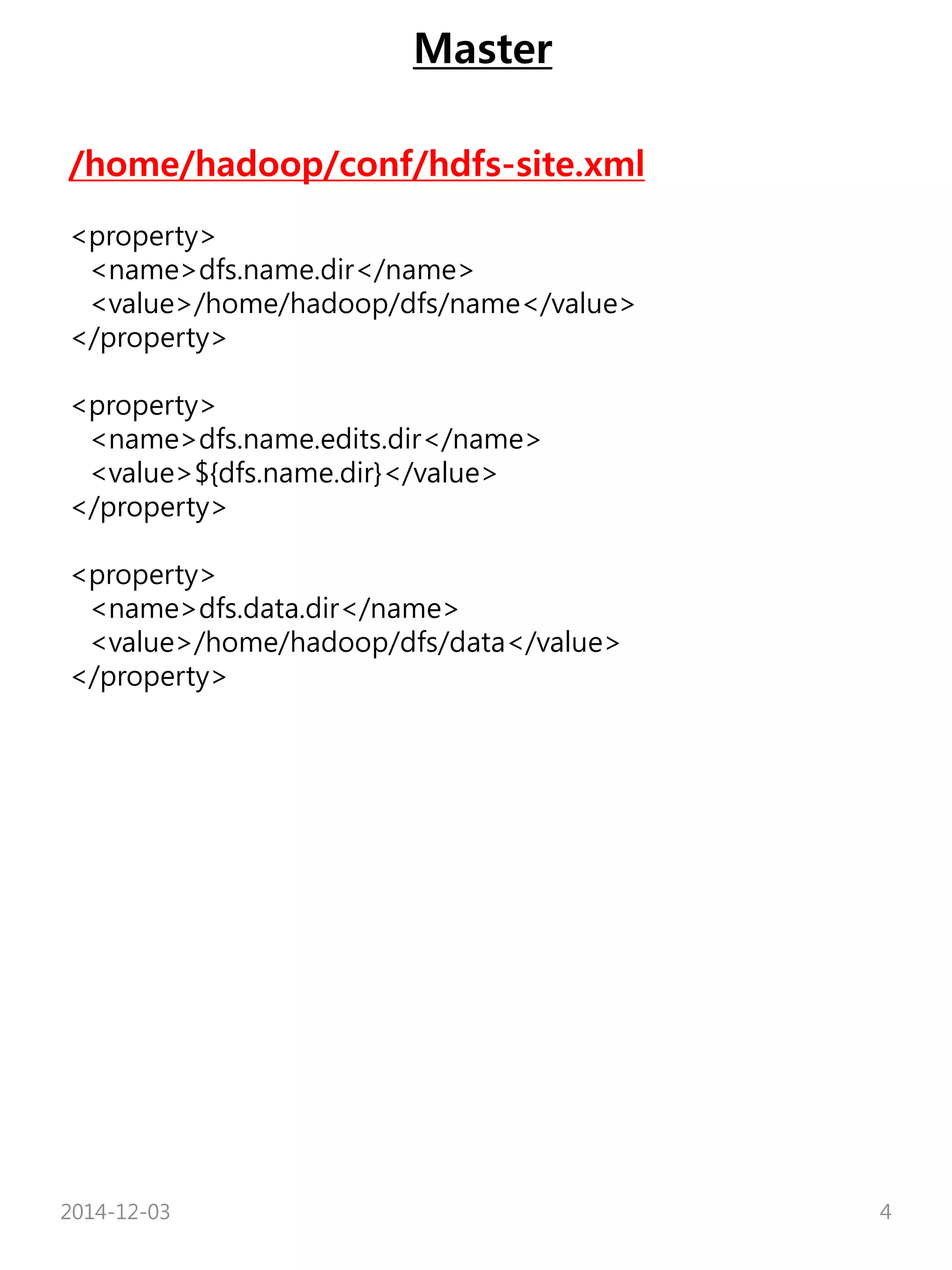

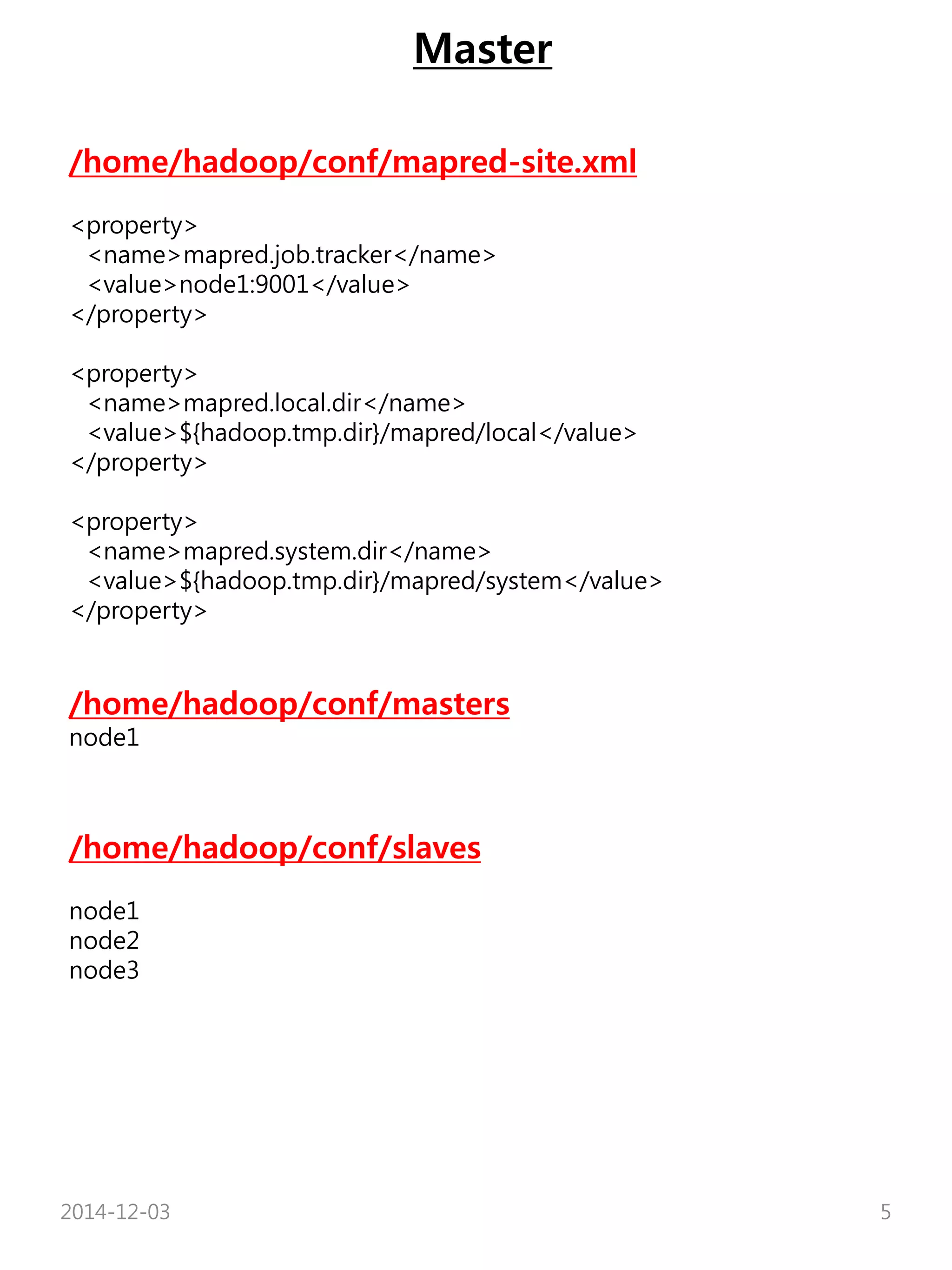

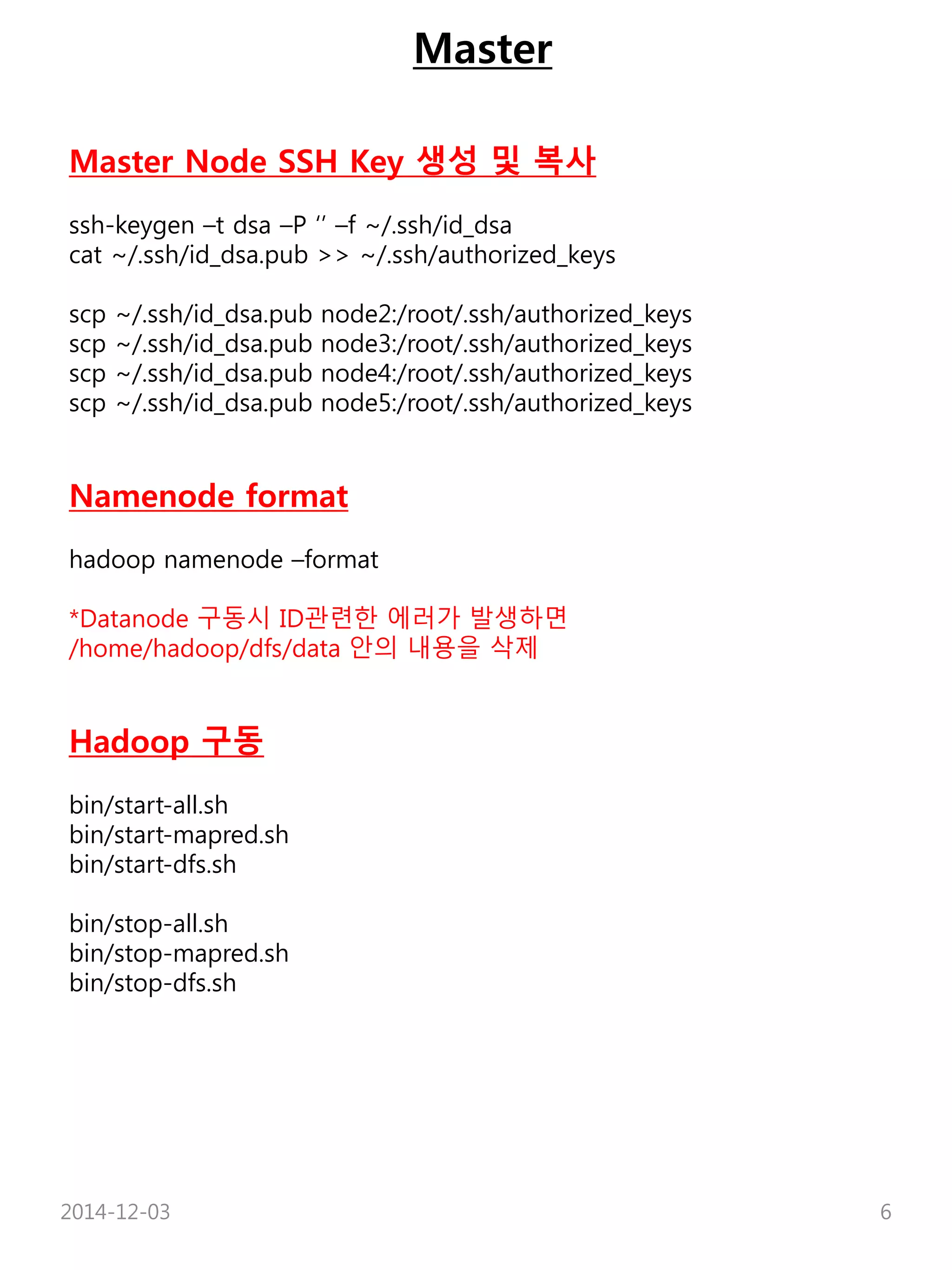

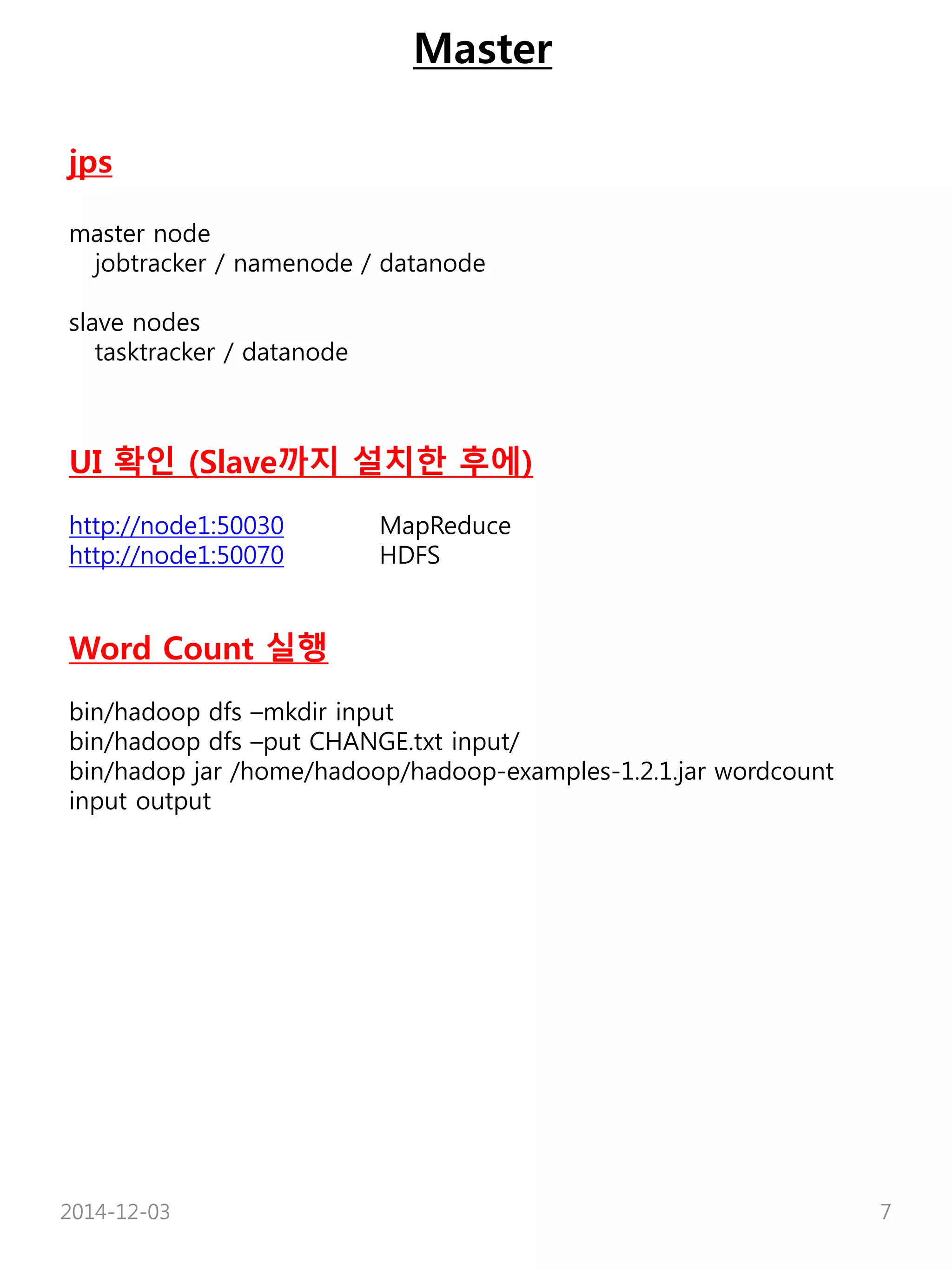

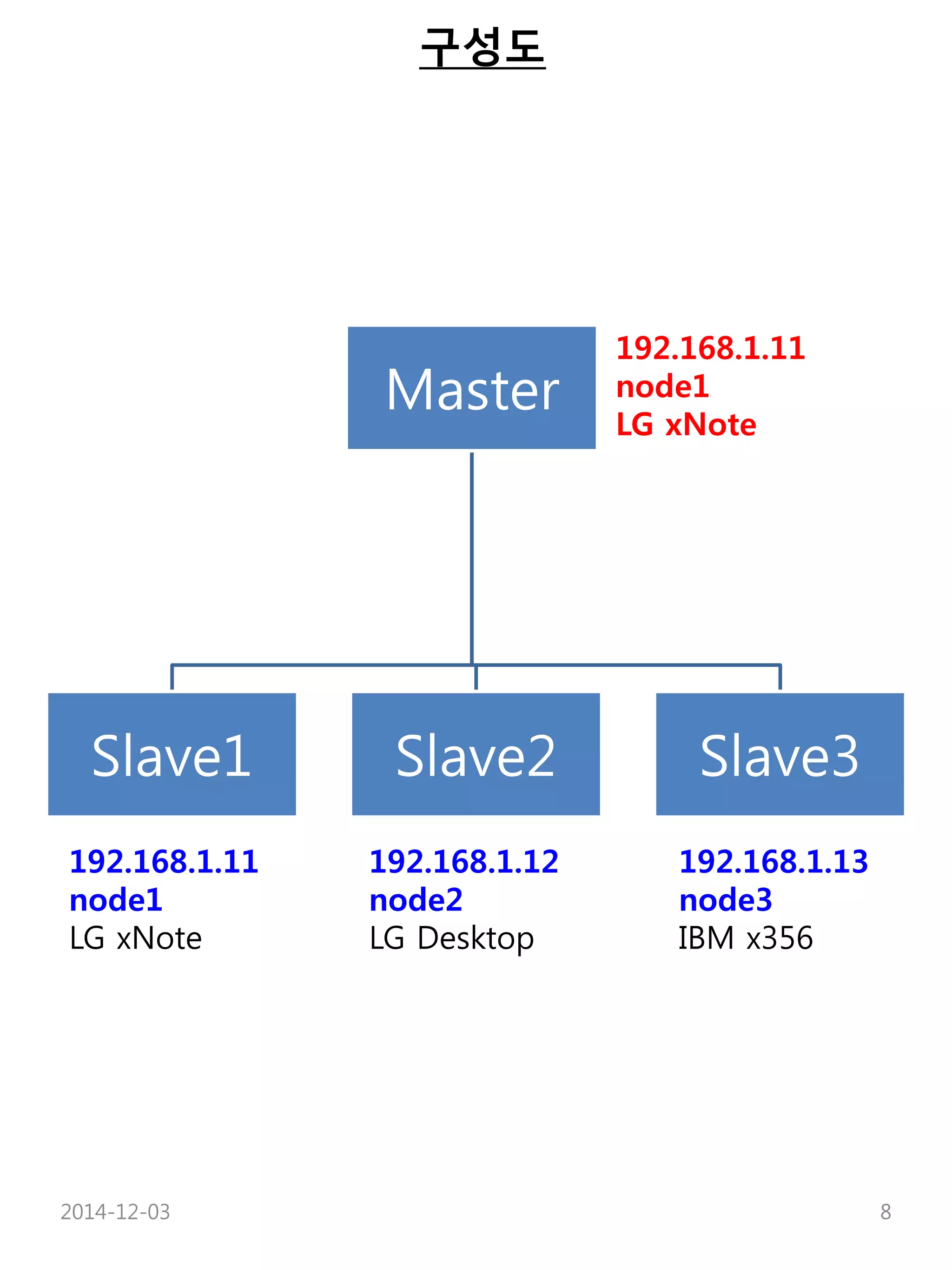

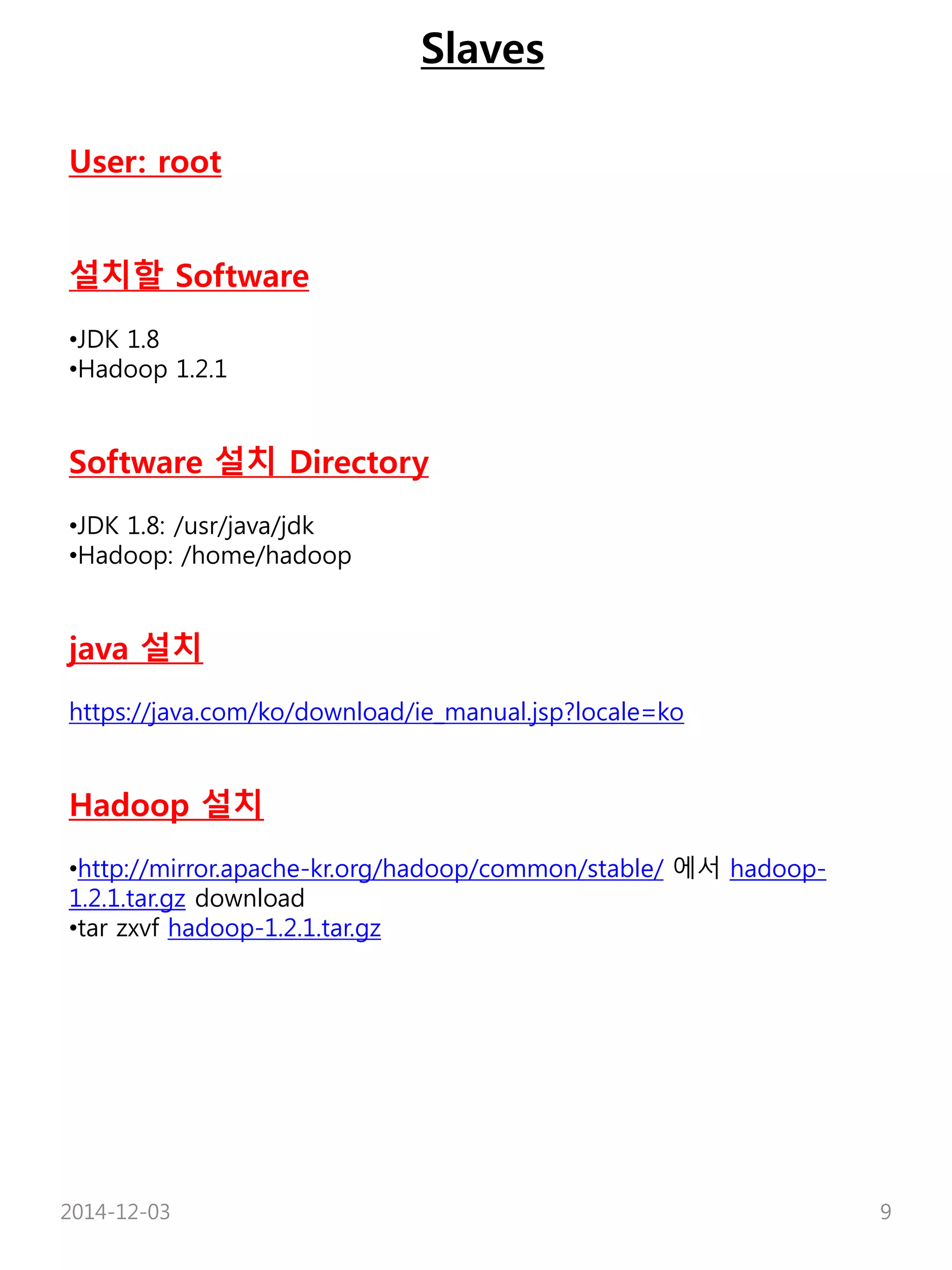

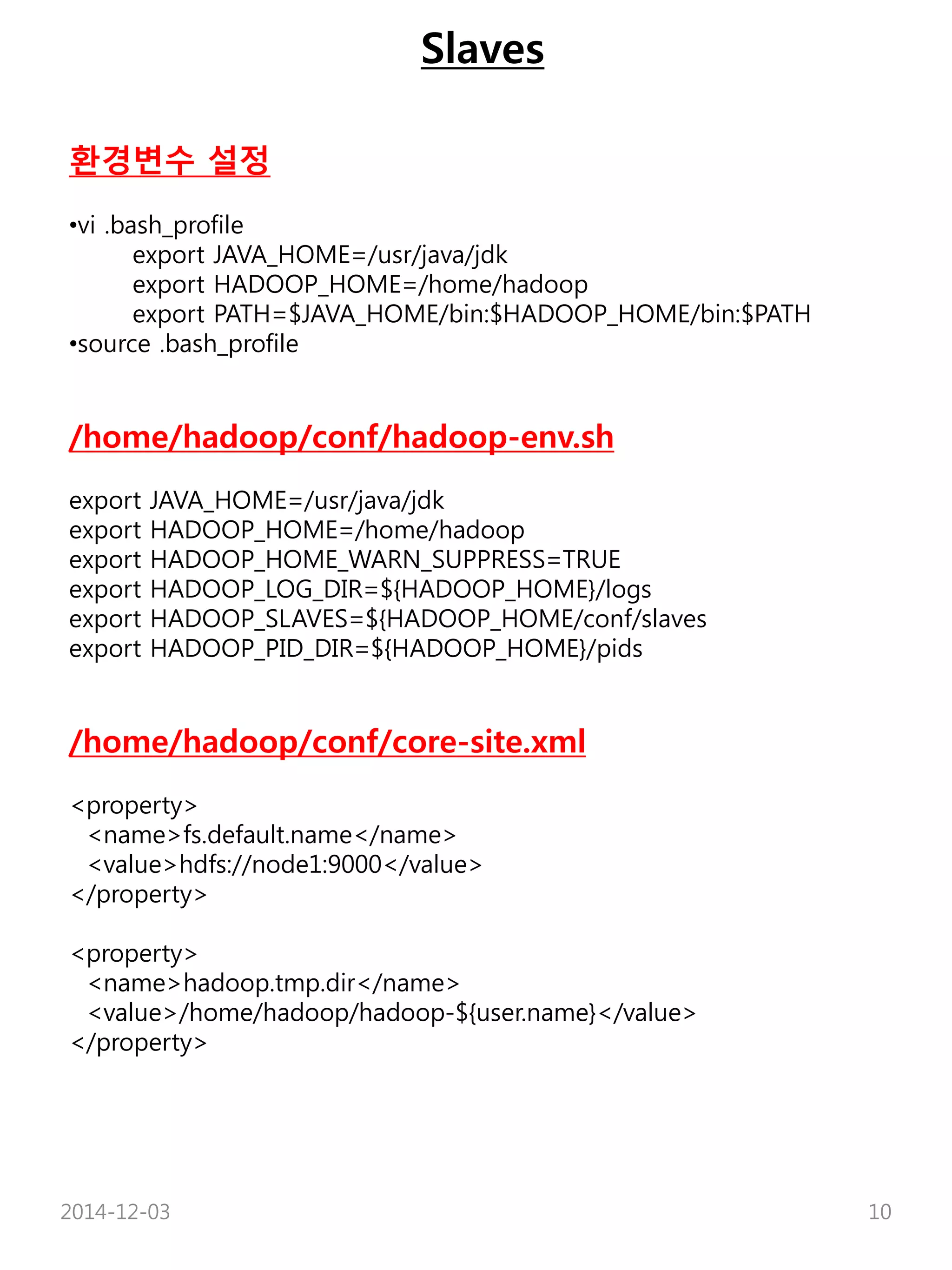

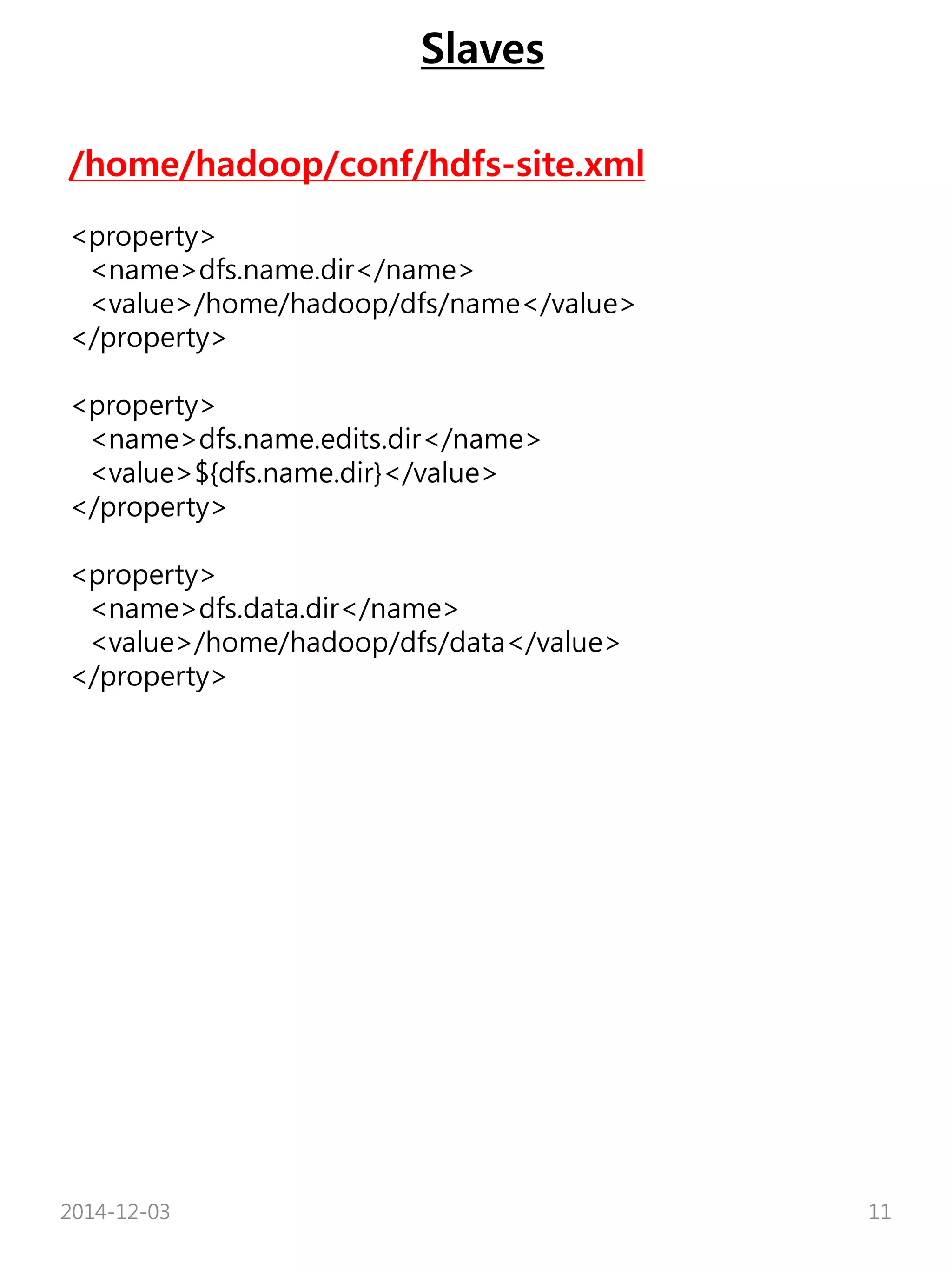

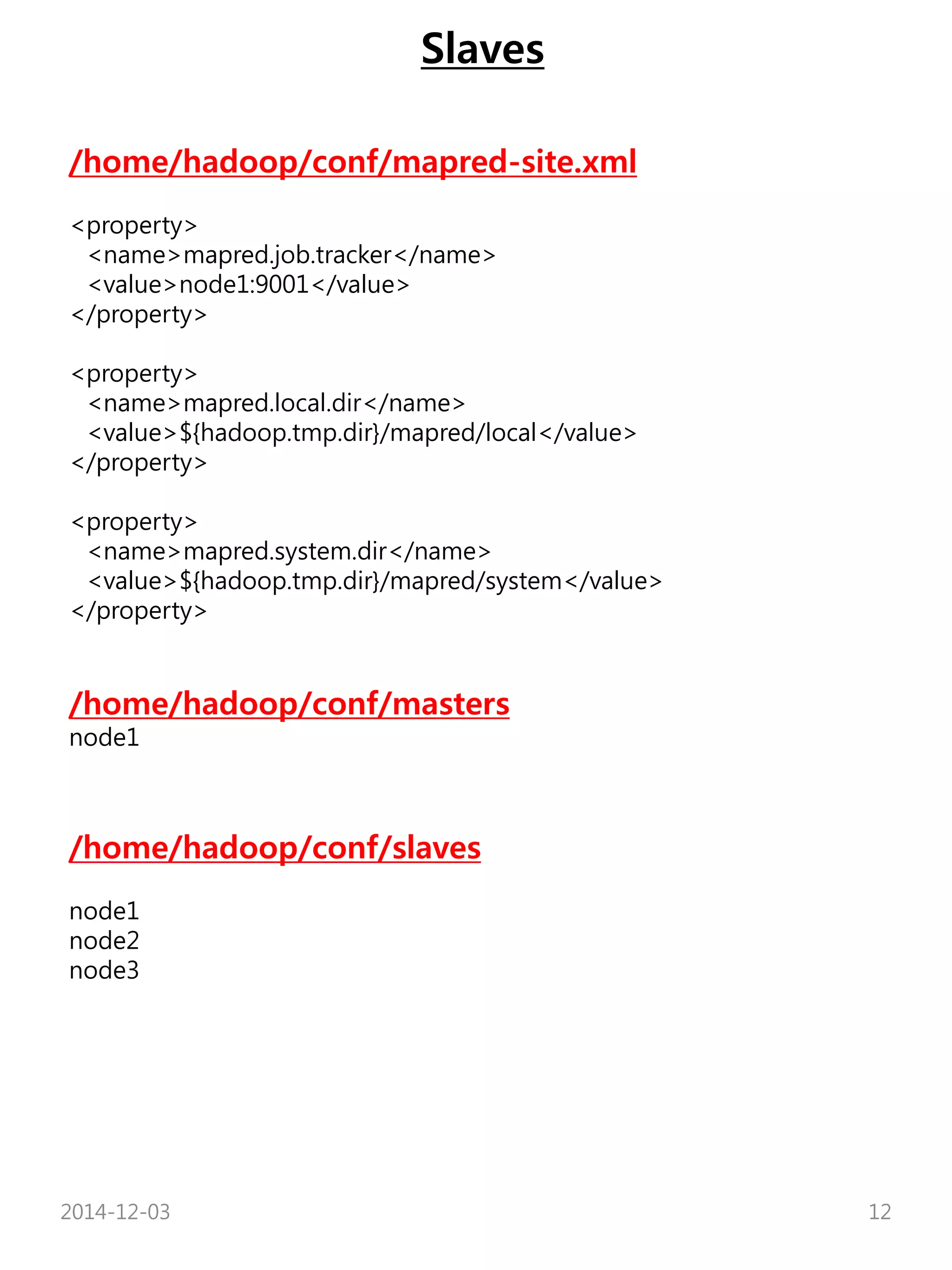

This document describes how to set up a Hadoop cluster with one master node and three slave nodes. The steps are: installing Java and Hadoop, configuring environment variables and the Hadoop configuration files, generating SSH keys, formatting the NameNode, starting the services, and running a sample word count job. Additional sections cover adding and removing nodes and performing health checks on the cluster.
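
To make the configuration step more concrete, here is a minimal sketch of generating the two core configuration files and the worker list for a one-master, three-worker layout. The hostnames (`master`, `slave1` to `slave3`), the port `9000`, and the helper `build_site_xml` are assumptions chosen for illustration, not values taken from the original document. `fs.defaultFS` and `dfs.replication` are standard Hadoop property names.

```python
import xml.etree.ElementTree as ET

# Hypothetical hostnames; substitute the names used in your own cluster.
MASTER = "master"
WORKERS = ["slave1", "slave2", "slave3"]


def build_site_xml(properties):
    """Render a dict of Hadoop properties as a *-site.xml document string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    # Pretty-print when available (ET.indent was added in Python 3.9).
    if hasattr(ET, "indent"):
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


# core-site.xml: tells every node where the NameNode lives.
# Port 9000 is an assumed, commonly used choice.
core_site = build_site_xml({"fs.defaultFS": f"hdfs://{MASTER}:9000"})

# hdfs-site.xml: one replica per worker in this small example.
hdfs_site = build_site_xml({"dfs.replication": len(WORKERS)})

# Worker list, one hostname per line.
workers_file = "\n".join(WORKERS) + "\n"

if __name__ == "__main__":
    print(core_site)
    print(hdfs_site)
    print(workers_file, end="")
```

The generated strings would be written to Hadoop's configuration directory on each node. The worker list file is named `workers` in Hadoop 3 and `slaves` in Hadoop 2, so the file name depends on the version being installed.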