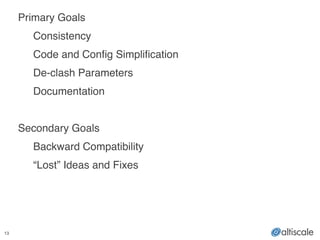

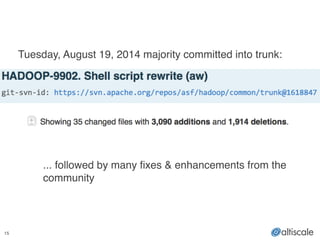

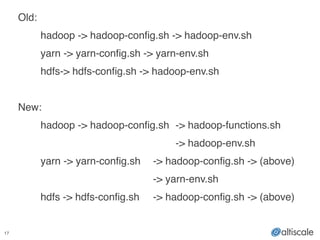

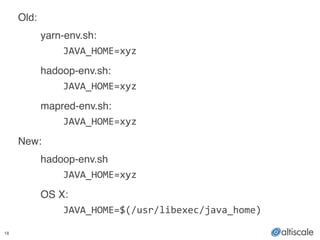

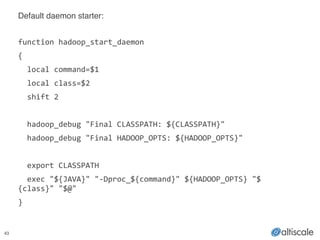

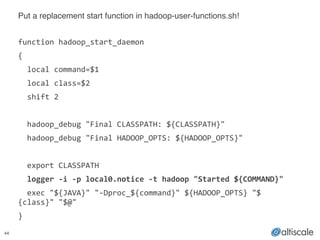

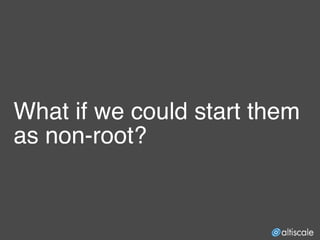

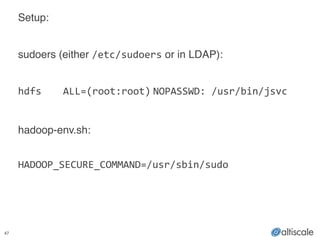

The document gives an overview of the shell script rewrite for Apache Hadoop, emphasizing goals such as consistency and simplification. It details changes to the structure and behavior of the various scripts, which aim to stay backward compatible while introducing new features. It also covers logging, daemon management, and security enhancements for running secure Hadoop daemons.

![“[The scripts] finally got to you, didn’t they?”](https://image.slidesharecdn.com/2015-08-shellrewrite-150822195231-lva1-app6891/85/Apache-Hadoop-Shell-Rewrite-12-320.jpg)

![20

! $

TOOL_PATH=blah:blah:blah

hadoop

distcp

/old

/new

Error:

could

not

find

or

load

main

class

org.apache.hadoop.tools.DistCp!

!

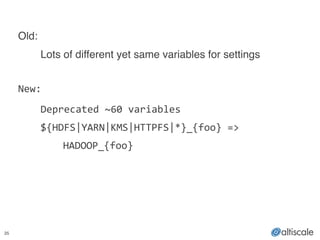

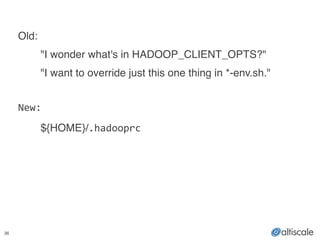

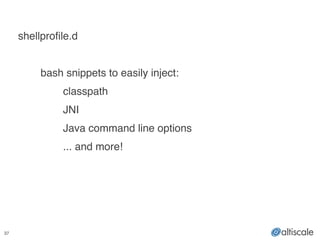

Old:!

! $

bash

-‐x

hadoop

distcp

/old

/new

+

this=/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin/hadoop

+++

dirname

-‐-‐

/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin/hadoop

++

cd

-‐P

-‐-‐

/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin

++

pwd

-‐P

+

bin=/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin

+

DEFAULT_LIBEXEC_DIR=/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin/../libexec

+

HADOOP_LIBEXEC_DIR=/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin/../libexec

+

[[

-‐f

/home/aw/HADOOP/hadoop-‐3.0.0-‐SNAPSHOT/bin/../libexec/hadoop-‐

config.sh

]]

…

!](https://image.slidesharecdn.com/2015-08-shellrewrite-150822195231-lva1-app6891/85/Apache-Hadoop-Shell-Rewrite-20-320.jpg)
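The trace shows the old launcher locating itself and its libexec directory before it reads any configuration. Below is a minimal sketch of that lookup, reconstructed from the trace rather than copied from Hadoop; `resolve_libexec_dir` and `tool_classpath` are hypothetical names. The second function assumes the old scripts defaulted TOOL_PATH with a `${TOOL_PATH:-...}` expansion, which would explain the distcp error: a TOOL_PATH already present in the user's environment replaces the default tools directory, so the jar holding `org.apache.hadoop.tools.DistCp` never reaches the classpath.

```shell
#!/usr/bin/env bash
# Hypothetical reconstruction of the old launcher's bootstrap, based only on
# the bash -x trace. Neither function name exists in Hadoop.

# Print the libexec directory for the launcher script given as $1.
resolve_libexec_dir()
{
  local this="$1"
  local bin
  # Physical directory of the script (symlinks resolved), as in the trace.
  bin=$(cd -P -- "$(dirname -- "${this}")" >/dev/null && pwd -P) || return 1
  local default_libexec_dir="${bin}/../libexec"
  # A HADOOP_LIBEXEC_DIR already set by the caller takes precedence.
  local libexec_dir="${HADOOP_LIBEXEC_DIR:-${default_libexec_dir}}"
  if [[ ! -f "${libexec_dir}/hadoop-config.sh" ]]; then
    echo "ERROR: ${libexec_dir}/hadoop-config.sh not found" >&2
    return 1
  fi
  printf '%s\n' "${libexec_dir}"
}

# Print the tools classpath entry for the Hadoop install rooted at $1.
# Assumed pattern: a TOOL_PATH inherited from the environment wins over the
# default, even when it does not contain the tools jars.
tool_classpath()
{
  local home="$1"
  printf '%s\n' "${TOOL_PATH:-${home}/share/hadoop/tools/lib/*}"
}
```

For example, `tool_classpath /opt/hadoop` prints `/opt/hadoop/share/hadoop/tools/lib/*`, while `TOOL_PATH=blah:blah:blah tool_classpath /opt/hadoop` prints `blah:blah:blah`, mirroring the failing command on the slide.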

![Slide 40](https://image.slidesharecdn.com/2015-08-shellrewrite-150822195231-lva1-app6891/85/Apache-Hadoop-Shell-Rewrite-40-320.jpg)

Default `*.out` log rotation:

```shell
function hadoop_rotate_log
{
  local log=$1;
  local num=${2:-5};

  if [[ -f "${log}" ]]; then # rotate logs
    # shift each older copy up one index, highest first
    while [[ ${num} -gt 1 ]]; do
      let prev=${num}-1
      if [[ -f "${log}.${prev}" ]]; then
        mv "${log}.${prev}" "${log}.${num}"
      fi
      num=${prev}
    done
    # move the live log into the first slot
    mv "${log}" "${log}.${num}"
  fi
}
```

```text
namenode.out.1 -> namenode.out.2
namenode.out   -> namenode.out.1
```
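Because `mv` overwrites its destination, each rotation discards whatever sat at the highest index, so the optional second argument (default 5) caps how many numbered copies are kept. A minimal sketch that exercises the function from the slide (repeated here so the sketch runs on its own) in a scratch directory with a cap of 3; the directory and file contents are made up for the demonstration.

```shell
#!/usr/bin/env bash
# Default rotation as shown on the slide.
function hadoop_rotate_log
{
  local log=$1;
  local num=${2:-5};

  if [[ -f "${log}" ]]; then # rotate logs
    while [[ ${num} -gt 1 ]]; do
      let prev=${num}-1
      if [[ -f "${log}.${prev}" ]]; then
        mv "${log}.${prev}" "${log}.${num}"
      fi
      num=${prev}
    done
    mv "${log}" "${log}.${num}"
  fi
}

# Simulate four daemon starts in a throwaway directory, keeping at most 3 copies.
demo_dir=$(mktemp -d)
for n in 1 2 3 4; do
  echo "run ${n}" > "${demo_dir}/namenode.out"
  hadoop_rotate_log "${demo_dir}/namenode.out" 3
done

# Show which run each numbered copy came from.
for f in "${demo_dir}"/namenode.out.*; do
  printf '%s: %s\n' "${f##*/}" "$(cat "${f}")"
done
# namenode.out.1: run 4
# namenode.out.2: run 3
# namenode.out.3: run 2
```

The output from run 1 is gone: it reached `namenode.out.3` on the third rotation and was overwritten on the fourth.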

![Slide 41](https://image.slidesharecdn.com/2015-08-shellrewrite-150822195231-lva1-app6891/85/Apache-Hadoop-Shell-Rewrite-41-320.jpg)

Put a replacement rotate function with gzip support in `hadoop-user-functions.sh`:

```shell
function hadoop_rotate_log
{
  local log=$1;
  local num=${2:-5};

  if [[ -f "${log}" ]]; then
    # shift the compressed copies up one index, highest first
    while [[ ${num} -gt 1 ]]; do
      let prev=${num}-1
      if [[ -f "${log}.${prev}.gz" ]]; then
        mv "${log}.${prev}.gz" "${log}.${num}.gz"
      fi
      num=${prev}
    done
    # move the live log into the first slot, then compress it
    mv "${log}" "${log}.${num}"
    gzip -9 "${log}.${num}"
  fi
}
```

```text
namenode.out.1.gz      -> namenode.out.2.gz
namenode.out           -> namenode.out.1
gzip -9 namenode.out.1 -> namenode.out.1.gz
```
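Dropping a replacement into `hadoop-user-functions.sh` works because bash keeps only the most recent definition of a function name: if the user file is sourced after the stock functions, its `hadoop_rotate_log` wins. A minimal sketch of that load order, assuming the defaults are sourced first and the user file only when it exists; the temporary files stand in for `hadoop-functions.sh` and `hadoop-user-functions.sh`, their bodies are toys, and `load_functions` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Stand-ins for the stock and user function files (toy function bodies).
fn_dir=$(mktemp -d)

cat > "${fn_dir}/hadoop-functions.sh" <<'EOF'
function hadoop_rotate_log
{
  echo "default rotation of $1"
}
EOF

cat > "${fn_dir}/hadoop-user-functions.sh" <<'EOF'
function hadoop_rotate_log
{
  echo "gzip rotation of $1"
}
EOF

# Hypothetical loader: defaults first, then the optional user overrides.
# Functions defined by a sourced file are global, even when sourced here.
load_functions()
{
  local dir="$1"
  # shellcheck source=/dev/null
  . "${dir}/hadoop-functions.sh"
  if [[ -f "${dir}/hadoop-user-functions.sh" ]]; then
    # shellcheck source=/dev/null
    . "${dir}/hadoop-user-functions.sh"
  fi
}

load_functions "${fn_dir}"
hadoop_rotate_log namenode.out   # prints "gzip rotation of namenode.out"
```

Removing the user file and loading again brings back the default definition, so the override is opt-in per installation.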

![Slide 48](https://image.slidesharecdn.com/2015-08-shellrewrite-150822195231-lva1-app6891/85/Apache-Hadoop-Shell-Rewrite-48-320.jpg)

```shell
# hadoop-user-functions.sh: (partial code below)
function hadoop_start_secure_daemon
{
  …
  jsvc="${JSVC_HOME}/jsvc"

  # string comparison: -ne would compare the user names as numbers
  if [[ "${USER}" != "${HADOOP_SECURE_USER}" ]]; then
    hadoop_error "You must be ${HADOOP_SECURE_USER} in order to start a secure ${daemonname}"
    exit 1
  fi
  …
  # sudo starts jsvc as root; jsvc switches to -user once the daemon is
  # initialized. HADOOP_OPTS is left unquoted so its options split into words.
  exec /usr/sbin/sudo "${jsvc}" \
    "-Dproc_${daemonname}" \
    -outfile "${daemonoutfile}" \
    -errfile "${daemonerrfile}" \
    -pidfile "${daemonpidfile}" \
    -nodetach \
    -home "${JAVA_HOME}" \
    -user "${HADOOP_SECURE_USER}" \
    -cp "${CLASSPATH}" \
    ${HADOOP_OPTS} \
    "${class}" "$@"
}
```
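The user check at the top of this function can be pulled out into a small guard. A minimal sketch; `require_user` is a hypothetical helper, not part of Hadoop. It asks `id -un` for the effective account name instead of trusting `${USER}`, which is only an environment variable and may be unset or overridden by the caller, and it compares with `!=` because `-ne` inside `[[ ]]` evaluates both operands arithmetically rather than as strings.

```shell
#!/usr/bin/env bash
# Hypothetical guard: fail unless the effective user is the expected account.
require_user()
{
  local expected="$1"
  local current
  current=$(id -un) || return 1
  # String comparison; [[ a -ne b ]] would compare the operands as numbers.
  if [[ "${current}" != "${expected}" ]]; then
    echo "ERROR: you must be ${expected}, not ${current}" >&2
    return 1
  fi
}

# Possible use inside a start function like the one on the slide:
# require_user "${HADOOP_SECURE_USER}" || exit 1
```

For instance, `[[ hdfs -ne root ]]` is false: with no shell variables of those names set, both sides evaluate to 0, so a `-ne` check would never reject the wrong user.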