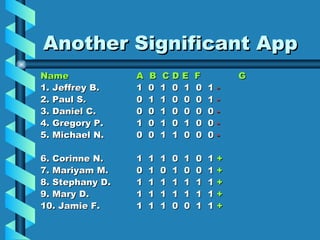

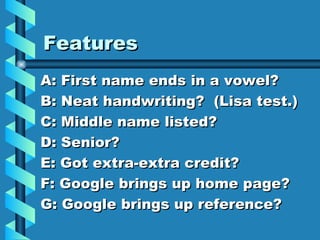

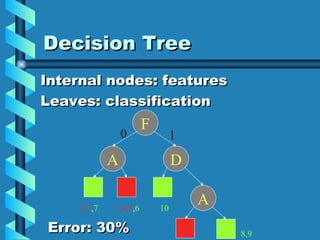

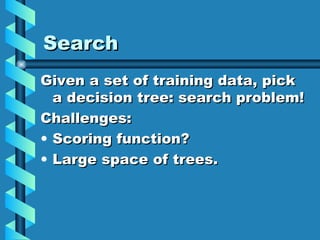

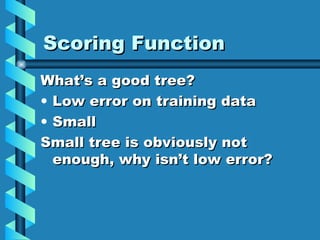

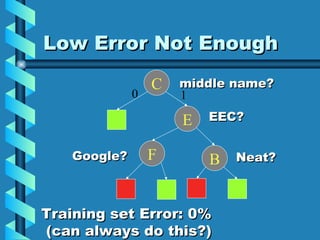

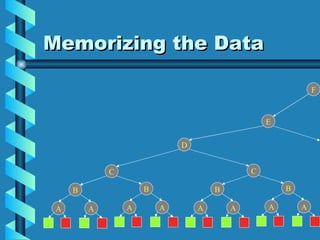

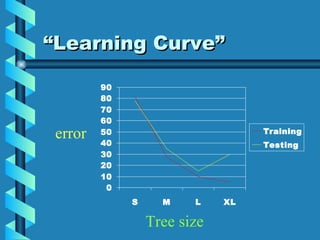

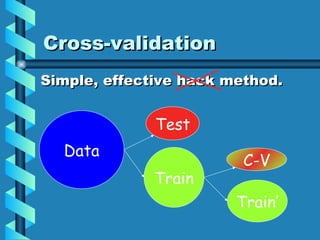

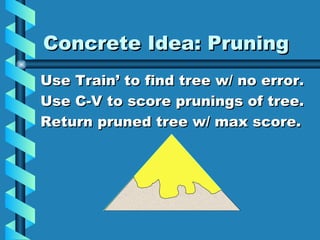

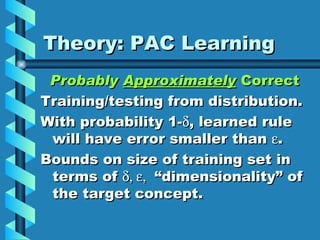

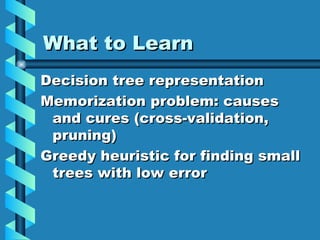

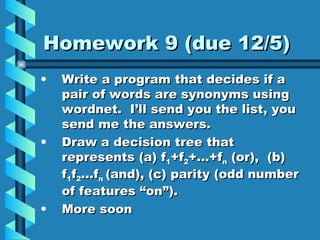

This document covers supervised learning and decision trees. It introduces supervised learning and explains how examples are split into training and test sets, then presents an example dataset and its features for classification. It shows a decision tree for this data and discusses scoring functions for trees, overfitting, and the use of cross-validation to prune trees. It also covers PAC learning theory and naive Bayes classification, and ends with a summary of what was covered: decision tree representation, the problem of memorization, and greedily finding small, accurate trees. The homework involves using WordNet to check word synonyms and drawing decision trees.
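To make the ideas of a train/test split and a tree scoring function concrete, here is a minimal sketch in Python. It assumes information gain (entropy reduction) as the scoring function, which is one common choice and may not be the one used in the slides. The toy dataset, the feature names `outlook` and `windy`, and all function names are hypothetical, introduced only for illustration.

```python
import math
import random
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(examples, feature):
    """Reduction in label entropy obtained by splitting on `feature`.

    `examples` is a list of (feature_dict, label) pairs.
    """
    labels = [label for _, label in examples]
    groups = {}
    for features, label in examples:
        groups.setdefault(features[feature], []).append(label)
    # Weighted average entropy of the subsets produced by the split.
    remainder = sum(len(g) / len(examples) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def best_feature(examples, features):
    """Greedy step: pick the feature with the highest information gain."""
    return max(features, key=lambda f: information_gain(examples, f))


def train_test_split(examples, test_fraction=0.25, seed=0):
    """Shuffle a copy of the examples and hold out a fraction for testing."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical toy data (not the dataset from the slides).
toy = [
    ({"outlook": "sunny", "windy": False}, "no"),
    ({"outlook": "sunny", "windy": True}, "no"),
    ({"outlook": "rainy", "windy": False}, "yes"),
    ({"outlook": "rainy", "windy": True}, "yes"),
]

train, test = train_test_split(toy)
root = best_feature(toy, ["outlook", "windy"])
```

In this toy data `outlook` separates the labels perfectly while `windy` carries no information, so the greedy step selects `outlook` as the root test. A full tree learner would apply the same step recursively to each subset, and would use held-out data (for example via cross-validation) to decide how far to grow or prune the tree.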