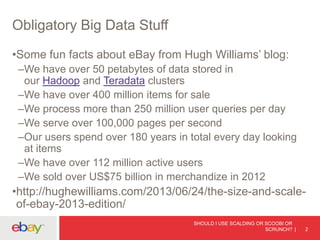

The document discusses different options for performing data analysis on Hadoop clusters, including Scalding, Scoobi, and Scrunch. It provides a brief overview of each option and code examples. While the options are similar, the author notes they are working to develop a common API. The key takeaways are that functional programming is well-suited for mapreduce problems and using Scalding, Scoobi, or Scrunch can increase productivity over traditional mapreduce.

![VANILLA MAPREDUCE

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 9

package org.myorg;

import java.io.IOException;

import java.util.*;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mapreduce.*;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class WordCount {

public static class Map extends Mapper<LongWritable, Text, Text,

IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(LongWritable key, Text value, Context context) throws

IOException, InterruptedException {

String line = value.toString();

StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

context.write(word, one);

}

}

}

public static class Reduce extends Reducer<Text, IntWritable, Text,

IntWritable> {

public void reduce(Text key, Iterable<IntWritable> values, Context

context)

throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

context.write(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = new Job(conf, "wordcount");

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setMapperClass(Map.class);

job.setReducerClass(Reduce.class);

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.waitForCompletion(true);

}](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-9-320.jpg)

![SOME SCRUNCH CODE

val pipeline = new Pipeline[WordCountExample]

def wordCount(fileName: String) = {

pipeline.read(from.textFile(fileName))

.flatMap(_.toLowerCase.split("W+"))

.count

}

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 19](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-19-320.jpg)

![map

•Does what you think it does

scala> val mylist = List(1,2,3)

mylist: List[Int] = List(1, 2, 3)

scala> mylist.map(x => x + 5)

res0: List[Int] = List(6, 7, 8)

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 22](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-22-320.jpg)

![flatMap

•Kind of like map

•Does a map then a flatten

scala> val mystrings = List("hello there", "hadoop summit")

mystrings: List[String] = List(hello there, hadoop summit)

scala> mystrings.map(x => x.split(" "))

res5: List[Array[String]] =

List(Array(hello, there), Array(hadoop, summit))

scala> mystrings.map(x => x.split(" ")).flatten

res6: List[String] = List(hello, there, hadoop, summit)

scala> mystrings.flatMap(x => x.split(" "))

res7: List[String] = List(hello, there, hadoop, summit)

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 23](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-23-320.jpg)

![filter

•Pretty obvious

scala> mystrings.filter(x => x.contains("hadoop"))

res8: List[String] = List(hadoop summit)

•Takes a predicate function

•Use this a lot

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 24](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-24-320.jpg)

![groupBy

•Puts items together using an arbitrary function

scala> mylist.groupBy(x => x % 2 == 0)

res9: scala.collection.immutable.Map[Boolean,List[Int]] =

Map(false -> List(1, 3), true -> List(2))

scala> mylist.groupBy(x => x % 2)

res10: scala.collection.immutable.Map[Int,List[Int]] =

Map(1 -> List(1, 3), 0 -> List(2))

scala> mystrings.groupBy(x => x.length)

res11: scala.collection.immutable.Map[Int,List[String]] =

Map(11 -> List(hello there), 13 -> List(hadoop summit))

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 25](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-25-320.jpg)

![foldLeft

•This is a fancy reduce

•Signature: (z: B)((B,T) => B

•The input z is called the accumulator

scala> mylist.foldLeft(Set[Int]())((s,x) => s + x)

res15: scala.collection.immutable.Set[Int] =

Set(1, 2, 3)

scala> mylist.foldLeft(List[Int]())((xs, x) => x :: xs)

res16: List[Int] = List(3, 2, 1)

•Like reduce, this happens on the values after a groupBy

•Called slightly different things in Scoobi/Scrunch

SHOULD I USE SCALDING OR SCOOBI OR

SCRUNCH? 27](https://image.slidesharecdn.com/severs-june26-255pm-room210a-v2-130709113105-phpapp01/85/Should-I-Use-Scalding-or-Scoobi-or-Scrunch-27-320.jpg)