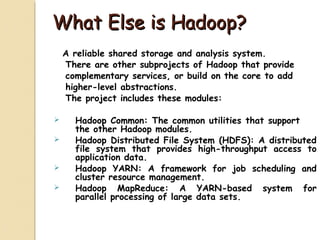

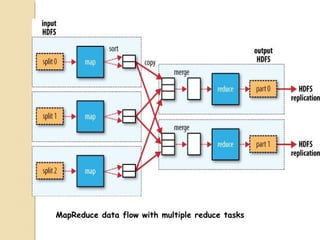

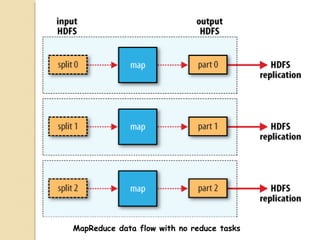

Hadoop is a framework for distributed storage and processing of large datasets across clusters of commodity hardware. It has two core parts: HDFS for fault-tolerant storage and MapReduce as a programming model for distributed computing. HDFS splits files into blocks, spreads them across the machines of a cluster, and replicates each block so that the failure of a single machine does not lose data. MapReduce processes large datasets in parallel by splitting the work into independent map tasks whose outputs are then combined by reduce tasks. Together they provide reliable, scalable storage and analysis of very large amounts of data.
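The MapReduce model described above can be illustrated with a word count. The following is a minimal single-process sketch in plain Python, not Hadoop's actual API: the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical, and the list of strings stands in for input splits that a real cluster would read from HDFS.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(split: str) -> Iterator[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key, the step that sits between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values for one key into a single count."""
    return key, sum(values)


def word_count(splits: List[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over all splits."""
    # Each split is mapped independently; on a cluster these calls
    # could run in parallel on different machines.
    mapped = [pair for split in splits for pair in map_phase(split)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big", "data cluster"]))
```

Because each call to `map_phase` depends only on its own split, and each call to `reduce_phase` only on the values for its own key, the tasks are independent of one another. That independence is what lets a framework such as Hadoop distribute them across machines; this sketch simply runs them one after another.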