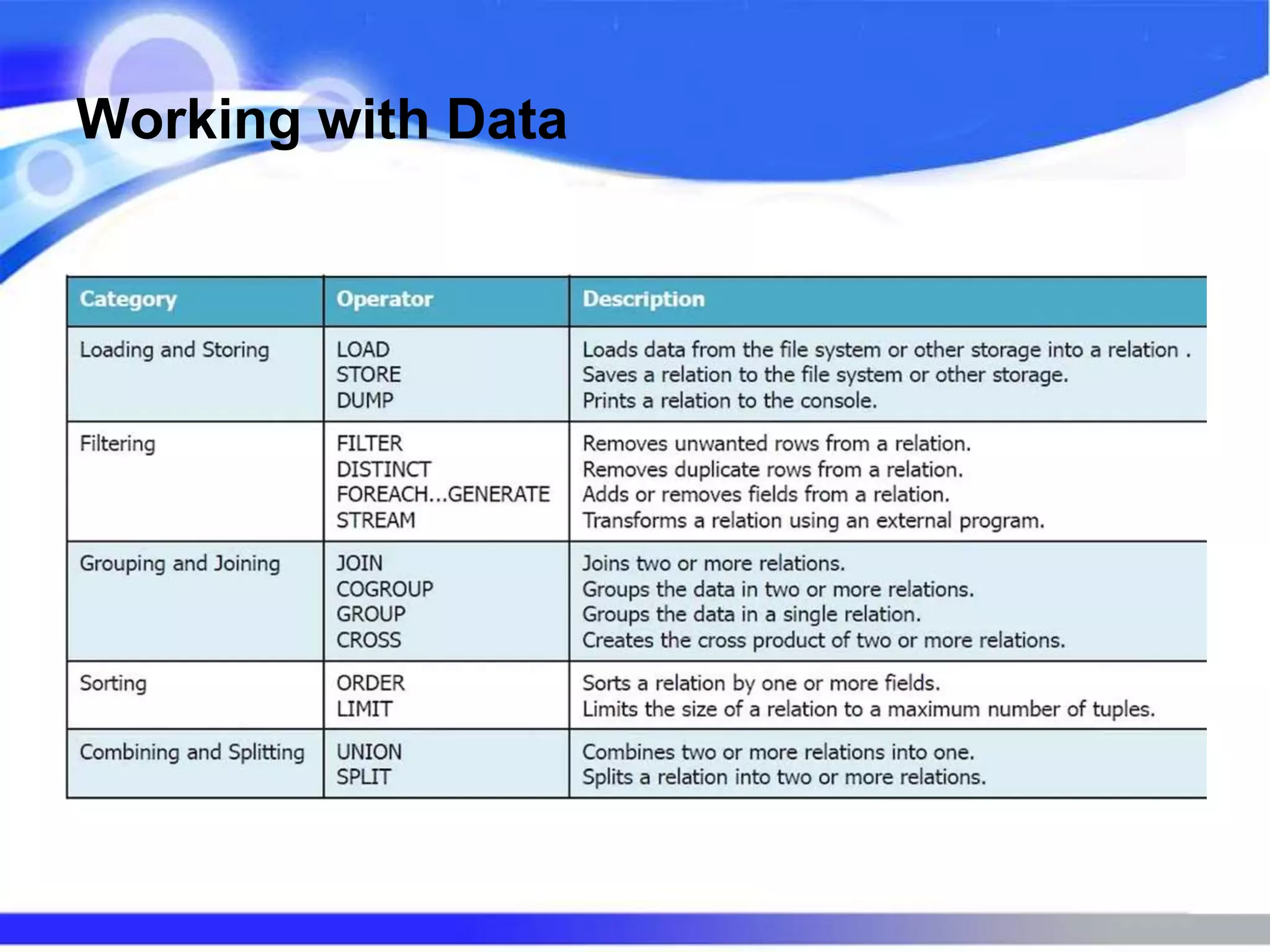

Apache Pig is a high-level platform for creating MapReduce programs on Hadoop. Programs are written in its data flow language, Pig Latin, which is designed for processing large datasets. Pig allows rapid development without writing Java, and it provides its own data types, extensibility through user-defined functions, and built-in operations such as join and filter. Pig is best suited to ETL processes, research on raw data, and iterative processing; it may not be suitable for unstructured data or performance-critical tasks.
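As a rough illustration of the filter and join operations mentioned above, here is a minimal Pig Latin sketch. The file names (`users.tsv`, `orders.tsv`) and field names are hypothetical, and the inputs are assumed to be tab-separated, which is the default for Pig's loader.

```pig
-- Hypothetical inputs: file names and field names are assumptions for illustration.
users  = LOAD 'users.tsv'  AS (id:int, name:chararray, age:int);
orders = LOAD 'orders.tsv' AS (user_id:int, amount:double);

-- FILTER keeps only the rows that satisfy the condition.
adults = FILTER users BY age >= 18;

-- JOIN matches rows from both relations on the given keys.
joined = JOIN adults BY id, orders BY user_id;

-- DUMP triggers execution and prints the result.
DUMP joined;
```

Each statement names an intermediate relation, so the script reads as a data flow rather than as hand-written map and reduce functions.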

![Apache Pig slide: bag and map data types](https://image.slidesharecdn.com/5-170917100629/75/Apache-PIG-15-2048.jpg)

• Bag {(12.5,hello world,-2),(2.87,bye world,10)}

A bag is an unordered collection of tuples.

A bag is represented by tuples separated by commas, all enclosed by curly braces.

• Map [key#value]

A map is a set of key/value pairs.

Keys must be unique and must be strings (chararray).

The value can be any type.
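The bag and map types described above can also be declared in a load schema and then unpacked. The following is a sketch only: the file name `records.txt` and every field name are hypothetical, and the input is assumed to store bags and maps in Pig's text notation.

```pig
-- Hypothetical input file and field names, for illustration only.
records = LOAD 'records.txt' AS (
    id:int,
    items:bag{t:tuple(score:double, label:chararray, delta:int)},
    props:map[]
);

-- FLATTEN un-nests the bag, producing one output row per tuple in it.
rows = FOREACH records GENERATE id, FLATTEN(items);

-- The # operator looks up a map value by its chararray key.
colors = FOREACH records GENERATE id, props#'color' AS color;

DUMP rows;
DUMP colors;
```

Because a bag is unordered, the order of the rows produced by `FLATTEN` should not be relied on.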