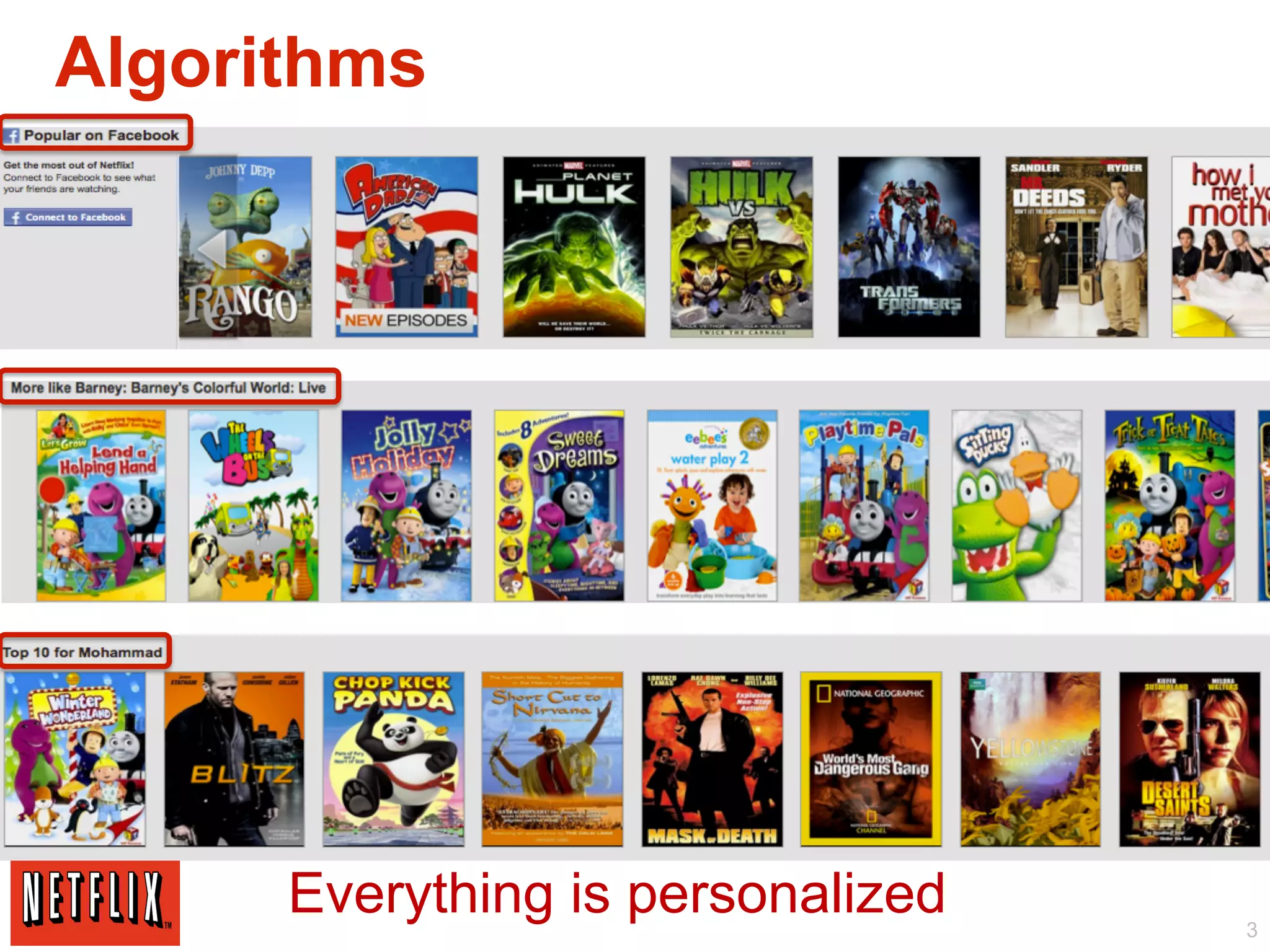

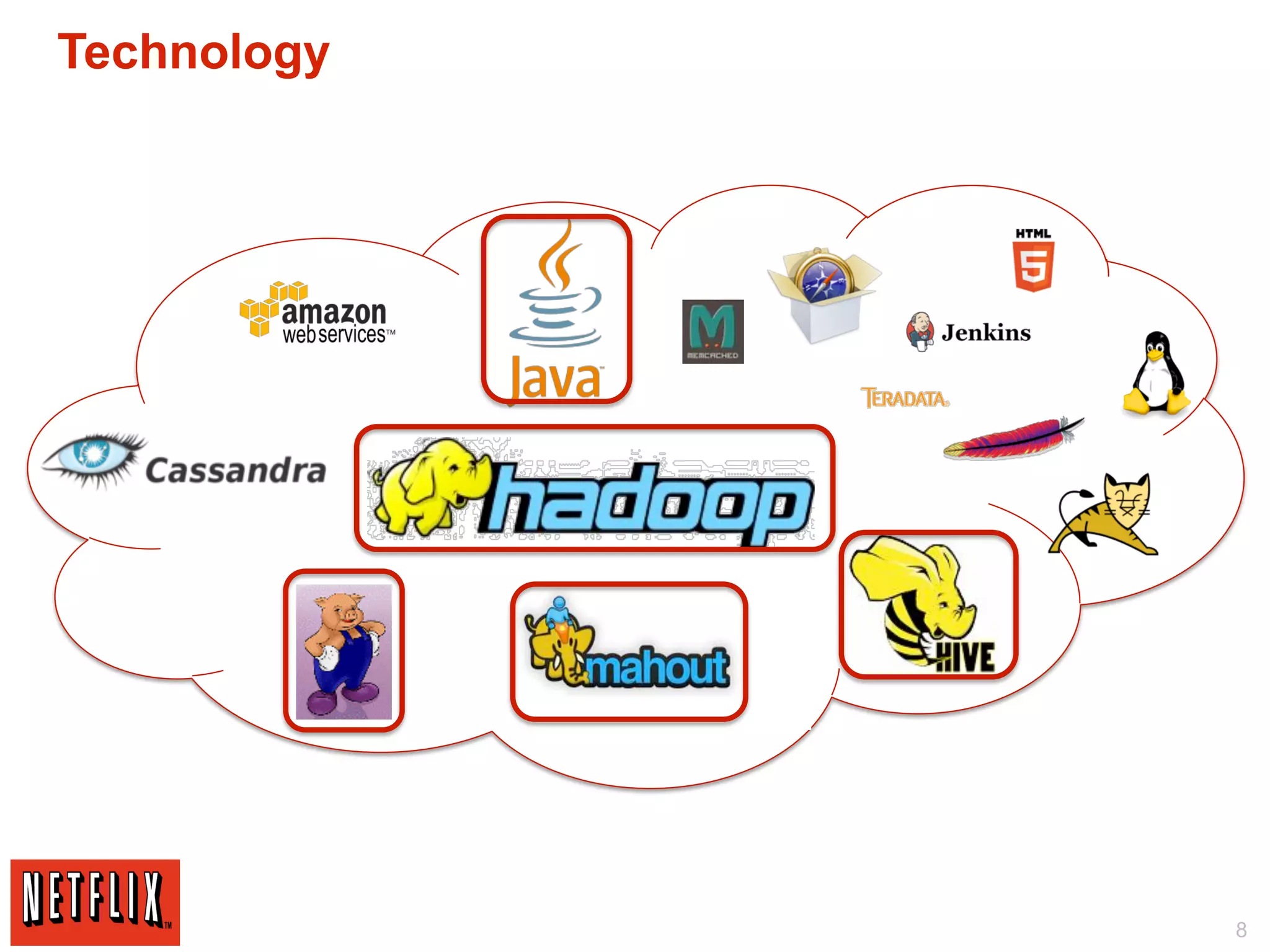

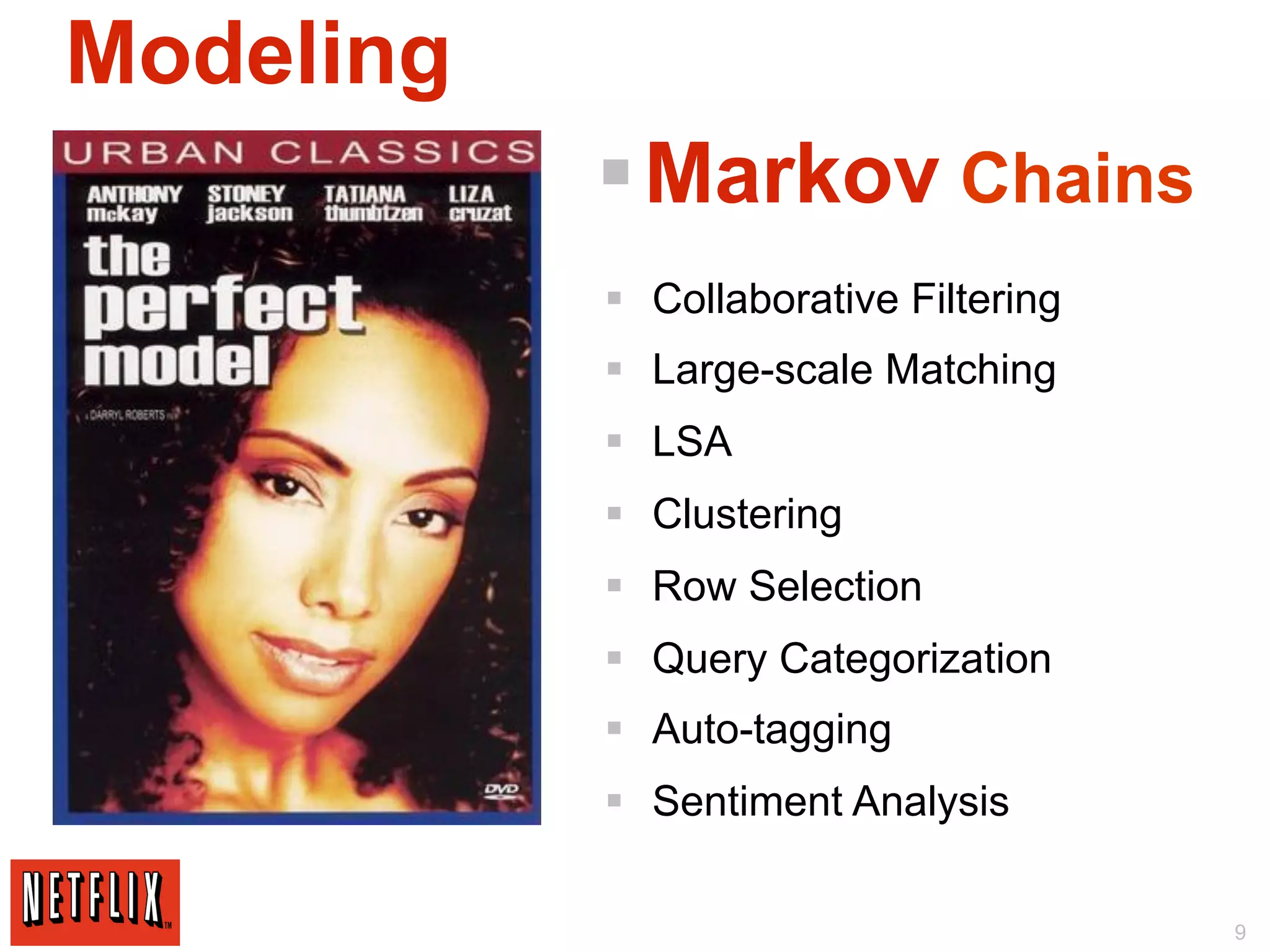

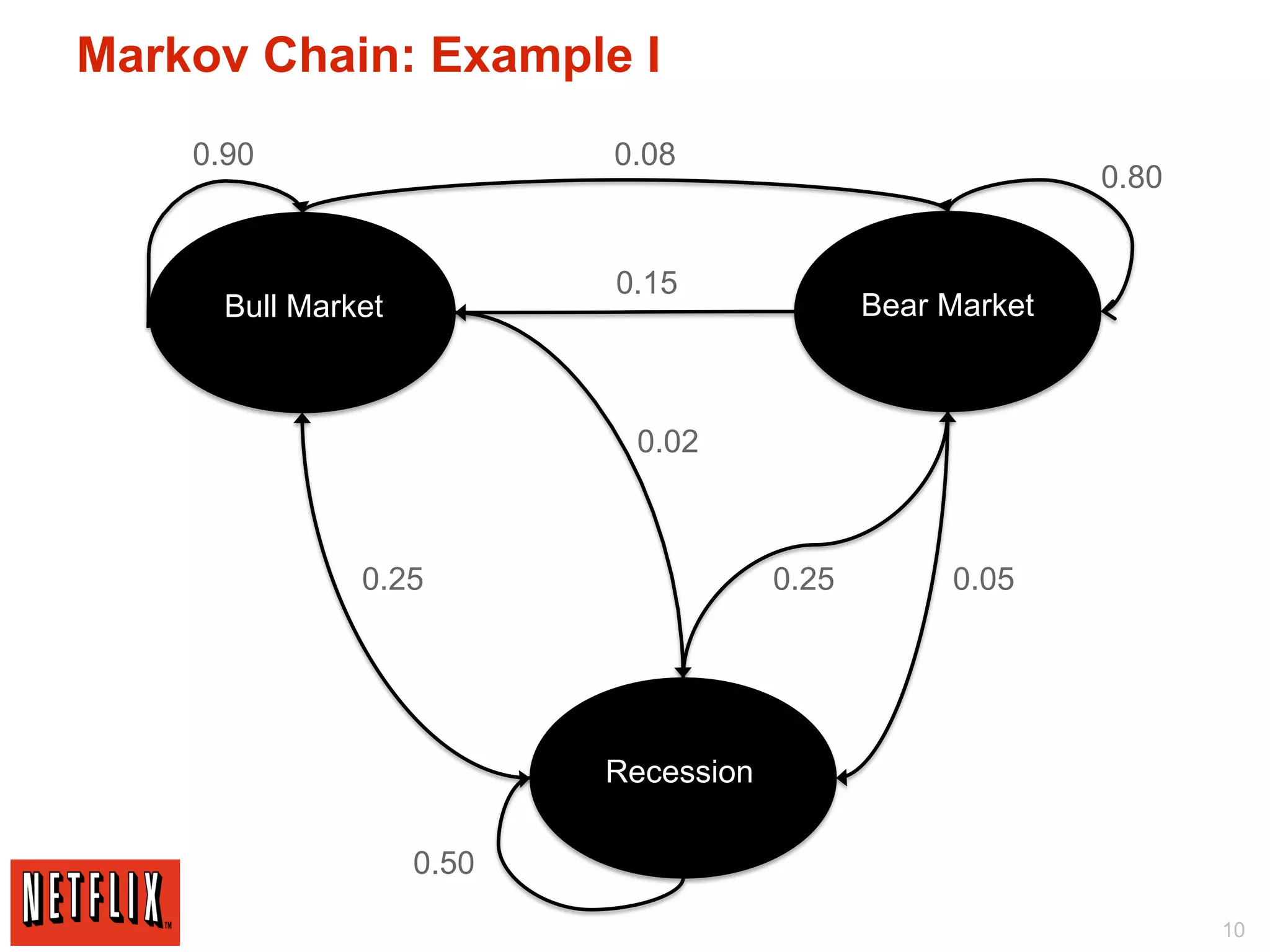

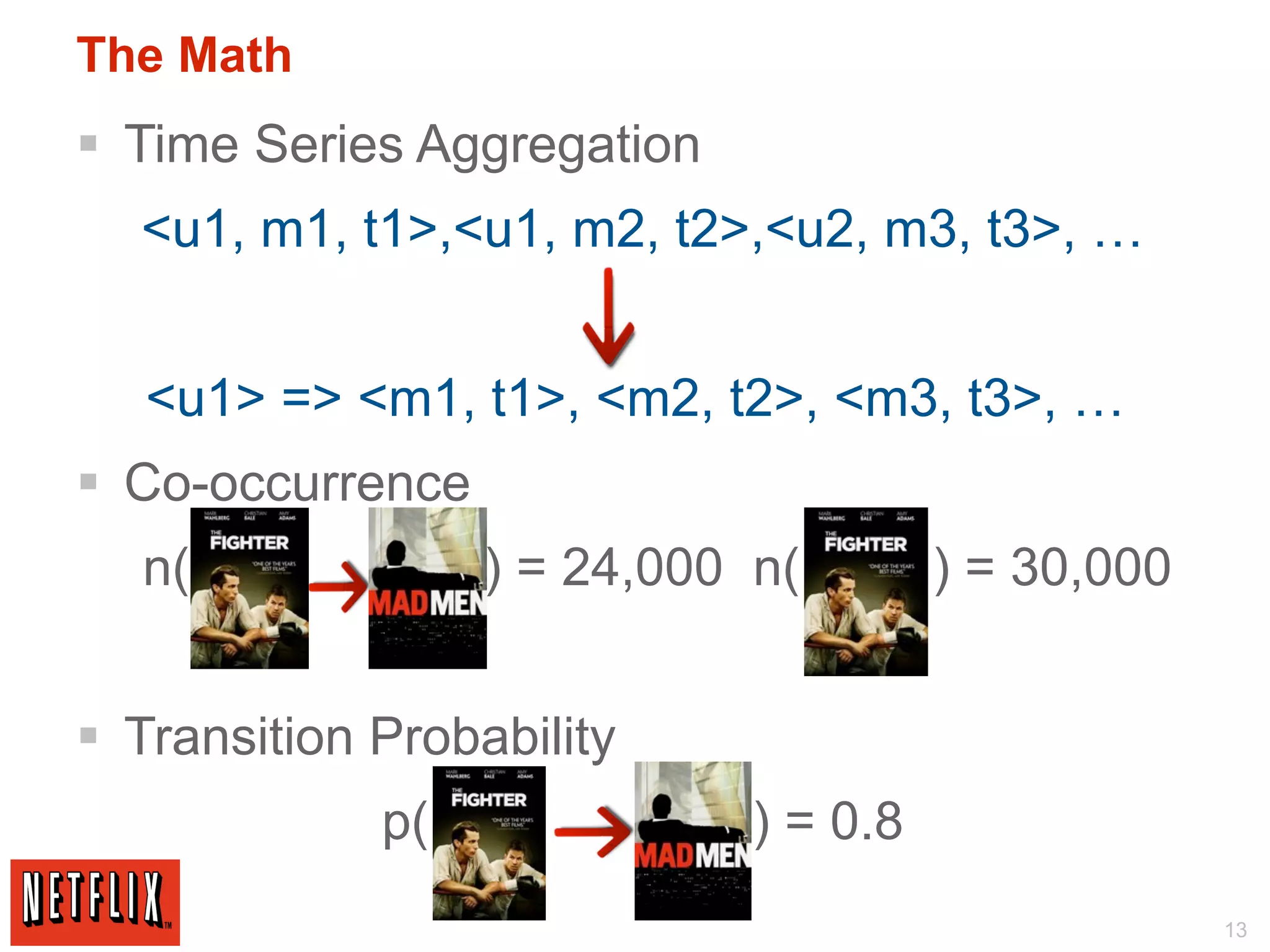

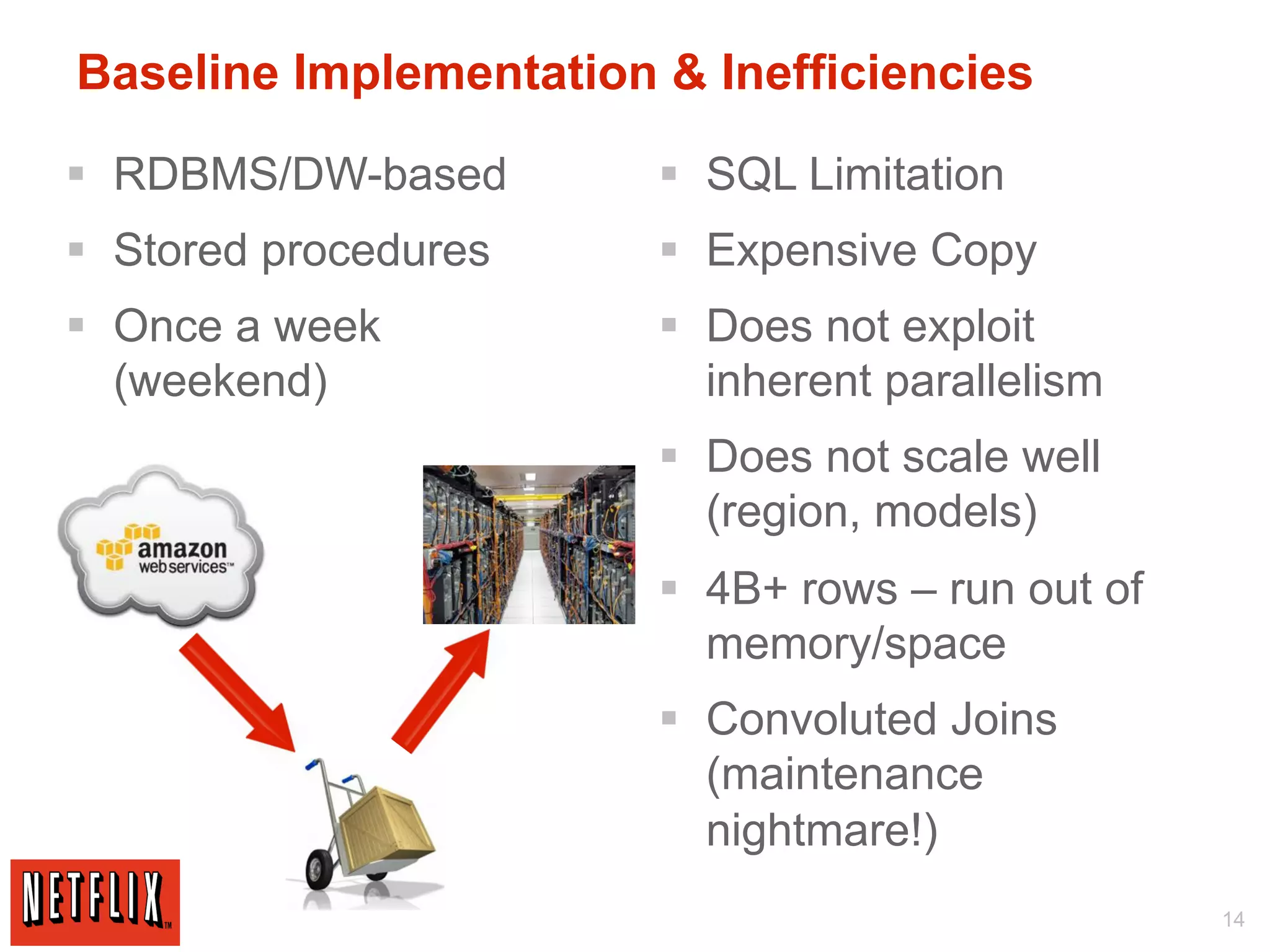

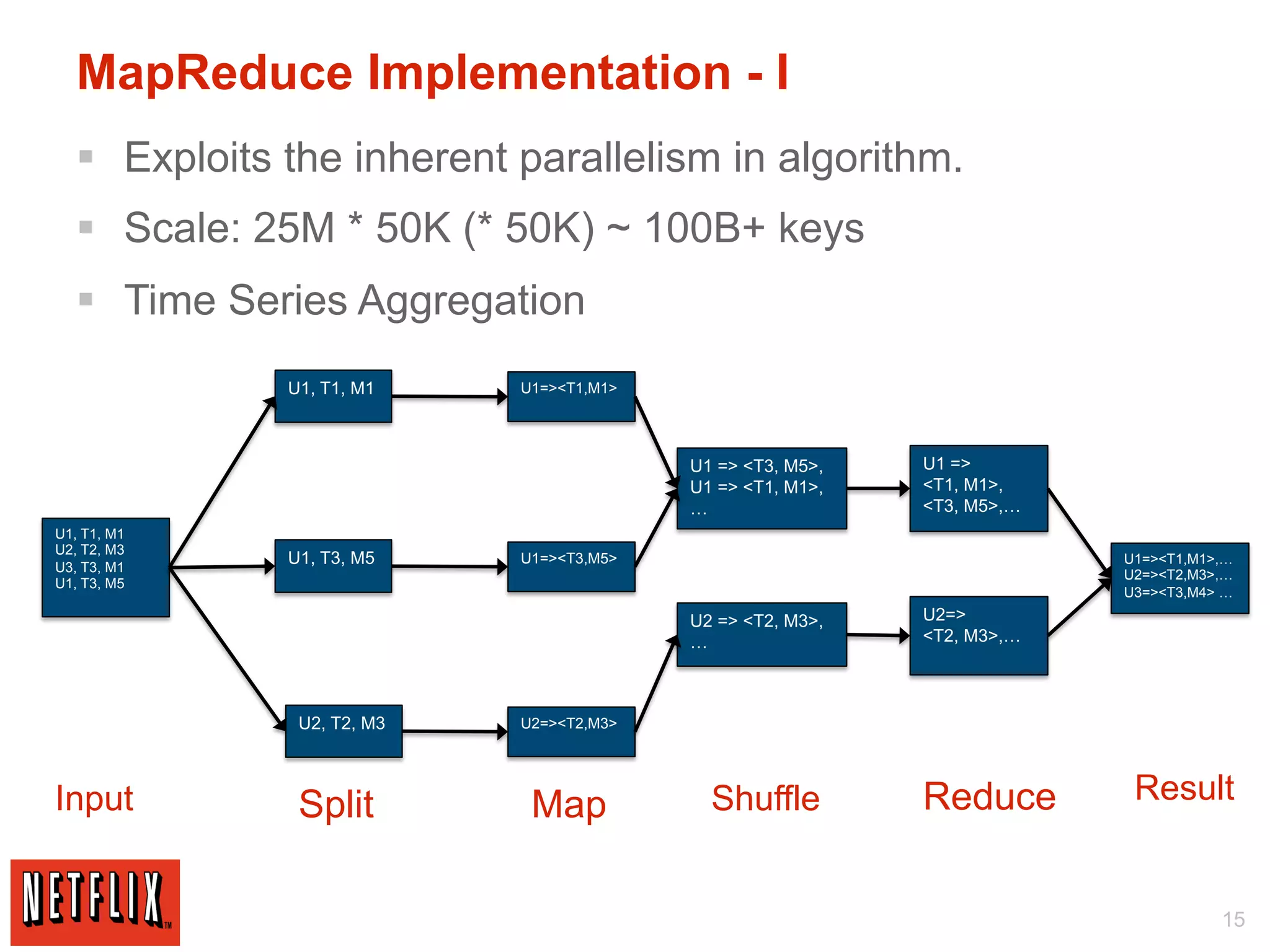

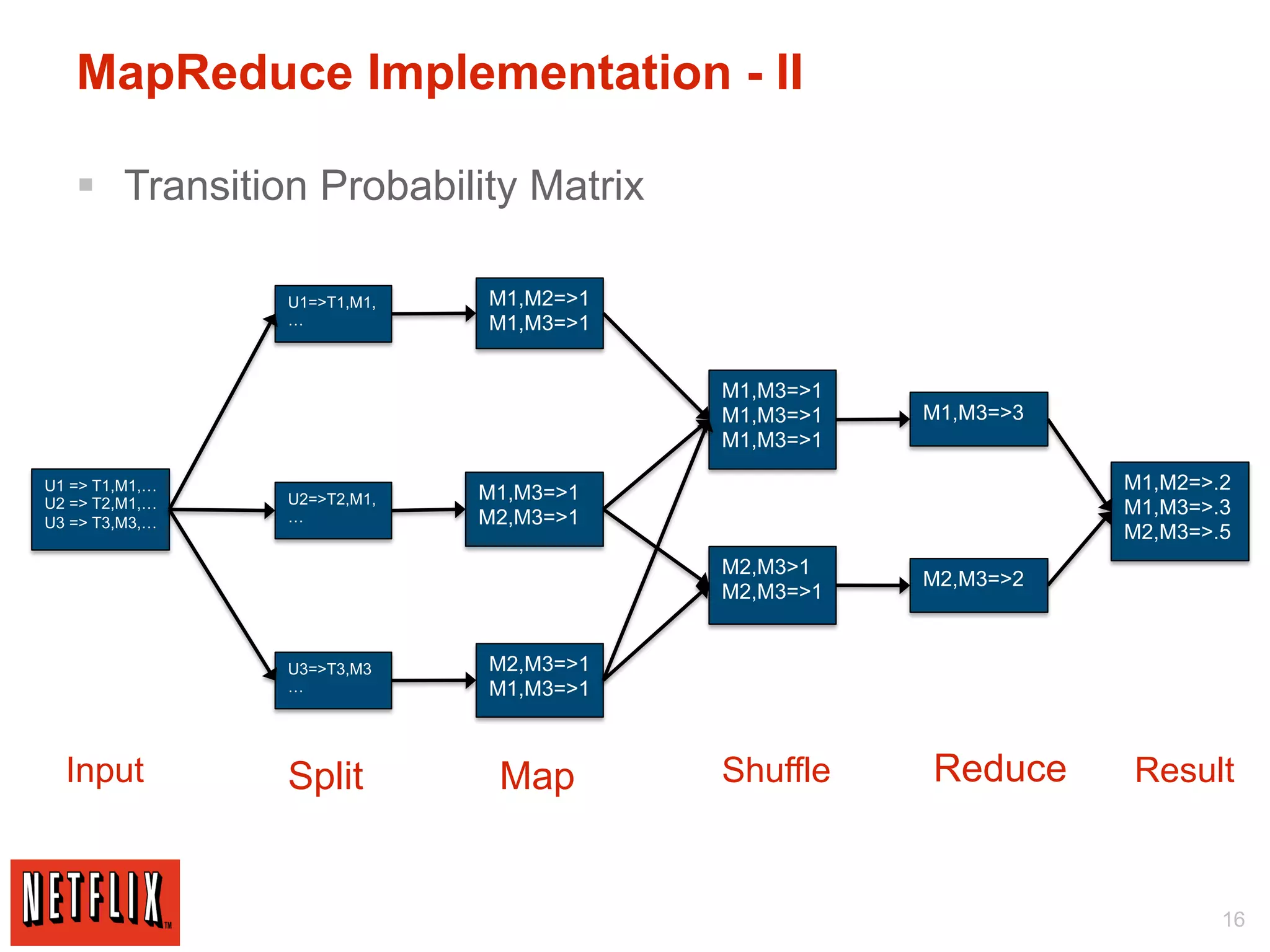

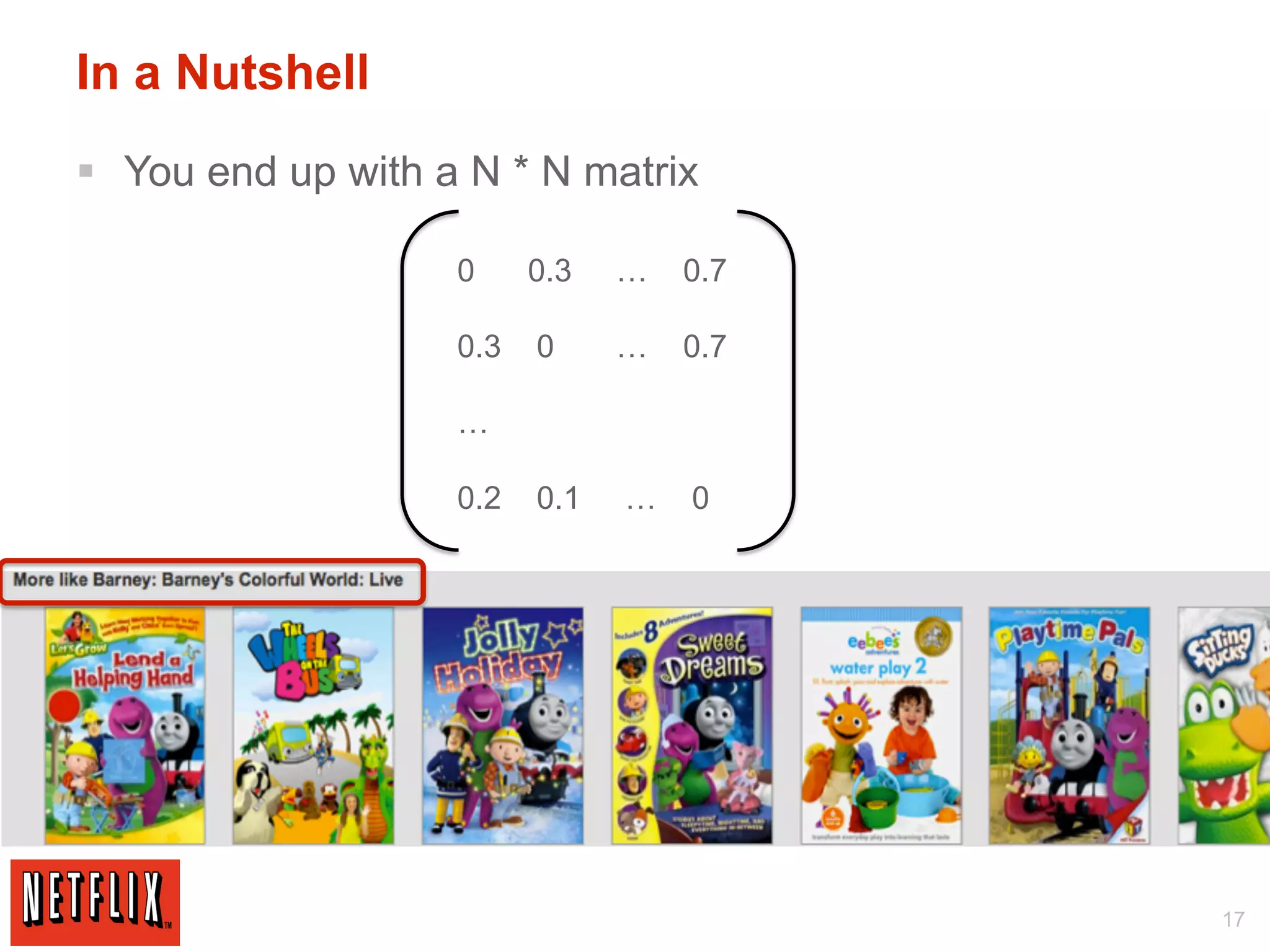

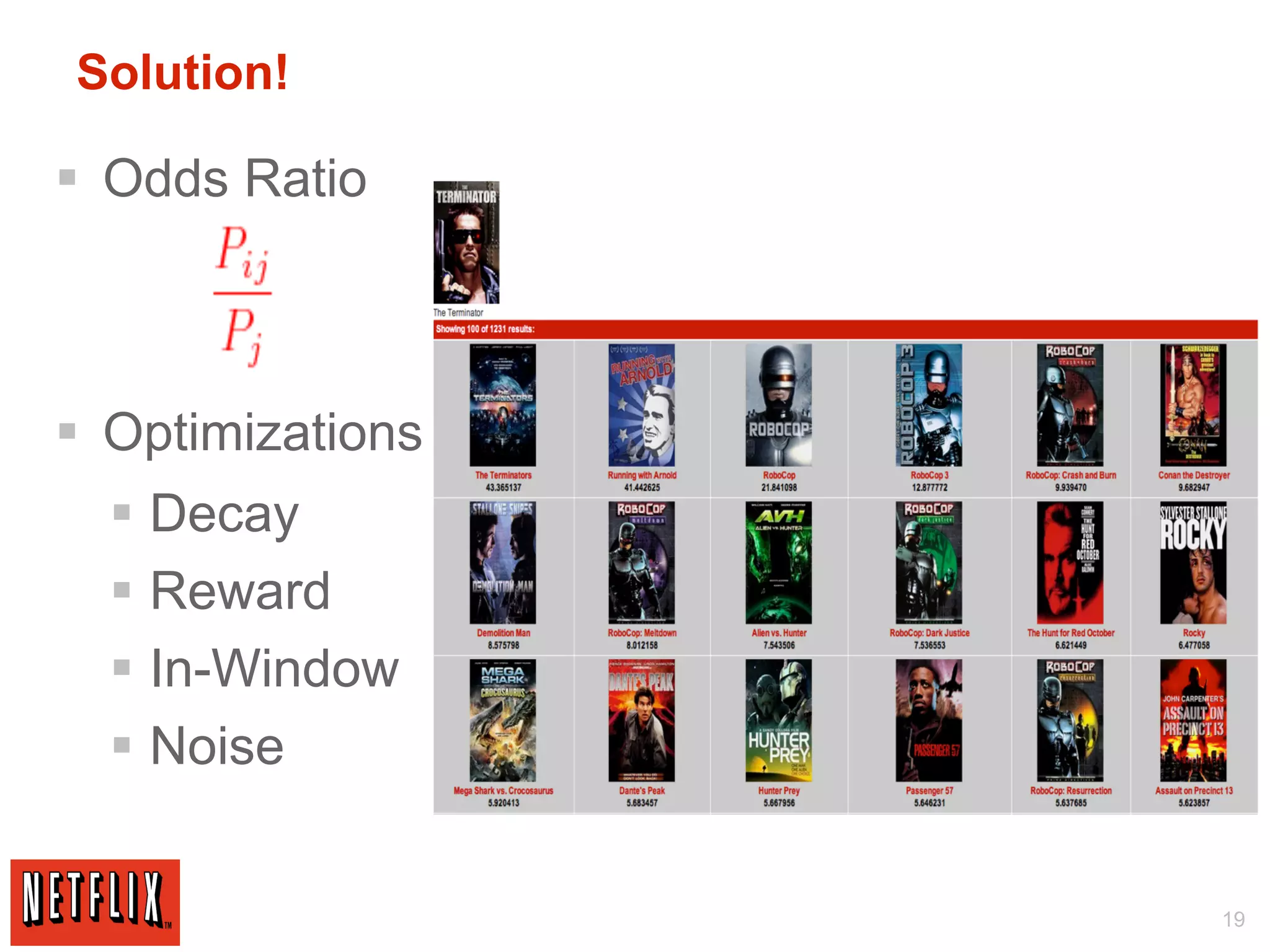

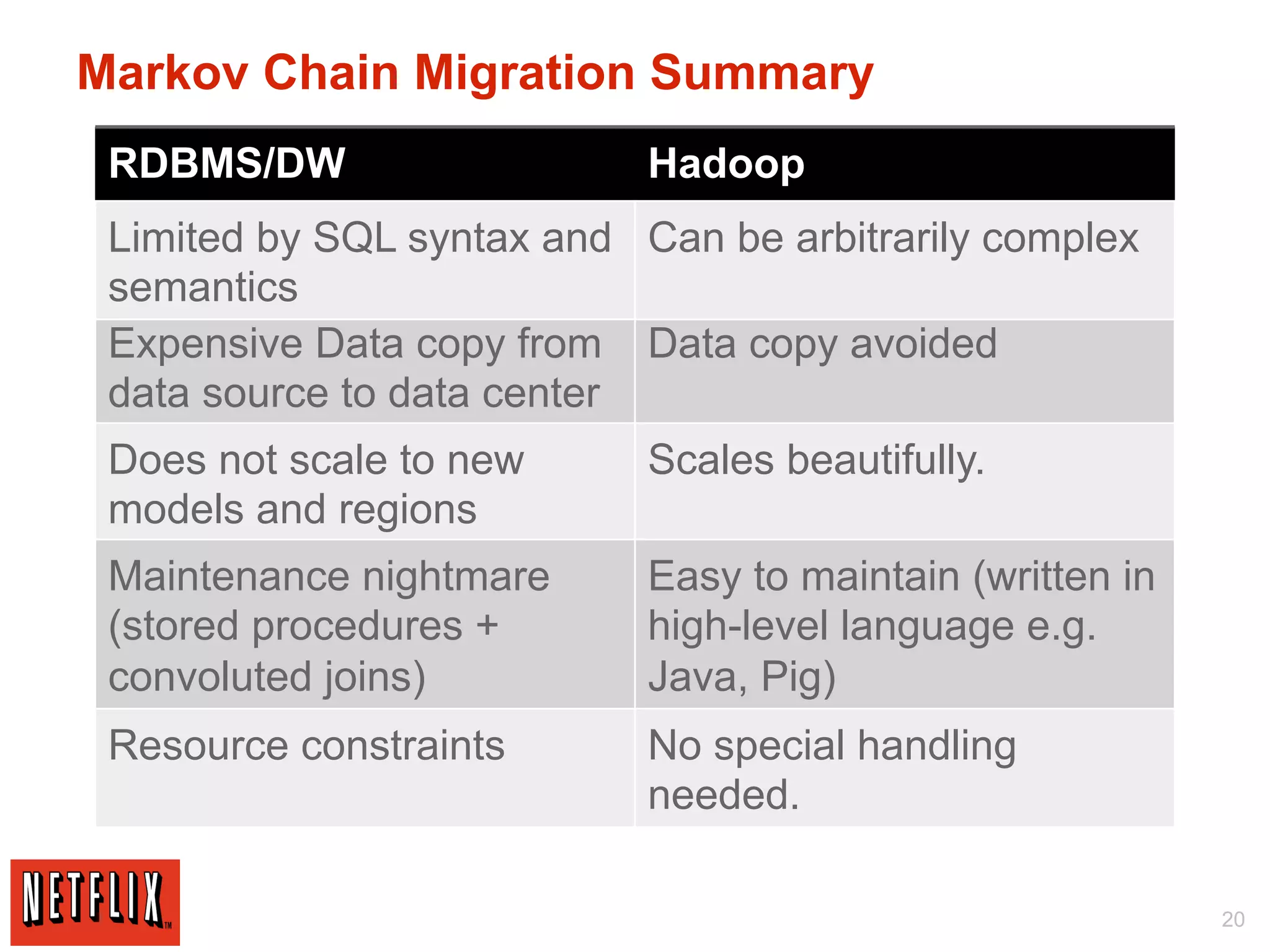

The document describes how Netflix uses Hadoop and cloud computing to analyze large volumes of social data. Netflix collects ratings, searches, plays, and other activity from 25 million subscribers, amounting to more than 4 million ratings and 3 million searches per day. Traditional databases struggled to process this volume and did not scale well. Netflix therefore migrated its algorithms to Hadoop, which supports arbitrarily complex models and scales easily to new models and regions. The techniques applied to these large datasets include Markov chains, collaborative filtering, and machine learning. According to the document, the migration from traditional databases to Hadoop improved performance, scalability, and maintainability.
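
As a rough illustration of one technique named above, the following sketch estimates first-order Markov chain transition probabilities from play sequences, structured as a map step and a reduce step in the style of a Hadoop job. It is not Netflix's implementation: the function names (`map_transitions`, `reduce_counts`, `transition_probabilities`) and the sample sessions are hypothetical, and the code runs in a single Python process rather than on a cluster.

```python
from collections import Counter, defaultdict


def map_transitions(session):
    """Map step: emit ((current, next), 1) for each consecutive pair of plays."""
    for current_title, next_title in zip(session, session[1:]):
        yield (current_title, next_title), 1


def reduce_counts(pairs):
    """Reduce step: sum the emitted counts for each (current, next) key."""
    counts = Counter()
    for key, value in pairs:
        counts[key] += value
    return counts


def transition_probabilities(sessions):
    """Normalize transition counts by the total transitions leaving each title."""
    pairs = (pair for session in sessions for pair in map_transitions(session))
    counts = reduce_counts(pairs)

    totals = defaultdict(int)
    for (current_title, _), n in counts.items():
        totals[current_title] += n

    return {(a, b): n / totals[a] for (a, b), n in counts.items()}


# Hypothetical play sessions; each list is one subscriber's ordered plays.
sessions = [["A", "B", "C"], ["A", "B"], ["A", "C"]]
probs = transition_probabilities(sessions)
```

Because the map step treats each session independently and the reduce step only sums counts per key, the same logic can be partitioned across many machines, which is the property that makes this kind of model a natural fit for Hadoop.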