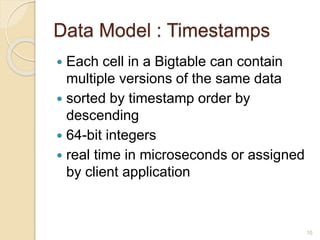

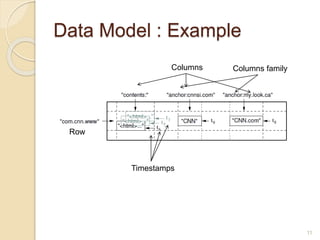

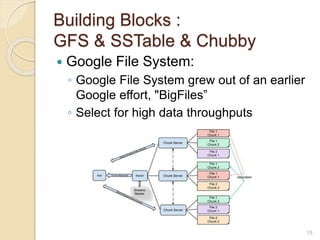

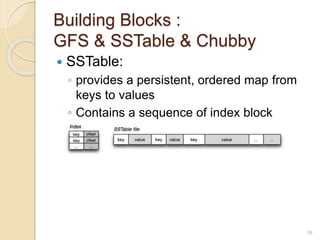

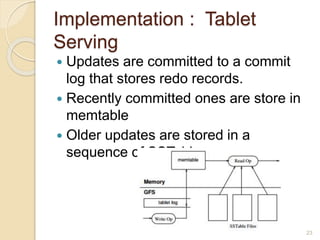

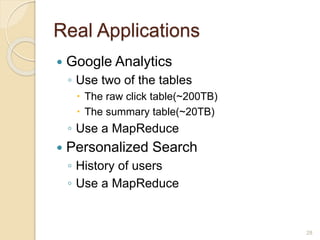

Bigtable is a distributed storage system designed to manage large amounts of structured data across thousands of commodity servers. It exposes a simple "big table" abstraction of rows and columns, which can be extended by adding further columns and by storing multiple timestamped versions of each cell. Underneath, it stores its data in GFS, Google's distributed file system, and relies on a tablet server architecture and the SSTable file format to sustain millions of reads and writes per second while scaling dynamically.
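
To make the row/column/timestamp abstraction concrete, here is a minimal in-memory sketch in Python. It is an illustration only, not Bigtable's API: the class `ToyTable`, its method names, and the sample keys and values are all hypothetical, and nothing here models GFS, tablet servers, or SSTables.

```python
from collections import defaultdict


class ToyTable:
    """Toy model of a (row, column, timestamp) -> value map (hypothetical, not the real API)."""

    def __init__(self):
        # row key -> column key -> list of (timestamp, value), newest first
        self._rows = defaultdict(lambda: defaultdict(list))

    def put(self, row, column, timestamp, value):
        """Store one more version of a cell; new columns need no schema change."""
        versions = self._rows[row][column]
        versions.append((timestamp, value))
        versions.sort(key=lambda tv: tv[0], reverse=True)

    def get(self, row, column, timestamp=None):
        """Return the newest value at or before `timestamp` (latest if None)."""
        versions = self._rows.get(row, {}).get(column, [])
        for ts, value in versions:
            if timestamp is None or ts <= timestamp:
                return value
        return None

    def scan(self, start_row, end_row):
        """Yield (row, latest cell values) for start_row <= row < end_row, in key order."""
        for row in sorted(self._rows):
            if start_row <= row < end_row:
                yield row, {col: vs[0][1] for col, vs in self._rows[row].items()}


# Illustrative data: the keys and values below are made up for this sketch.
table = ToyTable()
table.put("com.cnn.www", "contents:", 3, "<html>v3")
table.put("com.cnn.www", "contents:", 5, "<html>v5")
table.put("com.cnn.www", "anchor:cnnsi.com", 9, "CNN")
table.put("org.example", "contents:", 1, "<html>ex")

latest = table.get("com.cnn.www", "contents:")                # newest version
as_of_4 = table.get("com.cnn.www", "contents:", timestamp=4)  # version visible at time 4
com_rows = list(table.scan("com.", "com.~"))                  # range scan over sorted row keys
```

Keeping rows in sorted key order is what makes the range scan cheap to express, and keeping several timestamped versions per cell is what lets a reader ask for the value as of an earlier time.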