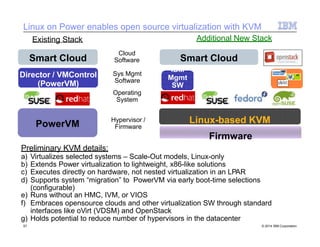

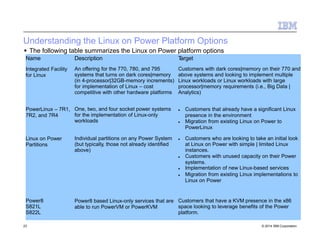

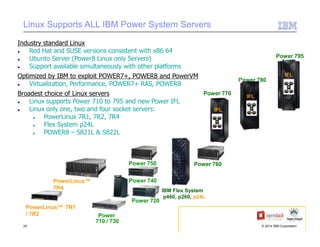

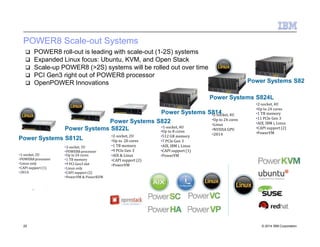

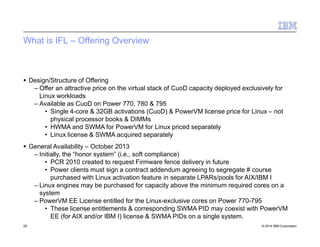

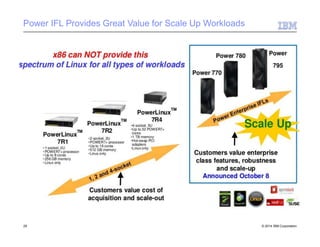

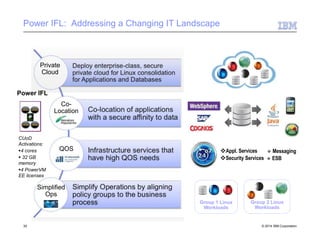

This slide presentation covers running Linux on IBM Power systems. It discusses why Linux is widely used, best practices for installing and configuring Linux on Power systems, and options for deploying Linux workloads, including the Integrated Facility for Linux (IFL). The IFL lets customers activate unused cores and memory on Power 770, 780, and 795 systems for Linux-only workloads, at a lower cost than on other hardware platforms.

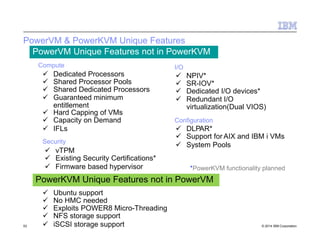

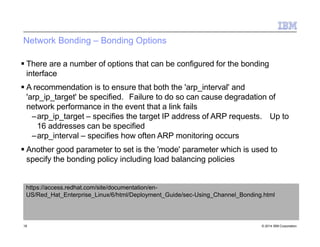

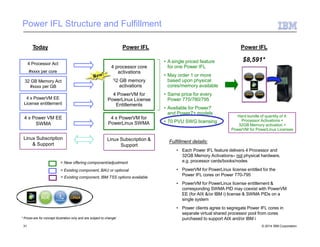

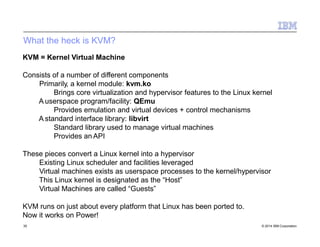

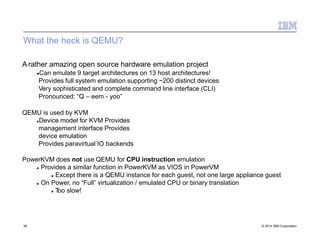

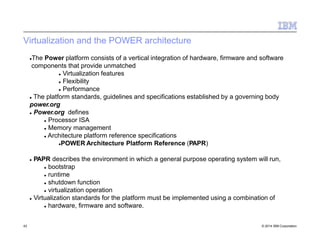

![Establishing a Local Package Repository

21 © 2014 IBM Corporation

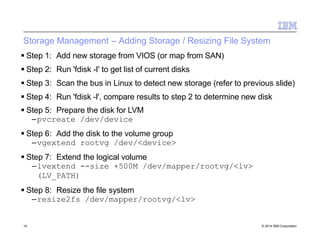

Step 1: Create an ISO image from the installation media

dd if=/dev/sr0 of=/tmp/RHEL65.iso

Step 2: Add mount information to the /etc/fstab file

/tmp/RHEL65.iso /media/RHEL65 iso9660 loop,ro,auto 0 0

Step 3: Mount the ISO

mount /media/RHEL65

Step 4: Create the repository definition file in /etc/yum.repos.d/

–[RHEL65]

–name=Local RedHat 6.5 Repository

–baseurl=file:///media/RHEL65

–gpgkey=file:///media/RHEL65/RPM-GPG-key-redhat-

release

–gpgcheck=1

–enabled=1](https://image.slidesharecdn.com/presentation-linuxonpower-151113091830-lva1-app6891/85/Presentation-linux-on-power-21-320.jpg)
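Steps 2 and 4 can be scripted. The following is a minimal sketch, assuming a POSIX shell. The function names `fstab_entry` and `write_local_repo` and the optional output-directory argument are illustrative and do not come from the slides. The key file name uses the upper-case `RPM-GPG-KEY-redhat-release` spelling normally found on Red Hat media; check it against the mounted ISO.

```shell
#!/bin/sh
# Sketch only: helper functions for the local-repository steps.
# Function names and parameters are hypothetical, not from the slides.

# fstab_entry ISO_PATH MOUNT_POINT
# Prints the /etc/fstab line that loop-mounts the ISO read-only.
fstab_entry() {
    printf '%s %s iso9660 loop,ro,auto 0 0\n' "$1" "$2"
}

# write_local_repo REPO_ID MOUNT_POINT [REPO_DIR]
# Writes REPO_DIR/REPO_ID.repo (default directory: /etc/yum.repos.d).
write_local_repo() {
    repo_id=$1
    mount_point=$2
    repo_dir=${3:-/etc/yum.repos.d}

    # Both the repository id and the mount point are required.
    if [ -z "$repo_id" ] || [ -z "$mount_point" ]; then
        echo "usage: write_local_repo REPO_ID MOUNT_POINT [REPO_DIR]" >&2
        return 1
    fi

    # mount_point is absolute, so file://$mount_point yields file:///...
    cat > "$repo_dir/$repo_id.repo" <<EOF
[$repo_id]
name=Local $repo_id Repository
baseurl=file://$mount_point
gpgkey=file://$mount_point/RPM-GPG-KEY-redhat-release
gpgcheck=1
enabled=1
EOF
}
```

A possible sequence, run as root: `fstab_entry /tmp/RHEL65.iso /media/RHEL65 >> /etc/fstab`, then `mkdir -p /media/RHEL65`, `mount /media/RHEL65`, and `write_local_repo RHEL65 /media/RHEL65`. The mount point must exist before Step 3, which the slide does not show. Afterwards, `yum repolist` should list the new repository.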

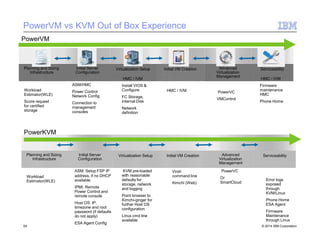

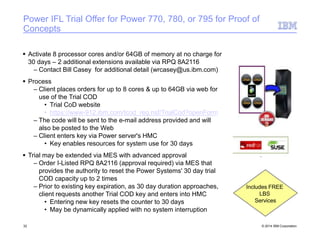

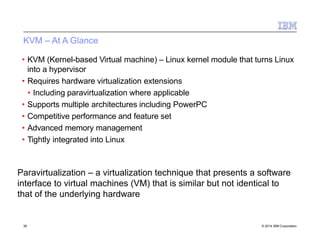

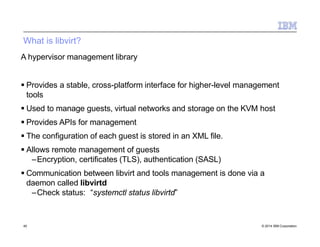

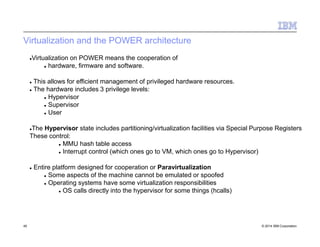

![Slide 44: Power Systems Software Stack](https://image.slidesharecdn.com/presentation-linuxonpower-151113091830-lva1-app6891/85/Presentation-linux-on-power-44-320.jpg)

Diagram labels: POWER7 Hardware, FSP, System Firmware, PowerVM Hypervisor (Hypervisor / System Firmware), [PAPR] Platform interfaces, Partition Firmware, OpenFirmware, VIOS, Operating System.

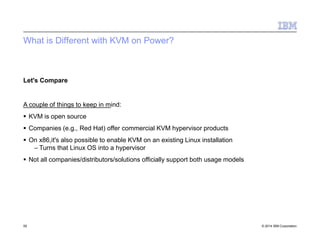

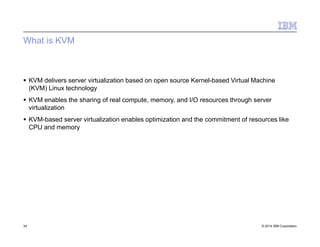

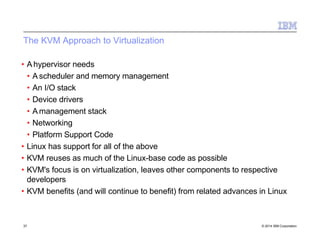

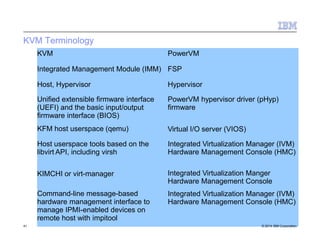

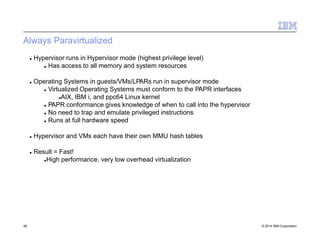

![Slide 48: Power Systems Software Stack with KVM](https://image.slidesharecdn.com/presentation-linuxonpower-151113091830-lva1-app6891/85/Presentation-linux-on-power-48-320.jpg)

Diagram labels: POWER8 Hardware, FSP, System Firmware, OPAL Firmware, PowerKVM (Hypervisor), qemu, [PAPR] Platform interfaces, Partition Firmware, SLOF, Operating System.
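From a running Linux system, one way to see which of the two stacks it sits on is the `platform` field of `/proc/cpuinfo`. The sketch below assumes that field reads `PowerNV` on a bare-metal OPAL system (such as a PowerKVM host) and `pSeries` inside a PAPR guest (a PowerVM LPAR or a KVM guest). The function names are illustrative and not from the slides.

```shell
#!/bin/sh
# Sketch only: classify a Power Linux system by its cpuinfo "platform" field.
# Function names are hypothetical; the file argument exists to ease testing.

# power_platform [CPUINFO_FILE]
# Prints the value of the first "platform" line (default: /proc/cpuinfo).
power_platform() {
    cpuinfo=${1:-/proc/cpuinfo}
    awk -F: '$1 ~ /^platform[ \t]*$/ {
        gsub(/^[ \t]+|[ \t]+$/, "", $2)   # trim surrounding whitespace
        print $2
        exit
    }' "$cpuinfo"
}

# describe_power_stack [CPUINFO_FILE]
# Maps the platform value to a short description of the software stack.
describe_power_stack() {
    case "$(power_platform "$1")" in
        PowerNV) echo "bare metal on OPAL firmware" ;;
        pSeries) echo "PAPR guest (PowerVM LPAR or KVM guest)" ;;
        *)       echo "unknown" ;;
    esac
}
```

Calling `describe_power_stack` with no argument reads the live `/proc/cpuinfo`. The `pSeries` value alone does not distinguish a PowerVM LPAR from a KVM guest, because both present the same PAPR interfaces to the operating system.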