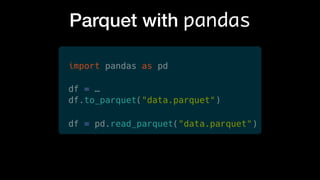

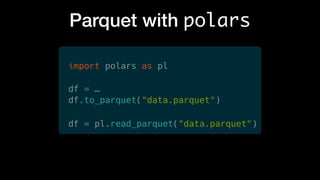

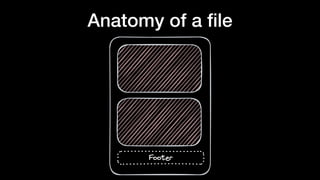

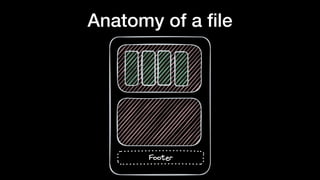

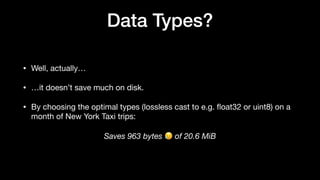

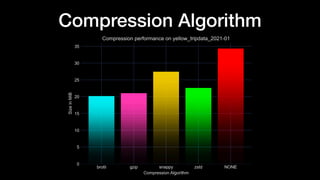

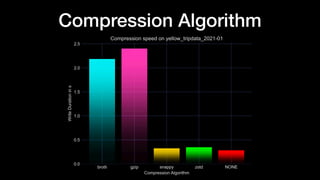

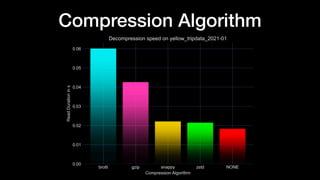

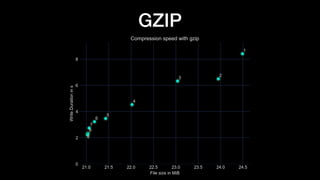

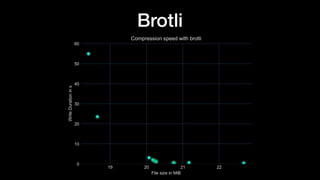

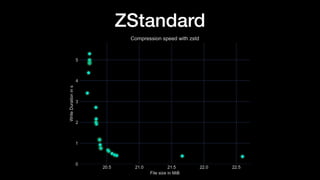

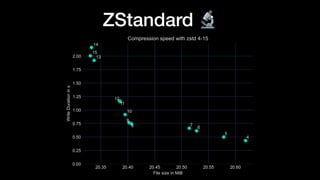

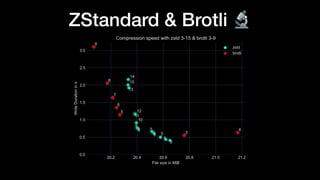

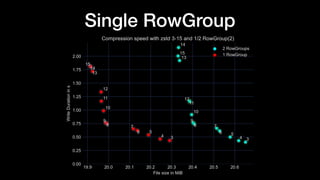

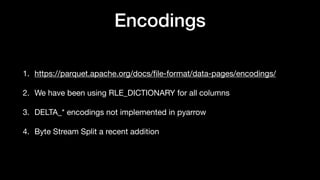

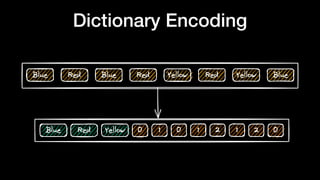

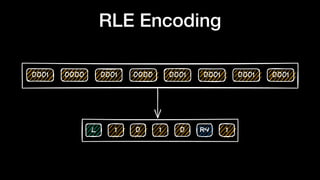

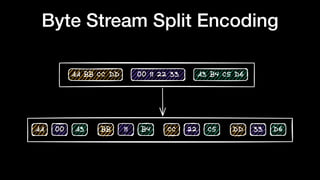

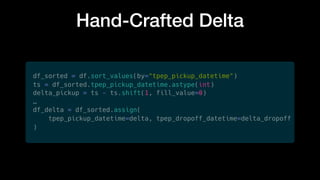

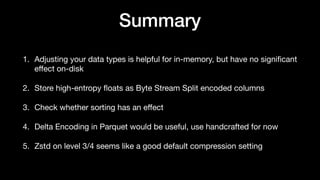

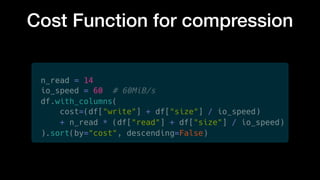

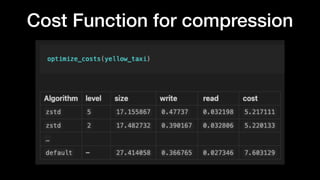

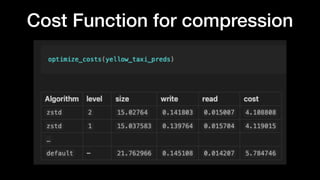

The document covers tuning Apache Parquet file settings (compression codec, column data types, and file layout) for efficient storage and access. The key recommendations are to use Zstandard (zstd) compression, tune row group sizes, and apply delta encoding to columns where it pays off. The practical examples, which use real datasets such as New York taxi trips among others, stress testing several configurations against your own data rather than relying on defaults.