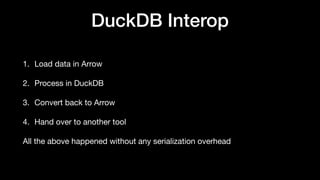

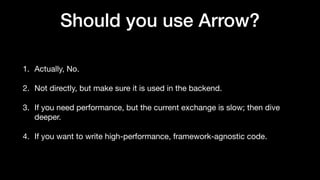

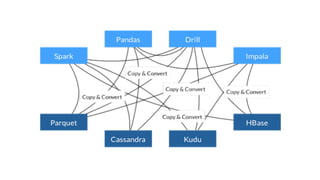

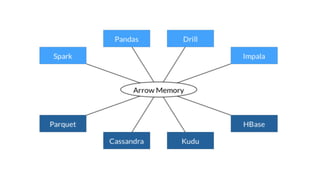

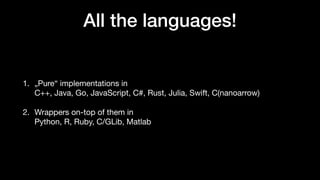

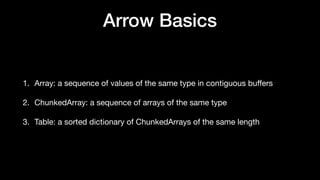

Apache Arrow is a language-independent, columnar in-memory data format that enables efficient data interchange between programming ecosystems, improving performance and reducing conversion overhead. It provides libraries for multiple languages and is widely used in databases, data engineering, machine learning libraries, and business intelligence applications. The document outlines Arrow's architecture, its impact on data processing speeds, and its interoperability with tools like DuckDB and Parquet.

![Arrow Basics: int array

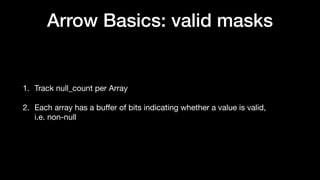

Python array: [1, null, 2, 4, 8]

Length: 5, Null count: 1

Validity bitmap buffer:

| Byte 0 (validity bitmap) | Bytes 1-63 |

|--------------------------|-----------------------|

| 00011101 | 0 (padding) |

Value Buffer:

| Bytes 0-3 | Bytes 4-7 | Bytes 8-11 | Bytes 12-15 | Bytes 16-19 | Bytes 20-63 |

|-------------|-------------|-------------|-------------|-------------|-----------------------|

| 1 | unspecified | 2 | 4 | 8 | unspecified (padding) |](https://image.slidesharecdn.com/pydatasofia-apachearrow-240712101735-d007f75b/85/PyData-Sofia-May-2024-Intro-to-Apache-Arrow-14-320.jpg)
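
The int array layout above can be reproduced by hand. The following is a minimal pure-Python sketch, not the pyarrow API: the helper names `pad_to_64`, `validity_bitmap`, and `int32_value_buffer` are hypothetical, the values are assumed to be 32-bit little-endian integers, and null slots are written as 0 even though the format leaves them unspecified.

```python
import struct


def pad_to_64(buf: bytes) -> bytes:
    """Zero-pad a buffer so its length is a multiple of 64 bytes."""
    remainder = len(buf) % 64
    return buf if remainder == 0 else buf + b"\x00" * (64 - remainder)


def validity_bitmap(values) -> bytes:
    """Pack validity bits least-significant-bit first.

    Bit i is set to 1 when values[i] is not None.
    """
    bitmap = bytearray((len(values) + 7) // 8)
    for i, value in enumerate(values):
        if value is not None:
            bitmap[i // 8] |= 1 << (i % 8)
    return pad_to_64(bytes(bitmap))


def int32_value_buffer(values) -> bytes:
    """Store every slot as a little-endian int32.

    Null slots are filled with 0 here (an assumption of this sketch);
    readers must consult the validity bitmap instead of the slot value.
    """
    raw = b"".join(struct.pack("<i", 0 if v is None else v) for v in values)
    return pad_to_64(raw)


values = [1, None, 2, 4, 8]
bitmap = validity_bitmap(values)
data = int32_value_buffer(values)
null_count = sum(v is None for v in values)

print(len(values), null_count)          # 5 1
print(format(bitmap[0], "08b"))         # 00011101
print(struct.unpack("<5i", data[:20]))  # (1, 0, 2, 4, 8)
print(len(bitmap), len(data))           # 64 64
```

Reading the bitmap byte right to left gives the slots in order: slot 0 is valid, slot 1 is null, and slots 2 to 4 are valid, which is why the byte prints as `00011101`.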

![Arrow Basics: string array](https://image.slidesharecdn.com/pydatasofia-apachearrow-240712101735-d007f75b/85/PyData-Sofia-May-2024-Intro-to-Apache-Arrow-15-320.jpg)

Python array: ['joe', null, null, 'mark']

Length: 4, Null count: 2

Validity bitmap buffer:

| Byte 0 (validity bitmap) | Bytes 1-63  |
|--------------------------|-------------|
| 00001001                 | 0 (padding) |

Offsets buffer:

| Bytes 0-19    | Bytes 20-63           |
|---------------|-----------------------|
| 0, 3, 3, 3, 7 | unspecified (padding) |

Value buffer:

| Bytes 0-6 | Bytes 7-63            |
|-----------|-----------------------|
| joemark   | unspecified (padding) |
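
The string array layout above can be sketched the same way. This is a pure-Python illustration rather than the pyarrow API: `pad_to_64`, `build_string_array`, and `read_slot` are hypothetical helpers, offsets are assumed to be 32-bit little-endian integers, and strings are assumed to be UTF-8 encoded.

```python
import struct


def pad_to_64(buf: bytes) -> bytes:
    """Zero-pad a buffer so its length is a multiple of 64 bytes."""
    remainder = len(buf) % 64
    return buf if remainder == 0 else buf + b"\x00" * (64 - remainder)


def build_string_array(values):
    """Return (validity bitmap, offsets buffer, value buffer) for a list of str/None."""
    bitmap = bytearray((len(values) + 7) // 8)
    offsets = [0]
    data = bytearray()
    for i, value in enumerate(values):
        if value is not None:
            bitmap[i // 8] |= 1 << (i % 8)  # mark slot i as valid (LSB first)
            data += value.encode("utf-8")
        # A null slot adds no bytes, so its end offset repeats the previous one.
        offsets.append(len(data))
    offsets_buf = struct.pack(f"<{len(offsets)}i", *offsets)
    return pad_to_64(bytes(bitmap)), pad_to_64(offsets_buf), pad_to_64(bytes(data))


def read_slot(i, bitmap, offsets_buf, data):
    """Decode slot i, returning None when its validity bit is 0."""
    if not (bitmap[i // 8] >> (i % 8)) & 1:
        return None
    start, end = struct.unpack_from("<2i", offsets_buf, 4 * i)
    return data[start:end].decode("utf-8")


values = ["joe", None, None, "mark"]
bitmap, offsets_buf, data = build_string_array(values)

print(format(bitmap[0], "08b"))                # 00001001
print(struct.unpack("<5i", offsets_buf[:20]))  # (0, 3, 3, 3, 7)
print(data[:7])                                # b'joemark'
print([read_slot(i, bitmap, offsets_buf, data) for i in range(len(values))])
# ['joe', None, None, 'mark']
```

An array of length 4 needs 5 offsets because slot `i` spans bytes `offsets[i]` to `offsets[i + 1]` of the value buffer, so the two null slots appear as the repeated offset 3.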